BREAKING NEWS

LATEST POSTS

-

VillageRoadShow production studio files for bankruptcy

Village Roadshow (prod company/financier: Wonka, the Matrix series, and Ocean’s 11) has filed for bankruptcy.

It’s a rough indicator of where we are in 2025 when one of the last independent production companies working with the studios goes under.

Here’s their balance sheet:

$400 M in library value of 100+ films (89 of which they co-own with Warner Bros.)

$500 M – $1bn total debt

$1.4 M in debt to WGA, whose members were told to stop working with Roadshow in December

$794 K owed to Bryan Cranston’s prod company

$250 K owed to Sony Pictures TV

$300 K/month overhead

The crowning expense that brought down this 36-year-old production company is the $18 M in (unpaid) legal fees from a lengthy and currently unresolved arbitration with their long-time partner Warner Bros, who they’ve had a co-financing arrangement since the late 90s.

Roadshow sued when WBD released their Matrix Resurrections (2021) film in theaters and on Max simultaneously, causing Roadshow to withhold their portion of the $190 M production costs.

Due to mounting financial pressures, Village Roadshow’s CEO, Steve Mosko, a veteran film and TV exec, left the company in January.

Now, this all falls on the shoulders of Jim Moore, CEO of Vine, an equity firm that owns Village Roadshow, as well as Luc Besson’s prod company EuropaCorp.

-

Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”

-

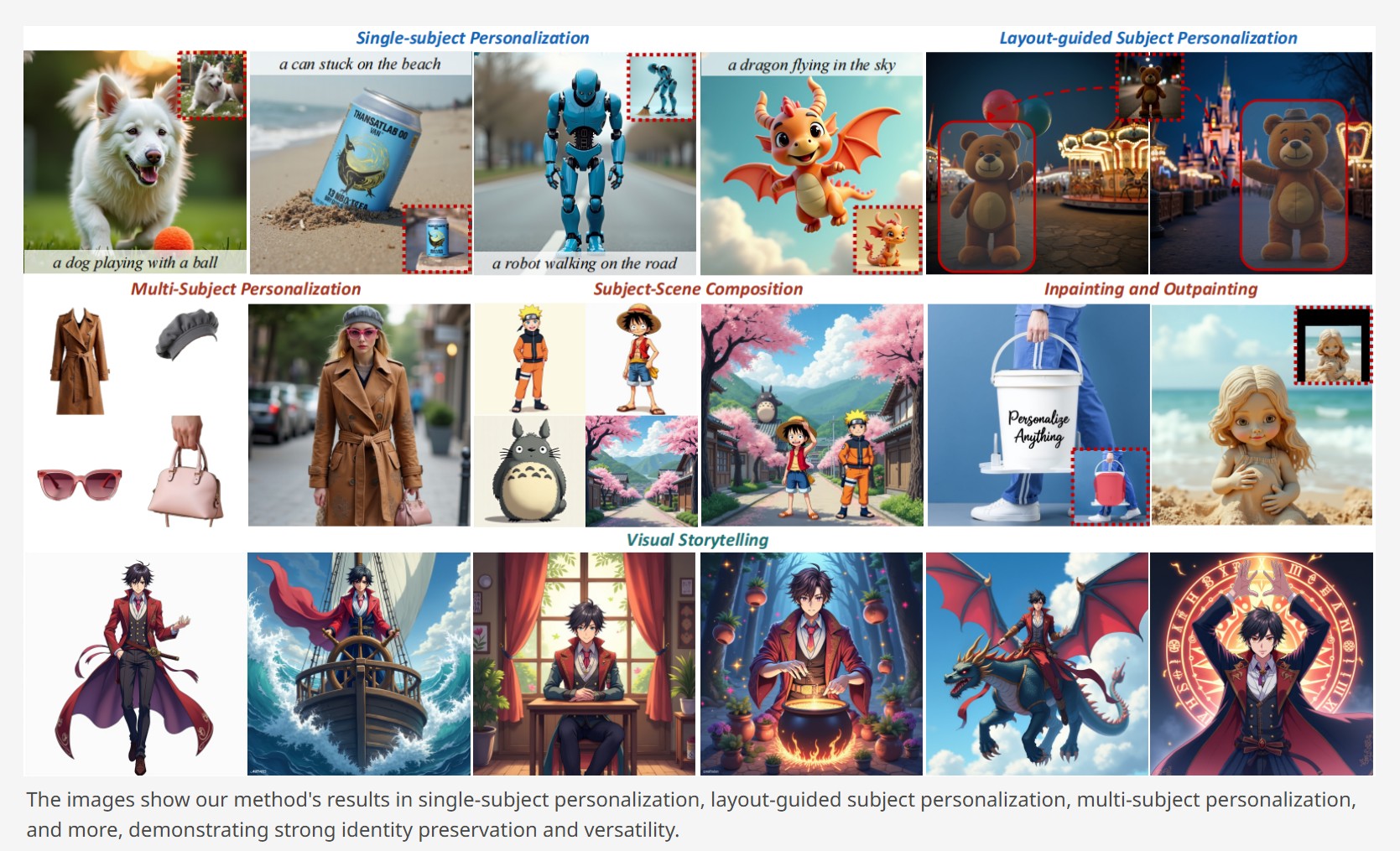

Personalize Anything – For Free with Diffusion Transformer

https://fenghora.github.io/Personalize-Anything-Page

Customize any subject with advanced DiT without additional fine-tuning.

-

Google Gemini 2.0 Flash new AI model extremely proficient at removing watermarks from images

-

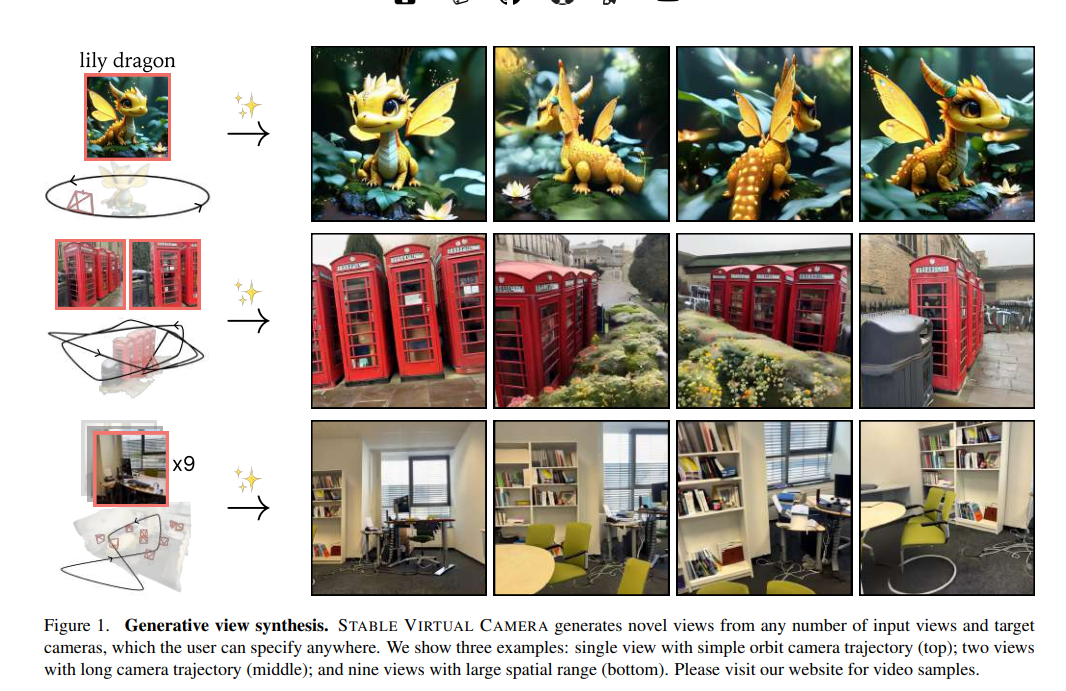

Stability.ai – Introducing Stable Virtual Camera: Multi-View Video Generation with 3D Camera Control

Capabilities

Stable Virtual Camera offers advanced capabilities for generating 3D videos, including:

- Dynamic Camera Control: Supports user-defined camera trajectories as well as multiple dynamic camera paths, including: 360°, Lemniscate (∞ shaped path), Spiral, Dolly Zoom In, Dolly Zoom Out, Zoom In, Zoom Out, Move Forward, Move Backward, Pan Up, Pan Down, Pan Left, Pan Right, and Roll.

- Flexible Inputs: Generates 3D videos from just one input image or up to 32.

- Multiple Aspect Ratios: Capable of producing videos in square (1:1), portrait (9:16), landscape (16:9), and other custom aspect ratios without additional training.

- Long Video Generation: Ensures 3D consistency in videos up to 1,000 frames, enabling seamless

Model limitations

In its initial version, Stable Virtual Camera may produce lower-quality results in certain scenarios. Input images featuring humans, animals, or dynamic textures like water often lead to degraded outputs. Additionally, highly ambiguous scenes, complex camera paths that intersect objects or surfaces, and irregularly shaped objects can cause flickering artifacts, especially when target viewpoints differ significantly from the input images.

FEATURED POSTS

-

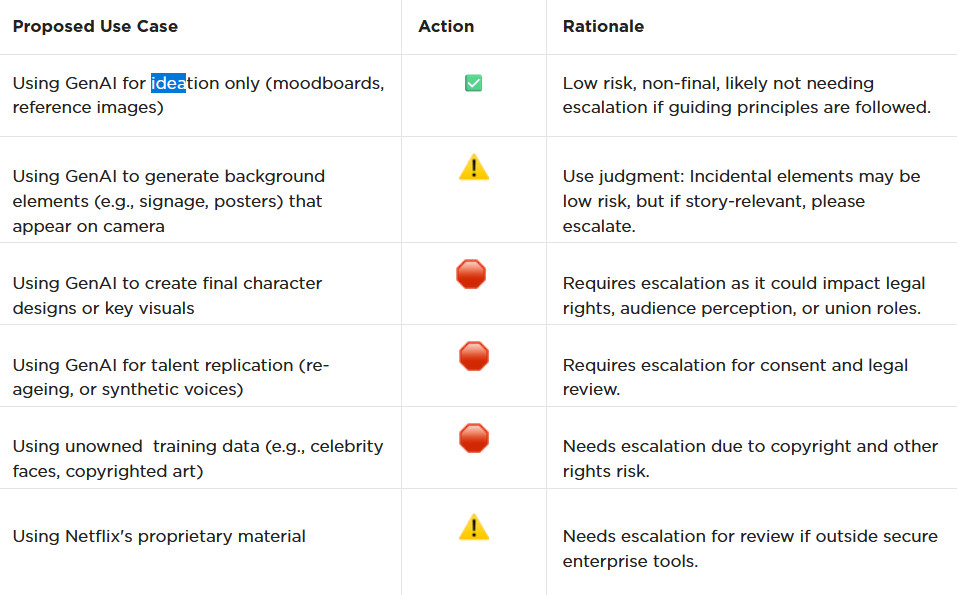

AI and the Law – Netflix : Using Generative AI in Content Production

https://www.cartoonbrew.com/business/netflix-generative-ai-use-guidelines-253300.html

- Temporary Use: AI-generated material can be used for ideation, visualization, and exploration—but is currently considered temporary and not part of final deliverables.

- Ownership & Rights: All outputs must be carefully reviewed to ensure rights, copyright, and usage are properly cleared before integrating into production.

- Transparency: Productions are expected to document and disclose how generative AI is used.

- Human Oversight: AI tools are meant to support creative teams, not replace them—final decision-making rests with human creators.

- Security & Compliance: Any use of AI tools must align with Netflix’s security protocols and protect confidential production material.

-

What is physically correct lighting all about?

http://gamedev.stackexchange.com/questions/60638/what-is-physically-correct-lighting-all-about

2012-08 Nathan Reed wrote:

Physically-based shading means leaving behind phenomenological models, like the Phong shading model, which are simply built to “look good” subjectively without being based on physics in any real way, and moving to lighting and shading models that are derived from the laws of physics and/or from actual measurements of the real world, and rigorously obey physical constraints such as energy conservation.

For example, in many older rendering systems, shading models included separate controls for specular highlights from point lights and reflection of the environment via a cubemap. You could create a shader with the specular and the reflection set to wildly different values, even though those are both instances of the same physical process. In addition, you could set the specular to any arbitrary brightness, even if it would cause the surface to reflect more energy than it actually received.

In a physically-based system, both the point light specular and the environment reflection would be controlled by the same parameter, and the system would be set up to automatically adjust the brightness of both the specular and diffuse components to maintain overall energy conservation. Moreover you would want to set the specular brightness to a realistic value for the material you’re trying to simulate, based on measurements.

Physically-based lighting or shading includes physically-based BRDFs, which are usually based on microfacet theory, and physically correct light transport, which is based on the rendering equation (although heavily approximated in the case of real-time games).

It also includes the necessary changes in the art process to make use of these features. Switching to a physically-based system can cause some upsets for artists. First of all it requires full HDR lighting with a realistic level of brightness for light sources, the sky, etc. and this can take some getting used to for the lighting artists. It also requires texture/material artists to do some things differently (particularly for specular), and they can be frustrated by the apparent loss of control (e.g. locking together the specular highlight and environment reflection as mentioned above; artists will complain about this). They will need some time and guidance to adapt to the physically-based system.

On the plus side, once artists have adapted and gained trust in the physically-based system, they usually end up liking it better, because there are fewer parameters overall (less work for them to tweak). Also, materials created in one lighting environment generally look fine in other lighting environments too. This is unlike more ad-hoc models, where a set of material parameters might look good during daytime, but it comes out ridiculously glowy at night, or something like that.

Here are some resources to look at for physically-based lighting in games:

SIGGRAPH 2013 Physically Based Shading Course, particularly the background talk by Naty Hoffman at the beginning. You can also check out the previous incarnations of this course for more resources.

Sébastien Lagarde, Adopting a physically-based shading model and Feeding a physically-based shading model

And of course, I would be remiss if I didn’t mention Physically-Based Rendering by Pharr and Humphreys, an amazing reference on this whole subject and well worth your time, although it focuses on offline rather than real-time rendering.