LATEST POSTS

-

Andrew Perfors – The work of creation in the age of AI

Meaning, authenticity, and the creative process – and why they matter

https://perfors.net/blog/creation-ai/

The article examines how AI changes the landscape of creation, focusing on the alienation of the creator from their creation and the difficulty of maintaining meaning. The author presents two significant problems:

- Loss of Connection with Creation:

- AI-assisted creation diminishes the creator’s role in the decision-making process.

- The resulting creation lacks the personal, intentional choices that contribute to meaningful expression.

- AI is considered a tool that, when misused, turns creation into automated button-pushing, stripping away the purpose of human expression.

- Difficulty in Assessing Authenticity:

- It becomes challenging to distinguish between human and AI contributions within a creation.

- AI-generated content lacks transparency regarding the intent behind specific choices or expressions.

- The author asserts that AI-generated content often falls short in providing the depth and authenticity required for meaningful communication.


-

Fouad Khan – Confirmed! We Live in a Simulation

https://www.scientificamerican.com/article/confirmed-we-live-in-a-simulation/

Ever since the philosopher Nick Bostrom proposed in the Philosophical Quarterly that the universe and everything in it might be a simulation, there has been intense public speculation and debate about the nature of reality.

Yet there have been skeptics. Physicist Frank Wilczek has argued that there’s too much wasted complexity in our universe for it to be simulated. Building complexity requires energy and time.

To understand if we live in a simulation we need to start by looking at the fact that we already have computers running all kinds of simulations for lower level “intelligences” or algorithms.

All computing hardware leaves an artifact of its existence within the world of the simulation it is running. This artifact is the processor speed.

No matter how complete the simulation is, the processor speed would intervene in the operations of the simulation. If we live in a simulation, then our universe should also have such an artifact. We can now begin to articulate some properties of this artifact that would help us in our search for such an artifact in our universe.

The artifact presents itself in the simulated world as an upper limit. Now that we have some defining features of the artifact, of course it becomes clear what the artifact manifests itself as within our universe. The artifact is manifested as the speed of light.

This maximum speed is the speed of light. We don’t know what hardware is running the simulation of our universe or what properties it has, but one thing we can say now is that the memory container size for the variable space would be about 300,000 kilometers if the processor performed one operation per second. We can see now that the speed of light meets all the criteria of a hardware artifact identified in our observation of our own computer builds. It remains the same irrespective of observer (simulated) speed, it is observed as a maximum limit, it is unexplainable by the physics of the universe, and it is absolute. The speed of light is a hardware artifact showing we live in a simulated universe.

Consciousness is an integrated (combining five senses) subjective interface between the self and the rest of the universe. The only reasonable explanation for its existence is that it is there to be an “experience”.

So here we are generating this product called consciousness that we apparently don’t have a use for, that is an experience and hence must serve as an experience. The only logical next step is to surmise that this product serves someone else.

-

AI and the Law – Why The New York Times might win its copyright lawsuit against OpenAI

The article quotes Daniel Jeffries:

“Trying to get everyone to license training data is not going to work because that’s not what copyright is about,” Jeffries wrote. “Copyright law is about preventing people from producing exact copies or near exact copies of content and posting it for commercial gain. Period. Anyone who tells you otherwise is lying or simply does not understand how copyright works.”

The AI community is full of people who understand how models work and what they’re capable of, and who are working to improve their systems so that the outputs aren’t full of regurgitated inputs. Google won the Google Books case because it could explain both of these persuasively to judges. But the history of technology law is littered with the remains of companies that were less successful in getting judges to see things their way.

-

M.T. Fletcher – Why Agencies Are Obsessed with Pitching on Process Instead of Talent

“Every presentation featured a proprietary process designed by the agency. A custom approach to identify targets, develop campaigns and optimize impact—with every step of the process powered by AI, naturally.”

“The key to these one-of-a-kind models is apparently finding the perfect combination of circles, squares, diamonds and triangles…Arrows abounded and ellipses are replacing circles as the unifying shape of choice among the more fashionable strategists.”

“The only problem is that it’s all bullshit.”

“A blind man could see the creative ideas were not developed via the agency’s so-called process, and anyone who’s ever worked at an agency knows that creativity comes from collaboration, not an assembly line.”

“And since most clients can’t differentiate between creative ideas without validation from testing, data has become the collective crutch for an industry governed by fear.”

“If a proprietary process really produced foolproof creativity, then every formulaic movie would be a blockbuster, every potboiler novel published by risk-averse editors would become a bestseller and every clichéd pickup line would work in any bar in the world.”

FEATURED POSTS

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

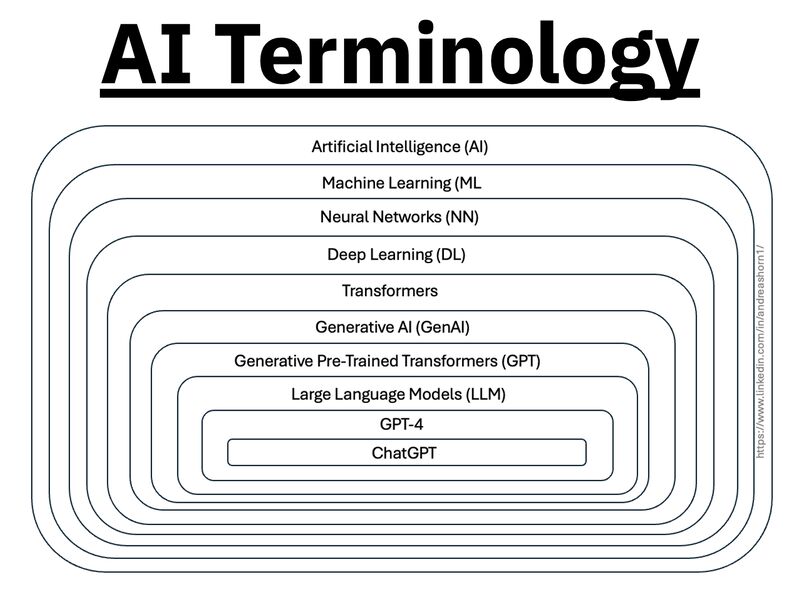

Using Meta’s Llama 3 for your business

Meta is the only Big Tech company committed to developing AI, particularly large language models, with an open-source approach.

There are 3 ways you can use Llama 3 for your business:

1- Llama 3 as a Service

Use Llama 3 from any cloud provider as a service. You pay per use, but the price is typically much lower than for proprietary models like GPT-4 or Claude.

→ Use Llama 3 on Azure AI catalog:

https://techcommunity.microsoft.com/t5/ai-machine-learning-blog/introducing-meta-llama-3-models-on-azure-ai-model-catalog/ba-p/4117144

2- Self-Hosting

If you have GPU infrastructure (on-premises or cloud), you can run Llama 3 internally at your desired scale.

→ Deploy Llama 3 on Amazon SageMaker:

https://www.philschmid.de/sagemaker-llama3

3- Desktop (Offline)

Tools like Ollama allow you to run the smaller model (Llama 3 8B) offline on consumer hardware such as current MacBooks.

→ Tutorial for Mac:

https://ollama.com/blog/llama3
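As an illustration of the desktop option, here is a minimal Python sketch that sends a prompt to a locally running Ollama server through its REST API. It assumes Ollama is installed and serving on its default port (11434) and that the model was pulled under the name `llama3`; only the standard library is used, and the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumed default address of a local Ollama server (started by the Ollama app
# or `ollama serve`), with the model pulled beforehand via `ollama pull llama3`.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with a single JSON object whose
    # "response" field holds the complete answer.
    return body["response"]
```

Once the server is running, `generate("Explain shutter angle in one sentence.")` returns the model's reply as a plain string. Disabling streaming trades incremental output for a simpler one-request, one-response call.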

-

Photography basics: Shutter angle and shutter speed and motion blur

http://www.shutterangle.com/2012/cinematic-look-frame-rate-shutter-speed/

https://www.cinema5d.com/global-vs-rolling-shutter/

https://www.wikihow.com/Choose-a-Camera-Shutter-Speed

https://www.provideocoalition.com/shutter-speed-vs-shutter-angle/

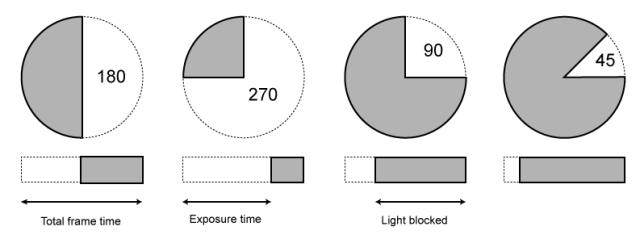

The shutter is the device that controls how long light passes through the lens onto the film or sensor; in other words, it sets the exposure time.

Shutter speed is how long the shutter stays open, which also determines motion blur: the longer it stays open, the blurrier moving subjects appear in the captured image.

The shutter speed number (for example 1/48) is that exposure time in seconds, and therefore sets how much light is actually allowed through.

As a reference, shooting at 24 fps with a 180° shutter angle, i.e. a shutter speed of 1/48 s (an exposure time of about 0.0208 s), produces motion blur similar to what we perceive with the naked eye.

Shutter is described in angles for historical reasons: in film cameras, exposure was controlled by a rotating pie-shaped (often mirrored) disc between the lens and the film.

A 180° shutter blocks light for half of each rotation and lets it through for the other half. A 270° shutter has only a quarter-circle blocking section, which gives a longer exposure (three quarters open, one quarter closed). A 90° shutter has a three-quarter blocking section, which gives a shorter exposure (one quarter open, three quarters closed).

The shutter angle can be converted back and forth to shutter speed with the following formulas:

https://www.provideocoalition.com/shutter-speed-vs-shutter-angle/

Writing the shutter speed as 1/N seconds, N is the value used in the formulas:

$$\text{shutter angle} = \frac{360 \cdot \text{fps}}{N} \qquad\qquad N = \frac{360 \cdot \text{fps}}{\text{shutter angle}}$$

For example, here is a chart from shutter angle to shutter speed at 24 fps:

270° = 1/32 s

180° = 1/48 s

172.8° = 1/50 s

144° = 1/60 s

90° = 1/96 s

72° = 1/120 s

45° = 1/192 s

22.5° = 1/384 s

11° ≈ 1/785 s

8.6° ≈ 1/1000 s

This is the relation between the way a video camera expresses shutter (fractions of a second) and the way a film camera expresses it (degrees).
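The conversion is simple enough to script. This is a minimal Python sketch of the formulas above, where `denominator` is N in a shutter speed of 1/N s; the function names are hypothetical. The loop prints N for the chart's angles at 24 fps, which can be checked against the chart (8.6° is itself a rounding of 8.64°, the angle that gives exactly 1/1000 s).

```python
def shutter_denominator(angle_deg: float, fps: float) -> float:
    """N in a shutter speed of 1/N s, from N = (360 * fps) / shutter angle."""
    return 360.0 * fps / angle_deg


def shutter_angle(denominator: float, fps: float) -> float:
    """Shutter angle in degrees for a shutter speed of 1/denominator s."""
    return 360.0 * fps / denominator


def exposure_time(angle_deg: float, fps: float) -> float:
    """Exposure time in seconds: the open fraction of the disc times the frame interval."""
    return (angle_deg / 360.0) / fps


# Recompute the 24 fps chart from the formula, rounded to whole denominators.
for angle in (270, 180, 172.8, 144, 90, 72, 45, 22.5, 11, 8.6):
    print(f"{angle:>6} deg = 1/{shutter_denominator(angle, 24):.0f} s")
```

Because the exposure time is just the open fraction of one frame interval, doubling the frame rate at the same shutter angle halves the exposure time.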

Smaller shutter angles show strobing artifacts. Even with a typical 180° shutter, the film or sensor is covered by the shutter for half of every frame interval, so the camera never captures the scene continuously; the smaller the angle, the larger the gap.

This means that fast-moving objects, especially objects moving across the frame, will exhibit jerky movement. This is called strobing. The defect is also very noticeable during pans. Smaller shutter angles (shorter exposures) exhibit more pronounced strobing.

Larger shutter angles show more motion blur, because the longer exposure records more movement within each frame.

Note that in 3D you first sum the magnitudes of the shutter open and shutter close values to get the total shutter interval, then multiply it by 360 to compare it with a shutter angle, i.e.:

shutter open -0.0625, shutter close 0.0625

Total shutter = 0.0625 + 0.0625 = 0.125

Shutter angle = 360 * 0.125 = 45

shutter open -0.125, shutter close 0.125

Total shutter = 0.125 + 0.125 = 0.25

Shutter angle = 360 * 0.25 = 90

shutter open -0.25, shutter close 0.25

Total shutter = 0.25 + 0.25 = 0.5

Shutter angle = 360 * 0.5 = 180

shutter open -0.375, shutter close 0.375

Total shutter = 0.375 + 0.375 = 0.75

Shutter angle = 360 * 0.75 = 270

Faster frame rates can resolve both these issues.
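The 3D examples follow one rule: the total shutter interval (close minus open) times 360 gives the angle. Below is a minimal Python sketch of that rule and its inverse. It assumes, as the examples imply, that the open and close values are offsets in frames relative to the current frame and that the interval is centered on the frame; the function names are hypothetical.

```python
def angle_from_interval(shutter_open: float, shutter_close: float) -> float:
    """Shutter angle in degrees from open/close offsets measured in frames (assumed)."""
    # Total shutter = close - open, which equals |open| + close when open is negative.
    return 360.0 * (shutter_close - shutter_open)


def centered_interval(angle_deg: float) -> tuple[float, float]:
    """Open/close offsets centered on the frame for a given shutter angle."""
    half = angle_deg / 720.0  # half of the total interval (angle / 360)
    return (-half, half)


# Rebuild the four examples: angle -> centered offsets -> angle again.
for angle in (45, 90, 180, 270):
    shutter_open, shutter_close = centered_interval(angle)
    print(f"open {shutter_open}, close {shutter_close} -> "
          f"{angle_from_interval(shutter_open, shutter_close)} deg")
```

Centering the interval on the frame keeps the blur trail symmetric around the object's position at that frame; an interval starting at 0 would produce the same angle with the blur trailing only forward in time.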