LATEST POSTS

-

The Hollywood VFX Jobs Most At-Risk From AI

https://www.hollywoodreporter.com/business/business-news/ai-hollywood-workers-job-cuts-1235811009/

The study estimates that nearly 204,000 positions will be adversely affected over the next three years.

In November, DreamWorks co-founder Jeffrey Katzenberg said the tech will replace 90 percent of jobs on animated films.

Roughly a third of respondents surveyed predicted that AI will displace sound editors, 3D modelers, re-recording mixers and audio and video technicians within three years, while a quarter said that sound designers, compositors and graphic designers are likely to be affected.

AI tools may increasingly be used to help create images that can streamline the character design and storyboarding processes, lowering demand for concept artists, illustrators and animators.

According to the study, the job tasks most likely to be impacted by AI in the film and TV industry are 3-D modeling, character and environment design, voice generation and cloning and compositing, followed by sound design, tools programming, script writing, animation and rigging, concept art/visual development and light/texture generation.

-

THOMAS MANSENCAL – The Apparent Simplicity of RGB Rendering

https://thomasmansencal.substack.com/p/the-apparent-simplicity-of-rgb-rendering

The primary goal of physically-based rendering (PBR) is to create a simulation that accurately reproduces the imaging process of electro-magnetic spectrum radiation incident to an observer. This simulation should be indistinguishable from reality for a similar observer.

Because a camera is not sensitive to incident light in the same way as a human observer, the images it captures are transformed to be colorimetric. A project might require infrared imaging simulation, a portion of the electro-magnetic spectrum that is invisible to us. Radically different observers might image the same scene, but the act of observing does not change the intrinsic properties of the objects being imaged. Consequently, the physical modelling of the virtual scene should be independent of the observer.

-

THE THEORY THAT CONSCIOUSNESS IS A QUANTUM SYSTEM

https://mindmatters.ai/2024/01/the-theory-that-consciousness-is-a-quantum-system-gains-support/

In short, the theory says that consciousness arises when gravitational instabilities in the fundamental structure of space-time collapse quantum wave functions in tiny structures called microtubules that are found inside neurons – and, in fact, in all complex cells.

In quantum theory, a particle does not really exist as a tiny bit of matter located somewhere but rather as a cloud of probabilities. If observed, it collapses into the state in which it was observed. Penrose has postulated that “each time a quantum wave function collapses in this way in the brain, it gives rise to a moment of conscious experience.”

Hameroff has been studying proteins known as tubulins inside the microtubules of neurons. He postulates that “microtubules inside neurons could be exploiting quantum effects, somehow translating gravitationally induced wave function collapse into consciousness, as Penrose had suggested.” Thus was born a collaboration, though their seminal 1996 paper failed to gain much traction.

-

Rafael Perez – RIFE, an interpolation retimer for Nuke

This project implements RIFE – Real-Time Intermediate Flow Estimation for Video Frame Interpolation for The Foundry’s Nuke.

RIFE is a powerful frame interpolation neural network, capable of high-quality retimes and optical flow estimation.

This implementation allows RIFE to be used natively inside Nuke without any external dependencies or complex installations. It wraps the network in an easy-to-use Gizmo with controls similar to those in OFlow or Kronos.

https://github.com/rafaelperez/RIFE-for-Nuke

FEATURED POSTS

-

CASSINI MISSION

Somewhere, something incredible is waiting to be known.

The footage in this little film was captured by the hardworking men and women at NASA with the Cassini Imaging Science System. If you’re interested in learning more about Cassini and the on-going Cassini Solstice Mission, check it out at NASA’s website:

saturn.jpl.nasa.gov/science/index.cfm

-

What is a Gamut or Color Space and why do I need to know about CIE

http://www.xdcam-user.com/2014/05/what-is-a-gamut-or-color-space-and-why-do-i-need-to-know-about-it/

In video terms, gamut normally refers to the full range of colours and brightness that can be either captured or displayed.

Generally speaking, all color gamut recommendations try to define a reasonable level of color representation based on available technology and hardware. REC-601 represents the old TVs. REC-709 is currently the most widely distributed solution. P3 is mainly available in movie theaters and is now being adopted in some of the best new 4K HDR TVs. Rec2020 (a wider space than P3 that improves on visible color representation) and ACES (the full coverage of visible color) are other common standards which see major hardware development these days.

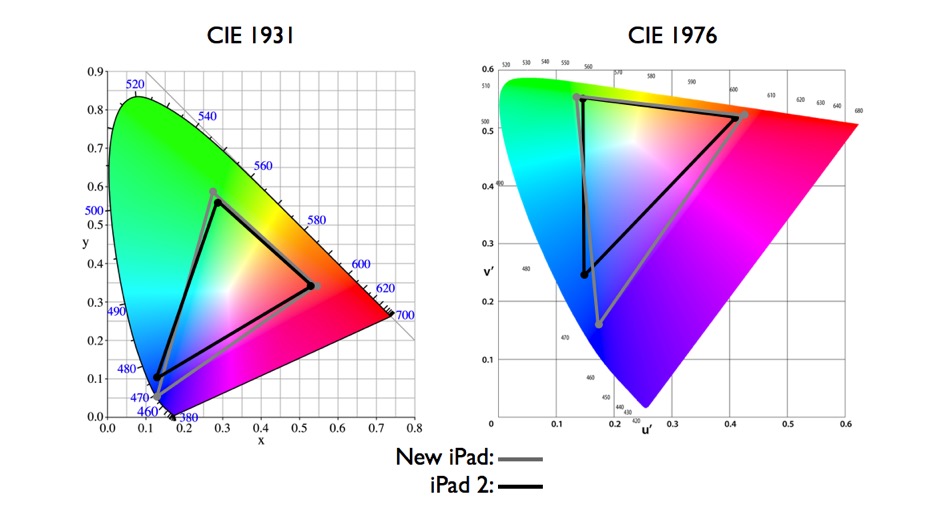

To compare and visualize different solutions (across video and printing), most developers use the CIE color model chart as a reference.

The CIE color model is a color space model created in 1931 by the International Commission on Illumination, known by its French name as the Commission Internationale de l’Éclairage (CIE). It is also known as the CIE XYZ color space or the CIE 1931 XYZ color space.

This chart represents the first defined quantitative link between distributions of wavelengths in the electromagnetic visible spectrum and physiologically perceived colors in human color vision. Or basically, the range of color a typical human eye can perceive through visible light.

Note that while human perception is quite wide, and generally speaking biased towards greens (we are apes after all), the range of colors available in nature, generated through light reflection, tends to be a much smaller section. This is defined by Pointer’s gamut.

In short, color gamut is a representation of color coverage, used to describe data stored in images against available hardware and viewer technologies.
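As a rough illustration of "coverage", a gamut defined by three primaries can be treated as a triangle on the CIE 1931 xy chart, and a color checked for whether it falls inside. The sketch below is a simplification under stated assumptions: it looks at chromaticity only and ignores luminance, the helper names `cross` and `in_gamut` are made up for this example, and the primaries are the published REC-709 and DCI-P3 xy coordinates.

```python
# Minimal sketch: is an xy chromaticity inside a gamut triangle?
# Chromaticity only; a real gamut is a 3D volume that also depends on luminance.

REC709 = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))  # R, G, B primaries (x, y)
D65_WHITE = (0.3127, 0.3290)                          # D65 white point (x, y)
P3_GREEN = (0.265, 0.690)                             # DCI-P3 green primary (x, y)


def cross(o, a, b):
    """2D cross product of vectors o->a and o->b (sign gives the side of the edge)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut(point, primaries):
    """Return True if `point` lies inside or on the triangle of `primaries`."""
    r, g, b = primaries
    signs = (cross(r, g, point), cross(g, b, point), cross(b, r, point))
    has_negative = any(s < 0 for s in signs)
    has_positive = any(s > 0 for s in signs)
    # Inside means the point is on the same side of all three edges.
    return not (has_negative and has_positive)


white_inside = in_gamut(D65_WHITE, REC709)   # white point sits inside REC-709
p3_green_inside = in_gamut(P3_GREEN, REC709)  # P3 green is too saturated for REC-709
print(white_inside, p3_green_inside)
```

The P3 green primary landing outside the REC-709 triangle is the kind of difference the chart makes visible: a P3 display can show greens that a REC-709 display cannot.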

Camera color encoding from

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

CIE 1976

http://bernardsmith.eu/computatrum/scan_and_restore_archive_and_print/scanning/

https://store.yujiintl.com/blogs/high-cri-led/understanding-cie1931-and-cie-1976

The CIE 1931 standard has been replaced by a CIE 1976 standard. Below we can see the significance of this.

People have observed that the biggest issue with CIE 1931 is its lack of chromaticity uniformity: the three-dimensional color space in rectangular coordinates is not visually uniform.

The CIE 1976 color space (also called CIELUV) was created by the CIE in 1976. It was put forward in an attempt to provide more uniform color spacing than CIE 1931 for colors at approximately the same luminance.

The CIE 1976 standard colour space is more linear, and variations in perceived colour between different people have also been reduced. The disproportionately large green-turquoise area in CIE 1931, which cannot be generated with existing computer screens, has been reduced.

If we move from CIE 1931 to the CIE 1976 standard colour space we can see that the improvements made in the gamut for the “new” iPad screen (as compared to the “old” iPad 2) are more evident in the CIE 1976 colour space than in the CIE 1931 colour space, particularly in the blues from aqua to deep blue.
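For reference, the CIE 1976 chromaticity diagram uses u′v′ coordinates, which are a simple projective remapping of the CIE 1931 xy coordinates. The sketch below applies the standard conversion to the REC-709 primaries and the D65 white point; the function and variable names are illustrative only.

```python
# Minimal sketch: CIE 1931 (x, y) chromaticity -> CIE 1976 (u', v') chromaticity.

def xy_to_upvp(x, y):
    """Standard conversion: u' = 4x / (-2x + 12y + 3), v' = 9y / (-2x + 12y + 3)."""
    denom = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denom, 9.0 * y / denom


REC709_XY = {"R": (0.64, 0.33), "G": (0.30, 0.60), "B": (0.15, 0.06)}
D65_XY = (0.3127, 0.3290)

rec709_upvp = {name: xy_to_upvp(*xy) for name, xy in REC709_XY.items()}
d65_upvp = xy_to_upvp(*D65_XY)

for name, (u, v) in rec709_upvp.items():
    print(f"{name}: u'={u:.4f}  v'={v:.4f}")
print(f"D65: u'={d65_upvp[0]:.4f}  v'={d65_upvp[1]:.4f}")
```

Plotting the same primaries in both diagrams shows the effect described above: the green region shrinks and the blue region stretches, so improvements in blue primaries take up more visible area in the 1976 chart.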

https://dot-color.com/2012/08/14/color-space-confusion/

Despite its age, CIE 1931, named for the year of its adoption, remains a well-worn and familiar shorthand throughout the display industry. CIE 1931 is the primary language of customers. When a customer says that their current display “can do 72% of NTSC,” they implicitly mean 72% of NTSC 1953 color gamut as mapped against CIE 1931.
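A "percent of NTSC" figure is commonly read as a ratio of triangle areas on the CIE 1931 xy chart. The sketch below assumes that reading (an area ratio, not a true overlap, which some vendors may use instead) and uses REC-709 primaries as a stand-in for a typical display. The function name `triangle_area` is illustrative.

```python
# Minimal sketch: gamut size as a percentage of NTSC 1953, by triangle area in CIE 1931 xy.
# Assumption: "percent of NTSC" means area ratio, not the area of the overlap.

NTSC_1953 = ((0.67, 0.33), (0.21, 0.71), (0.14, 0.08))  # R, G, B primaries (x, y)
REC709 = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))     # R, G, B primaries (x, y)


def triangle_area(primaries):
    """Area of the triangle spanned by three (x, y) primaries (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


ratio = triangle_area(REC709) / triangle_area(NTSC_1953)
print(f"REC-709 is about {ratio:.0%} of NTSC 1953 by xy area")
```

With these primaries the ratio comes out at roughly 71 percent, in line with the "72% of NTSC" shorthand quoted for standard-gamut displays. The same calculation in u′v′ coordinates would give a different percentage, which is why the chart being used matters when comparing specifications.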