
LATEST POSTS

-

Human World Population Through Time

200,000 years to reach 1 billion.

200 years to reach 7 billion.

The global population has nearly tripled since 1950, from 2.6 billion people to 7.6 billion.

https://edition.cnn.com/2018/11/08/health/global-burden-disease-fertility-study/index.html?no-st=1542440193

-

Equirectangular 360 videos/photos to Unity3D to VR

SUMMARY

- A lot of 360 technology is natively supported in Unity3D. Examples here: https://assetstore.unity.com/packages/essentials/tutorial-projects/vr-samples-51519

- Use the Google Cardboard VR API to export for Android or iOS. https://developers.google.com/vr/?hl=en https://developers.google.com/vr/develop/unity/get-started-ios

- Images and videos are for the most part equirectangular 2:1 360 captures, mapped onto a skybox (stills) or an inverted sphere (videos). Panoramas are also supported.

- Stereo is achieved in different formats, but mostly with a 2:1 over-under layout.

- Videos can be streamed from a server.

- You can export 360 mono/stereo stills/videos from Unity3D with VR Panorama.

- 4K is probably the best all-round resolution for mobile devices.

- Interaction can be driven through the Google API gaze scripts/plugins or through Google Cloud Speech Recognition (paid service: https://assetstore.unity.com/packages/add-ons/machinelearning/google-cloud-speech-recognition-vr-ar-desktop-desktop-72625).
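The equirectangular 2:1 mapping mentioned above can be sketched outside Unity. This is only a minimal Python illustration, not Unity's own shader code: the function names are hypothetical, and the axis convention (y up, +z forward, image centre straight ahead) is an assumption that may differ from the orientation a given skybox shader uses.

```python
import math

def direction_to_equirect_uv(x, y, z):
    """Map a 3D view direction to (u, v) in [0, 1] on a 2:1 equirectangular image.

    Assumed convention (not from the original post): y is up, +z is forward,
    u = 0.5 is straight ahead, v = 0 is straight down and v = 1 is straight up.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    longitude = math.atan2(x, z)                    # -pi..pi, 0 = forward
    latitude = math.asin(max(-1.0, min(1.0, y)))    # -pi/2..pi/2
    u = longitude / (2.0 * math.pi) + 0.5
    v = latitude / math.pi + 0.5
    return u, v

def uv_to_pixel(u, v, width, height):
    """Convert (u, v) to a pixel (column, row), with row 0 at the top of the image."""
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return col, row
```

Because longitude spans 360 degrees and latitude only 180, the image is twice as wide as it is tall, which is where the 2:1 ratio comes from.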

DETAILS

- Google VR game to iOS in 15 minutes

- Step by Step Google VR and responding to events with Unity3D 2017.x

https://boostlog.io/@mohammedalsayedomar/create-cardboard-apps-in-unity-5ac8f81e47018500491f38c8

https://www.sitepoint.com/building-a-google-cardboard-vr-app-in-unity/

- Gaze interaction examples

https://assetstore.unity.com/packages/tools/gui/gaze-ui-for-canvas-70881

https://s3.amazonaws.com/xrcommunity/tutorials/vrgazecontrol/VRGazeControl.unitypackage

https://assetstore.unity.com/packages/tools/gui/cardboard-vr-touchless-menu-trigger-58897

- Basic details about equirectangular 2:1 360 images and videos.

- Skybox cubemap texturing, shading and camera component for stills.

- Video player component on a sphere with a flipped-normals shader.

- Note that you can also use a pre-modeled sphere with inverted normals.

- Note that for audio you will need an audio component on the sphere model.

- Set up full 360 stereoscopic video playback using an over-under layout split across two cameras.

- Note that you cannot generate a stereoscopic image from two 360 captures; it has to be done through a dedicated consumer rig.

http://bernieroehl.com/360stereoinunity/
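The over-under split described above comes down to giving each eye's camera its own half of the video frame. A minimal Python sketch of that UV arithmetic follows; the function names are hypothetical, and both the "left eye on top" default and the "v = 0 at the bottom" texture convention are assumptions, since layouts vary between capture tools.

```python
def over_under_eye_rect(eye, left_on_top=True):
    """Return (scale_u, scale_v, offset_u, offset_v) selecting one eye's half.

    Assumes v = 0 is the bottom of the frame, so the top half starts at v = 0.5.
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    uses_top_half = (eye == "left") == left_on_top
    return (1.0, 0.5, 0.0, 0.5 if uses_top_half else 0.0)

def remap_uv(u, v, rect):
    """Squeeze a full-sphere (u, v) into the half of the frame given by rect."""
    scale_u, scale_v, offset_u, offset_v = rect
    return (u * scale_u + offset_u, v * scale_v + offset_v)
```

In a Unity scene the same four numbers would typically end up as the texture tiling and offset on each eye's sphere material, with each sphere visible to only one of the two cameras.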

VR Actions for Playmaker

https://assetstore.unity.com/packages/tools/vr-actions-for-playmaker-52109

100 Best Unity3d VR Assets

http://meta-guide.com/embodiment/100-best-unity3d-vr-assets

…find more tutorials/reference under this blog page

-

Guy Ritchie – You Must Be The Master of Your Own Suit and Your Own Kingdom

The world is trying to tell you who you are.

You yourself are trying to tell you who you are.

At some point there has to be some reconciliation.

-

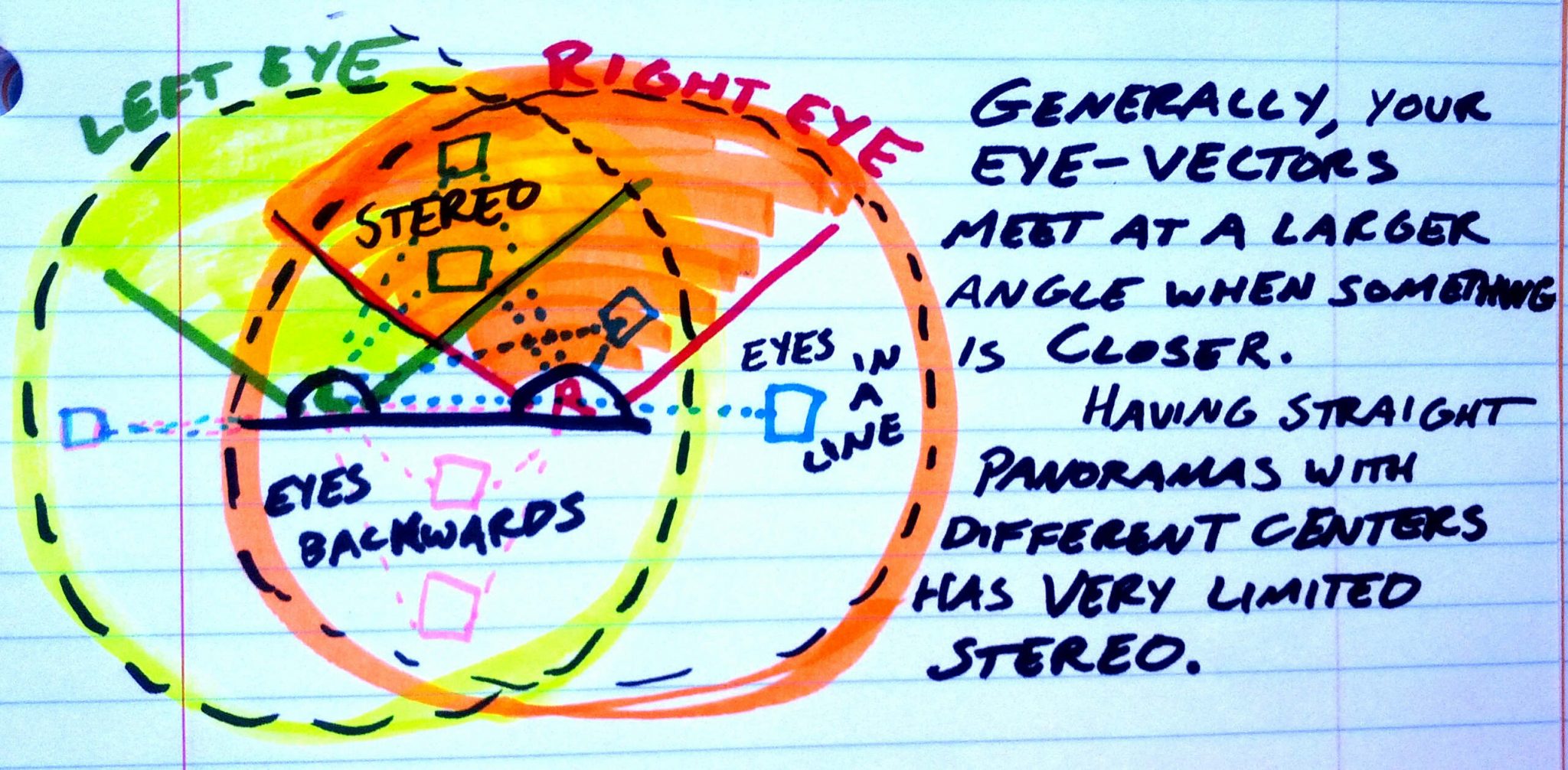

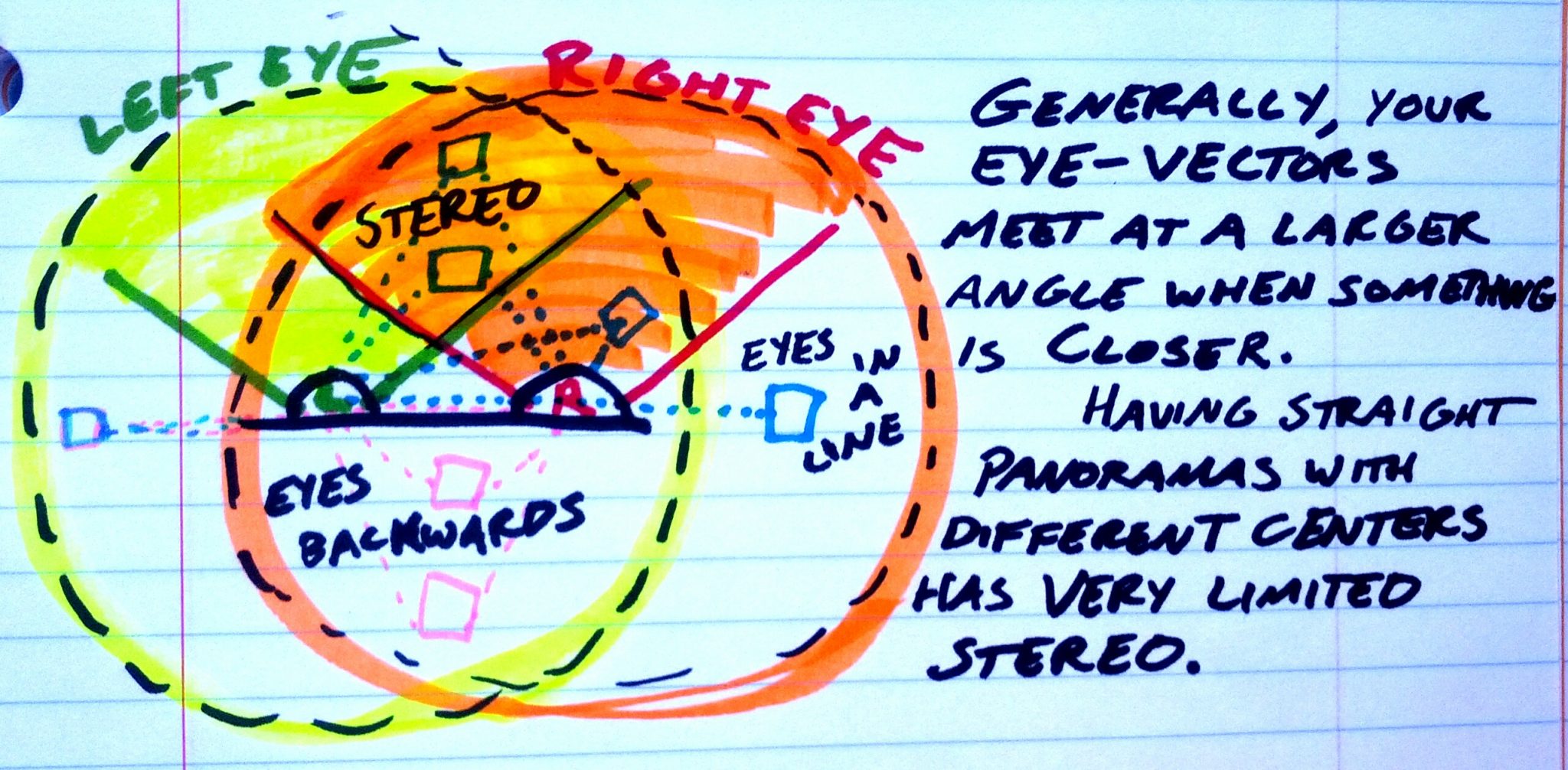

Why you cannot generate a stereoscopic image from just two 360 captures

elevr.com/elevrant-panoramic-twist/

Today we discuss panoramic 3d video capture and how understanding its geometry leads to some new potential focus techniques.

With ordinary 2-camera stereoscopy, like you see at a 3d movie, each camera captures its own partial panorama of video, so the two partial circles of video are part of two side-by-side panoramas, each centering on a different point (where the cameras are).

This is great if you want to stare straight ahead from a fixed position. The eyes can measure the depth of any object in the middle of this Venn diagram of overlap. I think of the line of sight as being vectors shooting out of your eyeballs, and when those vectors hit an object from different angles, you get 3d information. When something’s closer, the vectors hit at a wider angle, and when an object is really far away, the vectors approach being parallel.

But even if both these cameras captured spherically, you’d have problems once you turn your head. Your ability to measure depth lessens and lessens, with generally smaller vector angles, until when you’re staring directly to the right they overlap entirely, zero angle no matter how close or far something is. And when you turn to face behind you, the panoramas are backwards, in a way that makes it impossible to focus your eyes on anything.

So a setup with two separate 360 panoramas captured an eye-width apart is no good for actual panoramas.
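The geometry behind this can be checked numerically. The sketch below is a simplified 2D, top-down model, not anything from the elevr post: two cameras sit an eye-width apart on the x axis, and the function returns the signed angle between their two lines of sight to a point at a given distance and head yaw. The function name and the 0.064 m default baseline (a typical eye separation) are assumptions.

```python
import math

def parallax_angle_deg(distance, yaw_deg, baseline=0.064):
    """Signed angle (degrees) between the two cameras' lines of sight to a point.

    Cameras sit at (-baseline/2, 0) and (+baseline/2, 0); yaw 0 looks along +z.
    A positive result means the left/right views are in the correct order,
    a negative result means they are swapped.
    """
    yaw = math.radians(yaw_deg)
    px, pz = distance * math.sin(yaw), distance * math.cos(yaw)
    lx, lz = px + baseline / 2.0, pz    # vector from the left camera to the point
    rx, rz = px - baseline / 2.0, pz    # vector from the right camera to the point
    cross = lx * rz - lz * rx           # simplifies to baseline * pz
    dot = lx * rx + lz * rz
    return math.degrees(math.atan2(cross, dot))

if __name__ == "__main__":
    for yaw in (0, 45, 90, 135, 180):
        print(yaw, round(parallax_angle_deg(1.0, yaw), 3))
```

Looking straight ahead, closer points give wider angles; at 90 degrees of yaw the angle collapses to zero regardless of distance; and behind the viewer the sign flips, which is the "backwards" panorama described above.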

But you can stitch together a panorama using pairs of cameras an eye-width apart, where the center of the panorama is not on any one camera but at the center of a ball of cameras. Depending on the field of view that gets captured and how it’s stitched together, a four-cameras-per-eye setup might produce something with more or less twist, and more or less twist-reduction between cameras. Ideally, you’d have a many camera setup that lets you get a fully symmetric twist around each panorama. Or, for a circle of lots of cameras facing directly outward, you could crop the footage for each camera: stitch together the right parts of each camera’s capture for the left eye, and the left parts of each camera’s capture for the right eye.

-

Acting Upward

A growing community of collaborators dedicated to helping actors, artists & filmmakers gain experience & improve their craft.

FEATURED POSTS

-

AI and the Law – The Edge – World’s first major AI law enters into force in Europe

The EU Artificial Intelligence (AI) Act went into effect on August 1, 2024.

This act implements a risk-based approach to AI regulation, categorizing AI systems based on the level of risk they pose. High-risk systems, such as those used in healthcare, transport, and law enforcement, face stringent requirements, including risk management, transparency, and human oversight.

Key provisions of the AI Act include:

- Transparency and Safety Requirements: AI systems must be designed to be safe, transparent, and easily understandable to users. This includes labeling requirements for AI-generated content, such as deepfakes (Engadget).

- Risk Management and Compliance: Companies must establish comprehensive governance frameworks to assess and manage the risks associated with their AI systems. This includes compliance programs that cover data privacy, ethical use, and geographical considerations (Faegre Drinker Biddle & Reath LLP) (Passle).

- Copyright and Data Mining: Companies must adhere to copyright laws when training AI models, obtaining proper authorization from rights holders for text and data mining unless it is for research purposes (Engadget).

- Prohibitions and Restrictions: AI systems that manipulate behavior, exploit vulnerabilities, or perform social scoring are prohibited. The act also sets out specific rules for high-risk AI applications and imposes fines for non-compliance (Passle).

For US tech firms, compliance with the EU AI Act is critical due to the EU’s significant market size.

-

Rendering – BRDF – Bidirectional reflectance distribution function

http://en.wikipedia.org/wiki/Bidirectional_reflectance_distribution_function

The bidirectional reflectance distribution function is a four-dimensional function that defines how light is reflected at an opaque surface.
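For reference, the usual radiometric definition can be written as below. The symbols are the conventional ones rather than anything taken from the linked pages: $\omega_i$ and $\omega_o$ are the incoming and outgoing directions, $L$ is radiance, $E$ is irradiance, and $\theta_i$ is the angle between $\omega_i$ and the surface normal. Each direction needs two angles, which is where the four dimensions come from.

```latex
f_r(\omega_i, \omega_o)
  = \frac{\mathrm{d}L_o(\omega_o)}{\mathrm{d}E_i(\omega_i)}
  = \frac{\mathrm{d}L_o(\omega_o)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}
```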

http://www.cs.ucla.edu/~zhu/tutorial/An_Introduction_to_BRDF-Based_Lighting.pdf

In general, when light interacts with matter, a complicated light-matter dynamic occurs. This interaction depends on the physical characteristics of the light as well as the physical composition and characteristics of the matter.

That is, some of the incident light is reflected, some of the light is transmitted, and another portion of the light is absorbed by the medium itself.

A BRDF describes how much light is reflected when light makes contact with a certain material. Similarly, a BTDF (Bi-directional Transmission Distribution Function) describes how much light is transmitted when light makes contact with a certain material.
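As a small worked example of "how much light is reflected", the sketch below uses the simplest common BRDF, the Lambertian (perfectly diffuse) model, which is a choice made here for illustration and not one the linked tutorial prescribes. It integrates the BRDF times the cosine term over the hemisphere to recover the fraction of incident light that is reflected; whatever remains is transmitted or absorbed. The function names are hypothetical.

```python
import math

def lambertian_brdf(albedo):
    """Constant BRDF of an ideal diffuse surface; albedo is the reflected fraction."""
    return albedo / math.pi

def reflected_fraction(brdf_value, steps=256):
    """Integrate a constant BRDF times cos(theta) over the hemisphere.

    Uses the midpoint rule over the polar angle theta; each step covers a ring
    of solid angle 2 * pi * sin(theta) * d_theta.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        ring_solid_angle = 2.0 * math.pi * math.sin(theta) * d_theta
        total += brdf_value * math.cos(theta) * ring_solid_angle
    return total

if __name__ == "__main__":
    reflected = reflected_fraction(lambertian_brdf(0.8))
    print("reflected:", round(reflected, 4))
    print("transmitted or absorbed:", round(1.0 - reflected, 4))
```

The division by pi in the Lambertian BRDF is what makes the integral come out to the albedo, so a surface with albedo at most 1 never reflects more light than it receives.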

http://www.cs.princeton.edu/~smr/cs348c-97/surveypaper.html

It is difficult to establish exactly how far one should go in elaborating the surface model. A truly complete representation of the reflective behavior of a surface might take into account such phenomena as polarization, scattering, fluorescence, and phosphorescence, all of which might vary with position on the surface. Therefore, the variables in this complete function would be:

- incoming and outgoing angle

- incoming and outgoing wavelength

- incoming and outgoing polarization (both linear and circular)

- incoming and outgoing position (which might differ due to subsurface scattering)

- time delay between the incoming and outgoing light ray