LATEST POSTS

-

Moondream Gaze Detection – Open source code

Gaze detection is useful for captioning videos, understanding social dynamics, and specific cases such as sports analytics or detecting when drivers or operators are distracted.

https://huggingface.co/spaces/moondream/gaze-demo

https://moondream.ai/blog/announcing-gaze-detection

-

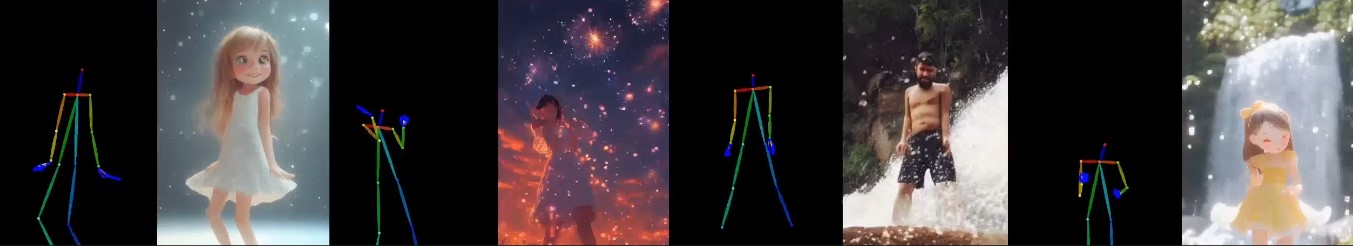

X-Dyna – Expressive Dynamic Human Image Animation

https://x-dyna.github.io/xdyna.github.io

A novel zero-shot, diffusion-based pipeline for animating a single human image using facial expressions and body movements derived from a driving video. It generates realistic, context-aware dynamics for both the subject and the surrounding environment.

-

Flex 1 Alpha – a pre-trained base 8 billion parameter rectified flow transformer

https://huggingface.co/ostris/Flex.1-alpha

Flex.1 started as the FLUX.1-schnell-training-adapter to make training LoRAs on FLUX.1-schnell possible.

-

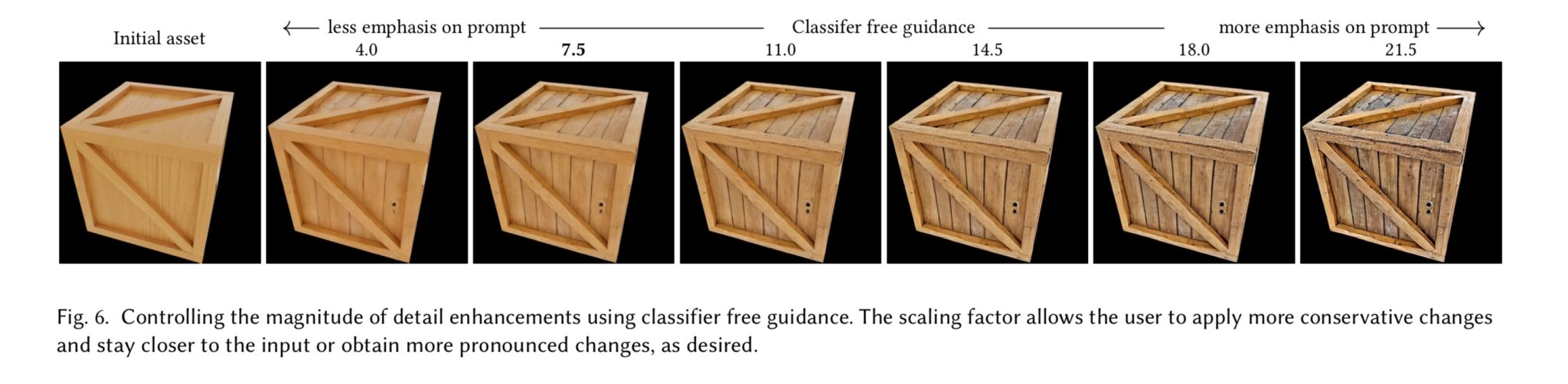

Generative Detail Enhancement for Physically Based Materials

https://arxiv.org/html/2502.13994v1

https://arxiv.org/pdf/2502.13994

A tool for enhancing the detail of physically based materials using an off-the-shelf diffusion model and inverse rendering.

-

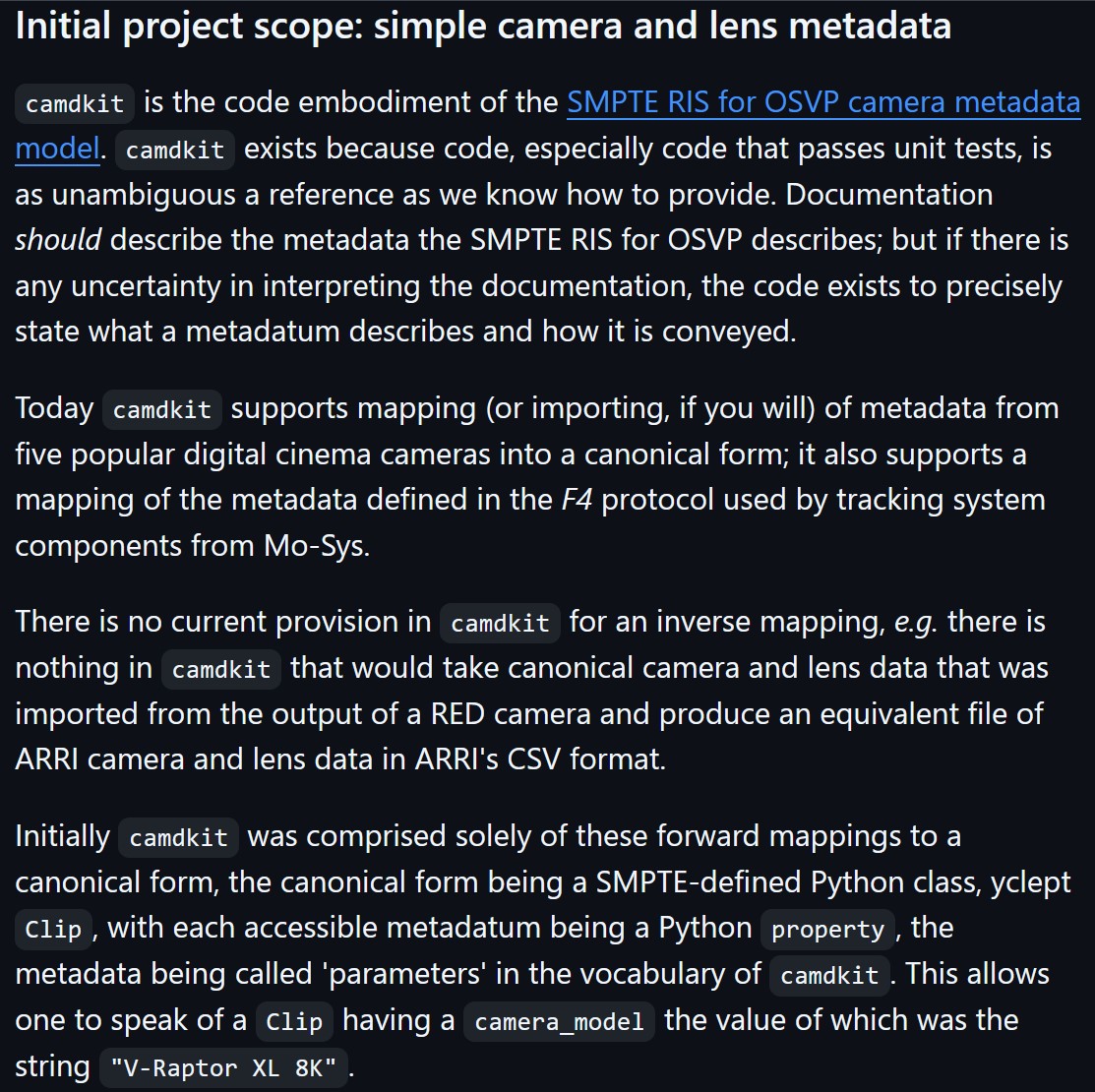

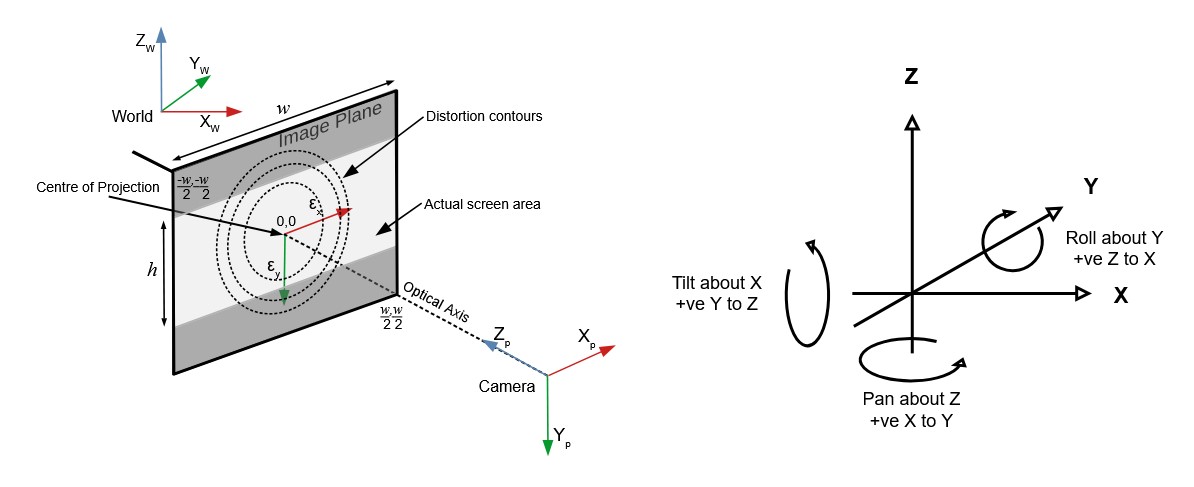

Camera Metadata Toolkit (camdkit) for Virtual Production

https://github.com/SMPTE/ris-osvp-metadata-camdkit

Today, camdkit supports mapping (or importing, if you will) of metadata from five popular digital cinema cameras into a canonical form; it also supports a mapping of the metadata defined in the F4 protocol used by tracking system components from Mo-Sys.

-

OpenTrackIO – free and open-source protocol designed to improve interoperability in Virtual Production

OpenTrackIO defines the schema of JSON samples that contain a wide range of metadata about the device, its transform(s), and the associated camera and lens.
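As a rough illustration of what consuming such a JSON sample might look like, here is a minimal Python sketch. The key names (`device`, `transforms`, `translation`, `focalLength`, and so on) and the values are assumptions made up for this example; they are not copied from the published OpenTrackIO schema, which should be consulted for the real field names.

```python
import json

# Hypothetical sample: the key names and values below are illustrative
# assumptions, not taken from the published OpenTrackIO schema.
RAW_SAMPLE = """
{
  "device": {"make": "ExampleCo", "model": "Tracker-1"},
  "transforms": [
    {"translation": {"x": 1.0, "y": 2.0, "z": 1.5},
     "rotation": {"pan": 90.0, "tilt": 0.0, "roll": 0.0}}
  ],
  "camera": {"label": "A-cam"},
  "lens": {"focalLength": 35.0}
}
"""

# Top-level groups this sketch assumes every sample carries.
REQUIRED_KEYS = ("device", "transforms", "camera", "lens")


def parse_sample(text):
    """Decode one JSON sample and check that the assumed groups are present."""
    sample = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in sample]
    if missing:
        raise ValueError(f"sample is missing keys: {missing}")
    return sample


def first_translation(sample):
    """Return the (x, y, z) translation of the first transform in the sample."""
    t = sample["transforms"][0]["translation"]
    return (t["x"], t["y"], t["z"])


sample = parse_sample(RAW_SAMPLE)
position = first_translation(sample)
print(position)
```

Checking for the expected groups up front keeps a malformed sample from failing later with a less helpful `KeyError`.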

-

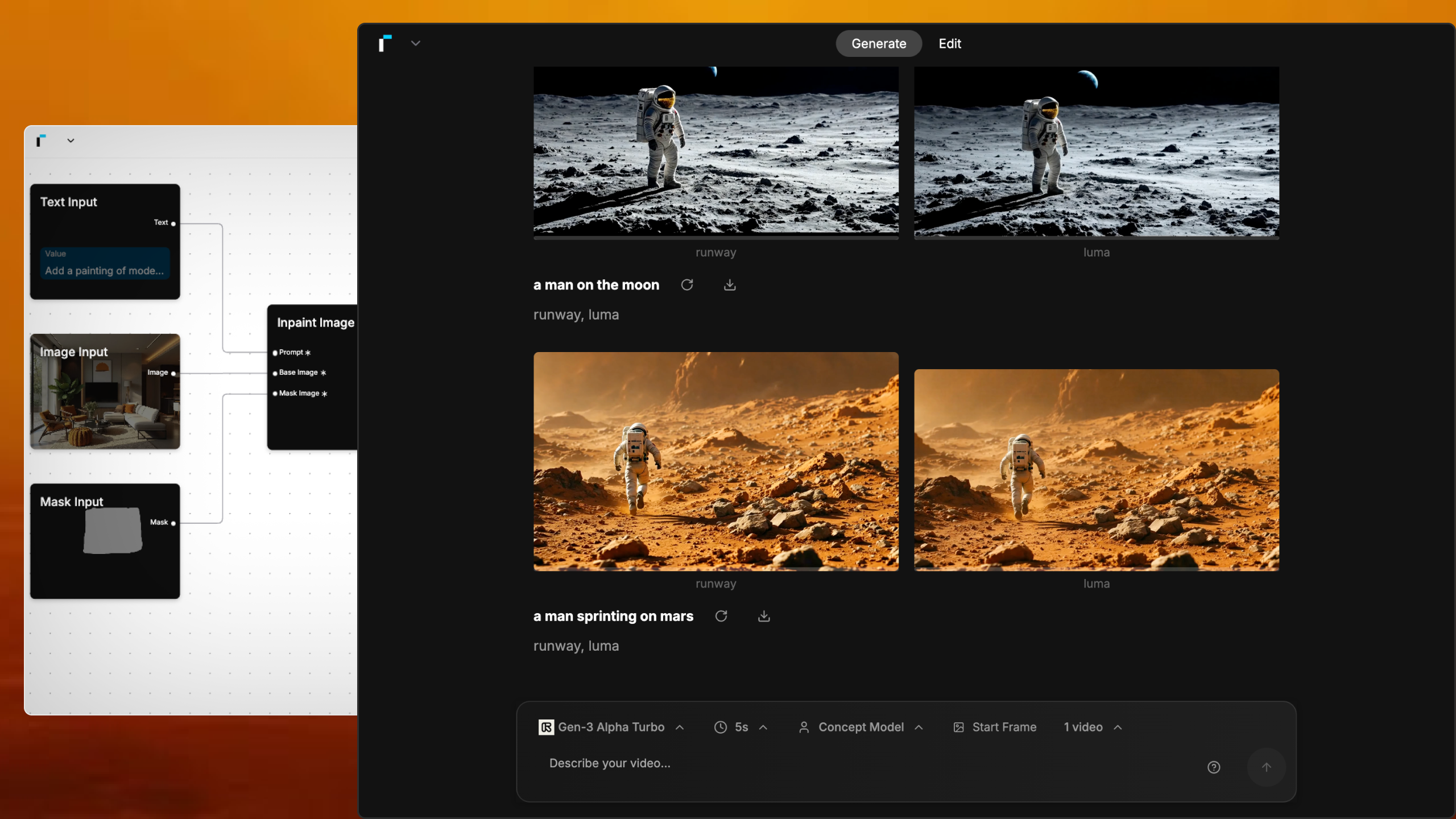

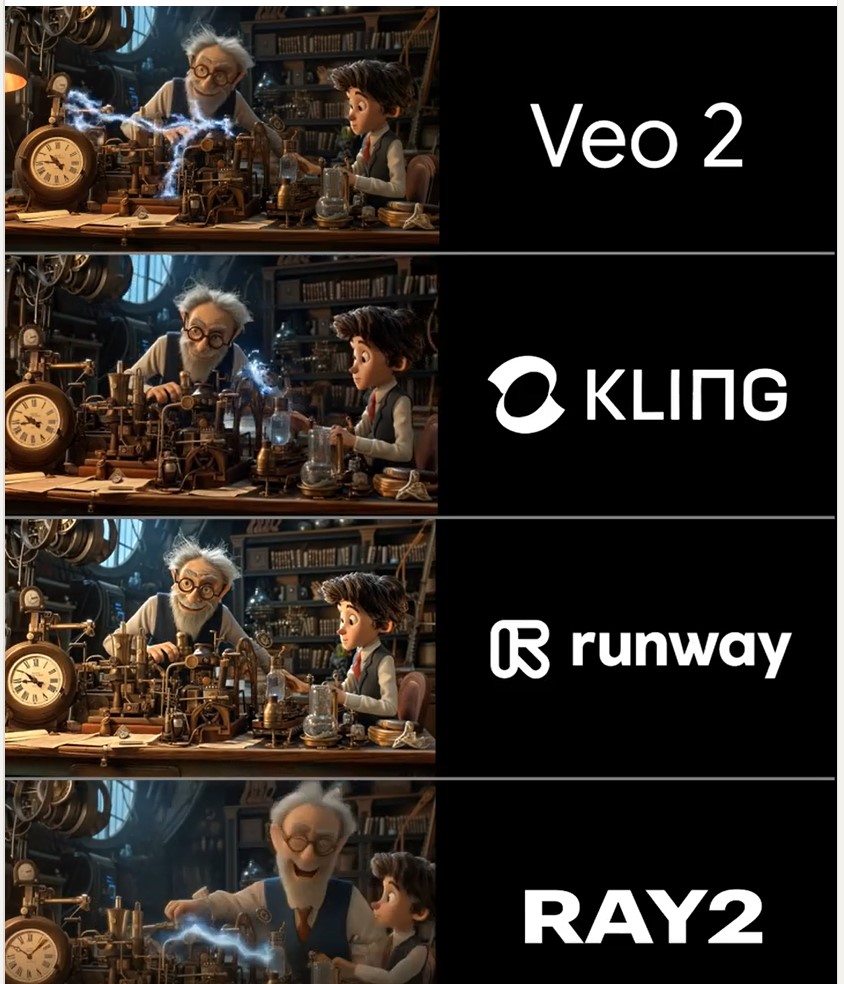

Martin Gent – Comparing current video AI models

https://www.linkedin.com/posts/martingent_imagineapp-veo2-kling-activity-7298979787962806272-n0Sn

🔹 Veo 2 – After the legendary prompt adherence of Veo 2 T2V, I have to say I2V is a little disappointing, especially when it comes to camera moves. You often get those Sora-like jump-cuts too, which can be annoying.

🔹 Kling 1.6 Pro – Still the one to beat for I2V, both for image quality and prompt adherence. It’s also a lot cheaper than Veo 2. Generations can be slow, but are usually worth the wait.

🔹 Runway Gen 3 – Useful for certain shots, but overdue an update. The worst performer here by some margin. Bring on Gen 4!

🔹 Luma Ray 2 – I love the energy and inventiveness Ray 2 brings, but those came with some image quality issues. I want to test more with this model though for sure.

-

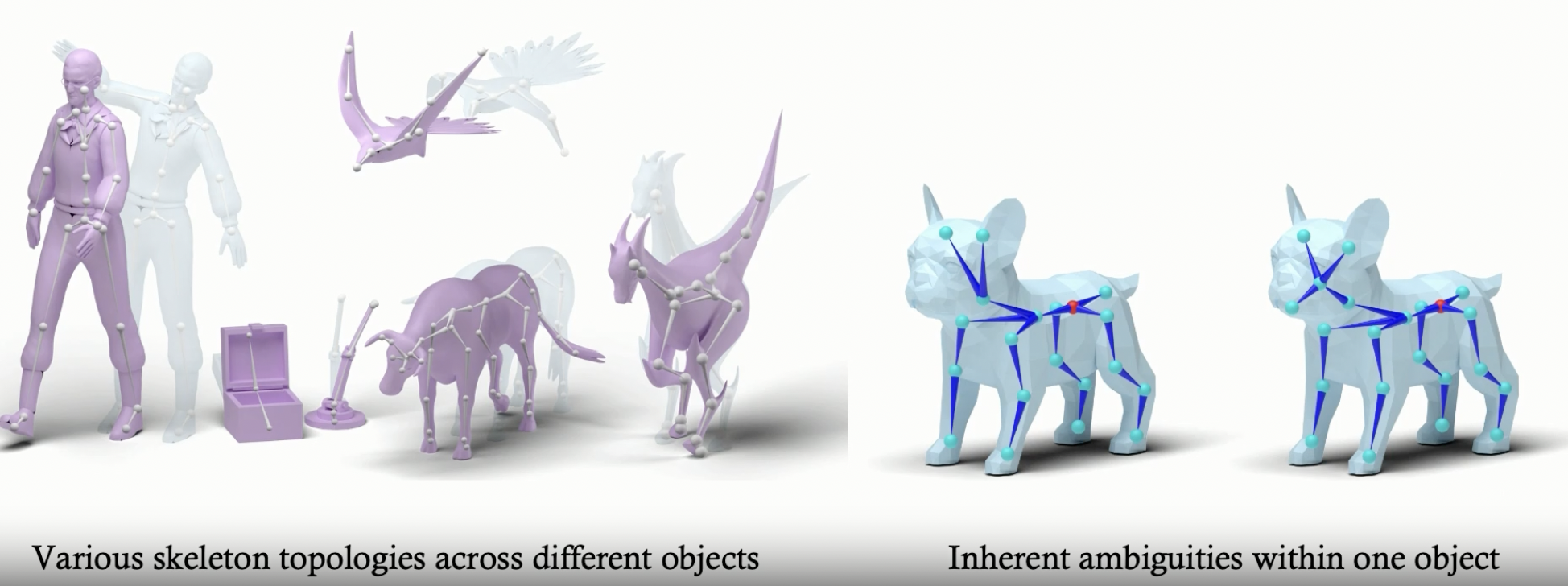

RigAnything – Template-Free Autoregressive Rigging for Diverse 3D Assets

https://www.liuisabella.com/RigAnything

RigAnything was developed through a collaboration between UC San Diego, Adobe Research, and Hillbot Inc. It addresses one of 3D animation’s most persistent challenges: automatic rigging.

- Template-Free Autoregressive Rigging. A transformer-based model that sequentially generates skeletons without predefined templates, enabling automatic rigging across diverse 3D assets through probabilistic joint prediction and skinning weight assignment.

- Support Arbitrary Input Pose. Generates high-quality skeletons for shapes in any pose through online joint pose augmentation during training, eliminating the common rest-pose requirement of existing methods and enabling broader real-world applications.

- Fast Rigging Speed. Achieves 20x faster performance than existing template-based methods, completing rigging in under 2 seconds per shape.

FEATURED POSTS

-

Mind-blowing ChatGPT extensions to use it anywhere

https://medium.com/geekculture/6-chatgpt-mind-blowing-extensions-to-use-it-anywhere-db6638640ec7

- Use ChatGPT anywhere — Google Chrome Extension https://github.com/gragland/chatgpt-chrome-extension

- Combining ChatGPT with search engines https://github.com/wong2/chatgpt-google-extension

- Using voice commands with ChatGPT https://chrome.google.com/webstore/detail/promptheus-converse-with/eipjdkbchadnamipponehljdnflolfki

- Integrating ChatGPT in Telegram and WhatsApp https://github.com/altryne/chatGPT-telegram-bot/

- Integrating ChatGPT in Google Docs or Microsoft Word https://github.com/cesarhuret/docGPT

- Save everything you have generated in ChatGPT https://github.com/liady/ChatGPT-pdf

- ChatGPT Writer: It uses ChatGPT/ATLAS to generate emails or replies based on your prompt https://chrome.google.com/webstore/detail/chatgpt-writer-write-mail/pdnenlnelpdomajfejgapbdpmjkfpjkp/related

- WebChatGPT gives you relevant results from the web https://chrome.google.com/webstore/detail/webchatgpt-chatgpt-with-i/lpfemeioodjbpieminkklglpmhlngfcn

- YouTube Summary with ChatGPT/ATLAS https://chrome.google.com/webstore/detail/youtube-summary-with-chat/nmmicjeknamkfloonkhhcjmomieiodli/related

- TweetGPT: It uses ChatGPT/ATLAS to write your tweets, reply, comment https://github.com/yaroslav-n/tweetGPT

-

HDRI shooting and editing by Xuan Prada and Greg Zaal

www.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required
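To make the white-balance item on the checklist concrete, here is a minimal Python sketch of how a neutral patch from the chart could be used to derive per-channel gains. It assumes linear (scene-referred) RGB values and normalises to the green channel; the patch values are hypothetical, and this is one common approach rather than the specific workflow described in the linked articles.

```python
def white_balance_gains(patch_rgb):
    """Per-channel gains that make a neutral patch neutral, normalised to green.

    Assumes patch_rgb is the average linear RGB value sampled from a
    neutral grey patch of the chart.
    """
    r, g, b = patch_rgb
    if min(r, g, b) <= 0:
        raise ValueError("patch values must be positive")
    return (g / r, 1.0, g / b)


def apply_gains(pixel, gains):
    """Scale each channel of a linear RGB pixel by its gain."""
    return tuple(channel * gain for channel, gain in zip(pixel, gains))


# Hypothetical measurement: a grey patch shot under warm light reads
# too red and not blue enough.
measured_patch = (0.24, 0.18, 0.12)

gains = white_balance_gains(measured_patch)
balanced_patch = apply_gains(measured_patch, gains)
print(gains)
print(balanced_patch)
```

Applying the same gains to every pixel of the bracketed exposures shot under that light removes the colour cast the patch revealed.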

Methodology


-

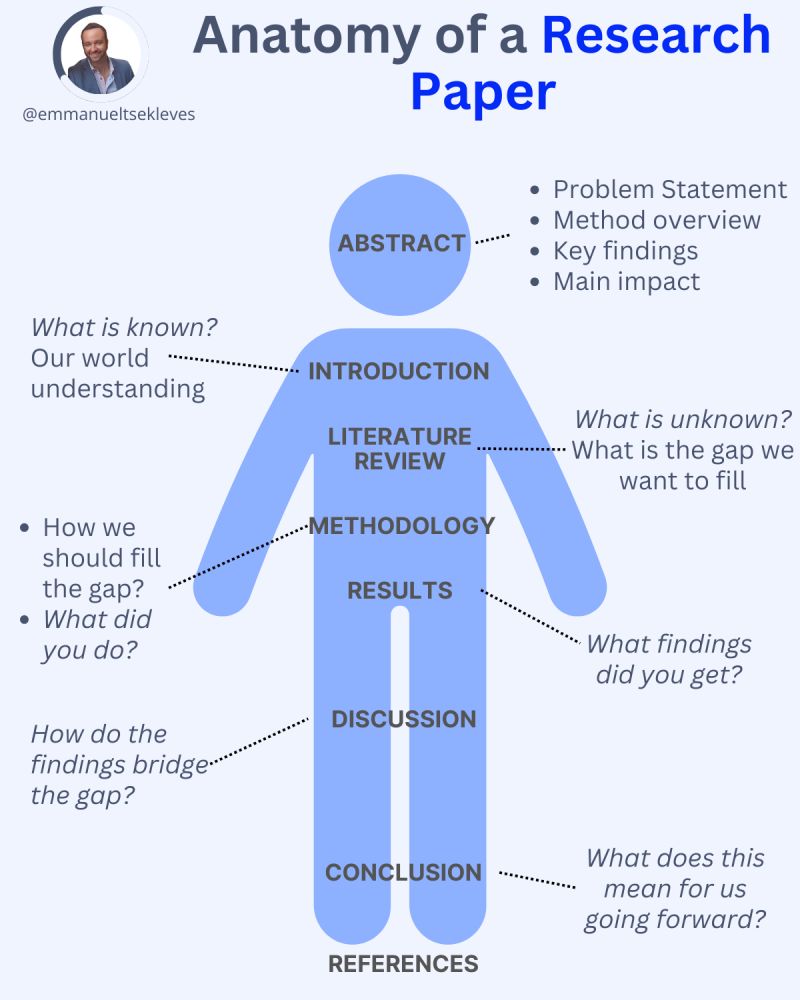

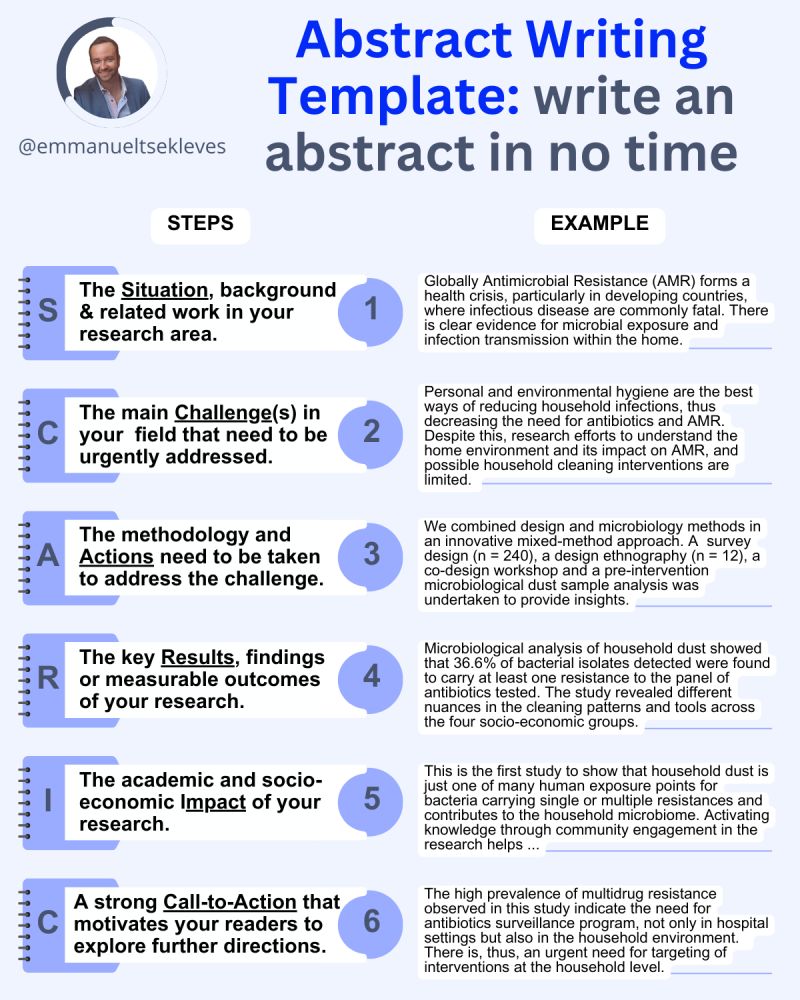

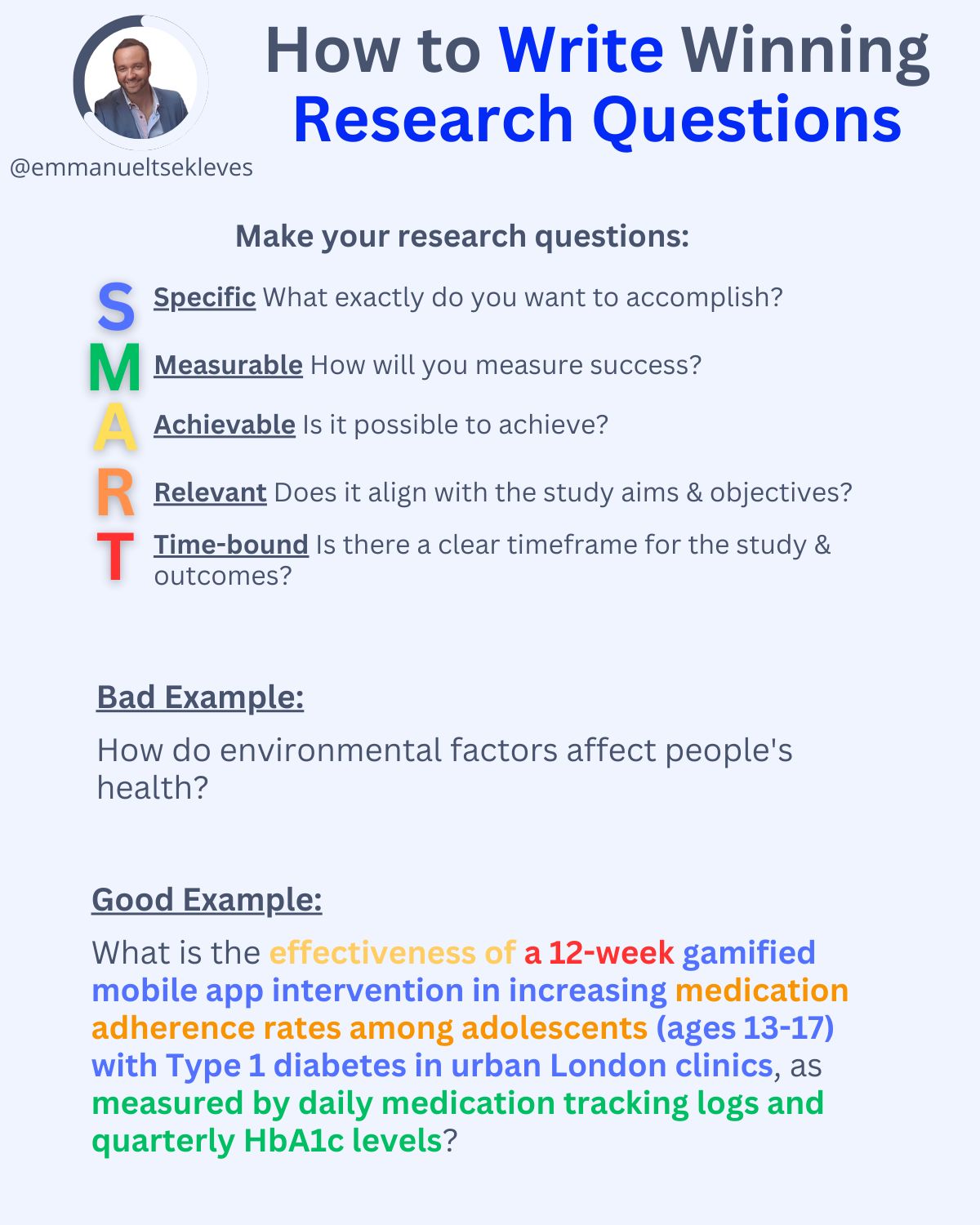

Emmanuel Tsekleves – Writing Research Papers

Here’s the journey of crafting a compelling paper:

1. ABSTRACT

This is your elevator pitch.

Give a methodology overview.

Paint the problem you’re solving.

Highlight key findings and their impact.

2. INTRODUCTION

Start with what we know.

Set the stage for our current understanding.

Hook your reader with the relevance of your work.

3. LITERATURE REVIEW

Identify what’s unknown.

Spot the gaps in current knowledge.

Your job in the next sections is to fill this gap.

4. METHODOLOGY

What did you do?

Outline how you’ll fill that gap.

Be transparent about your approach.

Make it reproducible so others can follow.

5. RESULTS

Let the data speak for itself.

Present your findings clearly.

Keep it concise and focused.

6. DISCUSSION

Now, connect the dots.

Discuss implications and significance.

How do your findings bridge the knowledge gap?

7. CONCLUSION

Wrap it up with future directions.

What does this mean for us moving forward?

Leave the reader with a call to action or reflection.

8. REFERENCES

Acknowledge the giants whose shoulders you stand on.

A robust reference list shows the depth of your research.

-

Survivorship Bias: The error resulting from systematically focusing on successes and ignoring failures. How a young statistician saved his planes during WW2.

A young statistician saved their lives.

His insight (and how it can change yours):


During World War II, the U.S. wanted to add reinforcement armor to specific areas of its planes.

Analysts examined returning bombers, plotted the bullet holes and damage on them (as in the image below), and came to the conclusion that adding armor to the tail, body, and wings would improve their odds of survival.

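But a young statistician named Abraham Wald noted that this would be a tragic mistake. By only plotting data on the planes that returned, they were systematically omitting the data on a critical, informative subset: the planes that were damaged and unable to return. The areas with few or no bullet holes on the returning planes were the ones that needed armor, because planes hit there were the ones that did not make it back.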
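The effect can be reproduced with a toy simulation. The Python sketch below assumes each plane takes exactly one hit in a uniformly random section and is lost with a section-dependent probability; the section names and loss probabilities are hypothetical numbers chosen only to illustrate the bias, not historical data.

```python
import random

# Hypothetical sections and loss probabilities, chosen only for illustration.
SECTIONS = ["engine", "cockpit", "fuselage", "wings", "tail"]
LOSS_PROBABILITY = {
    "engine": 0.8,
    "cockpit": 0.7,
    "fuselage": 0.1,
    "wings": 0.1,
    "tail": 0.1,
}


def simulate(num_planes=10000, seed=0):
    """Count hits per section for all planes and for the planes that returned."""
    rng = random.Random(seed)
    all_hits = {section: 0 for section in SECTIONS}
    returned_hits = {section: 0 for section in SECTIONS}
    for _ in range(num_planes):
        section = rng.choice(SECTIONS)  # one hit per plane, uniform over sections
        all_hits[section] += 1
        # The plane returns only if the hit does not bring it down.
        if rng.random() >= LOSS_PROBABILITY[section]:
            returned_hits[section] += 1
    return all_hits, returned_hits


def share(counts, section):
    """Fraction of all counted hits that landed on the given section."""
    return counts[section] / sum(counts.values())


all_hits, returned_hits = simulate()
for section in SECTIONS:
    print(
        f"{section:9s} all={share(all_hits, section):.2f} "
        f"returned={share(returned_hits, section):.2f}"
    )
```

Although hits are spread evenly across all planes, the returned sample shows engine and cockpit hits as rare, which is exactly the pattern that would mislead an analyst who only sees the survivors.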

-

Willem Zwarthoed – ACES gamut in VFX production PDF

https://www.provideocoalition.com/color-management-part-12-introducing-aces/

Local copy:

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications