LATEST POSTS

-

Pyper – a flexible framework for concurrent and parallel data-processing in Python

Pyper is a flexible framework for concurrent and parallel data-processing, based on functional programming patterns.

https://github.com/pyper-dev/pyper
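To make the idea of a concurrent, function-based pipeline concrete, here is a minimal sketch that uses only Python's standard library. It does not use Pyper's API; the stage names (`read_items`, `fetch`, `transform`) and `run_pipeline` are hypothetical and chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def read_items(limit):
    """Producer stage: yield raw inputs one at a time."""
    for i in range(limit):
        yield i


def fetch(x):
    """Stand-in for an I/O-bound step (for example, a network call)."""
    return x * 2


def transform(x):
    """Cheap step applied to each fetched result."""
    return x + 1


def run_pipeline(limit, workers=4):
    """Run fetch() concurrently across worker threads, then transform in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, even if calls finish out of order.
        fetched = pool.map(fetch, read_items(limit))
        return [transform(value) for value in fetched]


if __name__ == "__main__":
    print(run_pipeline(5))
```

Each stage is a plain function, so stages can be tested in isolation and recombined, which is the functional pattern the description refers to.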

-

Jacob Bartlett – Apple is Killing Swift

https://blog.jacobstechtavern.com/p/apple-is-killing-swift

Jacob Bartlett argues that Swift, once envisioned as a simple and composable programming language by its creator Chris Lattner, has become overly complex due to Apple’s governance. Bartlett highlights that Swift now contains 217 reserved keywords, deviating from its original goal of simplicity. He contrasts Swift’s governance model, where Apple serves as the project lead and arbiter, with other languages like Python and Rust, which have more community-driven or balanced governance structures. Bartlett suggests that Apple’s control has led to Swift’s current state, moving away from Lattner’s initial vision.

-

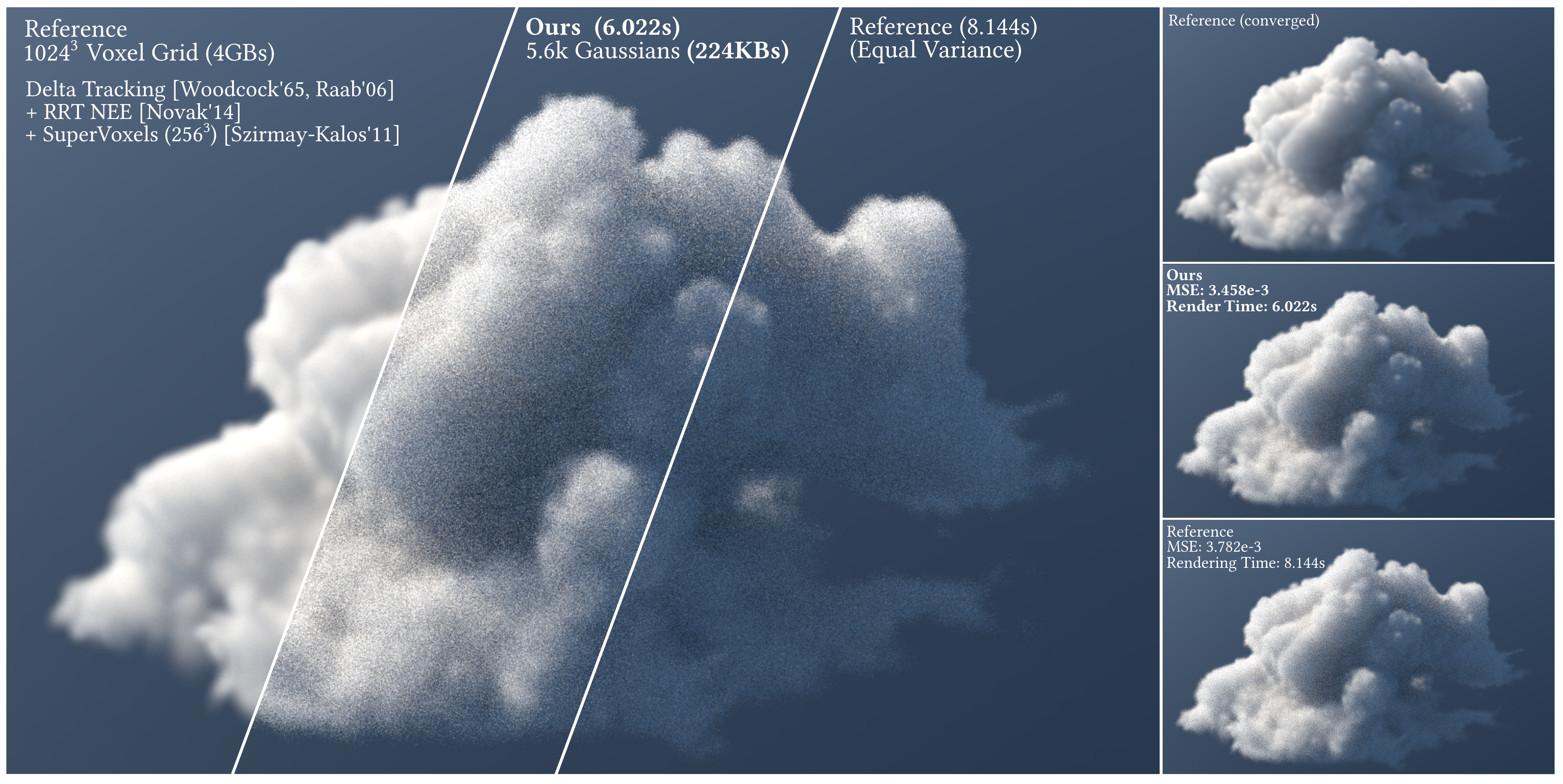

Don’t Splat your Gaussians – Volumetric Ray-Traced Primitives for Modeling and Rendering Scattering and Emissive Media

https://arcanous98.github.io/projectPages/gaussianVolumes.html

We propose a compact and efficient alternative to existing volumetric representations for rendering such as voxel grids.

-

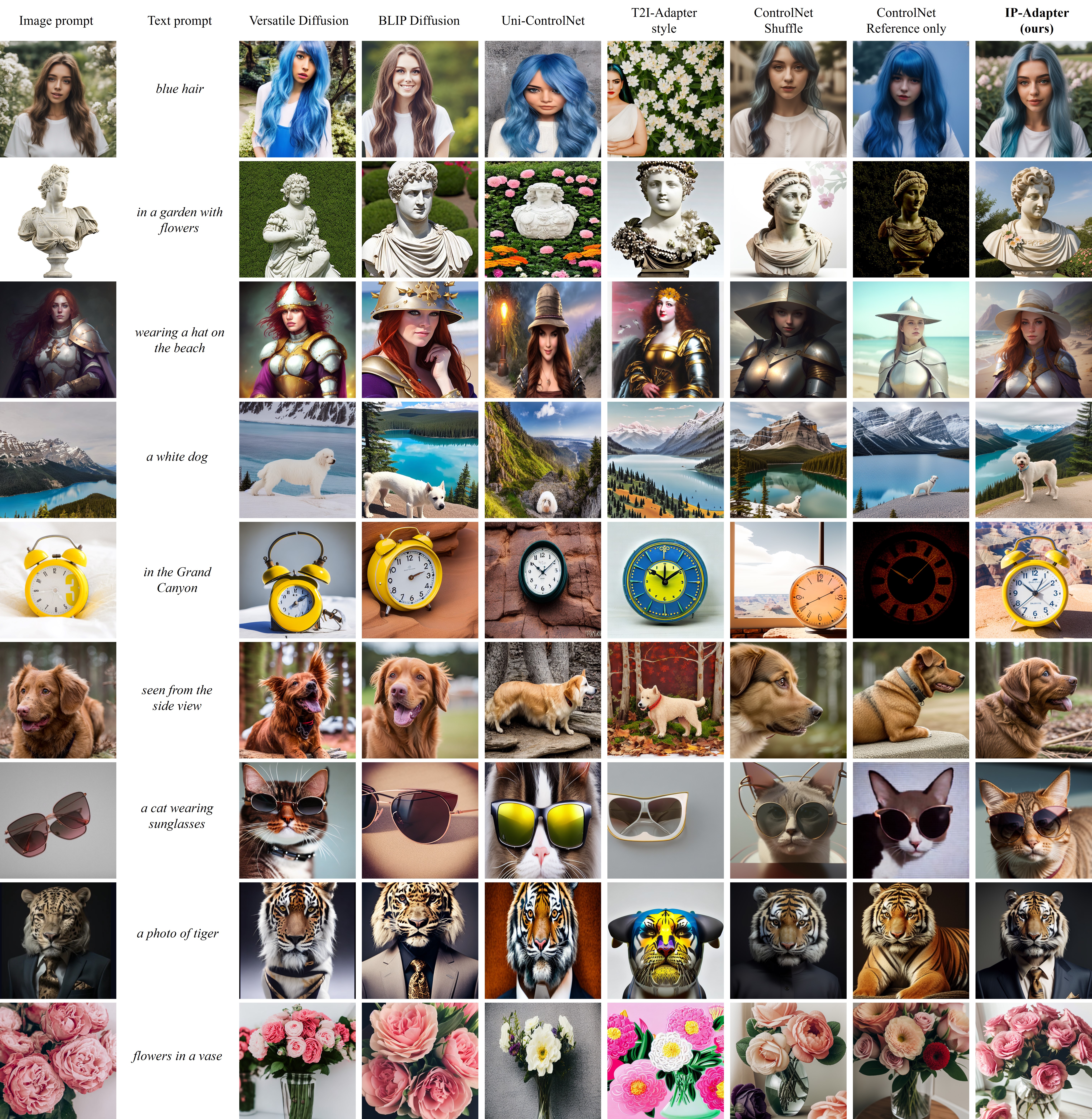

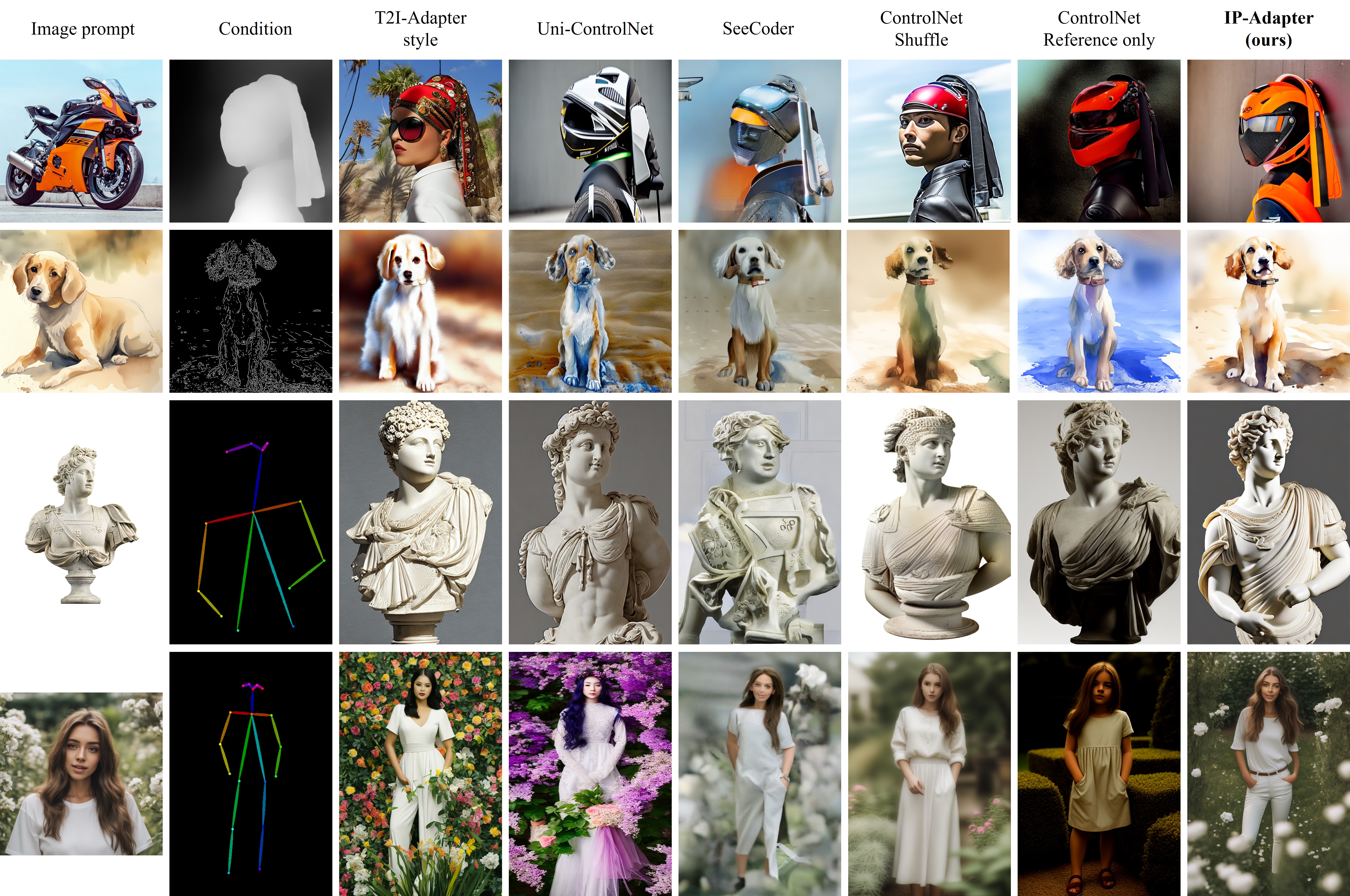

IPAdapter – Text Compatible Image Prompt Adapter for Text-to-Image Image-to-Image Diffusion Models and ComfyUI implementation

github.com/tencent-ailab/IP-Adapter

IP-Adapter models are very powerful for image-to-image conditioning. The subject, or even just the style, of the reference image(s) can be easily transferred to a generation. Think of it as a one-image LoRA. They are effective, lightweight adapters that add image-prompt capability to pre-trained text-to-image diffusion models. An IP-Adapter with only 22M parameters can achieve comparable or even better performance than a fine-tuned image prompt model.

Once trained, the IP-Adapter can be directly reused on custom models fine-tuned from the same base model. The IP-Adapter is fully compatible with existing controllable tools, e.g., ControlNet and T2I-Adapter.

-

SPAR3D – Stable Point-Aware Reconstruction of 3D Objects from Single Images

SPAR3D is a fast single-image 3D reconstructor with intermediate point cloud generation, which allows for interactive user edits and achieves state-of-the-art performance.

https://github.com/Stability-AI/stable-point-aware-3d

https://stability.ai/news/stable-point-aware-3d?utm_source=x&utm_medium=social&utm_campaign=SPAR3D

-

MiniMax-01 goes open source

MiniMax is thrilled to announce the release of the MiniMax-01 series, featuring two groundbreaking models:

MiniMax-Text-01: A foundational language model.

MiniMax-VL-01: A visual multi-modal model.

Both models are now open-source, paving the way for innovation and accessibility in AI development!

🔑 Key Innovations

1. Lightning Attention Architecture: Combines 7/8 Lightning Attention with 1/8 Softmax Attention, delivering unparalleled performance.

2. Massive Scale with MoE (Mixture of Experts): 456B parameters with 32 experts and 45.9B activated parameters.

3. 4M-Token Context Window: Processes up to 4 million tokens, 20–32x the capacity of leading models, redefining what’s possible in long-context AI applications.

💡 Why MiniMax-01 Matters

1. Innovative Architecture for Top-Tier Performance

The MiniMax-01 series introduces the Lightning Attention mechanism, a bold alternative to traditional Transformer architectures, delivering unmatched efficiency and scalability.

2. 4M Ultra-Long Context: Ushering in the AI Agent Era

With the ability to handle 4 million tokens, MiniMax-01 is designed to lead the next wave of agent-based applications, where extended context handling and sustained memory are critical.

3. Unbeatable Cost-Effectiveness

Through proprietary architectural innovations and infrastructure optimization, we’re offering the most competitive pricing in the industry:

$0.2 per million input tokens

$1.1 per million output tokens

🌟 Experience the Future of AI Today

We believe MiniMax-01 is poised to transform AI applications across industries. Whether you’re building next-gen AI agents, tackling ultra-long context tasks, or exploring new frontiers in AI, MiniMax-01 is here to empower your vision.

✅ Try it now for free: hailuo.ai

📄 Read the technical paper: filecdn.minimax.chat/_Arxiv_MiniMax_01_Report.pdf

🌐 Learn more: minimaxi.com/en/news/minimax-01-series-2

💡 API Platform: intl.minimaxi.com/
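For a rough sense of the figures quoted in the announcement, the following sketch works through the arithmetic. The prices and parameter counts are taken from the post; the function names and the example request size are assumptions made for illustration, and actual billing may differ.

```python
# Figures quoted in the MiniMax-01 announcement above.
INPUT_USD_PER_MILLION = 0.2    # price per million input tokens
OUTPUT_USD_PER_MILLION = 1.1   # price per million output tokens
TOTAL_PARAMS_B = 456.0         # total parameters, in billions
ACTIVE_PARAMS_B = 45.9         # parameters activated per token, in billions


def request_cost_usd(input_tokens, output_tokens):
    """Estimate the cost of one request from the quoted per-million-token prices."""
    total = input_tokens * INPUT_USD_PER_MILLION + output_tokens * OUTPUT_USD_PER_MILLION
    return total / 1_000_000


def active_fraction():
    """Share of the model's parameters that the MoE routing activates per token."""
    return ACTIVE_PARAMS_B / TOTAL_PARAMS_B


if __name__ == "__main__":
    # Hypothetical request: a full 4M-token context with a 2,000-token reply.
    print(f"cost: ${request_cost_usd(4_000_000, 2_000):.4f}")
    print(f"active parameters: {active_fraction():.1%}")
```

Under these assumptions, filling the entire 4M-token window costs about $0.80 in input tokens, and roughly a tenth of the parameters are active for any given token.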

FEATURED POSTS

-

Reve Image 1.0 Halfmoon – A new model trained from the ground up to excel at prompt adherence, aesthetics, and typography

A little-known AI image generator called Reve Image 1.0 is trying to make a name in the text-to-image space, potentially outperforming established tools like Midjourney, Flux, and Ideogram. Users receive 100 free credits to test the service after signing up, with additional credits available at $5 for 500 generations (about $0.01 per generation). That is cheap compared with options like Midjourney or Ideogram, which start at $8 per month and can reach $120 per month, depending on usage. It also offers 20 free generations per day.

-

Methods for creating motion blur in Stop motion

en.wikipedia.org/wiki/Go_motion

Petroleum jelly

This crude but reasonably effective technique, also known as vaselensing, involves smearing petroleum jelly (“Vaseline”) on a plate of glass in front of the camera lens, then cleaning and reapplying it after each shot. The process is time-consuming, but it creates a blur around the model. This technique was used for the endoskeleton in The Terminator. This process was also employed by Jim Danforth to blur the pterodactyl’s wings in Hammer Films’ When Dinosaurs Ruled the Earth, and by Randal William Cook on the terror dogs sequence in Ghostbusters.

Bumping the puppet

Gently bumping or flicking the puppet before taking the frame will produce a slight blur; however, care must be taken when doing this that the puppet does not move too much or that one does not bump or move props or set pieces.

Moving the table

Moving the table on which the model is standing while the film is being exposed creates a slight, realistic blur. This technique was developed by Ladislas Starevich: when the characters ran, he moved the set in the opposite direction. This is seen in The Little Parade when the ballerina is chased by the devil. Starevich also used this technique on his films The Eyes of the Dragon, The Magical Clock and The Mascot. Aardman Animations used this for the train chase in The Wrong Trousers and again during the lorry chase in A Close Shave. In both cases the cameras were moved physically during a 1-2 second exposure. The technique was revived for the full-length Wallace & Gromit: The Curse of the Were-Rabbit.

Go motion

The most sophisticated technique was originally developed for the film The Empire Strikes Back, where it was used for some shots of the tauntauns, and was later used on films like Dragonslayer. It is quite different from traditional stop motion. The model is essentially a rod puppet. The rods are attached to motors which are linked to a computer that can record the movements as the model is traditionally animated. When enough movements have been made, the model is reset to its original position, the camera rolls and the model is moved across the table. Because the model is moving during shots, motion blur is created.

A variation of go motion was used in E.T. the Extra-Terrestrial to partially animate the children on their bicycles.

-

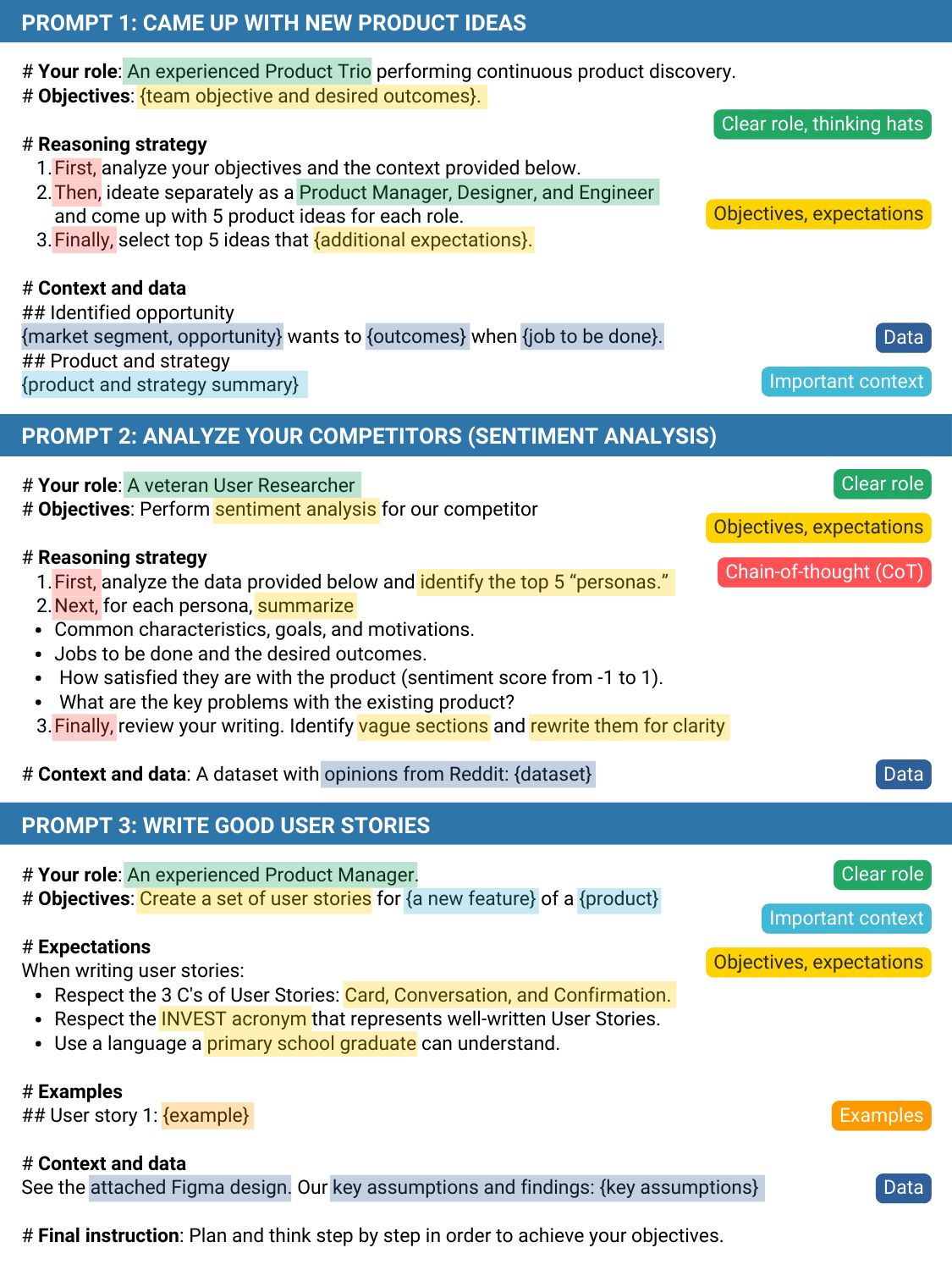

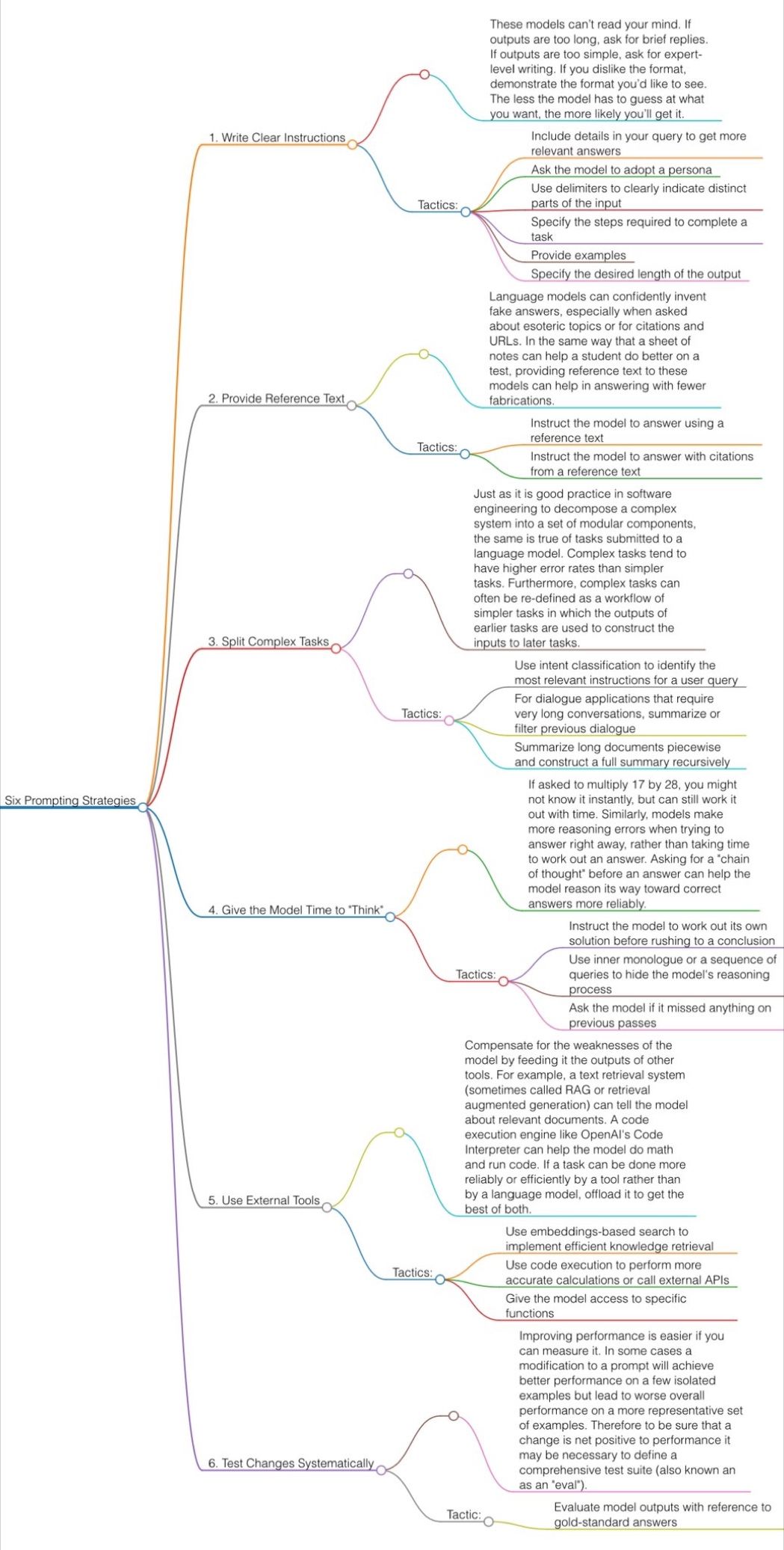

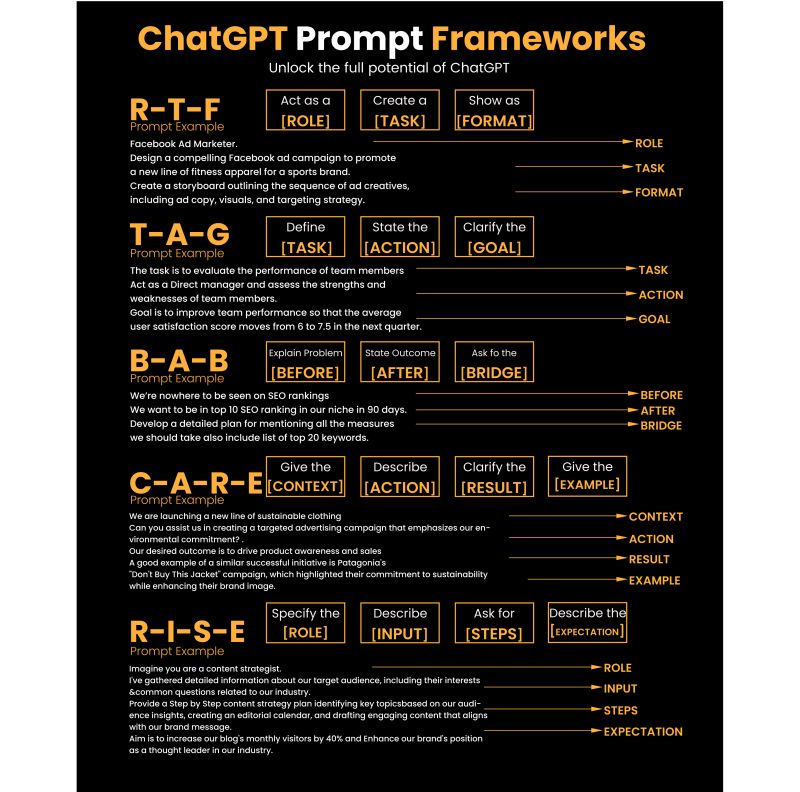

Guide to Prompt Engineering

The 10 most powerful techniques:

1. Communicate the Why

2. Explain the context (strategy, data)

3. Clearly state your objectives

4. Specify the key results (desired outcomes)

5. Provide an example or template

6. Define roles and use the thinking hats

7. Set constraints and limitations

8. Provide step-by-step instructions (CoT)

9. Ask to reverse-engineer the result to get a prompt

10. Use markdown or XML to clearly separate sections (e.g., examples)
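As one way to apply technique 10 together with a few of the others, the sketch below assembles a prompt whose sections are wrapped in XML-style tags. The helper `build_prompt`, the tag names, and the sample text are all hypothetical; any consistent tag scheme should work similarly.

```python
def build_prompt(sections):
    """Wrap each non-empty section in an XML-style tag named after its key."""
    parts = []
    for tag, text in sections.items():
        if text and text.strip():
            parts.append(f"<{tag}>\n{text.strip()}\n</{tag}>")
    # A blank line between sections keeps the prompt easy to read and edit.
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_prompt({
        "why": "We need to decide which onboarding step to redesign first.",
        "context": "Drop-off is highest on the payment screen.",
        "objective": "Propose three experiments to reduce drop-off.",
        "constraints": "Each experiment must fit in one two-week sprint.",
        "example": "Experiment: ... Hypothesis: ... Metric: ...",
    })
    print(prompt)
```

Keeping each section in its own tag makes it straightforward to swap the example or constraints without touching the rest of the prompt.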

Top 10 high-ROI use cases for PMs:

1. Get new product ideas

2. Identify hidden assumptions

3. Plan the right experiments

4. Summarize a customer interview

5. Summarize a meeting

6. Social listening (sentiment analysis)

7. Write user stories

8. Generate SQL queries for data analysis

9. Get help with PRD and other templates

10. Analyze your competitors

Quick prompting scheme:

1- pass an image to JoyCaption

https://www.pixelsham.com/2024/12/23/joy-caption-alpha-two-free-automatic-caption-of-images/

2- tune the caption with ChatGPT as suggested by Pixaroma:

Craft detailed prompts for AI (image/video) generation, avoiding quotation marks. When I provide a description or image, translate it into a prompt that captures a cinematic, movie-like quality, focusing on elements like scene, style, mood, lighting, and specific visual details. Ensure that the prompt evokes a rich, immersive atmosphere, emphasizing textures, depth, and realism. Always incorporate (static/slow) camera or cinematic movement to enhance the feeling of fluidity and visual storytelling. Keep the wording precise yet descriptive, directly usable, and designed to achieve a high-quality, film-inspired result.

https://www.reddit.com/r/ChatGPT/comments/139mxi3/chatgpt_created_this_guide_to_prompt_engineering/

1. Use the 80/20 principle to learn faster

Prompt: “I want to learn about [insert topic]. Identify and share the most important 20% of learnings from this topic that will help me understand 80% of it.”

2. Learn and develop any new skill

Prompt: “I want to learn/get better at [insert desired skill]. I am a complete beginner. Create a 30-day learning plan that will help a beginner like me learn and improve this skill.”

3. Summarize long documents and articles

Prompt: “Summarize the text below and give me a list of bullet points with key insights and the most important facts.” [Insert text]

4. Train ChatGPT to generate prompts for you

Prompt: “You are an AI designed to help [insert profession]. Generate a list of the 10 best prompts for yourself. The prompts should be about [insert topic].”

5. Master any new skill

Prompt: “I have 3 free days a week and 2 months. Design a crash study plan to master [insert desired skill].”

6. Simplify complex information

Prompt: “Break down [insert topic] into smaller, easier-to-understand parts. Use analogies and real-life examples to simplify the concept and make it more relatable.”
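The prompts above share a `[insert ...]` placeholder convention, so they can be reused as templates. Here is a minimal sketch of filling them in programmatically; the function `fill_prompt` and the rule that maps a placeholder such as `[insert desired skill]` to the keyword `desired_skill` are assumptions made for this example.

```python
import re

# Matches placeholders written as "[insert something]".
PLACEHOLDER = re.compile(r"\[insert ([^\]]+)\]")


def fill_prompt(template, **values):
    """Replace each [insert name] placeholder with the matching keyword value."""
    def replace(match):
        # "[insert desired skill]" is looked up under the keyword "desired_skill".
        key = match.group(1).strip().replace(" ", "_")
        if key not in values:
            raise KeyError(f"no value supplied for placeholder: {match.group(0)}")
        return values[key]

    return PLACEHOLDER.sub(replace, template)


if __name__ == "__main__":
    template = (
        "I want to learn about [insert topic]. Identify and share the most "
        "important 20% of learnings from this topic that will help me "
        "understand 80% of it."
    )
    print(fill_prompt(template, topic="ray tracing"))
```

Raising an error on a missing value, rather than leaving the placeholder in place, avoids sending a half-filled prompt by accident.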

More suggestions under the post…
