LATEST POSTS

-

Odyssey.systems Explorer – pioneering generative world models and Gaussian splatting

Odyssey, together with Pixar co-founder Ed Catmull (also an investor), just dropped Explorer, a 3D world generator that turns any image into an editable 3D world.

https://odyssey.systems/introducing-explorer

-

Genesis AI – a physics platform designed for general purpose Robotics/Embodied AI/Physical AI applications

https://github.com/Genesis-Embodied-AI/Genesis

https://genesis-world.readthedocs.io/en/latest

Genesis is a physics platform designed for general purpose Robotics/Embodied AI/Physical AI applications. It is simultaneously multiple things:

- A universal physics engine re-built from the ground up, capable of simulating a wide range of materials and physical phenomena.

- A lightweight, ultra-fast, pythonic, and user-friendly robotics simulation platform.

- A powerful and fast photo-realistic rendering system.

- A generative data engine that transforms user-prompted natural language description into various modalities of data.

FEATURED POSTS

-

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

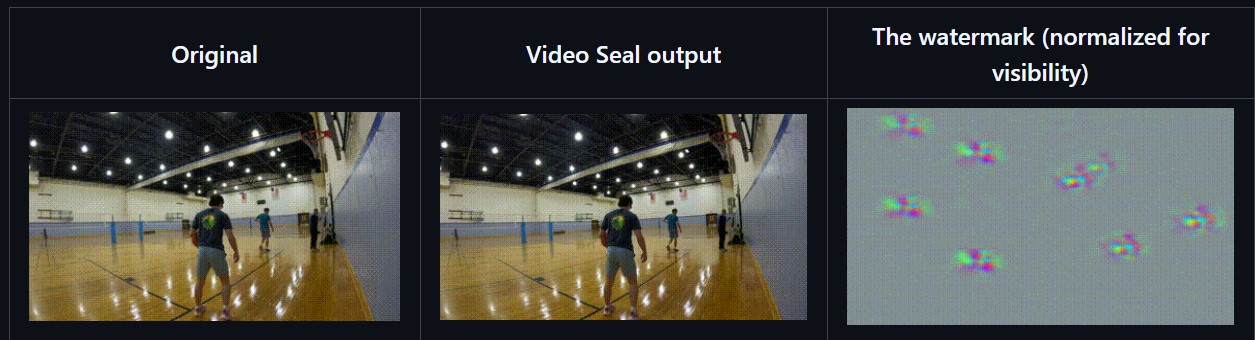

“Simon Willison created a Datasette browser to explore WebVid-10M, one of the two datasets used to train the video generation model, and quickly learned that all 10.7 million video clips were scraped from Shutterstock, watermarks and all.”

“In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.”

“It’s become standard practice for technology companies working with AI to commercially use datasets and models collected and trained by non-commercial research entities like universities or non-profits.”

“Like with the artists, photographers, and other creators found in the 2.3 billion images that trained Stable Diffusion, I can’t help but wonder how the creators of those 3 million YouTube videos feel about Meta using their work to train their new model.”

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?

-

HDRI Resources

Text2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty-free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360