
LATEST POSTS

-

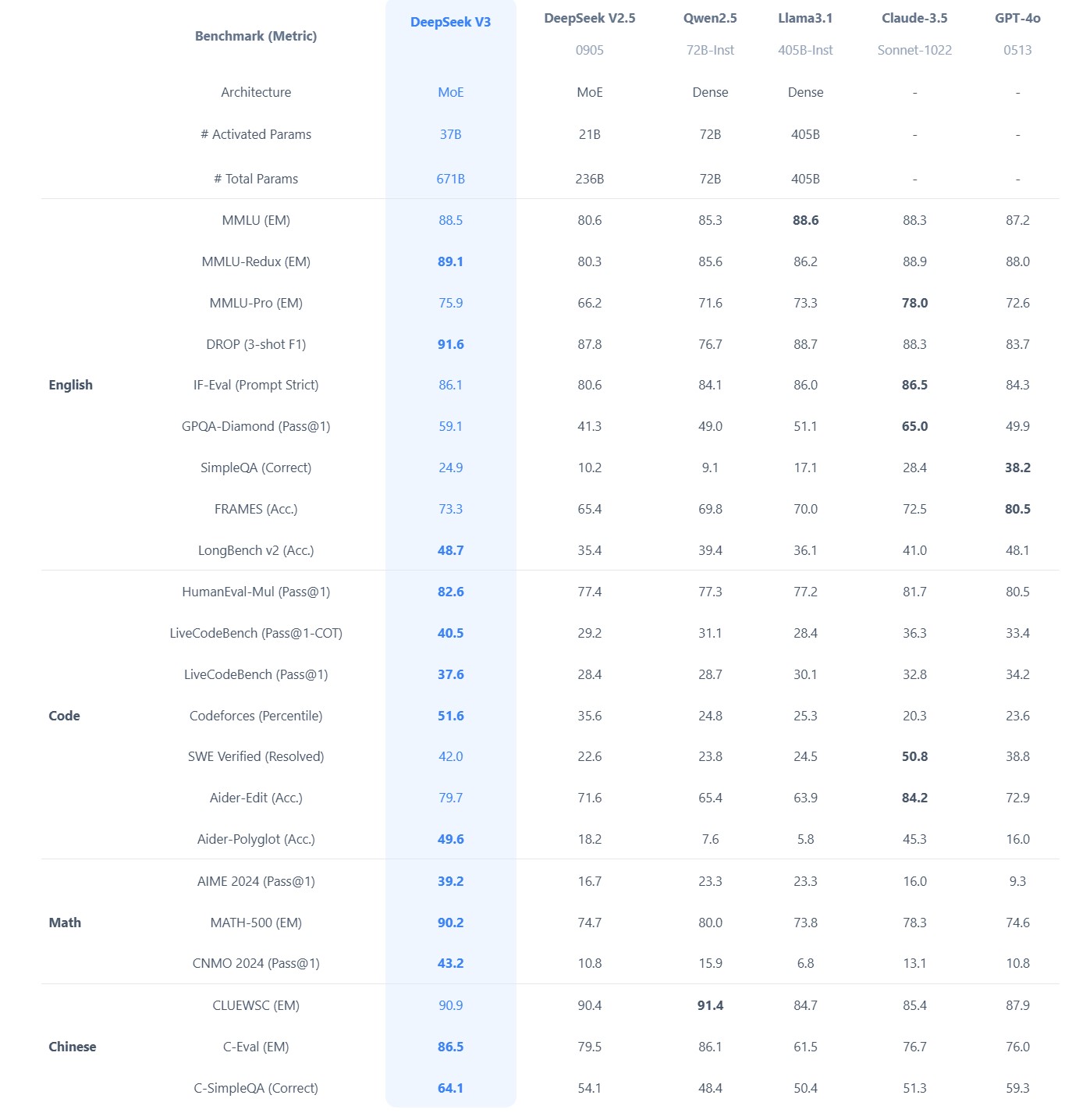

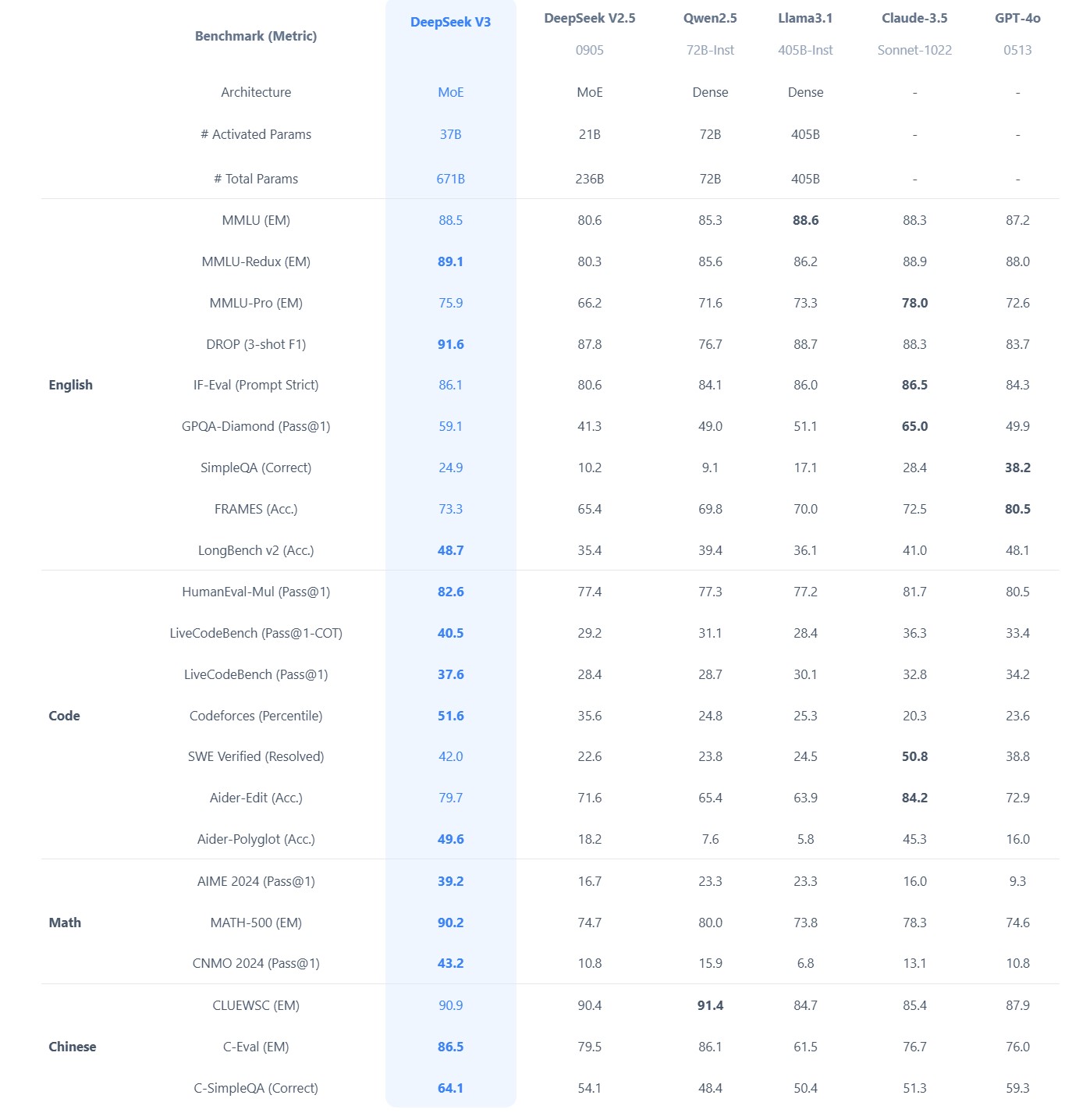

Brian Gallagher – Why Almost Everybody Is Wrong About DeepSeek vs. All the Other AI Companies

Benchmarks don’t capture real-world complexity like latency, domain-specific tasks, or edge cases. Enterprises often need more than raw performance: they also need reliability, ease of integration, and robust vendor support. Enterprise money will support the industries that provide these services.

… it is also reasonable to assume that anything you put into the app or their website will be going to the Chinese government, so factor that in as well.

-

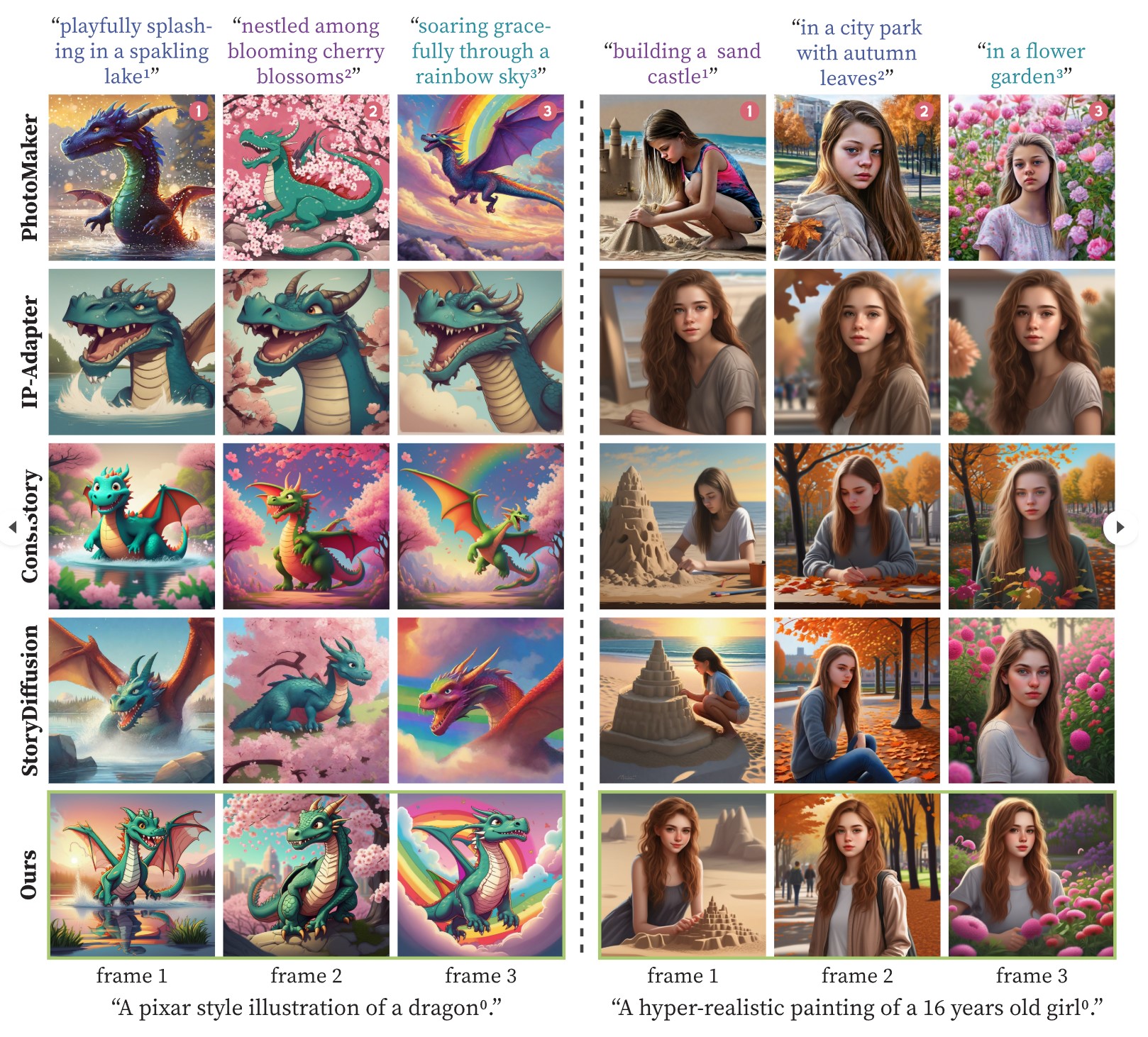

One-Prompt-One-Story – Free-Lunch Consistent Text-to-Image Generation Using a Single Prompt

https://byliutao.github.io/1Prompt1Story.github.io

Text-to-image generation models can create high-quality images from input prompts. However, they struggle to support the consistent generation of identity-preserving requirements for storytelling.

Our approach 1Prompt1Story concatenates all prompts into a single input for T2I diffusion models, initially preserving character identities.
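As a rough illustration of the concatenation step described above, the sketch below joins an identity description and several per-frame descriptions into one prompt string. The function name `build_single_prompt`, the comma separator, and the example strings are assumptions made for illustration; the project's actual code may be organized differently.

```python
def build_single_prompt(identity: str, frames: list[str], sep: str = ", ") -> str:
    """Concatenate an identity prompt and all frame prompts into one string."""
    # Drop empty frame descriptions and trim stray whitespace before joining.
    parts = [identity.strip()] + [f.strip() for f in frames if f.strip()]
    return sep.join(parts)


# Hypothetical example inputs (not taken from the paper).
identity = "a watercolor illustration of a red fox wearing a scarf"
frames = ["walking in a forest", "reading a book", "sleeping by a fire"]

single_prompt = build_single_prompt(identity, frames)
print(single_prompt)
```

The single string would then be passed to a T2I diffusion model in place of separate per-frame prompts; that step is outside this sketch.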

-

What did DeepSeek figure out about reasoning with DeepSeek-R1?

https://www.seangoedecke.com/deepseek-r1

The Chinese AI lab DeepSeek recently released their new reasoning model R1, which is supposedly (a) better than the current best reasoning models (OpenAI’s o1 series), and (b) was trained on a GPU cluster a fraction of the size of those used by any of the big western AI labs.

DeepSeek uses a reinforcement learning approach, not a fine-tuning approach. There’s no need to generate a huge body of chain-of-thought data ahead of time, and there’s no need to run an expensive answer-checking model. Instead, the model generates its own chains-of-thought as it goes.
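One way to picture training without a separate answer-checking model is a simple rule-based reward: the model's own chain-of-thought is left unscored, and only the final answer is compared with a known result. The sketch below is a minimal illustration of that idea. The `<think>`/`<answer>` tag layout and the name `rule_based_reward` are assumptions for this example, not code from DeepSeek.

```python
import re


def rule_based_reward(completion: str, expected_answer: str) -> float:
    """Return 1.0 if the text inside <answer>...</answer> matches, else 0.0."""
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match is None:
        return 0.0  # no parsable answer, so no reward
    return 1.0 if match.group(1).strip() == expected_answer.strip() else 0.0


# Hypothetical completions for the question "What is 6 * 7?"
samples = [
    "<think>6 * 7 = 42</think><answer>42</answer>",
    "<think>6 * 7 = 48</think><answer>48</answer>",
    "I am not sure.",
]
rewards = [rule_based_reward(s, "42") for s in samples]
print(rewards)
```

In a reinforcement learning loop, rewards like these would be used to make the higher-scoring completions more likely; the update rule itself is not shown here.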

The secret behind their success? A bold move to train their models using FP8 (8-bit floating-point precision) instead of the standard FP32 (32-bit floating-point precision).

…

By using a clever system that applies high precision only when absolutely necessary, they achieved incredible efficiency without losing accuracy.
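To give a feel for why high precision might be reserved for a few sensitive steps, the toy sketch below rounds numbers to a 3-bit mantissa (loosely inspired by 8-bit floating-point formats) and compares two dot products: one that rounds the running sum after every addition, and one that keeps the running sum in full precision. This is an assumption-laden illustration, not DeepSeek's implementation: `quantize` ignores exponent range, saturation, and scaling factors, and all function names are hypothetical.

```python
import math


def quantize(x: float, mantissa_bits: int = 3) -> float:
    """Round x to `mantissa_bits` explicit mantissa bits (toy model: exponent range is unlimited)."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)  # +1 accounts for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)


def dot_low_precision(a, b):
    """Quantize the inputs AND round the running sum after every addition."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = quantize(acc + quantize(x) * quantize(y))
    return acc


def dot_mixed_precision(a, b):
    """Quantize the inputs, but keep the running sum in full float precision."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += quantize(x) * quantize(y)
    return acc


a = [0.1] * 1000
b = [1.0] * 1000  # the exact dot product is 100.0
low = dot_low_precision(a, b)
mixed = dot_mixed_precision(a, b)
print("low-precision sum:", low, "mixed-precision sum:", mixed)
```

In this toy run the fully low-precision sum stalls far below the true value once the accumulator becomes large relative to each addend, while the mixed version stays within a couple of percent of 100.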

…

The impressive part? These multi-token predictions are about 85–90% accurate, meaning DeepSeek R1 can deliver high-quality answers at double the speed of its competitors.

Chinese AI firm DeepSeek has 50,000 NVIDIA H100 AI GPUs
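The link between the 85–90% accuracy of multi-token predictions quoted above and a roughly doubled speed can be checked with simple arithmetic. The sketch below assumes that each decoding step always yields one token, plus one extra predicted token that is kept with the stated probability. Both that setup and the function name `expected_tokens_per_step` are assumptions for illustration, and real throughput also depends on other overheads.

```python
def expected_tokens_per_step(acceptance_rate: float, extra_tokens: int = 1) -> float:
    """Expected tokens per decoding step when an extra token counts only if
    every earlier extra token in the same step was also accepted."""
    expected = 1.0   # the regular next token is always produced
    keep_prob = 1.0
    for _ in range(extra_tokens):
        keep_prob *= acceptance_rate
        expected += keep_prob
    return expected


for rate in (0.85, 0.90):
    print(rate, expected_tokens_per_step(rate))
```

Under these assumptions the expected yield is about 1.85 to 1.9 tokens per step, which is where a "nearly double" figure would come from.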

-

CaPa – Carve-n-Paint Synthesis for Efficient 4K Textured Mesh Generation

https://github.com/ncsoft/CaPa

A novel method for generating hyper-quality 4K textured meshes in under 30 seconds, providing 3D assets ready for commercial applications such as games, movies, and VR/AR.

FEATURED POSTS

-

memerwala_londa – Ghibli like Midjourney and Kling video

https://www.reddit.com/r/midjourney/comments/1lbblfq/ghibli_style_game_guide_included/

Made everything on Edits App

Image Generation on Midjourney

Video Generation on Kling 2.1

I used a joystick PNG to add buttons, then some ASMR video sounds to make it look more lively. I used text as buttons.

Prompts:

All Prompts are in order just like in video

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt and knee-length blue shorts. Visible hands pushing open a sun-faded wooden door, forearms resting on the frame. In a dusty hallway mirror reflection: character’s soft Ghibli-style face with windblown hair. Inside a cozy coastal cottage: slanted sunlight through lace curtains, pastel walls with watercolor seascapes, overstuffed bookshelf spilling seashells. Foreground: ‘E: Rest’ prompt over a quilted sofa. Background: steaming teacup on a driftwood table, open window revealing distant lighthouse and Miyazaki fluffy clouds. Soft painterly textures, slight fisheye lens, identical HUD (minimap corner, health bar)

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt and knee-length blue shorts. View includes visible hands gripping a steering wheel, sunlit arms resting on car door, and rearview mirror showing character’s soft Ghibli-style face with windblown hair. Driving through a vibrant coastal town: cobblestone streets, pastel houses with flower boxes, distant lighthouse. Soft painterly textures, Miyazaki skies with fluffy clouds, slight fisheye lens effect, HUD elements (minimap corner, health bar).

First-person POV video game screenshot, playing as a young protagonist in a loose white t-shirt and faded denim shorts. Visible arms holding a woven basket, sneakers stepping on rain-damp cobblestones. Walking through a chaotic Ghibli street market: cramped stalls selling glowing mushrooms, floating lanterns, and spiral-cut fruits. Fishmonger shouts while soot sprites dart between crates. Foreground: vendor handing you a peach (interactive ‘E’ prompt). Background: yakuza thugs lurking near a steaming noodle cart. Soft painterly lighting, depth of field, subtle HUD (minimap corner, health bar). Studio Ghibli meets Grand Theft Auto

First-person POV video game screenshot, playing as a young anime protagonist in a slightly oversized white t-shirt (salt-stained sleeves) and knee-length blue shorts, visible hands gripping a bamboo fishing rod. Kneeling on a mossy dock pier at sunset, arms resting on knees. Foreground: ‘E: Reel In’ prompt as line pulls taut. Background: pastel fishing boats, distant lighthouse under Miyazaki’s fluffy clouds. Glowing koi fish breaching turquoise water, soot sprites stealing bait from a tin. Identical soft painterly textures, fisheye lens effect, HUD (minimap corner, health bar).

Video Prompts:

All Prompts are in order just like in video

The black-haired boy strides from the rustic house toward the ocean, the camera tracking his movement in a GTA-style third-person perspective as coastal winds flutter white curtains and sunlight glimmers on distant sailboats, blending warm interior details with expanding seaside horizons under a tranquil sky.

The brown-haired boy drives a vintage blue convertible along the coastal cobblestone street, colorful flower-adorned buildings passing by as the camera follows the car’s journey toward the sunlit ocean horizon, sea breeze gently tousling his hair under a serene sky.

The young boy navigates the bustling cobblestone market, basket of oranges in arm, as vibrant stalls and fluttering awnings frame his journey, the camera tracking his focused stride through chattering crowds under swaying traditional lanterns.

A school of fish swims gracefully through crystal-clear water, sunlight filtering through the surface, coral reefs swaying gently, creating a serene underwater scene with the camera stationary.

-

No one could see the colour blue until modern times

https://www.businessinsider.com/what-is-blue-and-how-do-we-see-color-2015-2

The way humans see the world… until we have a way to describe something, even something so fundamental as a colour, we may not even notice that it’s there.

Ancient languages didn’t have a word for blue: not Greek, not Chinese, not Japanese, not Hebrew, not Icelandic. And without a word for the colour, there’s evidence that they may not have seen it at all.

https://www.wnycstudios.org/story/211119-colors

Every language first had a word for black and for white, or dark and light. The next word for a colour to come into existence, in every language studied around the world, was red, the colour of blood and wine.

After red, historically, yellow appears, and later, green (though in a couple of languages, yellow and green switch places). The last of these colours to appear in every language is blue.

The only ancient culture to develop a word for blue was the Egyptians, and as it happens, they were also the only culture that had a way to produce a blue dye.

https://mymodernmet.com/shades-of-blue-color-history/

True blue hues are rare in the natural world because synthesizing pigments that absorb longer-wavelength light (reds and yellows) while reflecting shorter-wavelength blue light requires exceptionally elaborate molecular structures, biochemical feats that most plants and animals simply don’t undertake.

When you gaze at a blueberry’s deep blue surface, you’re actually seeing structural coloration rather than a true blue pigment. A fine, waxy bloom on the berry’s skin contains nanostructures that preferentially scatter blue and violet light, giving the fruit its signature blue sheen even though its inherent pigment is reddish.

Similarly, many of nature’s most striking blues—like those of blue jays and morpho butterflies—arise not from blue pigments but from microscopic architectures in feathers or wing scales. These tiny ridges and air pockets manipulate incoming light so that blue wavelengths emerge most prominently, creating vivid, angle-dependent colors through scattering rather than pigment alone.
