BREAKING NEWS

LATEST POSTS

-

OpenAI Backs Critterz, an AI-Made Animated Feature Film

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

Film, called ‘Critterz,’ aims to debut at Cannes Film Festival and will leverage startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

-

AI and the Law: Anthropic to Pay $1.5 Billion to Settle Book Piracy Class Action Lawsuit

https://variety.com/2025/digital/news/anthropic-class-action-settlement-billion-1236509571

The settlement amounts to about $3,000 per book and is believed to be the largest ever recovery in a U.S. copyright case, according to the plaintiffs’ attorneys.

-

Sir Peter Jackson’s Wētā FX records $140m loss in two years, amid staff layoffs

https://www.thepost.co.nz/business/360813799/weta-fx-posts-59m-loss-amid-industry-headwinds

Wētā FX, Sir Peter Jackson’s largest business, has posted a $59.3 million loss for the year to March 31, an improvement on an $83m loss last year.

-

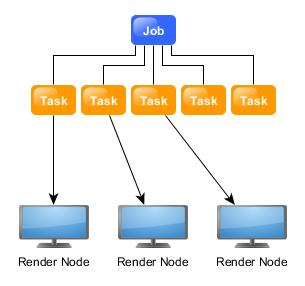

ComfyUI Thinkbox Deadline plugin

Submit ComfyUI workflows to Thinkbox Deadline render farm.

Features

- Submit ComfyUI workflows directly to Deadline

- Batch rendering with seed variation

- Real-time progress monitoring via Deadline Monitor

- Configurable pools, groups, and priorities
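The plugin's own implementation is not shown here, so the following is only a minimal sketch of what "seed variation" could look like. It assumes a ComfyUI workflow exported in API format (a dict of node ids, each with an `inputs` dict), and the helper name `seed_variations` is hypothetical.

```python
import copy


def seed_variations(workflow: dict, count: int, base_seed: int = 0) -> list[dict]:
    """Return `count` copies of an API-format workflow, each with a different seed.

    Hypothetical helper: every node input literally named "seed" that holds an
    integer is overwritten; linked inputs (lists) are left untouched.
    """
    variants = []
    for offset in range(count):
        variant = copy.deepcopy(workflow)  # never mutate the caller's workflow
        for node in variant.values():
            inputs = node.get("inputs", {})
            if isinstance(inputs.get("seed"), int):
                inputs["seed"] = base_seed + offset
        variants.append(variant)
    return variants


# Tiny made-up workflow for illustration only.
example_workflow = {
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20}},
    "9": {"class_type": "SaveImage", "inputs": {"filename_prefix": "out"}},
}

batch = seed_variations(example_workflow, count=3, base_seed=100)
print([job["3"]["inputs"]["seed"] for job in batch])
```

Each resulting dict could then be submitted as its own render task. How the plugin actually maps variations to Deadline tasks is not covered by this sketch.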

https://github.com/doubletwisted/ComfyUI-Deadline-Plugin

https://docs.thinkboxsoftware.com/products/deadline/latest/1_User%20Manual/manual/overview.html

Deadline 10 is a cross-platform render farm management tool for Windows, Linux, and macOS. It gives users control of their rendering resources and can be used on-premises, in the cloud, or both. It handles asset syncing to the cloud, manages data transfers, and supports tagging for cost tracking purposes.

Deadline 10’s Remote Connection Server allows for communication over HTTPS, improving performance and scalability. Where supported, users can use usage-based licensing to supplement their existing fixed pool of software licenses when rendering through Deadline 10.

-

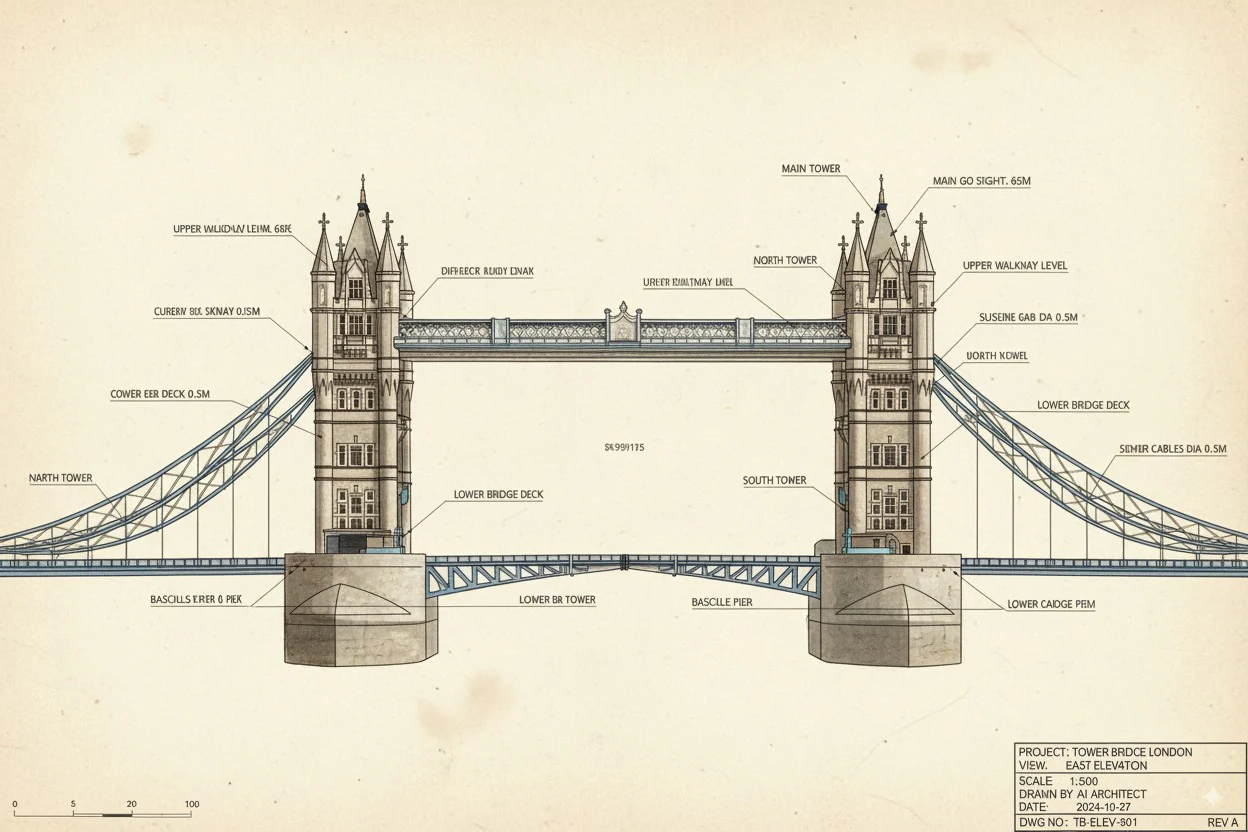

Google’s Nano Banana AI: Free Tool for 3D Architecture Models

https://landscapearchitecture.store/blogs/news/nano-banana-ai-free-tool-for-3d-architecture-models

How to Use Nano Banana AI for Architecture

- Go to Google AI Studio.

- Log in with your Gmail and select Gemini 2.5 (Nano Banana).

- Upload a photo — either from your laptop or a Google Street View screenshot.

- Paste this example prompt:

“Use the provided architectural photo as reference. Generate a high-fidelity 3D building model in the look of a 3D-printed architecture model.”

- Wait a few seconds, and your 3D architecture model will be ready.

Pro tip: If you want more accuracy, upload two images — a street photo for the facade and an aerial view for the roof/top.

-

Blender 4.5 switches from OpenGL to Vulkan support

Blender is moving from OpenGL to Vulkan as its graphics backend, with Blender 4.5 as the first significant step, to achieve better performance and prepare for future features like real-time ray tracing and global illumination. To enable Vulkan, go to Edit > Preferences > System, set the “Backend” option to “Vulkan,” then restart Blender. The change is intended to bring faster startup times, improved viewport responsiveness, and more efficient handling of complex scenes through better use of your CPU and GPU resources.

Why the Switch to Vulkan?

- Modern Graphics API: Vulkan is a newer, lower-level, and more efficient API that provides developers with greater control over hardware, unlike the older, higher-level OpenGL.

- Performance Boost: This change significantly improves performance in various areas, such as viewport rendering, material loading, and overall UI responsiveness, especially in complex scenes with many textures.

- Better Resource Utilization: Vulkan distributes work more effectively across the CPU and reduces driver overhead, allowing Blender to make better use of your computer’s power.

- Future-Proofing: The Vulkan backend paves the way for advanced features like real-time ray tracing and global illumination in future versions of Blender.

-

Diffuman4D – 4D Consistent Human View Synthesis from Sparse-View Videos with Spatio-Temporal Diffusion Models

Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4DGS model of the human performance using both the input and the generated videos.

FEATURED POSTS

-

Lovis Odin ComfyUI-8iPlayer – Seamlessly integrate 8i volumetric videos into your AI workflows

Load holograms, animate cameras, capture frames, and feed them to your favorite AI models. Developed by Lovis Odin for Kartel.ai

You can obtain the MPD URL directly from the official 8i Web Player.

https://github.com/Kartel-ai/ComfyUI-8iPlayer/

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

Ethan Roffler

I recently had the honor of interviewing this VFX genius and gained great insight into what it takes to work in the entertainment industry. Keep in mind, these questions are coming from an artist’s perspective but can be applied to any creative individual looking for some wisdom from a professional. So grab a drink, sit back, and enjoy this fun and insightful conversation.

Ethan

To start, I just wanted to say thank you so much for taking the time for this interview!

Daniele

My pleasure.

When I started my career I struggled to find help. Even people in the industry at the time were not that helpful. Because of that, I decided very early on that I was going to do exactly the opposite. I spend most of my weekends talking or helping students. ;)

Ethan

That’s awesome! I have also come across the same struggle! Just a heads up, this will probably be the most informal interview you’ll ever have haha! Okay, so let’s start with a small introduction!

-

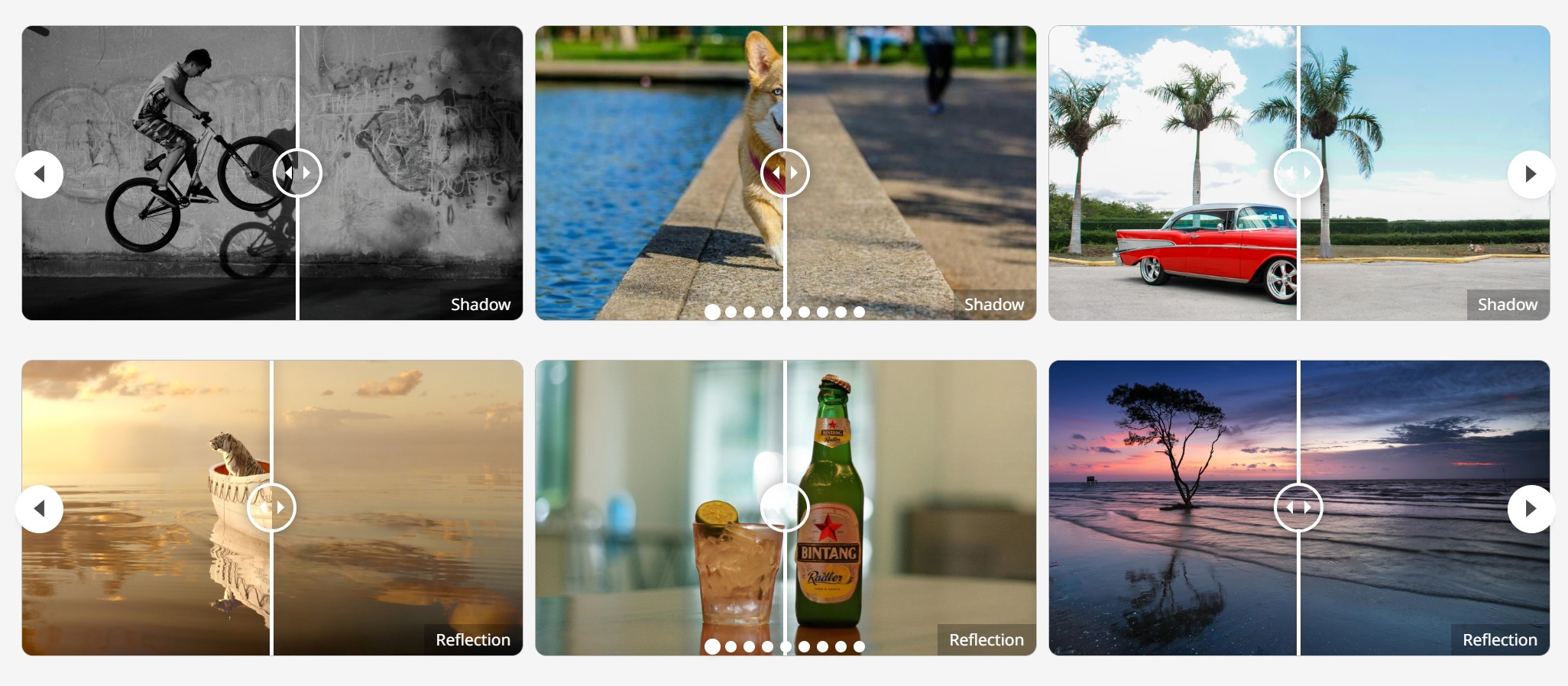

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting HDRIs, make sure the data carries basic metadata, such as:

- ISO

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space

- Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)
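As a rough illustration of the bracketing item above, the sketch below lists shutter times for a bracket set. The one-stop spacing, the shortest-to-longest ordering, and the function name `bracket_shutter_times` are assumptions made for the example, not requirements stated by the source.

```python
def bracket_shutter_times(
    balanced_seconds: float,
    count: int = 7,
    balanced_position: int = 5,
    step_stops: float = 1.0,
) -> list[float]:
    """Shutter times for an exposure bracket, shortest to longest.

    `balanced_position` is the 1-based index of the exposure judged to be
    balanced; each neighbouring bracket differs by `step_stops` stops,
    i.e. by a factor of 2 ** step_stops in exposure time.
    """
    if not 1 <= balanced_position <= count:
        raise ValueError("balanced_position must lie within the bracket set")
    return [
        balanced_seconds * 2 ** ((index - balanced_position) * step_stops)
        for index in range(1, count + 1)
    ]


# Seven brackets, one stop apart, with the 5th exposure balanced at 1 second.
print(bracket_shutter_times(1.0))
# [0.0625, 0.125, 0.25, 0.5, 1.0, 2.0, 4.0]
```

Wider spacing (for example `step_stops=2.0`) covers more dynamic range with the same number of frames, at the cost of fewer overlapping samples between exposures.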

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminance between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. Luminance is a photometric measure of the luminous intensity per unit area of light travelling in a given direction; it describes the amount of light that passes through or is emitted from a particular area, and falls within a given solid angle. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes, ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and merging of multiple photographs, which in turn are known as low dynamic range (LDR) or standard dynamic range (SDR) images. Tone Mapping (Look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect.

Photography

In photography, dynamic range is measured in exposure value (EV) differences, or stops, between the brightest and darkest parts of the image that show detail. Exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure; the concept was developed in Germany in the 1950s. An increase of one EV, or one stop, is a doubling of the amount of light.
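Because each stop is a doubling of light, the number of stops between two values is the base-2 logarithm of their ratio. The short sketch below shows that arithmetic; the function names are chosen for this example only, and the inputs are assumed to be linear (not gamma-encoded) light values.

```python
import math


def stops_between(brightest: float, darkest: float) -> float:
    """Dynamic range, in stops, between two linear light values."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("light values must be positive")
    return math.log2(brightest / darkest)


def contrast_ratio(stops: float) -> float:
    """Contrast ratio covered by a given number of stops (inverse of the above)."""
    return 2.0 ** stops


print(stops_between(1024.0, 1.0))  # 10.0
print(contrast_ratio(30))          # 1073741824.0
```

A 10-stop range therefore corresponds to roughly a 1,000:1 contrast ratio, while 30 stops corresponds to about a billion to one.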

The human response to brightness is well approximated by Stevens’ power law, which over a reasonable range is close to logarithmic, as described by the Weber–Fechner law. This is one reason that logarithmic measures of light intensity are often used as well.

HDR is short for High Dynamic Range. It’s a term used to describe an image which contains a greater exposure range than the “black” to “white” that 8-bit or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range (LDR) images can hold perhaps 8 to 10 f-stops of image information, HDR images can describe beyond 30 stops and are stored in 32-bit images.