LATEST POSTS

-

Google Stitch – Transform ideas into UI designs for mobile and web applications

https://stitch.withgoogle.com/

Stitch is available free of charge, with certain usage limits. Each user receives a monthly allowance of 350 generations in Flash mode and 50 generations in Experimental mode. These limits are subject to change.

-

Runway Partners with AMC Networks Across Marketing and TV Development

https://runwayml.com/news/runway-amc-partnership

Runway and AMC Networks, the international entertainment company known for popular and award-winning titles including MAD MEN, BREAKING BAD, BETTER CALL SAUL, THE WALKING DEAD and ANNE RICE’S INTERVIEW WITH THE VAMPIRE, are partnering to incorporate Runway’s AI models and tools in AMC Networks’ marketing and TV development processes.

-

LumaLabs.ai – Introducing Modify Video

https://lumalabs.ai/blog/news/introducing-modify-video

Reimagine any video. Shoot it in post with director-grade control over style, character, and setting. Restyle expressive actions and performances, swap entire worlds, or redesign the frame to your vision.

Shoot once. Shape infinitely.

-

Transformer Explainer – Interactive Learning of Text-Generative Models

https://github.com/poloclub/transformer-explainer

Transformer Explainer is an interactive visualization tool designed to help anyone learn how Transformer-based models like GPT work. It runs a live GPT-2 model right in your browser, allowing you to experiment with your own text and observe in real time how internal components and operations of the Transformer work together to predict the next tokens. Try Transformer Explainer at http://poloclub.github.io/transformer-explainer

-

Henry Daubrez – How to generate VR/360 videos directly with Google VEO

https://www.linkedin.com/posts/upskydown_vr-googleveo-veo3-activity-7334269406396461059-d8Da

If you prompt for a 360° video in VEO (literally write “360°”), it can generate a monoscopic 360 video. The next step is to inject the right metadata into your file so you can play it as an actual 360 video.

Once it’s saved with the right metadata, it will be recognized as an actual 360/VR video, meaning you can just play it in VLC and drag your mouse to look around.
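The post does not say which metadata or tool is meant. As a rough sketch, assuming the Spherical Video V1 format (the RDF/XML payload used by Google's Spatial Media Metadata Injector), the snippet below builds the XML that marks a video as monoscopic and equirectangular. The function name and the default `stitching_software` value are hypothetical, and the snippet only produces the payload: actually embedding it in the MP4 container is left to an injector tool.

```python
import xml.etree.ElementTree as ET

# Namespaces used by the Spherical Video V1 metadata format (assumed here;
# the original post does not name a specific format).
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
GSPHERICAL_NS = "http://ns.google.com/videos/1.0/spherical/"


def build_spherical_xml(stitching_software: str = "unknown",
                        projection: str = "equirectangular") -> str:
    """Return a Spherical Video V1 XML payload for a monoscopic 360 video.

    Hypothetical helper for illustration: it builds the metadata text only
    and does not write anything into a video file.
    """
    ET.register_namespace("rdf", RDF_NS)
    ET.register_namespace("GSpherical", GSPHERICAL_NS)

    root = ET.Element(f"{{{RDF_NS}}}SphericalVideo")
    fields = {
        "Spherical": "true",             # flag the video as spherical
        "Stitched": "true",              # a single, already-stitched frame
        "StitchingSoftware": stitching_software,
        "ProjectionType": projection,    # equirectangular for typical 360 video
    }
    for name, value in fields.items():
        child = ET.SubElement(root, f"{{{GSPHERICAL_NS}}}{name}")
        child.text = value

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_spherical_xml(stitching_software="example-tool"))
```

No stereo-mode field is set, which matches the monoscopic output described above.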

FEATURED POSTS

-

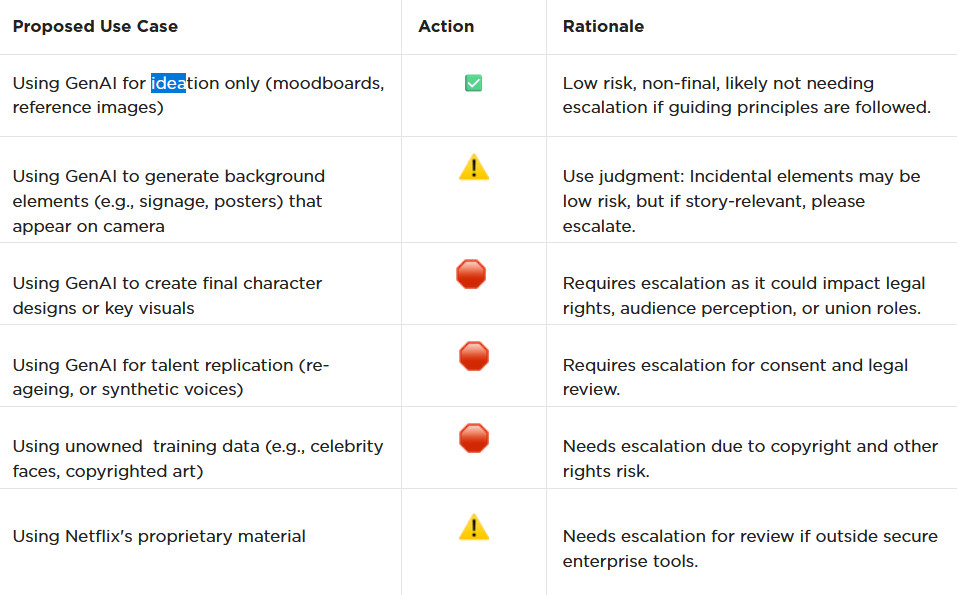

AI and the Law – Netflix: Using Generative AI in Content Production

https://www.cartoonbrew.com/business/netflix-generative-ai-use-guidelines-253300.html

- Temporary Use: AI-generated material can be used for ideation, visualization, and exploration—but is currently considered temporary and not part of final deliverables.

- Ownership & Rights: All outputs must be carefully reviewed to ensure rights, copyright, and usage are properly cleared before integrating into production.

- Transparency: Productions are expected to document and disclose how generative AI is used.

- Human Oversight: AI tools are meant to support creative teams, not replace them—final decision-making rests with human creators.

- Security & Compliance: Any use of AI tools must align with Netflix’s security protocols and protect confidential production material.

-

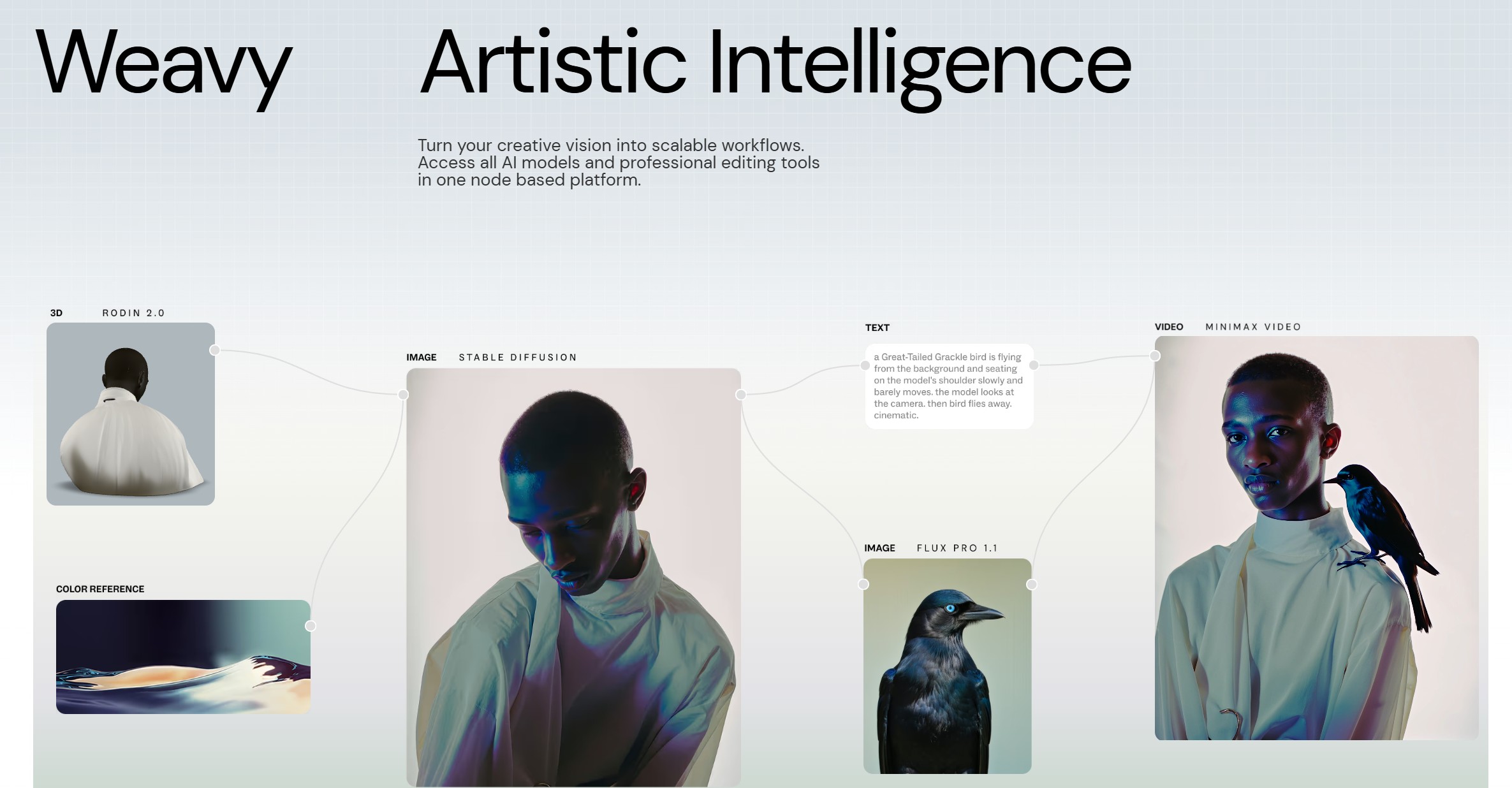

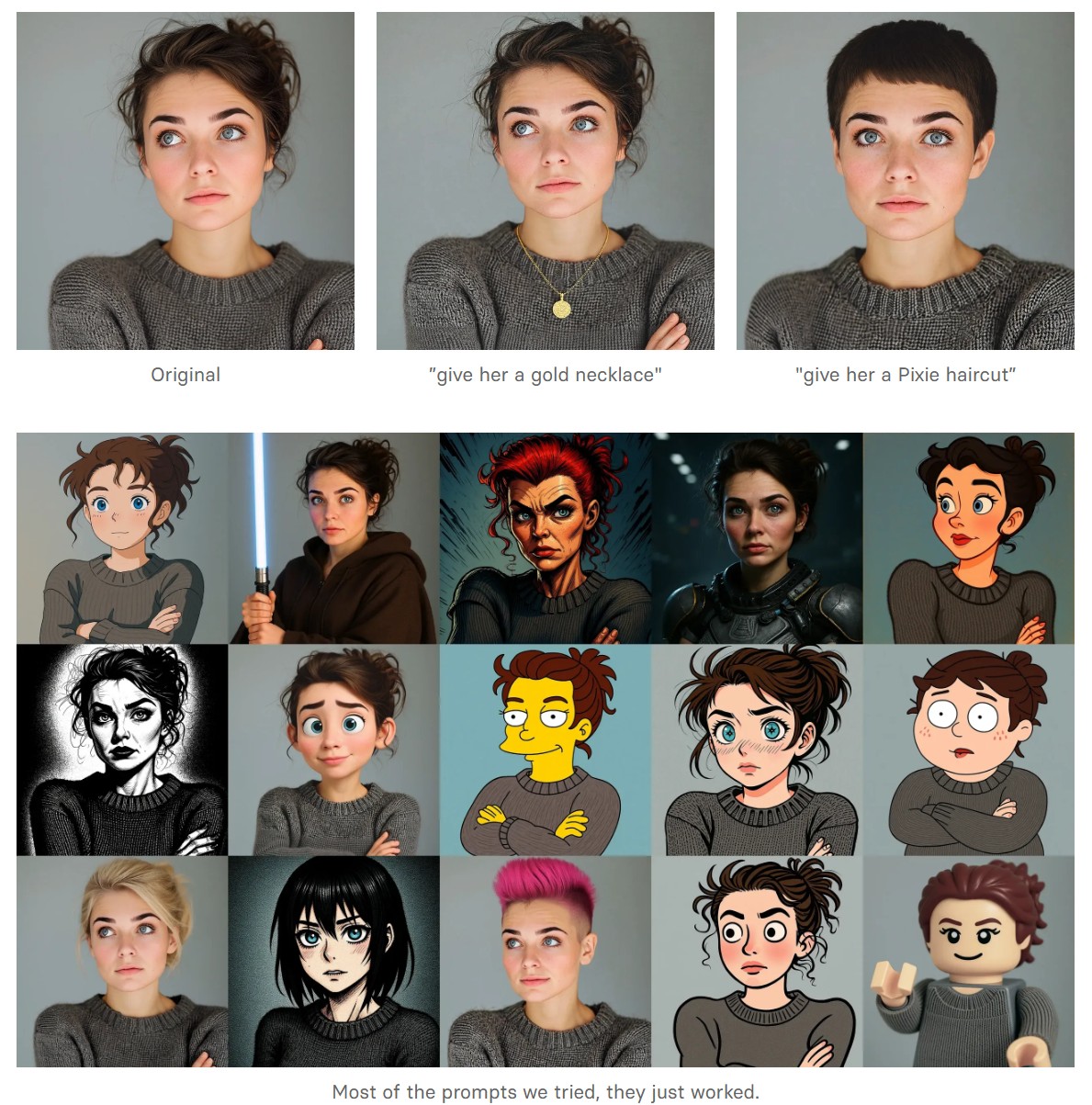

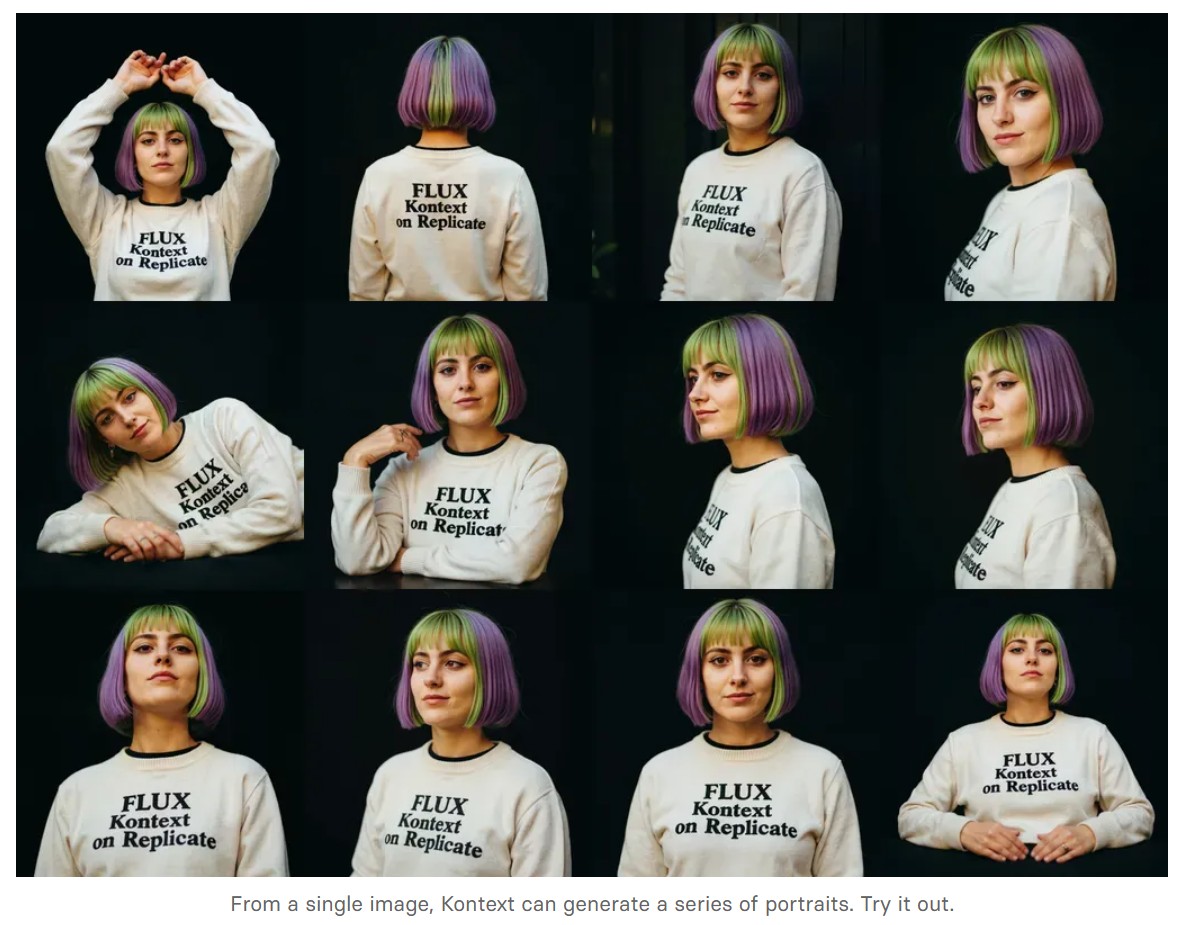

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

There are three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- Coming soon: FLUX.1 Kontext [dev]: An open-weight, guidance-distilled version of Kontext.

We’re so excited about what Kontext can do that we’ve created a collection of models on Replicate to give you ideas:

- Multi-image kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image

- Change haircut: Change a person’s hair style and color

- Iconic locations: Put yourself in front of famous landmarks

- Professional headshot: Generate a professional headshot from any image

-

OLED vs QLED – What TV is better?

OLED is supported by LG, Philips, Panasonic and Sony, all of which sell OLED TVs.

OLED stands for “organic light emitting diode.”

It is a fundamentally different technology from LCD, the major type of TV today.

OLED is “emissive,” meaning the pixels emit their own light.

Samsung is branding its best TVs with a new acronym: “QLED.”

QLED (according to Samsung) stands for “quantum dot LED TV.”

It is a variation of the common LED LCD, adding a quantum dot film to the LCD “sandwich.”

QLED, like LCD, is, in its current form, “transmissive” and relies on an LED backlight.

OLED is the only technology capable of absolute blacks and extremely bright whites on a per-pixel basis. LCD definitely can’t do that, and even the vaunted, beloved, dearly departed plasma couldn’t do absolute blacks.

QLED is promoted as improving on OLED in some respects. It is said to produce an even wider range of colors than OLED and up to 40% higher luminance efficiency, and many tests reportedly conclude that QLED is far more efficient in terms of power consumption. QLED is not a successor to OLED, though: as described above, it is a variation of LED LCD, while OLED is a fundamentally different technology.

-

Outpost VFX lighting tips

www.outpost-vfx.com/en/news/18-pro-tips-and-tricks-for-lighting

- Get as much information regarding your plate lighting as possible

- Always use a reference

- Replicate what is happening in real life

- Invest in a solid HDRI

- Start simple

- Observe real world lighting, photography and cinematography

- Don’t neglect the theory

- Learn the difference between realism and photo-realism

- Keep your scenes organised