
LATEST POSTS


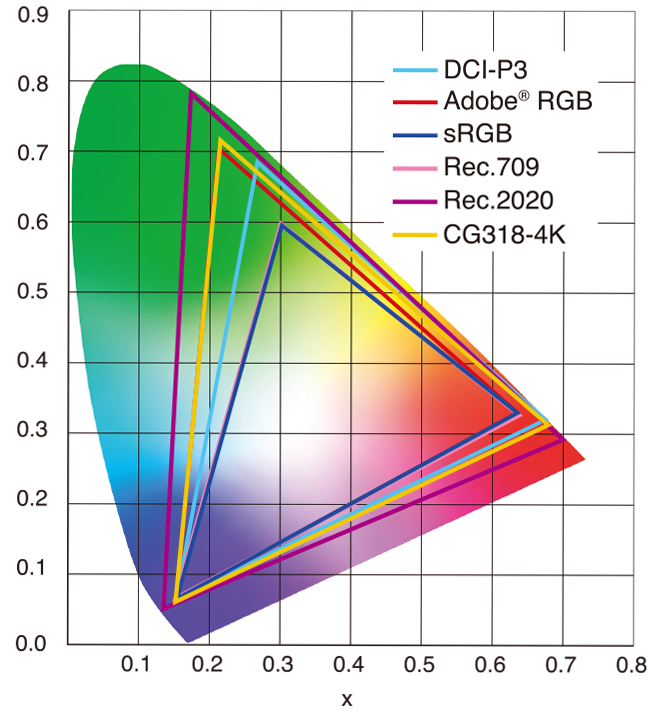

sRGB vs Rec. 709 – An introduction and FFmpeg implementations

1. Basic Comparison

- What they are

- sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.

- Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist

- sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).

- Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see

- On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.

- On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K) | D65 (6504 K), same as sRGB |
| Primaries (x, y) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) |
| Gamut size | Triangle on the CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: linear toe near black, then a 2.4-exponent power segment; overall it approximates γ ≈ 2.2 | Camera OETF is piecewise (linear below 0.018, then a 0.45-exponent power segment); the reference display EOTF (BT.1886) is a pure power law with γ = 2.4, often approximated as 2.2 in practice |
| Matrix coefficients | N/A (pure RGB usage) | Y′ = 0.2126 R′ + 0.7152 G′ + 0.0722 B′ (Rec. 709 matrix) |
| Typical bit depth | 8 bits/channel (16-bit variants exist) | 8 bits/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ 16–235, Cb/Cr 16–240 at 8 bits) |
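To make the transfer, matrix and color-range rows concrete, here is a minimal Python sketch of those formulas. It is an illustration under stated assumptions, not a reference implementation: the function names are hypothetical, all inputs are assumed normalized to [0, 1], the BT.1886 curve uses the simplified zero-black-level form, and the Y′CbCr conversion targets 8-bit studio range. The constants are the published IEC 61966-2-1 and ITU-R BT.709 values.

```python
def srgb_decode(v: float) -> float:
    """sRGB EOTF (IEC 61966-2-1): encoded value in [0, 1] -> linear light."""
    if v <= 0.04045:
        return v / 12.92                      # linear toe near black
    return ((v + 0.055) / 1.055) ** 2.4       # offset 2.4-exponent power segment


def srgb_encode(lin: float) -> float:
    """Inverse of srgb_decode: linear light in [0, 1] -> encoded value."""
    if lin <= 0.0031308:
        return lin * 12.92
    return 1.055 * lin ** (1 / 2.4) - 0.055


def rec709_oetf(lin: float) -> float:
    """BT.709 camera OETF: scene linear light in [0, 1] -> encoded value."""
    if lin < 0.018:
        return 4.5 * lin                      # linear segment near black
    return 1.099 * lin ** 0.45 - 0.099        # 0.45-exponent power segment


def bt1886_eotf(v: float) -> float:
    """Simplified BT.1886 display EOTF (assumes zero black level): pure 2.4 power."""
    return v ** 2.4


def rgb_to_ycbcr_studio_8bit(r: float, g: float, b: float) -> tuple[int, int, int]:
    """Encoded R'G'B' in [0, 1] -> 8-bit studio-range Y'CbCr with BT.709 coefficients."""
    kr, kb = 0.2126, 0.0722
    kg = 1.0 - kr - kb                        # 0.7152
    y = kr * r + kg * g + kb * b              # luma Y' in [0, 1]
    cb = (b - y) / (2.0 * (1.0 - kb))         # blue-difference in [-0.5, 0.5]
    cr = (r - y) / (2.0 * (1.0 - kr))         # red-difference in [-0.5, 0.5]
    # Studio range: Y' spans 16-235, Cb/Cr span 16-240 centred on 128.
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)
```

Encoding 18% grey with srgb_encode and with rec709_oetf gives noticeably different code values (roughly 0.46 vs 0.41), even though the primaries and white point are identical. Likewise, rgb_to_ycbcr_studio_8bit(1.0, 1.0, 1.0) maps white to (235, 128, 128) rather than 255, which is where full-range vs studio-range mix-ups come from.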

Why the Small Differences Matter



Narcis Calin’s Galaxy Engine – A free, open source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides on the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground; then I wanted to simulate gravitational attraction, so I made two circles attract each other. It was really cool to see something I had written actually work, so I kept building on top of that small, basic program. And here we are, roughly 8 months into learning to program.

This is Galaxy Engine, a simulation program I have been developing ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, and more. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm combined with Morton curves. It also includes its own 2D PBR path tracer with BVH optimizations. The path tracer can simulate diffuse lighting, specular reflections, refraction, internal reflection, Fresnel effects, emission, dispersion, roughness, IOR, nested IOR and more! I tried to bring the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would get this far with programming, and it has been an amazing learning experience so far. I think that combining this knowledge with my 3D experience can unlock countless new possibilities. If you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
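As a small illustration of what ordering particles along a Morton (Z-order) curve means, here is a Python sketch. It is not taken from Galaxy Engine (which is written in C++); the function names are hypothetical, and it assumes 2D positions quantized to a 16-bit grid over the particles' square bounding box. Interleaving the bits of the x and y grid coordinates gives each particle a single integer key, and sorting by that key tends to place spatially nearby particles next to each other.

```python
def spread_bits(n: int) -> int:
    """Insert a zero bit between each of the low 16 bits of n."""
    n &= 0xFFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton_code(x: int, y: int) -> int:
    """Interleave bits: x occupies even bit positions, y odd ones (Z-order)."""
    return spread_bits(x) | (spread_bits(y) << 1)


def morton_sort(points: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Return the points ordered along a Z-order curve over their bounding box."""
    if not points:
        return []
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # Square box so both axes share one scale; fall back to 1.0 to avoid /0.
    extent = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    scale = 0xFFFF / extent

    def key(p: tuple[float, float]) -> int:
        qx = int((p[0] - min_x) * scale)  # quantize to a 16-bit grid cell
        qy = int((p[1] - min_y) * scale)
        return morton_code(qx, qy)

    return sorted(points, key=key)
```

For example, morton_sort([(1.0, 1.0), (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]) visits the corners in Z order: (0, 0), (1, 0), (0, 1), (1, 1). One common reason to pair this ordering with a Barnes-Hut quadtree is that each tree cell then corresponds to a contiguous run of the sorted array, which is cache-friendly.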


Introduction to BytesIO

When you’re working with binary data in Python, whether that’s image bytes, network payloads, or any other in-memory binary stream, you often need a file-like interface without touching the disk. That’s where BytesIO from the built-in io module comes in handy: it lets you treat a bytes buffer as if it were a file.

What Is BytesIO?

- Module: io

- Class: BytesIO

- Purpose:

  - Provides an in-memory binary stream.

  - Acts like a file opened in binary mode ('rb'/'wb'), but the data lives in RAM rather than on disk.

```python
from io import BytesIO
```
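Because BytesIO behaves like a binary file, it keeps a current position that writes advance and reads consume. The short sketch below (variable names are illustrative) shows why you usually need seek(0) before reading back what you just wrote.

```python
from io import BytesIO

buf = BytesIO()
buf.write(b"abc")            # writing advances the stream position
buf.write(b"def")
end_position = buf.tell()    # 6: the position now sits after the last byte
at_end = buf.read()          # b'': nothing left to read from this position
buf.seek(0)                  # rewind to the start, as with a real file
first = buf.read(3)          # b'abc'
rest = buf.read()            # b'def'

src = BytesIO(b"\x00\x01\x02")  # a buffer created from bytes starts at position 0
first_byte = src.read(1)        # b'\x00'
```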

Why Use BytesIO?

- Speed

  - No disk I/O: reads and writes happen in memory.

- Convenience

  - Emulates file methods (read(), write(), seek(), etc.).

  - Ideal for testing code that expects a file-like object.

- Safety

  - No temporary files cluttering up your filesystem.

- Integration

  - Libraries that accept file-like objects (e.g., PIL, requests) work with BytesIO.
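The testing use case can be sketched with a small, hypothetical parser that reads a length-prefixed record from any binary file object. The record format (a 4-byte big-endian length followed by the payload) and the read_record name are assumptions made for illustration; the point is that a test can hand the function a BytesIO instead of a fixture file on disk.

```python
import struct
from io import BytesIO
from typing import BinaryIO


def read_record(f: BinaryIO) -> bytes:
    """Hypothetical format: 4-byte big-endian length, then that many payload bytes."""
    header = f.read(4)
    if len(header) < 4:
        raise EOFError("truncated header")
    (length,) = struct.unpack(">I", header)
    payload = f.read(length)
    if len(payload) < length:
        raise EOFError("truncated payload")
    return payload


# In a test, build the "file" in memory instead of writing a fixture to disk.
fake_file = BytesIO(struct.pack(">I", 5) + b"hello")
assert read_record(fake_file) == b"hello"
```

The same function works unchanged on a real file opened with open(path, "rb"), which is exactly what makes BytesIO a convenient stand-in.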

Basic Examples

1. Writing Bytes to a Buffer

```python
from io import BytesIO

# Create a BytesIO buffer
buffer = BytesIO()

# Write some binary data
buffer.write(b'Hello, \xF0\x9F\x98\x8A')  # includes a smiley emoji in UTF-8

# Retrieve the entire contents
data = buffer.getvalue()
print(data)                  # b'Hello, \xf0\x9f\x98\x8a'
print(data.decode('utf-8'))  # Hello, 😊

# Always close when done
buffer.close()
```
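Closing can also be handled by a with statement, since BytesIO is a context manager. In this sketch the contents are grabbed with getvalue() inside the block, because the buffer is closed as soon as the block exits.

```python
from io import BytesIO

with BytesIO() as buffer:
    buffer.write(b'Hello, \xF0\x9F\x98\x8A')  # includes a smiley emoji in UTF-8
    data = buffer.getvalue()  # read the contents while the buffer is still open

print(data.decode('utf-8'))  # Hello, 😊
print(buffer.closed)         # True: leaving the with block closed the buffer
```

Calling buffer.getvalue() after the with block would raise ValueError, since I/O operations are not allowed on a closed buffer.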


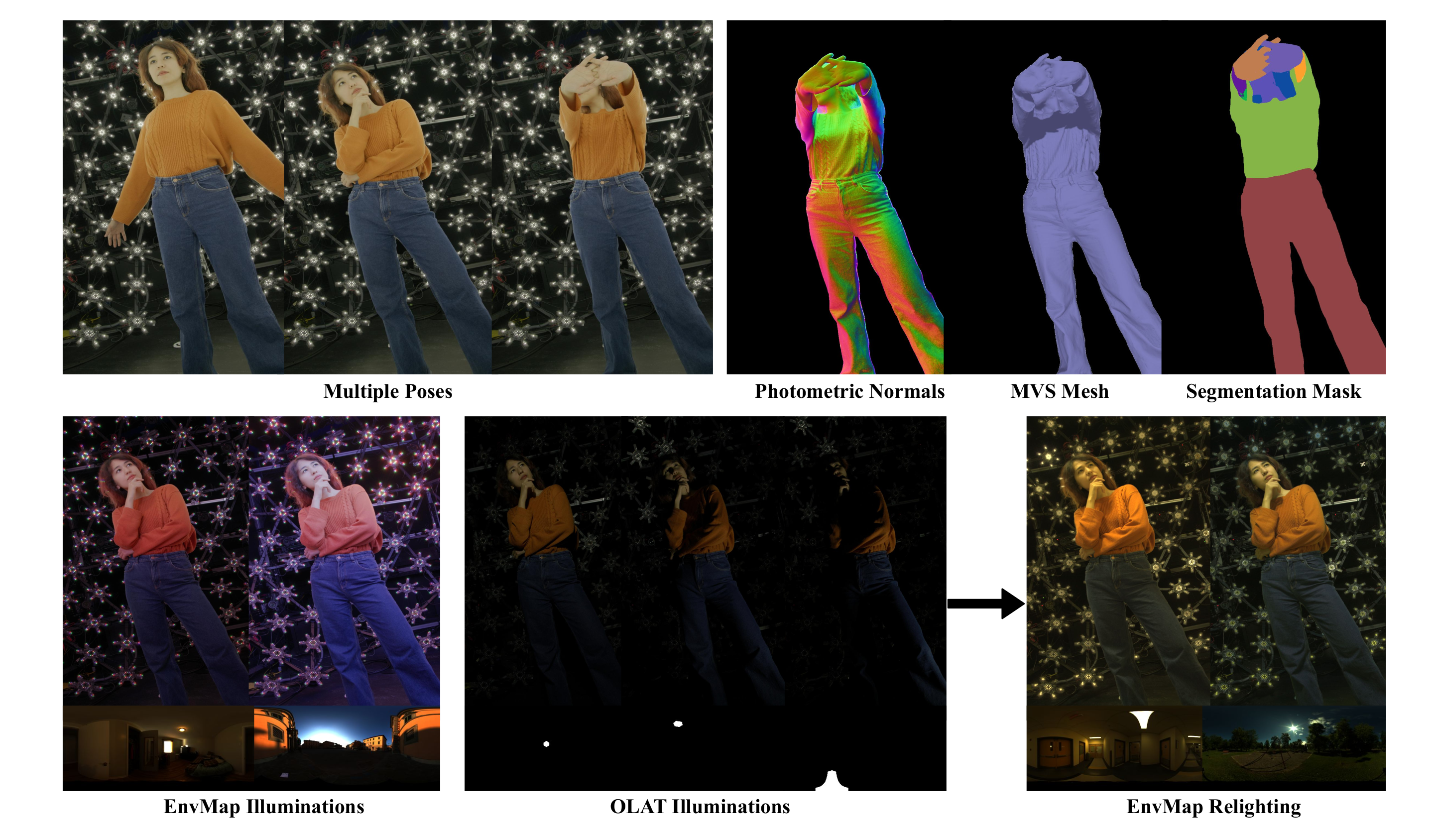

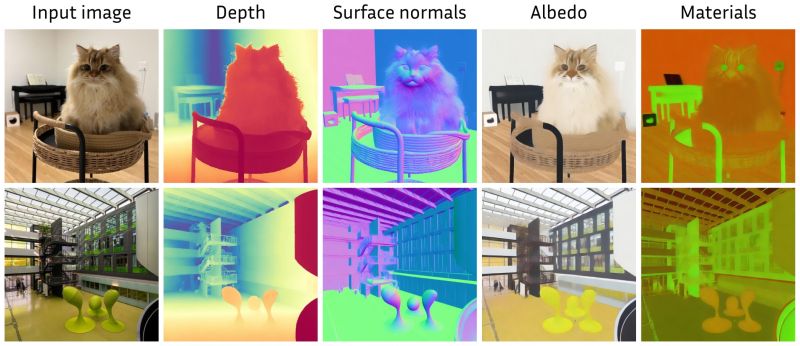

Marigold – repurposing diffusion-based image generators for dense predictions

Marigold repurposes Stable Diffusion for dense prediction tasks such as monocular depth estimation and surface normal prediction, delivering a level of detail often missing even in top discriminative models.

Key aspects that make it great:

– Reuses the original VAE and only lightly fine-tunes the denoising UNet

– Trained on just tens of thousands of synthetic image–modality pairs

– Runs on a single consumer GPU (e.g., RTX 4090)

– Zero-shot generalization to real-world, in-the-wild images

https://mlhonk.substack.com/p/31-marigold

https://arxiv.org/pdf/2505.09358

https://marigoldmonodepth.github.io/

FEATURED POSTS


Aider.chat – A free, open-source AI pair-programming CLI tool

Aider enables developers to interactively generate, modify, and test code by leveraging both cloud-hosted and local LLMs directly from the terminal or within an IDE. Key capabilities include comprehensive codebase mapping, support for over 100 programming languages, automated git commit messages, voice-to-code interactions, and built-in linting and testing workflows. Installation is straightforward via pip or uv, and while the tool itself has no licensing cost, actual usage costs stem from the underlying LLM APIs, which are billed separately by providers like OpenAI or Anthropic.

Key Features

- Cloud & Local LLM Support: Connect to most major LLM providers out of the box, or run models locally for privacy and cost control (aider.chat).

- Codebase Mapping: Automatically indexes all project files so that even large repositories can be edited in context (aider.chat).

- 100+ Language Support: Works with Python, JavaScript, Rust, Ruby, Go, C++, PHP, HTML, CSS, and dozens more (aider.chat).

- Git Integration: Generates sensible commit messages and automates diffs and undo operations through familiar git tooling (aider.chat).

- Voice-to-Code: Speak commands to Aider to request features, tests, or fixes without typing (aider.chat).

- Images & Web Pages: Attach screenshots, diagrams, or documentation URLs to provide visual context for edits (aider.chat).

- Linting & Testing: Runs lint and test suites automatically after each change, and can fix issues it detects.


Emmanuel Tsekleves – Writing Research Papers

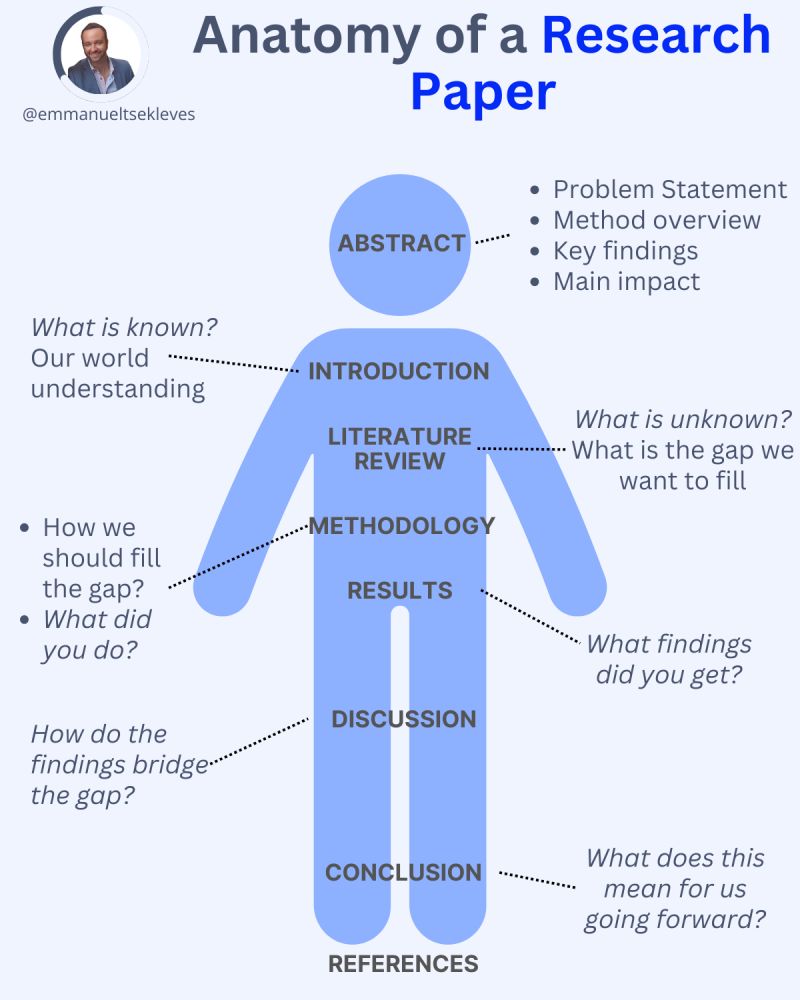

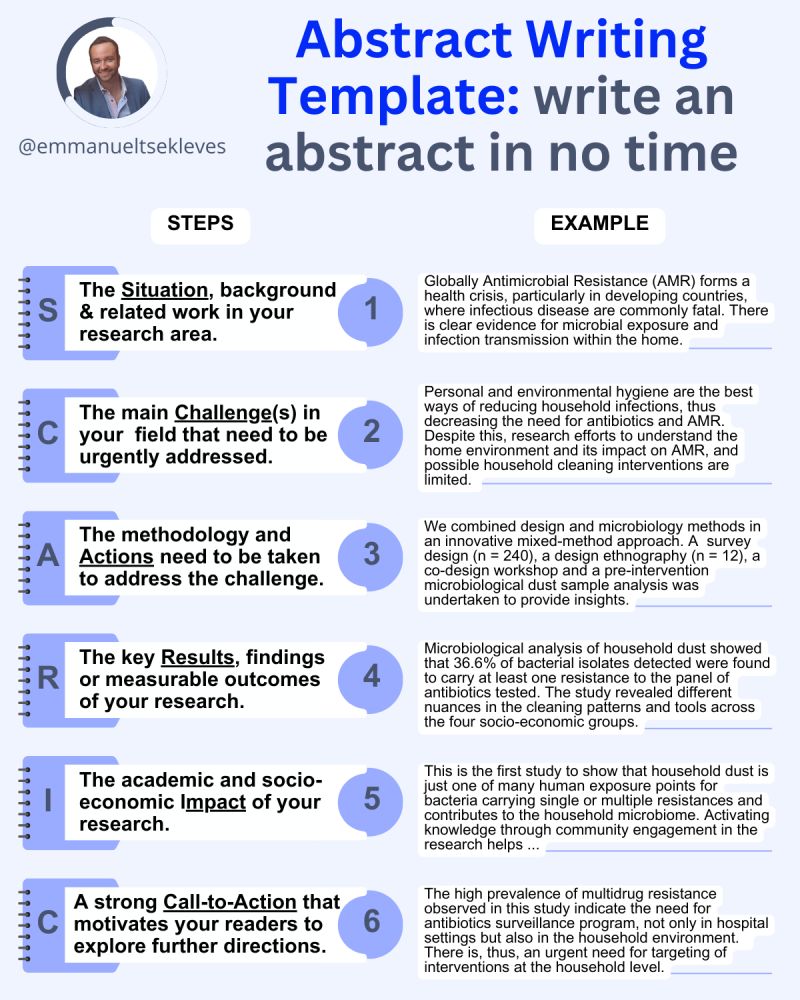

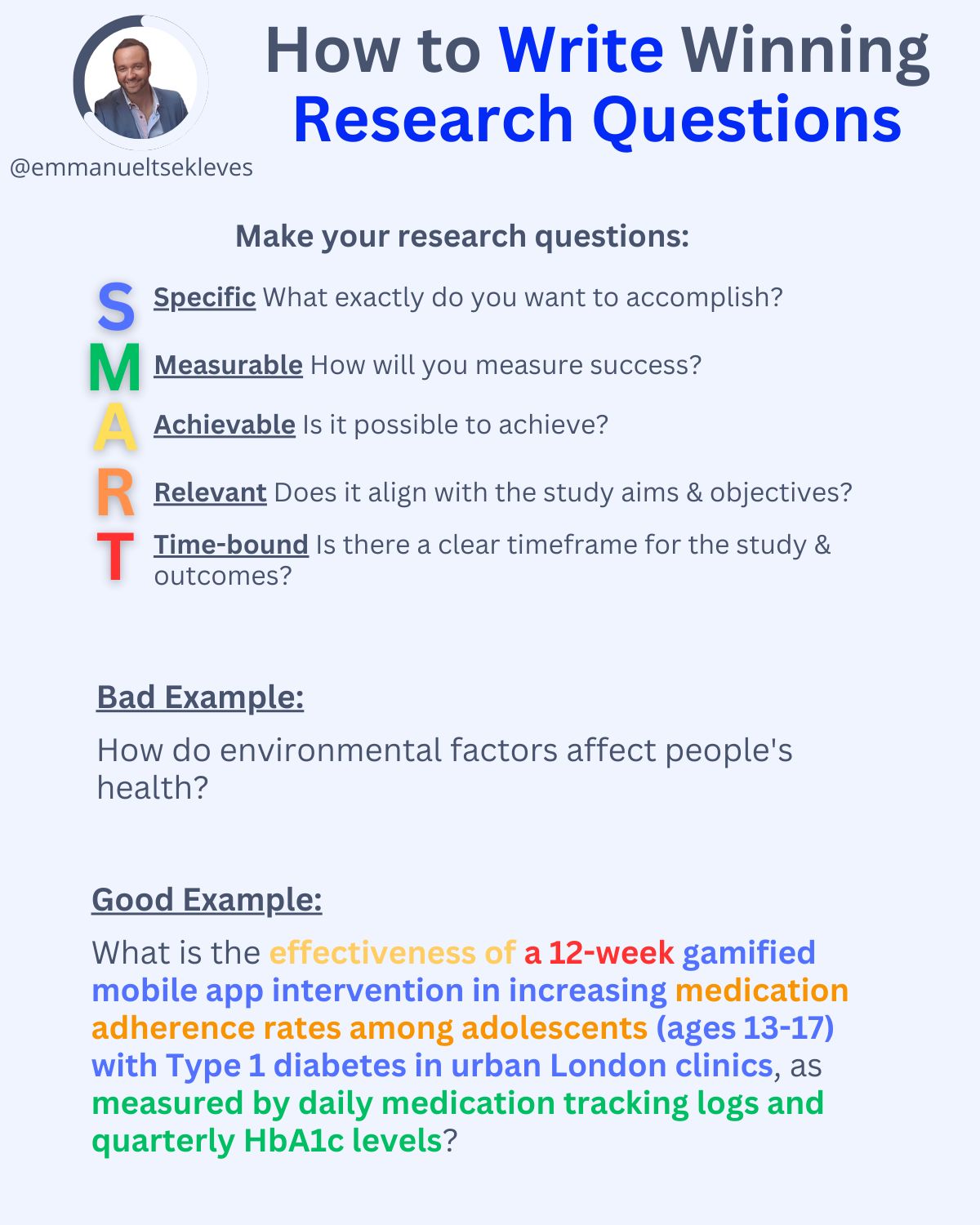

Here’s the journey of crafting a compelling paper:

1. ABSTRACT

This is your elevator pitch.

Give a methodology overview.

Paint the problem you’re solving.

Highlight key findings and their impact.

2. INTRODUCTION

Start with what we know.

Set the stage for our current understanding.

Hook your reader with the relevance of your work.

3. LITERATURE REVIEW

Identify what’s unknown.

Spot the gaps in current knowledge.

Your job in the next sections is to fill this gap.

4. METHODOLOGY

What did you do?

Outline how you’ll fill that gap.

Be transparent about your approach.

Make it reproducible so others can follow.

5. RESULTS

Let the data speak for itself.

Present your findings clearly.

Keep it concise and focused.

6. DISCUSSION

Now, connect the dots.

Discuss implications and significance.

How do your findings bridge the knowledge gap?

7. CONCLUSION

Wrap it up with future directions.

What does this mean for us moving forward?

Leave the reader with a call to action or reflection.

8. REFERENCES

Acknowledge the giants whose shoulders you stand on.

A robust reference list shows the depth of your research.