BREAKING NEWS

LATEST POSTS

-

Adobe buys InvokeAI and launches Adobe AI Foundry

https://mlq.ai/news/adobe-stock-rises-following-launch-of-adobe-ai-foundry/

https://theaieconomy.substack.com/p/adobe-ai-foundry-invoke-acquisition

Adobe is announcing the acquisition of Invoke, a generative media solution for creative production. The startup’s team will join the AI Foundry to build out AI-powered creative workflows for businesses.

“AI Foundry unites years of Adobe innovation and expertise, spanning our generative AI models and modalities, to help businesses solve today’s most complex content and media production challenges,” Hannah Elsakr, Adobe’s vice president for GenAI new business ventures, says in a release.

-

DeepBeepMeep – AI solutions specifically optimized for low-spec GPUs

https://huggingface.co/DeepBeepMeep

https://github.com/deepbeepmeep

Wan2GP – A fast AI Video Generator for the GPU Poor. Supports Wan 2.1/2.2, Qwen Image, Hunyuan Video, LTX Video and Flux.

mmgp – Memory Management for the GPU Poor: run the latest open-source frontier models on consumer Nvidia GPUs.

YuEGP – Open full-song generation foundation model that transforms lyrics into complete songs.

HunyuanVideoGP – Large video generation model optimized for low-VRAM GPUs.

FluxFillGP – Flux-based inpainting and outpainting tool for low-VRAM GPUs.

Cosmos1GP – Text-to-world and image/video-to-world generator for the GPU Poor.

Hunyuan3D-2GP – GPU-friendly version of Hunyuan3D-2 for 3D content generation.

OminiControlGP – Lightweight version of OminiControl enabling 3D, pose, and control tasks with FLUX.

SageAttention – Quantized attention achieving 2.1–3.1× and 2.7–5.1× speedups over FlashAttention2 and xformers, respectively, without losing end-to-end accuracy.

insightface – State-of-the-art 2D and 3D face analysis project for recognition, detection, and alignment.
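To give a rough idea of what "quantized attention" in the SageAttention entry means, here is a minimal NumPy sketch, not SageAttention's actual kernels or API. It quantizes Q and K to INT8, computes the QKᵀ scores with integer arithmetic, and keeps softmax and the P·V product in floating point. The K-smoothing step (subtracting the mean key) follows the idea described for SageAttention, but the function names, the one-scale-per-row granularity (the real kernels quantize in blocks) and the random test data are assumptions for illustration only.

```python
import numpy as np

def quantize_int8(x):
    """Symmetric INT8 quantization with one float scale per row of x (simplified granularity)."""
    scale = np.abs(x).max(axis=-1, keepdims=True) / 127.0
    scale = np.where(scale == 0, 1.0, scale)  # guard against all-zero rows
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def softmax(s):
    s = s - s.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)

def reference_attention(Q, K, V):
    """Plain floating-point scaled dot-product attention."""
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def int8_qk_attention(Q, K, V):
    """Hypothetical sketch: INT8 Q·K^T, floating-point softmax and P·V."""
    # Smoothing K: subtracting the mean key shifts every score in a query's row
    # by the same constant, which softmax ignores, but it shrinks K's outliers.
    K_smooth = K - K.mean(axis=0, keepdims=True)
    q8, sq = quantize_int8(Q)
    k8, sk = quantize_int8(K_smooth)
    # Integer matmul accumulated in int32, dequantized by the outer product of scales.
    scores = (q8.astype(np.int32) @ k8.astype(np.int32).T) * (sq * sk.T)
    P = softmax(scores / np.sqrt(Q.shape[-1]))
    return P @ V

rng = np.random.default_rng(0)
Q, K, V = (rng.standard_normal((16, 64)) for _ in range(3))
err = np.abs(int8_qk_attention(Q, K, V) - reference_attention(Q, K, V)).max()
print(f"max abs difference vs. float attention: {err:.4f}")
```

The speed gain in real kernels comes from running the QKᵀ matmul on INT8 tensor cores; in NumPy the sketch only demonstrates that the numerical result stays close to full-precision attention.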

FEATURED POSTS

-

Methods for creating motion blur in Stop motion

https://en.wikipedia.org/wiki/Go_motion

Petroleum jelly

This crude but reasonably effective technique, also known as vaselensing, involves smearing petroleum jelly (“Vaseline”) on a plate of glass in front of the camera lens, then cleaning and reapplying it after each shot. It is a time-consuming process, but one that creates a blur around the model. This technique was used for the endoskeleton in The Terminator. Jim Danforth also employed it to blur the pterodactyl’s wings in Hammer Films’ When Dinosaurs Ruled the Earth, as did Randall William Cook on the terror dogs sequence in Ghostbusters.

Bumping the puppet

Gently bumping or flicking the puppet before taking the frame produces a slight blur; however, care must be taken that the puppet does not move too far and that no props or set pieces are bumped or shifted.

Moving the table

Moving the table on which the model stands while the film is being exposed creates a slight, realistic blur. This technique was developed by Ladislas Starevich: when his characters ran, he moved the set in the opposite direction. This can be seen in The Little Parade, when the ballerina is chased by the devil. Starevich also used the technique in his films The Eyes of the Dragon, The Magical Clock and The Mascot. Aardman Animations used it for the train chase in The Wrong Trousers and again for the lorry chase in A Close Shave; in both cases the cameras were physically moved during a 1–2 second exposure. The technique was revived for the feature-length Wallace & Gromit: The Curse of the Were-Rabbit.

Go motion

The most sophisticated technique, go motion, is quite different from traditional stop motion. It was originally developed for The Empire Strikes Back, where it was used for some shots of the tauntauns, and was later used on films such as Dragonslayer. The model is essentially a rod puppet whose rods are attached to motors linked to a computer, which records the movements as the model is animated in the traditional way. Once enough movements have been recorded, the model is reset to its original position, the camera rolls, and the motors move the model across the table. Because the model is moving while each frame is exposed, motion blur is created. A variation of go motion was used in E.T. the Extra-Terrestrial to partially animate the children on their bicycles.
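Moving the table and go motion rely on the same principle: film integrates light for the whole time the shutter is open, so anything that moves during that interval is smeared along its path. The sketch below is a toy simulation of that idea, not a model of any real rig. It assumes a hypothetical one-pixel bright "model" on a one-dimensional strip of film, an idealized shutter that gives every instant equal weight, and made-up keyframe positions standing in for the movements a go-motion computer would record.

```python
import numpy as np

def expose_frame(start_x, end_x, width=20, samples=64):
    """Toy exposure of a 1-pixel bright model on a 1-D strip of film.

    The model travels linearly from start_x to end_x while the shutter is
    open; the film accumulates an equal share of light at every sub-frame
    position (an idealized box-shaped shutter). start_x == end_x is ordinary
    stop motion and yields one sharp dot; start_x != end_x yields a streak.
    """
    film = np.zeros(width)
    for t in np.linspace(0.0, 1.0, samples):
        x = start_x + (end_x - start_x) * t
        film[int(round(x))] += 1.0 / samples
    return film

# Hypothetical recorded keyframe positions, one per frame.
keyframes = [2, 5, 8, 11]

for i in range(len(keyframes) - 1):
    static = expose_frame(keyframes[i], keyframes[i])      # classic stop motion
    moving = expose_frame(keyframes[i], keyframes[i + 1])  # model moves during exposure
    print(f"frame {i}: static lit pixels={np.count_nonzero(static)}, "
          f"moving lit pixels={np.count_nonzero(moving)}")
```

Both kinds of exposure deposit the same total light; the moving one simply spreads it over several pixels, which is the streak the eye reads as motion blur.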

-

VES Cinematic Color – Motion-Picture Color Management

This paper presents an introduction to the color pipelines behind modern feature-film visual effects and animation.

Authored by Jeremy Selan and reviewed by members of the VES Technology Committee, including Rob Bredow, Dan Candela, Nick Cannon, Paul Debevec, Ray Feeney, Andy Hendrickson, Gautham Krishnamurti, Sam Richards, Jordan Soles, and Sebastian Sylwan.

-

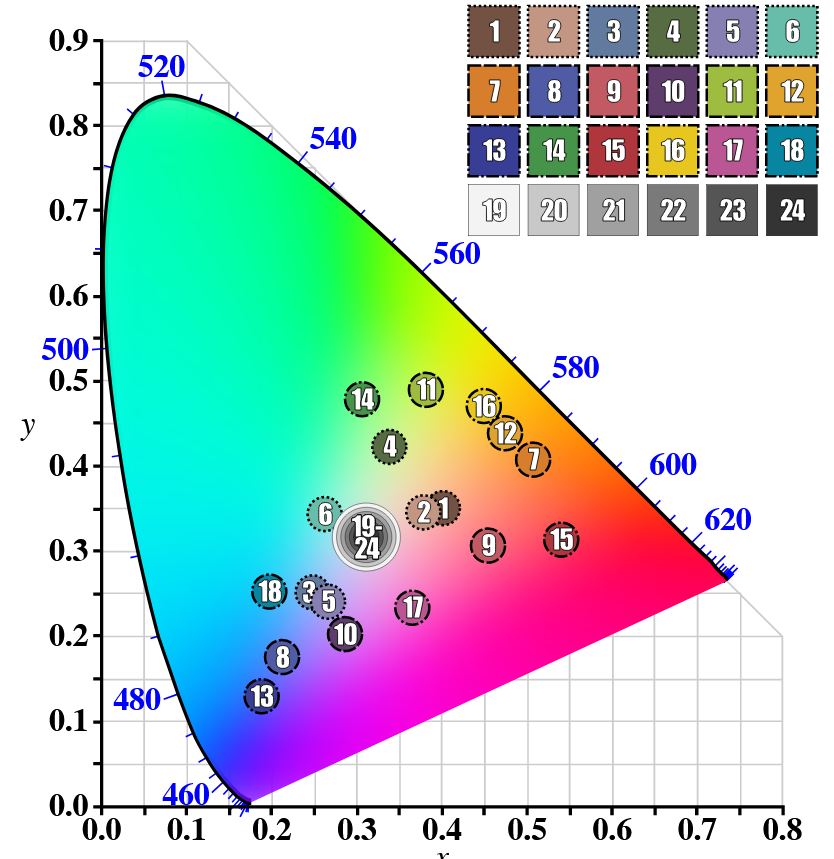

GretagMacbeth Color Checker Numeric Values and Middle Gray

The human eye does not perceive brightness linearly: the tone we see as halfway between black and white corresponds not to 50% of the light energy present (the linear, physical value) but to roughly 18% of it. Our vision is biased toward distinguishing more detail in dark tones and in areas of contrast. A Macbeth chart helps calibrate a photographic capture back into this “human perspective” of the world.

https://en.wikipedia.org/wiki/Middle_gray

In photography, painting, and other visual arts, middle gray or middle grey is a tone that is perceptually about halfway between black and white on a lightness scale; in photography and printing, it is typically defined as 18% reflectance in visible light.
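The claim that about 18% looks like "half" can be checked against the CIE 1976 L* lightness formula, a standard model of perceived lightness that is not part of the quoted source and is used here only as a supporting illustration. It maps relative luminance y = Y/Yn (0 to 1, white reference = 1) to a 0–100 lightness scale. The sketch assumes a neutral surface, so its reflectance equals its relative luminance.

```python
def cie_lightness(y):
    """CIE 1976 L* from relative luminance y = Y/Yn in [0, 1]."""
    delta = 6 / 29
    if y > delta ** 3:
        f = y ** (1 / 3)  # cube-root response over most of the range
    else:
        f = y / (3 * delta ** 2) + 4 / 29  # linear segment near black
    return 116 * f - 16

def luminance_for_lightness(l_star):
    """Inverse of cie_lightness: the relative luminance that produces L*."""
    delta = 6 / 29
    f = (l_star + 16) / 116
    return f ** 3 if f > delta else 3 * delta ** 2 * (f - 4 / 29)

for y in (0.18, 0.50):
    print(f"Y = {y:.2f} -> L* = {cie_lightness(y):.1f}")
print(f"L* = 50 -> Y = {luminance_for_lightness(50):.3f}")
```

In this model 18% luminance lands at L* ≈ 49.5, almost exactly mid-scale, while 50% luminance is already at about L* 76; conversely, the midpoint L* = 50 needs only about 18.4% of the light.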

Light meters, cameras, and pictures are often calibrated using an 18% gray card[4][5][6] or a color reference card such as a ColorChecker. On the assumption that 18% is similar to the average reflectance of a scene, a grey card can be used to estimate the required exposure of the film.
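When a gray card is used as the exposure reference, the reading off the card is placed at middle gray, and every other diffuse surface in the same light sits at a fixed offset from it. Since each photographic stop is a doubling of light, a surface with reflectance R lies log2(R / 0.18) stops above or below the card. A minimal sketch; the surface names and reflectance values are hypothetical, not measured ColorChecker data.

```python
import math

MIDDLE_GRAY = 0.18  # gray-card reflectance assumed by the 18% convention

def stops_from_gray_card(reflectance):
    """Exposure offset, in stops, of a diffuse surface relative to an 18% gray card."""
    return math.log2(reflectance / MIDDLE_GRAY)

# Illustrative (hypothetical) reflectances, not measured chart values.
for name, r in [("near-white paper", 0.90), ("gray card", 0.18), ("dark fabric", 0.045)]:
    print(f"{name:>16}: {stops_from_gray_card(r):+.2f} stops")
```

With the card exposed correctly, a near-white surface lands a little over two stops above it and a surface reflecting a quarter as much light lands two stops below, which is why a single card reading is enough to predict where the rest of the scene will fall.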

https://en.wikipedia.org/wiki/ColorChecker
