LATEST POSTS

-

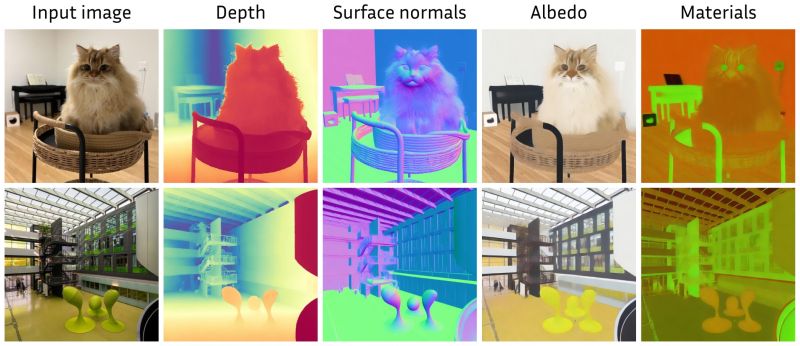

Marigold – repurposing diffusion-based image generators for dense predictions

Marigold repurposes Stable Diffusion for dense prediction tasks such as monocular depth estimation and surface normal prediction, delivering a level of detail often missing even in top discriminative models.

Key aspects that make it great:

– Reuses the original VAE and only lightly fine-tunes the denoising UNet

– Trained on just tens of thousands of synthetic image–modality pairs

– Runs on a single consumer GPU (e.g., RTX 4090)

– Zero-shot generalization to real-world, in-the-wild images

https://mlhonk.substack.com/p/31-marigold

https://arxiv.org/pdf/2505.09358

https://marigoldmonodepth.github.io/

-

Runway Aleph

https://runwayml.com/research/introducing-runway-aleph

Generate New Camera Angles

Generate the Next Shot

Use Any Style to Transfer to a Video

Change Environments, Locations, Seasons and Time of Day

Add Things to a Scene

Remove Things from a Scene

Change Objects in a Scene

Apply the Motion of a Video to an Image

Alter a Character’s Appearance

Recolor Elements of a Scene

Relight Shots

Green Screen Any Object, Person or Situation

-

Mike Wong – AtoMeow – Blue noise image stippling in Processing

https://github.com/mwkm/atoMeow

https://www.shadertoy.com/view/7s3XzX

This demo is created for coders who are familiar with this awesome creative coding platform. You may quickly modify the code to work for video, or to stipple your own Processing drawings by turning them into a PImage and running the simulation. This demo code also serves as a reference implementation of my article Blue noise sampling using an N-body simulation-based method. If you are interested in 2.5D, you may mod the code to achieve what I discussed in this artist-friendly article.

Convert your video to a dotted noise.

-

Aitor Echeveste – Free CG and Comp Projection Shot, Download the Assets & Follow the Workflow

What’s Included:

- Cleaned and extended base plates

- Full Maya and Nuke 3D projection layouts

- Bullet and environment CG renders with AOVs (RGB, normals, position, ID, etc.)

- Explosion FX in slow motion

- 3D scene geometry for projection

- Camera + lensing setup

- Light groups and passes for look development

-

Tauseef Fayyaz About readable code – Clean Code Practices

Here’s what to master in Clean Code Practices:

🔹 Code Readability & Simplicity – Use meaningful names, write short functions, follow SRP, flatten logic, and remove dead code.

→ Clarity is a feature.

🔹 Function & Class Design – Limit parameters, favor pure functions, small classes, and composition over inheritance.

→ Structure drives scalability.

🔹 Testing & Maintainability – Write readable unit tests, avoid over-mocking, test edge cases, and refactor with confidence.

→ Test what matters.

🔹 Code Structure & Architecture – Organize by features, minimize global state, avoid god objects, and abstract smartly.

→ Architecture isn’t just backend.

🔹 Refactoring & Iteration – Apply the Boy Scout Rule, DRY, KISS, and YAGNI principles regularly.

→ Refactor like it’s part of development.

🔹 Robustness & Safety – Validate early, handle errors gracefully, avoid magic numbers, and favor immutability.

→ Safe code is future-proof.

🔹 Documentation & Comments – Let your code explain itself. Comment why, not what, and document at the source.

→ Good docs reduce team friction.

🔹 Tooling & Automation – Use linters, formatters, static analysis, and CI reviews to automate code quality.

→ Let tools guard your gates.

🔹 Final Review Practices – Review, refactor nearby code, and avoid cleverness in the name of brevity.

→ Readable code is better than smart code.

-

Mark Theriault “Steamboat Willie” – AI Re-Imagining of a 1928 Classic in 4k

I ran Steamboat Willie (now public domain) through Flux Kontext to reimagine it as a 3D-style animated piece. Instead of going the polished route with something like W.A.N. 2.1 for full image-to-video generation, I leaned into the raw, handmade vibe that comes from converting each frame individually. It gave it a kind of stop-motion texture, imperfect, a bit wobbly, but full of character.

-

Microsoft DAViD – Data-efficient and Accurate Vision Models from Synthetic Data

Our human-centric dense prediction model delivers high-quality, detailed (depth) results while achieving remarkable efficiency, running orders of magnitude faster than competing methods, with inference speeds as low as 21 milliseconds per frame (the large multi-task model on an NVIDIA A100). It reliably captures a wide range of human characteristics under diverse lighting conditions, preserving fine-grained details such as hair strands and subtle facial features. This demonstrates the model’s robustness and accuracy in complex, real-world scenarios.

https://microsoft.github.io/DAViD

The state of the art in human-centric computer vision achieves high accuracy and robustness across a diverse range of tasks. The most effective models in this domain have billions of parameters, thus requiring extremely large datasets, expensive training regimes, and compute-intensive inference. In this paper, we demonstrate that it is possible to train models on much smaller but high-fidelity synthetic datasets, with no loss in accuracy and higher efficiency. Using synthetic training data provides us with excellent levels of detail and perfect labels, while providing strong guarantees for data provenance, usage rights, and user consent. Procedural data synthesis also provides us with explicit control on data diversity, that we can use to address unfairness in the models we train. Extensive quantitative assessment on real input images demonstrates accuracy of our models on three dense prediction tasks: depth estimation, surface normal estimation, and soft foreground segmentation. Our models require only a fraction of the cost of training and inference when compared with foundational models of similar accuracy.

-

Embedding frame ranges into QuickTime movies with FFmpeg

QuickTime (.mov) files are fundamentally time-based, not frame-based, and so don’t have a built-in, uniform “first frame/last frame” field you can set as numeric frame IDs. Instead, tools like Shotgun Create rely on the timecode track and the movie’s duration to infer frame numbers. If you want Shotgun to pick up a non-default frame range (e.g. start at 1001, end at 1064), you must bake in an SMPTE timecode that corresponds to your desired start frame, and ensure the movie’s duration matches your clip length.

How Shotgun Reads Frame Ranges

- Default start frame is 1. If no timecode metadata is present, Shotgun assumes the movie begins at frame 1.

- Timecode ⇒ frame number. Shotgun Create “honors the timecodes of media sources,” mapping the embedded TC to frame IDs. For example, a 24 fps QuickTime tagged with a start timecode of 00:00:41:17 will be interpreted as beginning on frame 1001 (1001 ÷ 24 fps ≈ 41.71 s).

Embedding a Start Timecode

QuickTime uses a tmcd (timecode) track. You can bake in an SMPTE track via FFmpeg’s -timecode flag or via Compressor/encoder settings:

- Compute your start TC.

- Desired start frame = 1001

- Frame 1001 at 24 fps ⇒ 1001 ÷ 24 ≈ 41.708 s, i.e. 41 whole seconds (41 × 24 = 984 frames) plus 17 remaining frames ⇒ TC 00:00:41:17

- FFmpeg example:

    ffmpeg -i input.mov \
      -c copy \
      -timecode 00:00:41:17 \
      output.mov

This adds a timecode track beginning at 00:00:41:17, which Shotgun maps to frame 1001.
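The start-timecode arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming an integer frame rate and non-drop-frame timecode; the function name `frame_to_timecode` is hypothetical and not part of FFmpeg or Shotgun.

```python
def frame_to_timecode(frame: int, fps: int = 24) -> str:
    """Convert an absolute frame number to a non-drop-frame SMPTE timecode.

    Assumes an integer frame rate (e.g. 24 or 25); fractional rates such as
    23.976 or 29.97 drop-frame need different handling.
    """
    if frame < 0 or fps <= 0:
        raise ValueError("frame must be >= 0 and fps must be > 0")
    # Split the frame count into whole seconds and leftover frames.
    total_seconds, frames = divmod(frame, fps)
    # Split whole seconds into hours, minutes, seconds.
    minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


if __name__ == "__main__":
    # Frame 1001 at 24 fps: 41 whole seconds plus 17 frames.
    print(frame_to_timecode(1001, 24))  # 00:00:41:17
```

The resulting string is what would be passed to the -timecode flag in the command above.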

Ensuring the Correct End Frame

Shotgun infers the last frame from the movie’s duration. To end on frame 1064:

- Frame count = 1064 – 1001 + 1 = 64 frames

- Duration = 64 ÷ 24 fps ≈ 2.667 s

FFmpeg trim example:

    ffmpeg -i input.mov \
      -c copy \
      -timecode 00:00:41:17 \
      -t 00:00:02.667 \
      output_trimmed.mov

This results in a 64-frame clip (1001→1064) at 24 fps.
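As a rough sketch, the frame-count and duration arithmetic can be scripted, and the trim command assembled from it. The helper names `clip_length` and `build_trim_command` are hypothetical, the file names are placeholders, and an integer frame rate is assumed; only the FFmpeg flags already shown above are used.

```python
from fractions import Fraction


def clip_length(first_frame: int, last_frame: int, fps: int = 24):
    """Return (frame_count, duration_in_seconds) for an inclusive frame range."""
    if last_frame < first_frame:
        raise ValueError("last_frame must be >= first_frame")
    frame_count = last_frame - first_frame + 1  # inclusive range
    duration = float(Fraction(frame_count, fps))
    return frame_count, duration


def build_trim_command(src: str, dst: str, start_tc: str, duration_s: float):
    """Assemble the ffmpeg argument list (same flags as the example above)."""
    return [
        "ffmpeg", "-i", src,
        "-c", "copy",
        "-timecode", start_tc,
        "-t", f"{duration_s:.3f}",  # duration in seconds, millisecond precision
        dst,
    ]


if __name__ == "__main__":
    frames, seconds = clip_length(1001, 1064, fps=24)
    print(frames, round(seconds, 3))  # 64 2.667
    print(" ".join(build_trim_command(
        "input.mov", "output_trimmed.mov", "00:00:41:17", seconds)))
```

Because stream copy cuts on packet boundaries rather than re-encoding, it is probably worth checking the frame count of the output (for example with ffprobe) before relying on it.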

FEATURED POSTS

-

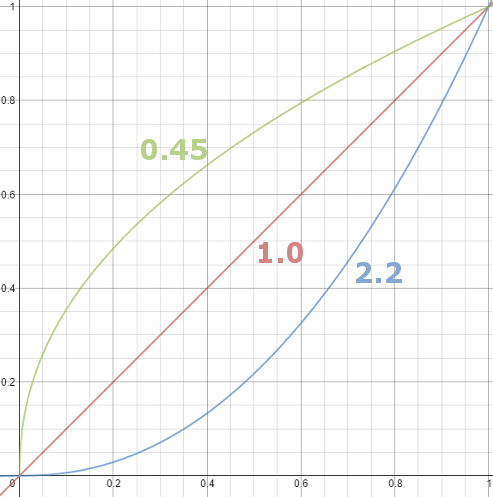

Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

Basically, gamma is the relationship between the numerical value of a pixel and the brightness of that pixel as it appears on the screen. More generally, gamma is just a way of defining such relationships.

Three main types:

– Image gamma, encoded in images

– Display gamma, applied by the hardware and/or at viewing time

– System (or viewing) gamma, which is the net effect of all gammas when you look at the final image. In theory this should flatten back to a gamma of 1.0.
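To illustrate how image and display gammas cancel out, here is a minimal sketch using a pure power-law curve. The value 2.2 and the function names are assumptions for illustration; real sRGB uses a piecewise curve that only approximates this.

```python
def encode_gamma(linear: float, gamma: float = 2.2) -> float:
    """Image gamma: encode a linear light value (0..1) for storage."""
    return linear ** (1.0 / gamma)


def display_gamma(encoded: float, gamma: float = 2.2) -> float:
    """Display gamma: convert an encoded pixel value (0..1) back to light."""
    return encoded ** gamma


if __name__ == "__main__":
    linear = 0.18                     # mid-grey in linear light
    encoded = encode_gamma(linear)    # stored pixel value, roughly 0.46
    shown = display_gamma(encoded)    # light emitted by the display

    # System gamma is the product of the exponents: (1 / 2.2) * 2.2 = 1.0,
    # so the displayed value matches the original linear value.
    print(encoded, shown)
```

If the image and display exponents do not match, the product differs from 1.0 and the final image looks darker or lighter than the original scene.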

-

Photography basics: Solid Angle measures

http://www.calculator.org/property.aspx?name=solid+angle

A measure of how large an object appears to an observer looking from a given point, and thus a natural measure for objects in the sky. It is useful for returning the apparent size of the sun and moon and, in perspective, how much they contribute to lighting. Solid angle can be represented as an ‘angular diameter’ as well.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). As a fraction of the total celestial sphere, and before atmospheric scattering, the Sun and the Moon subtend fractional areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On Earth the sun is likely closer to 0.00011 sr after atmospheric scattering. The sun as perceived from Earth has an angular diameter of 0.53 degrees, which corresponds to a solid angle of about 0.000067 sr.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2θ = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.
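The conversion from angular diameter to solid angle can be sketched with the standard spherical-cap formula, Ω = 2π(1 − cos θ), where θ is half the angular diameter. The function name below is hypothetical, and the object is assumed to be a circular disc.

```python
import math


def solid_angle_from_angular_diameter(diameter_deg: float) -> float:
    """Solid angle (in steradians) of a circular disc of given angular diameter."""
    half_angle = math.radians(diameter_deg) / 2.0
    # Spherical cap: omega = 2 * pi * (1 - cos(half_angle))
    return 2.0 * math.pi * (1.0 - math.cos(half_angle))


if __name__ == "__main__":
    moon_sr = solid_angle_from_angular_diameter(0.52)
    # The visible sky is a hemisphere: 2 * pi steradians.
    sky_to_moon = (2.0 * math.pi) / moon_sr
    print(f"moon: {moon_sr:.7f} sr, sky/moon ratio: {sky_to_moon:.0f}")
```

For a 0.52° disc this gives about 0.0000647 sr, and a hemisphere-to-moon ratio a little under one hundred thousand, in line with the figures quoted above.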

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

Composition and The Expressive Nature Of Light

http://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said, “The artist’s vocation is to send light into the human heart.”