LATEST POSTS

-

Luma Interactive Scenes announced: Gaussian Splatting

https://neuralradiancefields.io/luma-interactive-scenes-announced/

“…these are in fact Gaussian Splats that are being run and it’s a proprietary iteration of the original Inria paper. They hybridize the performance gain of realtime rendering with Gaussian Splatting with robust cloud based rendering that’s already widely being used in commercial applications. This has been in the works for a while over at Luma and I had the opportunity to try out some of my datasets on their new method.”

MICHAEL RUBLOFF

-

Meta Quest 3 is here

https://www.roadtovr.com/meta-quest-3-oculus-preview-connect-2023/

- Better lenses

- Better resolution

- Better processor

- Better audio

- Better passthrough

- Better controllers

- Better form-factor

-

HuggingFace ai-comic-factory – a FREE AI Comic Book Creator

https://huggingface.co/spaces/jbilcke-hf/ai-comic-factory

this is the epic story of a group of talented digital artists trying to overcome daily technical challenges to achieve incredibly photorealistic projects of monsters and aliens

-

Blackmagic Camera Introducing Digital Film for iPhone!

https://www.blackmagicdesign.com/ca/products/blackmagiccamera

You can adjust settings such as frame rate, shutter angle, white balance and ISO all in a single tap. Or, record directly to Blackmagic Cloud in industry standard 10-bit Apple ProRes files up to 4K! Recording to Blackmagic Cloud Storage lets you collaborate on DaVinci Resolve projects with editors anywhere in the world, all at the same time!

-

Getting Started With 3D Gaussian Splatting for Windows (Beginner Tutorial)

https://www.reshot.ai/3d-gaussian-splatting

What are 3D Gaussians? They are a generalization of 1D Gaussians (the bell curve) to 3D. Essentially they are ellipsoids in 3D space, with a center, a scale, a rotation, and “softened edges”.

Each 3D Gaussian is optimized along with a (view-dependent) color and opacity. Blended together, the Gaussians form the full model, which can be rendered from ANY angle. 3D Gaussian Splatting captures the fuzzy and soft nature of the plush toy extremely well, something that photogrammetry-based methods struggle to do.
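
As a rough illustration of the description above, the sketch below evaluates how strongly a single 3D Gaussian contributes at a point in space, given its center, per-axis scale, and rotation. The quaternion rotation and the names `quat_to_matrix` and `gaussian_weight` are assumptions made for this example. It is not the tutorial's code, and it leaves out color, opacity, and projection to the screen.

```python
import math

def quat_to_matrix(w, x, y, z):
    """Convert a quaternion (w, x, y, z) into a 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize first
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def gaussian_weight(point, center, scale, rotation):
    """Unnormalized falloff exp(-0.5 * d^T Sigma^-1 d) of one 3D Gaussian.

    Sigma is built from the rotation R and the per-axis scale S as
    R S S^T R^T, so the offset is rotated into the ellipsoid's local
    frame and divided by the scale along each axis.
    """
    d = [p - c for p, c in zip(point, center)]
    # local = R^T d (column i of R is the ellipsoid's i-th axis in world space)
    local = [sum(rotation[r][i] * d[r] for r in range(3)) for i in range(3)]
    q = sum((l / s) ** 2 for l, s in zip(local, scale))
    return math.exp(-0.5 * q)

# Hypothetical Gaussian: axis-aligned, stretched along its second and third axes.
identity = quat_to_matrix(1.0, 0.0, 0.0, 0.0)
weight = gaussian_weight((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 2.0, 3.0), identity)
print(weight)  # one "standard deviation" along the first axis, about 0.607
```

The weight is 1.0 at the center and fades smoothly with distance, which is the “softened edges” behavior; a renderer would multiply it by the Gaussian's opacity before blending.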

-

Laowa 25mm f/2.8 2.5-5X Ultra Macro vs 100mm f/2.8 2x lens

https://gilwizen.com/laowa-25mm-ultra-macro-lens-review/

https://www.cameralabs.com/laowa-25mm-f2-8-2-5-5x-ultra-macro-review/

- Pros:

– Lightweight, small size for a high-magnification macro lens

– Highest magnification lens available for non-Canon users

– Excellent sharpness and image quality

– Consistent working distance

– Narrow lens barrel makes it easy to find and track subject

– Affordable

- Cons:

– Manual, no auto aperture control

– No filter thread (but still customizable with caution)

– Dark viewfinder when closing aperture makes focusing difficult in poor light conditions

– Magnification range is short (2.5-5x) compared to the competition

Combining a Laowa 25mm 2.5x lens with a Kenko 12mm extension tube

To estimate the combined magnification of a Laowa 25mm 2.5x lens with a 12mm Kenko extension tube, you need three values: the magnification of the lens itself, the extension tube length, and the focal length of the lens.

First, consider the magnification of the lens itself, which is 2.5x.

Then, to find the total magnification when the extension tube is attached, you can use the formula:

Total Magnification = Magnification of the Lens + (Magnification of the Lens * Extension Tube Length / Focal Length of the Lens)

In this case, the extension tube length is 12mm, and the focal length of the lens is 25mm. Using the values:

Total Magnification with 2.5x = 2.5 + (2.5 * 12 / 25) = 2.5 + (30 / 25) = 2.5 + 1.2 = 3.7x

Total Magnification with 5x = 5 + (5 * 12 / 25) = 5 + (60 / 25) = 5 + 2.4 = 7.4x
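
The arithmetic above can be reproduced with a short sketch. The function `magnification_as_stated` follows the formula exactly as written in this post. For comparison, `magnification_thin_lens` applies the common thin-lens rule of thumb, in which an extension tube adds (tube length / focal length) to the magnification. That rule is an assumption that does not come from this post, it gives noticeably lower figures, and a real lens may match neither estimate exactly, so treat both as rough guides.

```python
def magnification_as_stated(lens_mag, tube_mm, focal_mm):
    """Total magnification using the formula as written in the text above."""
    return lens_mag + lens_mag * tube_mm / focal_mm

def magnification_thin_lens(lens_mag, tube_mm, focal_mm):
    """Thin-lens rule of thumb (assumption, not from the text):
    the tube adds tube_mm / focal_mm to the magnification."""
    return lens_mag + tube_mm / focal_mm

TUBE_MM = 12   # Kenko extension tube length
FOCAL_MM = 25  # Laowa focal length

for lens_mag in (2.5, 5.0):
    stated = magnification_as_stated(lens_mag, TUBE_MM, FOCAL_MM)
    thin = magnification_thin_lens(lens_mag, TUBE_MM, FOCAL_MM)
    print(f"{lens_mag}x lens: as stated {stated:.2f}x, thin-lens estimate {thin:.2f}x")
```

With the values used here, the formula as stated gives 3.70x and 7.40x, while the thin-lens estimate gives 2.98x and 5.48x.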


FEATURED POSTS

-

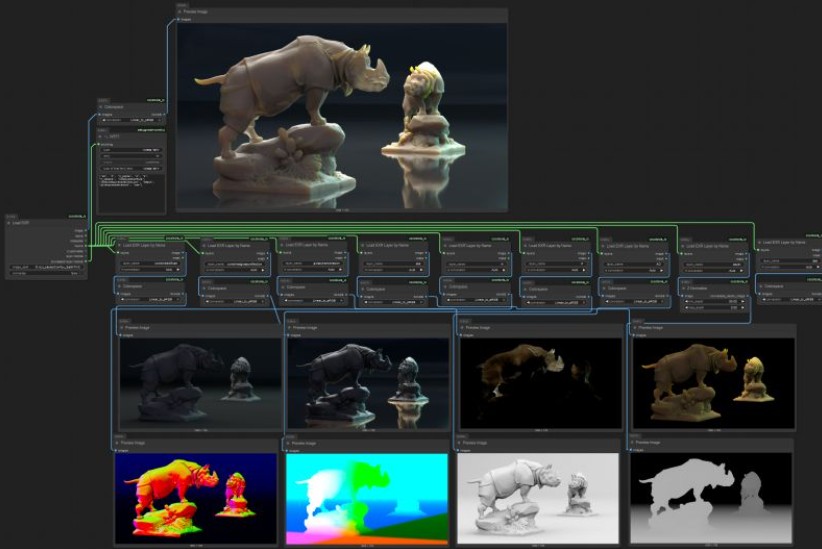

ComfyUI-CoCoTools_IO – A set of nodes focused on advanced image I/O operations, particularly for EXR file handling

https://github.com/Conor-Collins/ComfyUI-CoCoTools_IO

Features

- Advanced EXR image input with multilayer support

- EXR layer extraction and manipulation

- High-quality image saving with format-specific options

- Standard image format loading with bit depth awareness

Current Nodes

Image I/O

- Image Loader: Load standard image formats (PNG, JPG, WebP, etc.) with proper bit depth handling

- Load EXR: Comprehensive EXR file loading with support for multiple layers, channels, and cryptomatte data

- Load EXR Layer by Name: Extract specific layers from EXR files (similar to Nuke’s Shuffle node)

- Cryptomatte Layer: Specialized handling for cryptomatte layers in EXR files

- Image Saver: Save images in various formats with format-specific options (bit depth, compression, etc.)

Image Processing

- Colorspace: Convert between sRGB and Linear colorspaces

- Z Normalize: Normalize depth maps and other single-channel data
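
To give a sense of what normalizing a depth map can involve, here is a minimal sketch of min-max normalization on a flat list of depth values. The function name `z_normalize` and the choice of min-max scaling are assumptions for illustration; the actual Z Normalize node may use a different method or different options.

```python
def z_normalize(depth, eps=1e-8):
    """Rescale depth values to the 0..1 range (min-max normalization).

    `depth` is a flat, non-empty list of floats, e.g. one EXR Z channel
    read row by row. A constant depth map has no range to stretch,
    so it maps to all zeros.
    """
    lo, hi = min(depth), max(depth)
    span = hi - lo
    if span < eps:
        return [0.0 for _ in depth]
    return [(z - lo) / span for z in depth]

# Hypothetical depth samples in scene units.
print(z_normalize([2.0, 4.0, 6.0]))  # [0.0, 0.5, 1.0]
```

Raw depth channels are often stored in scene units with an arbitrary range, so rescaling like this is one way to make them viewable or usable as a mask.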

-

Björn Ottosson – How software gets color wrong

https://bottosson.github.io/posts/colorwrong/

Most software around us today is decent at accurately displaying colors. Processing of colors is another story unfortunately, and is often done badly.

To understand what the problem is, let’s start with an example of three ways of blending green and magenta:

- Perceptual blend – A smooth transition using a model designed to mimic human perception of color. The blending is done so that the perceived brightness and color varies smoothly and evenly.

- Linear blend – A model for blending color based on how light behaves physically. This type of blending can occur in many ways naturally, for example when colors are blended together by focus blur in a camera or when viewing a pattern of two colors at a distance.

- sRGB blend – This is how colors would normally be blended in computer software, using sRGB to represent the colors.
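
The difference between the sRGB blend and the linear blend can be sketched in a few lines. The code below uses the standard sRGB transfer functions; the helper names (`blend_srgb`, `blend_linear`, and so on) are made up for this example and are not taken from the article. The perceptual blend is left out because the text above does not say which perceptual model it uses.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel (0..1) to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Encode one linear-light channel (0..1) back to sRGB."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def lerp(a, b, t):
    return a + (b - a) * t

def blend_srgb(c0, c1, t):
    """Interpolate the encoded sRGB values directly (the common shortcut)."""
    return tuple(lerp(a, b, t) for a, b in zip(c0, c1))

def blend_linear(c0, c1, t):
    """Decode to linear light, interpolate, then encode the result again."""
    return tuple(
        linear_to_srgb(lerp(srgb_to_linear(a), srgb_to_linear(b), t))
        for a, b in zip(c0, c1)
    )

green = (0.0, 1.0, 0.0)
magenta = (1.0, 0.0, 1.0)
print(blend_srgb(green, magenta, 0.5))    # mid gray at 0.5 per channel
print(blend_linear(green, magenta, 0.5))  # lighter gray, about 0.735 per channel
```

The sRGB midpoint comes out visibly darker than the linear one, which is the dark band that appears when two strong colors are blended directly in sRGB.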

Let’s look at some more examples of blending of colors, to see how these problems surface more practically. The examples use strong colors since then the differences are more pronounced. This is using the same three ways of blending colors as the first example.

Instead of making it as easy as possible to work with color, most software makes it unnecessarily hard, by doing image processing with representations not designed for it. Approximating the physical behavior of light with linear RGB models is one easy thing to do, but more work is needed to create image representations tailored for image processing and human perception.

Also see: