
https://www.kellyboeschdesign.com

I was working on an album cover last night and got these really cool images in Midjourney, so I made a video out of them. Animated using Pika. Song made using Suno. Full version on my Bandcamp. It’s called Static.

https://www.linkedin.com/posts/kellyboesch_midjourney-keyframes-ai-activity-7359244714853736450-Wvcr

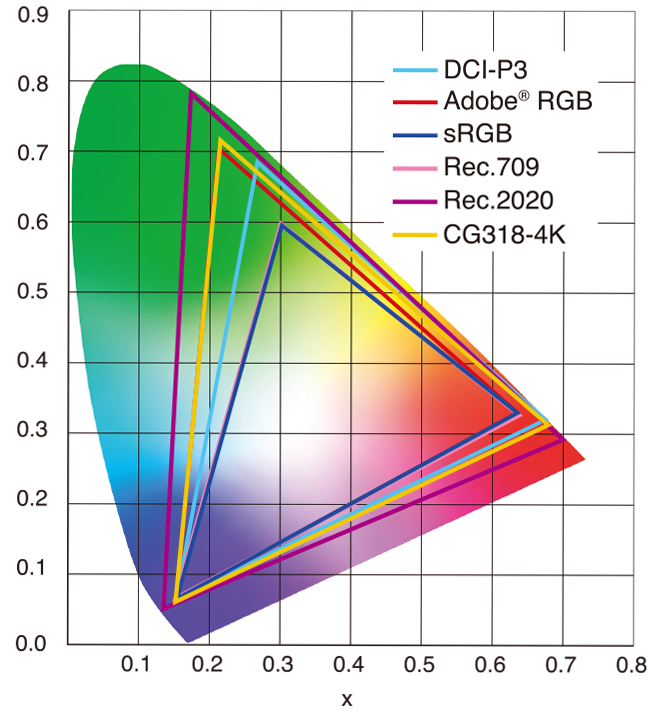

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K) | D65 (6504 K), same as sRGB |
| Primaries (x, y) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) |
| Gamut size | Triangle on the CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: roughly γ 2.2 overall, with a linear toe | Piecewise camera OETF (0.45 power with a linear toe); displays decode with the BT.1886 pure power law, γ = 2.4 (often approximated as 2.2 in practice) |
| Matrix coefficients | N/A (pure RGB usage) | Y′ = 0.2126 R′ + 0.7152 G′ + 0.0722 B′ (Rec. 709 matrix) |
| Typical bit depth | 8-bit/channel (with 16-bit variants) | 8-bit/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ 16–235, Cb/Cr 16–240) |
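The rows on transfer curve, matrix coefficients and color range can be checked with a few lines of Python. This is a minimal sketch, not taken from the original post: the function names are made up for illustration, the sRGB constants are the standard piecewise ones, and the Rec. 709 display decode is assumed to be the pure 2.4 power law.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value in [0, 1] with the piecewise sRGB curve."""
    if v <= 0.04045:
        return v / 12.92                      # linear toe
    return ((v + 0.055) / 1.055) ** 2.4       # power segment


def linear_to_srgb(lin: float) -> float:
    """Encode a linear-light value in [0, 1] with the piecewise sRGB curve."""
    if lin <= 0.0031308:
        return lin * 12.92
    return 1.055 * lin ** (1 / 2.4) - 0.055


def gamma_to_linear(v: float, gamma: float = 2.4) -> float:
    """Decode with a pure power law (assumed display gamma of 2.4)."""
    return v ** gamma


def rec709_luma(r: float, g: float, b: float) -> float:
    """Weighted sum using the Rec. 709 matrix coefficients."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def luma_to_studio_8bit(y: float) -> int:
    """Map luma in [0, 1] to the 8-bit studio range 16..235."""
    return round(16 + 219 * y)


# The same code value decodes to different light levels under each curve.
mid_srgb = srgb_to_linear(0.5)
mid_gamma = gamma_to_linear(0.5)
print(f"sRGB decode of 0.5: {mid_srgb:.4f}, gamma 2.4 decode of 0.5: {mid_gamma:.4f}")
print("studio-range black/white:", luma_to_studio_8bit(0.0), luma_to_studio_8bit(1.0))
```

Because the primaries and white point match, the practical difference between an sRGB image and a Rec. 709 video frame comes down to the transfer curve and the code-value range, which is what the two decode functions and the range mapping illustrate.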

In 2025 I decided to start learning how to code, so I installed Visual Studio and started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground, and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program.

And here we are after roughly 8 months of learning programming. This is Galaxy Engine, a simulation program I have been making ever since I started my learning journey. It can currently simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves. It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, Fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
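For readers curious about the "Morton curves" mentioned above, here is a minimal Python sketch of a 2D Morton (Z-order) key. It is not taken from Galaxy Engine (which is written in C++); the names `part1by1` and `morton2d` and the 16-bit grid resolution are assumptions made for illustration. The idea is that interleaving the bits of quantized x and y coordinates gives a single integer, and sorting particles by that integer tends to keep spatial neighbours close together in memory, which helps when building a Barnes-Hut style tree.

```python
def part1by1(n: int) -> int:
    """Spread the lower 16 bits of n so there is a zero bit between each."""
    n &= 0x0000FFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton2d(x: int, y: int) -> int:
    """Interleave the bits of x (even positions) and y (odd positions)."""
    return part1by1(x) | (part1by1(y) << 1)


# Hypothetical particles on a small integer grid, sorted along the Z-order curve.
particles = [(3, 3), (0, 1), (2, 0), (1, 1), (0, 0), (1, 0)]
ordered = sorted(particles, key=lambda p: morton2d(p[0], p[1]))
print(ordered)
```

In a real simulation the floating-point positions would first be quantized to grid cells; that step is left out here to keep the sketch short.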

When you’re working with binary data in Python—whether that’s image bytes, network payloads, or any in-memory binary stream—you often need a file-like interface without touching the disk. That’s where BytesIO from the built-in io module comes in handy. It lets you treat a bytes buffer as if it were a file.

What is BytesIO?

BytesIO is a class in the built-in io module. It behaves like a file opened in binary mode ('rb'/'wb'), but data lives in RAM rather than on disk.

Why use BytesIO?

It supports the standard file methods (read(), write(), seek(), etc.), so libraries that expect a file-like object (e.g. requests) will work with BytesIO.

    from io import BytesIO

    # Create a BytesIO buffer
    buffer = BytesIO()

    # Write some binary data
    buffer.write(b'Hello, \xF0\x9F\x98\x8A')  # includes a smiley emoji in UTF-8

    # Retrieve the entire contents
    data = buffer.getvalue()
    print(data)                  # b'Hello, \xf0\x9f\x98\x8a'
    print(data.decode('utf-8'))  # Hello, 😊

    # Always close when done
    buffer.close()
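As a further illustration of the "file-like interface without touching the disk" idea, the sketch below hands a BytesIO buffer to the standard-library zipfile module, which normally expects a file. The helper names `zip_in_memory` and `unzip_in_memory` and the sample file names are made up for this example.

```python
import zipfile
from io import BytesIO


def zip_in_memory(files: dict[str, bytes]) -> bytes:
    """Build a ZIP archive entirely in RAM and return its raw bytes."""
    buffer = BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as zf:
        for name, payload in files.items():
            zf.writestr(name, payload)
    # ZipFile does not close a file object it was handed, so the buffer is still usable.
    return buffer.getvalue()


def unzip_in_memory(blob: bytes) -> dict[str, bytes]:
    """Read every member of a ZIP archive held in a bytes object."""
    with zipfile.ZipFile(BytesIO(blob)) as zf:
        return {name: zf.read(name) for name in zf.namelist()}


archive = zip_in_memory({"hello.txt": b"Hello", "data.bin": bytes(range(4))})
restored = unzip_in_memory(archive)
print(sorted(restored))

# seek() and read() work as they do on a real file.
stream = BytesIO(b"abcdef")
stream.seek(2)          # move the cursor to byte offset 2
chunk = stream.read(3)  # read three bytes from there
print(chunk)
```

Using `with BytesIO() as buffer:` is an alternative to calling `close()` by hand when the buffer is only needed inside one block.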

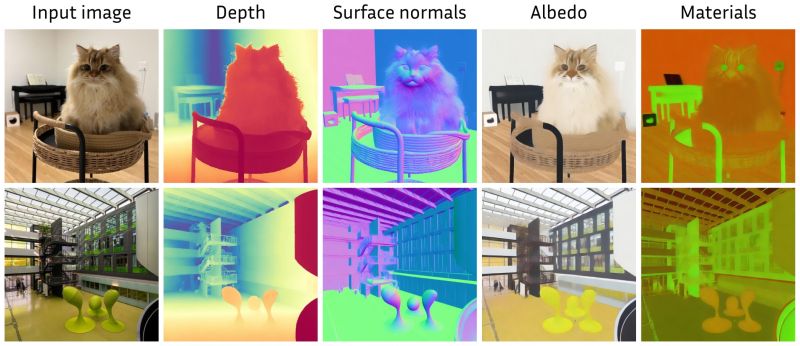

Marigold repurposes Stable Diffusion for dense prediction tasks such as monocular depth estimation and surface normal prediction, delivering a level of detail often missing even in top discriminative models.

Key aspects that make it great:

– Reuses the original VAE and only lightly fine-tunes the denoising UNet

– Trained on just tens of thousands of synthetic image–modality pairs

– Runs on a single consumer GPU (e.g., RTX 4090)

– Zero-shot generalization to real-world, in-the-wild images

https://mlhonk.substack.com/p/31-marigold

https://arxiv.org/pdf/2505.09358

https://marigoldmonodepth.github.io/

https://runwayml.com/research/introducing-runway-aleph

Generate New Camera Angles

Generate the Next Shot

Use Any Style to Transfer to a Video

Change Environments, Locations, Seasons and Time of Day

Add Things to a Scene

Remove Things from a Scene

Change Objects in a Scene

Apply the Motion of a Video to an Image

Alter a Character’s Appearance

Recolor Elements of a Scene

Relight Shots

Green Screen Any Object, Person or Situation

https://github.com/mwkm/atoMeow

https://www.shadertoy.com/view/7s3XzX

This demo was created for coders who are familiar with Processing, this awesome creative coding platform. You can quickly modify the code to work with video, or stipple your own Processing drawings by turning them into a PImage and running the simulation. This demo code also serves as a reference implementation of my article Blue noise sampling using an N-body simulation-based method. If you are interested in 2.5D, you can mod the code to achieve what I discussed in this artist-friendly article.

Convert your video to dotted noise.

What’s Included:

Here’s what to master in Clean Code Practices:

🔹 Code Readability & Simplicity – Use meaningful names, write short functions, follow SRP, flatten logic, and remove dead code.

→ Clarity is a feature.

🔹 Function & Class Design – Limit parameters, favor pure functions, small classes, and composition over inheritance.

→ Structure drives scalability.

🔹 Testing & Maintainability – Write readable unit tests, avoid over-mocking, test edge cases, and refactor with confidence.

→ Test what matters.

🔹 Code Structure & Architecture – Organize by features, minimize global state, avoid god objects, and abstract smartly.

→ Architecture isn’t just backend.

🔹 Refactoring & Iteration – Apply the Boy Scout Rule, DRY, KISS, and YAGNI principles regularly.

→ Refactor like it’s part of development.

🔹 Robustness & Safety – Validate early, handle errors gracefully, avoid magic numbers, and favor immutability.

→ Safe code is future-proof.

🔹 Documentation & Comments – Let your code explain itself. Comment why, not what, and document at the source.

→ Good docs reduce team friction.

🔹 Tooling & Automation – Use linters, formatters, static analysis, and CI reviews to automate code quality.

→ Let tools guard your gates.

🔹 Final Review Practices – Review, refactor nearby code, and avoid cleverness in the name of brevity.

→ Readable code is better than smart code.
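A few of the points above (meaningful names, no magic numbers, validating early, pure functions) can be shown in one small Python sketch. The function `frames_for_duration` and the limits `MIN_FPS` and `MAX_FPS` are hypothetical, picked only to illustrate the practices.

```python
# Named constants instead of magic numbers scattered through the code.
MIN_FPS = 1
MAX_FPS = 240


def frames_for_duration(seconds: float, fps: int) -> int:
    """Return how many whole frames cover `seconds` at `fps`.

    Pure function: the result depends only on the arguments and nothing
    outside the function is modified.
    """
    # Validate early so bad input fails loudly at the boundary.
    if seconds < 0:
        raise ValueError(f"seconds must be non-negative, got {seconds}")
    if not MIN_FPS <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between {MIN_FPS} and {MAX_FPS}, got {fps}")
    return round(seconds * fps)


print(frames_for_duration(2.5, 24))
```

A readable unit test for it is then a one-liner per case, for example checking that 2.5 seconds at 24 fps gives 60 frames and that a negative duration raises `ValueError`.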

I ran Steamboat Willie (now public domain) through Flux Kontext to reimagine it as a 3D-style animated piece. Instead of going the polished route with something like W.A.N. 2.1 for full image-to-video generation, I leaned into the raw, handmade vibe that comes from converting each frame individually. It gave it a kind of stop-motion texture, imperfect, a bit wobbly, but full of character.

Our human-centric dense prediction model delivers high-quality, detailed (depth) results while achieving remarkable efficiency, running orders of magnitude faster than competing methods, with inference speeds as low as 21 milliseconds per frame (the large multi-task model on an NVIDIA A100). It reliably captures a wide range of human characteristics under diverse lighting conditions, preserving fine-grained details such as hair strands and subtle facial features. This demonstrates the model’s robustness and accuracy in complex, real-world scenarios.

https://microsoft.github.io/DAViD

The state of the art in human-centric computer vision achieves high accuracy and robustness across a diverse range of tasks. The most effective models in this domain have billions of parameters, thus requiring extremely large datasets, expensive training regimes, and compute-intensive inference. In this paper, we demonstrate that it is possible to train models on much smaller but high-fidelity synthetic datasets, with no loss in accuracy and higher efficiency. Using synthetic training data provides us with excellent levels of detail and perfect labels, while providing strong guarantees for data provenance, usage rights, and user consent. Procedural data synthesis also provides us with explicit control on data diversity, that we can use to address unfairness in the models we train. Extensive quantitative assessment on real input images demonstrates accuracy of our models on three dense prediction tasks: depth estimation, surface normal estimation, and soft foreground segmentation. Our models require only a fraction of the cost of training and inference when compared with foundational models of similar accuracy.


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.