
Category: hardware

-

Google Gemini Robotics

For safety considerations, Google mentions a “layered, holistic approach” that maintains traditional robot safety measures like collision avoidance and force limitations. The company describes developing a “Robot Constitution” framework inspired by Isaac Asimov’s Three Laws of Robotics and releasing a dataset unsurprisingly called “ASIMOV” to help researchers evaluate safety implications of robotic actions.

This new ASIMOV dataset represents Google’s attempt to create standardized ways to assess robot safety beyond physical harm prevention. The dataset appears designed to help researchers test how well AI models understand the potential consequences of actions a robot might take in various scenarios. According to Google’s announcement, the dataset will “help researchers to rigorously measure the safety implications of robotic actions in real-world scenarios.”

-

Lumotive Light Control Metasurface – This Tiny Chip Replaces Bulky Optics & Mechanical Mirrors

Programmable Optics for LiDAR and 3D Sensing: How Lumotive’s LCM is Changing the Game

For decades, LiDAR and 3D sensing systems have relied on mechanical mirrors and bulky optics to direct light and measure distance. But at CES 2025, Lumotive unveiled a breakthrough—a semiconductor-based programmable optic that removes the need for moving parts altogether.

The Problem with Traditional LiDAR and Optical Systems

LiDAR and 3D sensing systems work by sending out light and measuring when it returns, creating a precise depth map of the environment. However, traditional systems have relied on physically moving mirrors and lenses, which introduce several limitations:

- Size and weight – Bulky components make integration difficult.

- Complexity – Mechanical parts are prone to failure and expensive to produce.

- Speed limitations – Physical movement slows down scanning and responsiveness.

To bring high-resolution depth sensing to wearables, smart devices, and autonomous systems, a new approach is needed.
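The ranging principle described above (send out light, time its return) reduces to one line of arithmetic. The pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. Here is a minimal sketch. It assumes the vacuum value of c (light is slightly slower in air) and ignores detector and timing-circuit effects. The function names are hypothetical:

```python
# Speed of light in vacuum, m/s (assumption: air is treated as vacuum here).
C = 299_792_458.0

def tof_distance(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a light pulse: d = c * t / 2."""
    return C * round_trip_s / 2

def round_trip_time(distance_m: float) -> float:
    """Inverse: how long a pulse takes to reach a target and come back."""
    return 2 * distance_m / C

# A target 100 m away returns its echo after roughly 667 ns.
print(f"{round_trip_time(100.0) * 1e9:.1f} ns")
# Each nanosecond of timing error corresponds to about 15 cm of range error.
print(f"{tof_distance(1e-9) * 100:.1f} cm")
```

The nanosecond-to-15-cm relationship explains why the timing resolution of a LiDAR receiver directly limits its range resolution.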

Enter the Light Control Metasurface (LCM)

Lumotive’s Light Control Metasurface (LCM) replaces mechanical mirrors with a semiconductor-based optical chip. This lets LiDAR and 3D sensing systems steer light electronically, under software control, instead of physically moving optics. Lumotive highlights several advantages:

- No moving parts – Increased durability and reliability

- Ultra-compact form factor – Fits into small devices and wearables

- Real-time reconfigurability – Optics can adapt instantly to changing environments

- Energy-efficient scanning – Focuses on relevant areas, saving power
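Counting samples makes the energy-efficient scanning point easier to see. With electronic steering, a sensor is not locked into a uniform raster. It can sample a coarse grid across the whole field of view and a fine grid only inside a region of interest. The sketch below is a hypothetical illustration. The `scan_pattern` helper, the field of view, and the step sizes are invented for the example and are not Lumotive's API or specifications:

```python
def grid(lo: float, hi: float, step: float) -> list[float]:
    """Evenly spaced angles from lo to hi inclusive."""
    n = int(round((hi - lo) / step))
    return [lo + i * step for i in range(n + 1)]

def scan_pattern(h_fov, v_fov, coarse_step, roi=None, fine_step=None):
    """Return sorted (azimuth, elevation) beam directions in degrees.

    h_fov, v_fov: (min, max) angles of the full field of view.
    roi: optional (az_min, az_max, el_min, el_max) region sampled at fine_step.
    """
    points = {(az, el) for az in grid(*h_fov, coarse_step) for el in grid(*v_fov, coarse_step)}
    if roi is not None:
        az0, az1, el0, el1 = roi
        points |= {(az, el) for az in grid(az0, az1, fine_step) for el in grid(el0, el1, fine_step)}
    return sorted(points)

# Hypothetical 120 x 30 degree field of view: coarse 5-degree grid everywhere,
# 1-degree grid only around a tracked object near the center.
adaptive = scan_pattern((-60, 60), (-15, 15), 5, roi=(-10, 10, -5, 5), fine_step=1)
uniform = scan_pattern((-60, 60), (-15, 15), 1)
print(len(adaptive), len(uniform))  # 391 3751
```

Both patterns resolve the tracked region at 1 degree. The adaptive pattern fires roughly a tenth as many beams, which illustrates how a sensor can save power by concentrating on the relevant areas.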

How Does it Work?

LCM technology controls the direction of light with a programmable metasurface, a flat layer of sub-wavelength optical elements whose response can be tuned electronically. Traditional optics require physical movement. Lumotive’s approach instead redirects light with software-controlled precision.

This means:

- No mechanical delays – Everything happens at electronic speeds.

- AI-enhanced tracking – The sensor can focus only on relevant objects.

- Scalability – The same technology can be adapted for industrial, automotive, AR/VR, and smart city applications.
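How can a flat chip redirect light with no moving parts? Programmable metasurfaces and optical phased arrays generally steer a beam by imposing a linear phase gradient across the aperture. By the generalized Snell's law, that gradient tilts a normally incident beam to sin(θ) = (λ / 2π) × dφ/dx. The sketch below uses generic phased-array math and is not Lumotive's published design. The 905 nm wavelength, 400 nm element pitch, and 64-element array are illustrative assumptions. The code computes the per-element phases for a target angle, then checks the result by scanning the far-field array factor for its peak:

```python
import cmath
import math

def phase_profile(theta_deg, wavelength_nm, pitch_nm, n_elements):
    """Per-element phase (radians, wrapped to [0, 2*pi)) for a linear phase
    gradient that tilts a normally incident beam by theta_deg."""
    k = 2 * math.pi / wavelength_nm                           # free-space wavenumber
    step = k * pitch_nm * math.sin(math.radians(theta_deg))  # phase step per element
    return [(i * step) % (2 * math.pi) for i in range(n_elements)]

def array_factor(phases, theta_deg, wavelength_nm, pitch_nm):
    """Far-field amplitude of a uniform 1-D array in direction theta_deg."""
    k = 2 * math.pi / wavelength_nm
    s = math.sin(math.radians(theta_deg))
    return abs(sum(cmath.exp(1j * (p - k * i * pitch_nm * s)) for i, p in enumerate(phases)))

def peak_direction(phases, wavelength_nm, pitch_nm):
    """Brute-force search for the brightest direction, -60 to +60 degrees in 0.1 degree steps."""
    angles = [a / 10 for a in range(-600, 601)]
    return max(angles, key=lambda t: array_factor(phases, t, wavelength_nm, pitch_nm))

phases = phase_profile(12.0, wavelength_nm=905, pitch_nm=400, n_elements=64)
print(peak_direction(phases, wavelength_nm=905, pitch_nm=400))  # 12.0
```

Changing the target angle only changes the numbers written to the elements, so the beam can be steered at electronic speeds. Keeping the pitch below half the wavelength (here 400 nm < 452.5 nm) avoids grating lobes, which are spurious copies of the beam.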

Live Demo: Real-Time 3D Sensing

At CES 2025, Lumotive showcased how their LCM-enabled sensor can scan a room in real time, creating an instant 3D point cloud. Unlike traditional LiDAR, which has a fixed scan pattern, this system can dynamically adjust to track people, objects, and even gestures on the fly.

This could be a significant step forward for AI-powered perception systems. Sensors could direct their attention to what matters in a scene instead of scanning everything uniformly.
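Each return in a live point cloud like this one starts out as a beam direction plus a measured range. Turning it into an (x, y, z) point is a spherical-to-Cartesian conversion. Here is a minimal sketch. It assumes azimuth is measured in the horizontal plane from the sensor's forward axis and elevation is measured up from that plane. Axis conventions vary between sensors, and the function names are hypothetical:

```python
import math

def to_point(azimuth_deg: float, elevation_deg: float, range_m: float) -> tuple[float, float, float]:
    """Convert one return (direction + range) into sensor-frame x (forward), y (left), z (up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))

def to_point_cloud(returns):
    """returns: iterable of (azimuth_deg, elevation_deg, range_m) tuples."""
    return [to_point(az, el, r) for az, el, r in returns]

cloud = to_point_cloud([(0, 0, 10.0), (90, 0, 5.0), (30, 10, 4.0)])
for x, y, z in cloud:
    print(f"{x:6.2f} {y:6.2f} {z:6.2f}")
```

The conversion is the same whether the directions come from a fixed raster or from a pattern that follows a moving person.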

Who Needs This Technology?

Lumotive’s programmable optics have the potential to disrupt multiple industries, including:

- Automotive – Advanced LiDAR for autonomous vehicles

- Industrial automation – Precision 3D scanning for robotics and smart factories

- Smart cities – Real-time monitoring of public spaces

- AR/VR/XR – Depth-aware tracking for immersive experiences

The Future of 3D Sensing Starts Here

Lumotive’s Light Control Metasurface represents a fundamental shift in how we think about optics and 3D sensing. By bringing programmability to light steering, it opens up new possibilities for faster, smarter, and more efficient depth-sensing technologies.

With traditional LiDAR now facing a serious challenge, the question is: Who will be the first to integrate programmable optics into their designs?

-

Micro LED displays

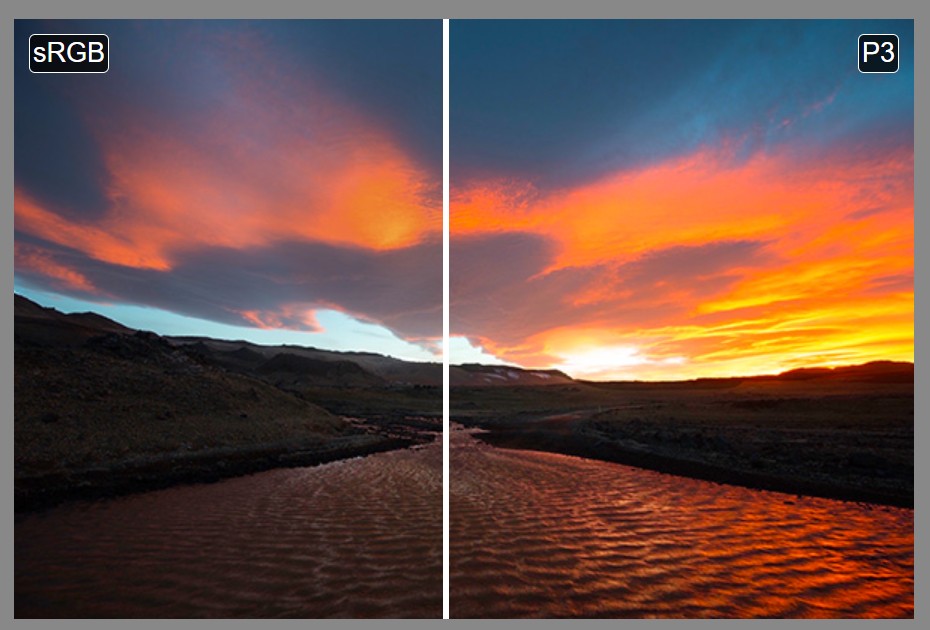

Micro LED displays are a cutting-edge technology that promises significant improvements over existing display methods like OLED and LCD. By using tiny, individual LEDs for each pixel, these displays can deliver exceptional brightness, contrast, and energy efficiency. Their inherent durability and superior performance make them an attractive option for high-end consumer electronics, wearable devices, and even large-scale display panels.

The technology is widely seen as the future of displays, combining high-quality visuals with low power consumption and long-lasting performance.

Despite these advantages, micro LED displays face substantial manufacturing hurdles that have slowed their mass-market adoption. Production requires precisely transferring and aligning millions of microscopic LEDs onto a substrate, a task that is both technically challenging and cost-intensive. Problems with yield, scalability, and quality control persist. These make it difficult to reach the economies of scale needed for widespread commercial use. Industry leaders are investing heavily in research and development to overcome these obstacles, and the technology remains on the cusp of becoming a viable alternative to current displays.
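Counting the LEDs shows why yield is the sticking point. A 3840 × 2160 (4K) panel with separate red, green, and blue micro LEDs per pixel needs about 24.9 million of them. The sketch below uses a deliberately simplified model in which each transfer succeeds independently with the same probability. The yield figures are illustrative assumptions, not reported industry numbers, and real production lines add redundancy and repair steps. Under that model, the expected number of dead subpixels works out as follows:

```python
import math

def subpixel_count(width_px: int, height_px: int, subpixels_per_px: int = 3) -> int:
    """Number of individual micro LEDs, one per red/green/blue subpixel."""
    return width_px * height_px * subpixels_per_px

def expected_defects(n_leds: int, transfer_yield: float) -> float:
    """Expected number of failed LEDs if each transfer fails independently."""
    return n_leds * (1 - transfer_yield)

def log10_prob_flawless(n_leds: int, transfer_yield: float) -> float:
    """log10 of the chance that every single LED transfers correctly.
    Computed in log space because transfer_yield ** n_leds underflows to 0.0."""
    return n_leds * math.log10(transfer_yield)

n = subpixel_count(3840, 2160)  # 24,883,200 LEDs
for y in (0.9999, 0.999999):    # illustrative per-LED transfer yields
    print(f"yield {y}: ~{expected_defects(n, y):,.0f} dead LEDs, "
          f"P(flawless) ~ 10^{log10_prob_flawless(n, y):.0f}")
```

Even at a 99.9999% per-LED yield, a flawless 4K panel is vanishingly unlikely under this model. That is why yield and quality control become barriers to scale.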

-

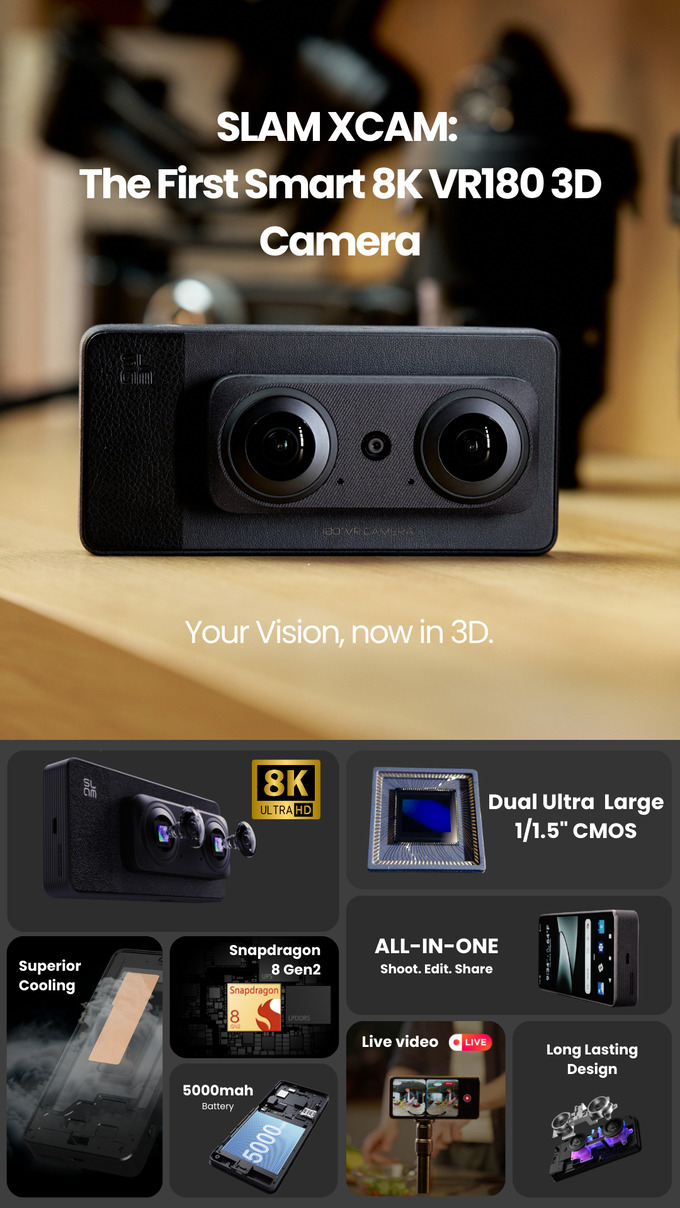

SLAM XCAM 8K VR180 3D Camera

8K 30FPS VR180 3D Video | Dual 1/1.5″ CMOS Sensors | 10-bit Color | Snapdragon 8 Gen 2 | Android 13 | 6.67″ AMOLED | 5000mAh | 100Mbps Data

-

LG 45GX990A – The world’s first bendable gaming monitor

The monitor resembles a typical thin flat screen when in its home position, but it can flex its 45-inch body to 900R curvature in the blink of an eye.

-

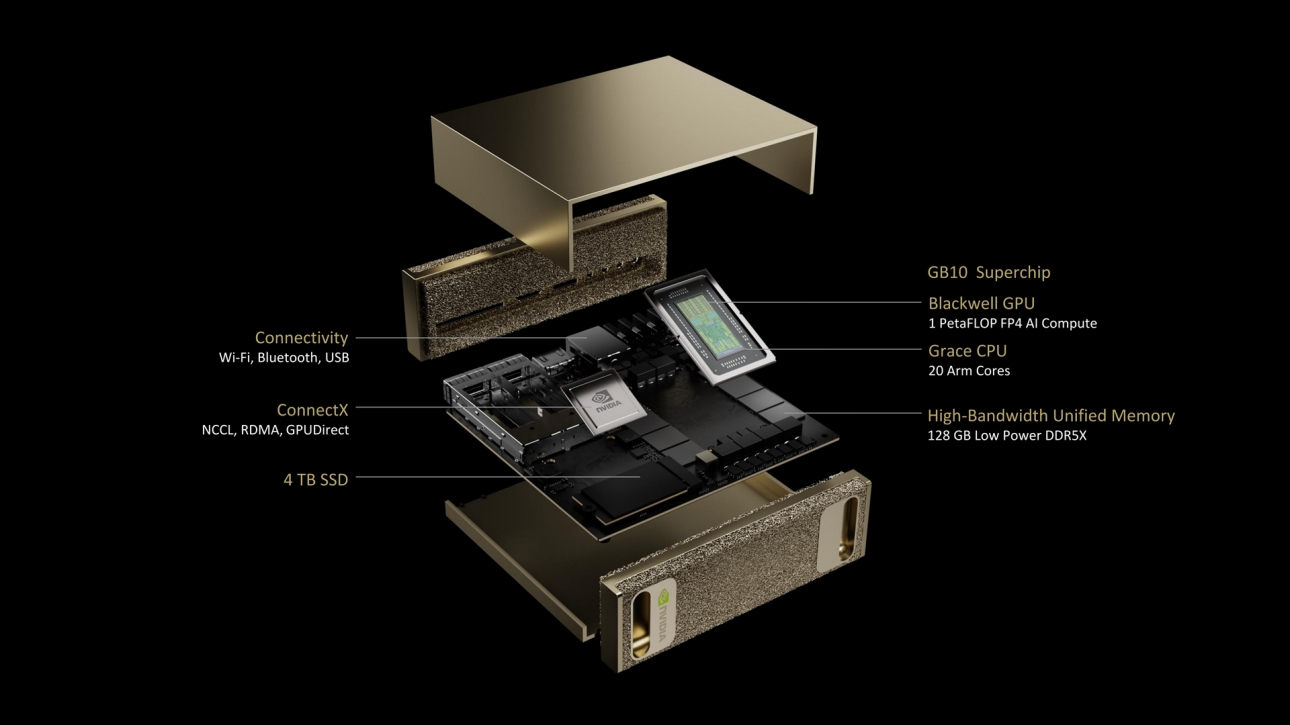

Nvidia unveils $3,000 desktop AI computer for home LLM researchers

https://arstechnica.com/ai/2025/01/nvidias-first-desktop-pc-can-run-local-ai-models-for-3000

https://www.nvidia.com/en-us/project-digits

Some smaller open-weights AI language models (such as Llama 3.1 70B, with 70 billion parameters) and various AI image-synthesis models like Flux.1 dev (12 billion parameters) could probably run comfortably on Project DIGITS, but larger open models like Llama 3.1 405B, with 405 billion parameters, may not. Given the recent explosion of smaller AI models, a creative developer could likely run quite a few interesting models on the unit.

DIGITS’ 128GB of unified RAM is notable because a high-power consumer GPU like the RTX 4090 has only 24GB of VRAM. Memory serves as a hard limit on AI model parameter size, and more memory makes room for running larger local AI models.
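Back-of-the-envelope arithmetic makes the memory limit concrete. The weights alone take roughly (parameter count) × (bytes per parameter). The sketch below counts weights only; the KV cache, activations, and runtime overhead need extra room on top. It uses decimal gigabytes and assumes common precisions of 16, 8, and 4 bits per weight. It is not an Nvidia sizing tool:

```python
# Assumed storage cost per weight at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weights_gb(params_billion: float, precision: str) -> float:
    """Approximate size of the weights in decimal GB (1e9 params * bytes/param / 1e9)."""
    return params_billion * BYTES_PER_PARAM[precision]

def fits(params_billion: float, precision: str, memory_gb: float) -> bool:
    """True if the weights alone fit; real deployments need headroom beyond this."""
    return weights_gb(params_billion, precision) <= memory_gb

models = {"Flux.1 dev": 12, "Llama 3.1 70B": 70, "Llama 3.1 405B": 405}
for name, size in models.items():
    for prec in ("fp16", "int4"):
        print(f"{name:15} {prec}: {weights_gb(size, prec):6.1f} GB | "
              f"DIGITS 128GB: {fits(size, prec, 128)} | RTX 4090 24GB: {fits(size, prec, 24)}")
```

By these numbers, a 70B model fits in 128GB only once quantized: about 35 GB at 4 bits versus 140 GB at 16 bits. Even at 4 bits, it exceeds a single 24GB card. A 405B model needs roughly 200 GB at 4 bits, which is beyond DIGITS’ 128GB. Flux.1 dev at 16 bits comes to 24 GB, right at the RTX 4090’s limit before any overhead.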

-

A Looming Threat to Bitcoin (and the Financial World) – The Risk of a Quantum Hack

Advancements in quantum computing pose a potential threat to Bitcoin’s security. Google’s recent progress with its Willow quantum-computing chip has highlighted the possibility that future quantum computers could break the elliptic-curve cryptography behind Bitcoin’s digital signatures. Attackers could then derive private keys and take funds from digital wallets, potentially causing significant devaluation.

Researchers estimate that a quantum computer capable of such decryption is likely more than a decade away. Nonetheless, the Bitcoin developer community faces the complex task of upgrading the system to incorporate quantum-resistant encryption methods. Achieving consensus within the decentralized community may be a slow process, and users would eventually need to transfer their holdings to quantum-resistant addresses to safeguard their assets.

A quantum-powered attack on Bitcoin could also spill over into traditional financial markets, possibly leading to substantial losses and a deep recession. The stakes could grow further because President-elect Donald Trump has proposed creating a strategic reserve for the government’s Bitcoin holdings.

-

Microsoft is discontinuing its HoloLens headsets

https://www.theverge.com/2024/10/1/24259369/microsoft-hololens-2-discontinuation-support

Software support for the original HoloLens headset will end on December 10th.

Microsoft’s struggles with HoloLens have been apparent over the past two years.

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.