https://www.sdiolatz.info/publications/00ImageGS.html



Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4DGS model of the human performance using both the input and the generated videos.

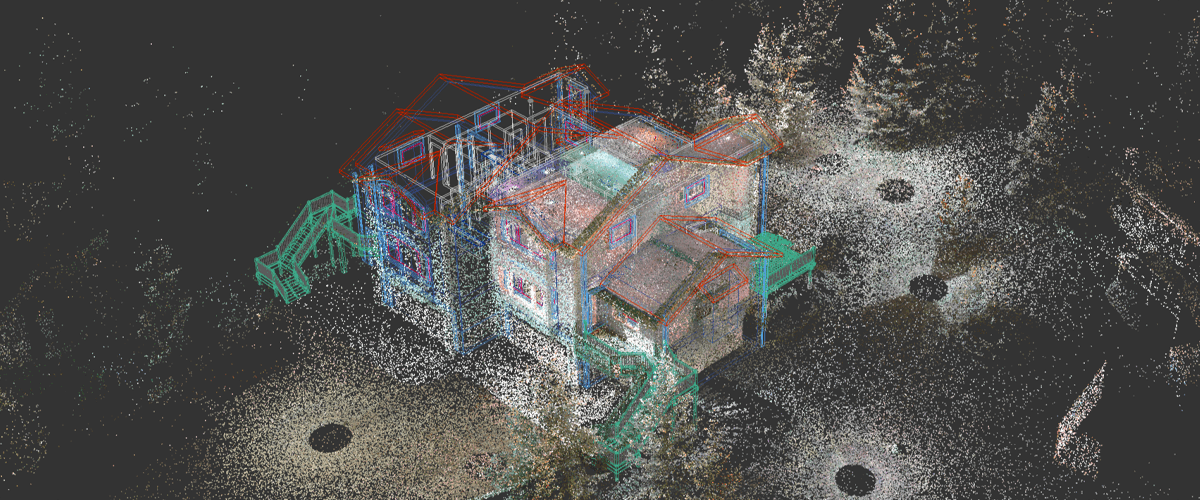

The goal was ambitious: to generate a hyper-detailed 3DGS scan from a massive dataset—20,000 drone photos at full resolution (5280x3956px). All of this on a single machine with just one RTX 4090 GPU.

What was the problem?

Most existing tools simply can’t handle this volume of data. For instance, Postshot, which is excellent for many tasks, confidently processed up to 7,000 photos but choked on 20,000—it ran for two days without even starting the model training.
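A rough back-of-the-envelope estimate helps show why this dataset is hard to handle. The sketch below uses the photo count and resolution quoted above; the 3 bytes per pixel (uncompressed 8-bit RGB) is an assumption for illustration only, since the post does not say how any tool holds images in memory.

```python
# Photo count and resolution come from the post.
WIDTH, HEIGHT = 5280, 3956
NUM_PHOTOS = 20_000
# Assumption: uncompressed 8-bit RGB, 3 bytes per pixel.
BYTES_PER_PIXEL = 3

pixels_per_photo = WIDTH * HEIGHT
total_pixels = pixels_per_photo * NUM_PHOTOS
total_bytes = total_pixels * BYTES_PER_PIXEL

print(f"{pixels_per_photo / 1e6:.1f} MP per photo")
print(f"{total_pixels / 1e9:.0f} gigapixels in total")
print(f"{total_bytes / 1e12:.2f} TB if held uncompressed")
```

Under that assumption the full set is on the order of a terabyte of raw pixel data, far more than the 24 GB of memory on a single RTX 4090.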

The Breakthrough Solution.

The real discovery was the software from GreenValley International:

https://www.greenvalleyintl.com/LiDAR360MLS

Their approach is brilliant: instead of trying to swallow the entire dataset at once, the program divides it into smaller, manageable chunks, trains each one individually, and then merges them into one giant, detailed scene. After 40 hours of rendering, we got this stunning 103-million-splat PLY result:
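The divide step can be pictured with a small sketch. This is not GreenValley's algorithm, which the post does not describe; it is one generic way to split cameras into overlapping ground tiles so that neighbouring chunks share some views. The function name, `tile_size`, and `overlap` are hypothetical, and the per-chunk training and merging steps are not shown.

```python
from collections import defaultdict

def assign_to_tiles(positions, tile_size, overlap):
    """Group camera indices by square ground tile, with an overlap margin.

    positions: list of (x, y) camera positions in metres.
    A camera is assigned to every tile whose bounds, grown by `overlap`
    on each side, contain it.
    """
    tiles = defaultdict(list)
    for idx, (x, y) in enumerate(positions):
        # Range of tile indices whose expanded bounds contain this camera.
        ix_min = int((x - overlap) // tile_size)
        ix_max = int((x + overlap) // tile_size)
        iy_min = int((y - overlap) // tile_size)
        iy_max = int((y + overlap) // tile_size)
        for ix in range(ix_min, ix_max + 1):
            for iy in range(iy_min, iy_max + 1):
                tiles[(ix, iy)].append(idx)
    return dict(tiles)

# Three cameras along a line; the middle one sits near a tile border.
cameras = [(10, 10), (95, 10), (150, 10)]
chunks = assign_to_tiles(cameras, tile_size=100, overlap=10)
print(chunks)
```

In this toy case the middle camera lands in both tiles, which is the kind of shared coverage that makes it plausible to stitch independently trained chunks back together.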

https://superhivemarket.com/products/3dgs-render-by-kiri-engine

https://github.com/Kiri-Innovation/3dgs-render-blender-addon

https://www.kiriengine.app/blender-addon/3dgs-render

The addon is a full 3DGS editing and rendering suite for Blender. 3DGS scans can be created from .OBJ files, or 3DGS .PLY files can be imported as mesh objects, offering two distinct workflows. The created objects can be manipulated, animated and rendered inside Blender. Alternatively, Blender can be used as an intermediate editing and painting tool, with the results exportable to other 3DGS software and viewers.

https://xdimlab.github.io/GIFStream/

Immersive video offers a 6-DoF free-viewing experience, potentially playing a key role in future video technology. Recently, 4D Gaussian Splatting has gained attention as an effective approach for immersive video due to its high rendering efficiency and quality, though maintaining quality with manageable storage remains challenging. To address this, we introduce GIFStream, a novel 4D Gaussian representation using a canonical space and a deformation field enhanced with time-dependent feature streams. These feature streams enable complex motion modeling and allow efficient compression by leveraging their motion-awareness and temporal correspondence. Additionally, we incorporate both temporal and spatial compression networks for end-to-end compression.

Experimental results show that GIFStream delivers high-quality immersive video at 30 Mbps, with real-time rendering and fast decoding on an RTX 4090.
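To put the 30 Mbps figure in storage terms, a quick conversion helps. The sketch assumes the usual convention that 1 Mbps is 10^6 bits per second and reports megabytes as 10^6 bytes; the function name is hypothetical.

```python
BITRATE_MBPS = 30  # figure quoted above

def stream_size_megabytes(seconds, bitrate_mbps=BITRATE_MBPS):
    """Data volume in megabytes (10^6 bytes) for a stream of the given length."""
    return bitrate_mbps * seconds / 8  # 8 bits per byte

print(stream_size_megabytes(1))     # one second of video
print(stream_size_megabytes(60))    # one minute
print(stream_size_megabytes(3600))  # one hour
```

That works out to 3.75 MB per second, or 225 MB per minute of immersive video at the quoted rate.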

https://github.com/nvpro-samples/vk_gaussian_splatting

vk_gaussian_splatting is a new Vulkan-based sample that demonstrates real-time Gaussian splatting, a cutting-edge volume rendering technique that enables highly efficient representations of radiance fields. It is the latest addition to the NVIDIA DesignWorks Samples.

As point cloud processing becomes increasingly important across industries, I wanted to share the most powerful open-source tools I’ve used in my projects:

1️⃣ Open3D (http://www.open3d.org/)

The gold standard for point cloud processing in Python. Incredible visualization capabilities, efficient data structures, and comprehensive geometry processing functions. Perfect for both research and production.

2️⃣ PCL – Point Cloud Library (https://pointclouds.org/)

The C++ powerhouse of point cloud processing. Extensive algorithms for filtering, feature estimation, surface reconstruction, registration, and segmentation. Steep learning curve but unmatched performance.

3️⃣ PyTorch3D (https://pytorch3d.org/)

Facebook’s differentiable 3D library. Seamlessly integrates point cloud operations with deep learning. Essential if you’re building neural networks for 3D data.

4️⃣ PyTorch Geometric (https://lnkd.in/eCutwTuB)

Specializes in graph neural networks for point clouds. Implements cutting-edge architectures like PointNet, PointNet++, and DGCNN with optimized performance.

5️⃣ Kaolin (https://lnkd.in/eyj7QzCR)

NVIDIA’s 3D deep learning library. Offers differentiable renderers and accelerated GPU implementations of common point cloud operations.

6️⃣ CloudCompare (https://lnkd.in/emQtPz4d)

More than just visualization. This desktop application lets you perform complex processing without writing code. Perfect for quick exploration and comparison.

7️⃣ LAStools (https://lnkd.in/eRk5Bx7E)

The industry standard for LiDAR processing. Fast, scalable, and memory-efficient tools specifically designed for massive aerial and terrestrial LiDAR data.

8️⃣ PDAL – Point Data Abstraction Library (https://pdal.io/)

Think of it as “GDAL for point clouds.” Powerful for building processing pipelines and handling various file formats and coordinate transformations.

9️⃣ Open3D-ML (https://lnkd.in/eWnXufgG)

Extends Open3D with machine learning capabilities. Implementations of state-of-the-art 3D deep learning methods with consistent APIs.

🔟 MeshLab (https://www.meshlab.net/)

The Swiss Army knife for mesh processing. While primarily for meshes, its point cloud processing capabilities are excellent for cleanup, simplification, and reconstruction.
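As an illustration of the pipeline idea behind PDAL (item 8 above), here is a minimal sketch that builds a pipeline description in Python and prints it as JSON. The stage names (`readers.las`, `filters.outlier`, `filters.range`, `writers.las`) are standard PDAL stages, but the file names and parameter values are hypothetical placeholders, not a recommended configuration.

```python
import json

# Assumption: input.las and cleaned.las are placeholder file names.
pipeline = {
    "pipeline": [
        {"type": "readers.las", "filename": "input.las"},
        # Flag statistical outliers as noise (classification code 7).
        {
            "type": "filters.outlier",
            "method": "statistical",
            "mean_k": 8,
            "multiplier": 3.0,
        },
        # Keep everything except the points flagged as noise.
        {"type": "filters.range", "limits": "Classification![7:7]"},
        {"type": "writers.las", "filename": "cleaned.las"},
    ]
}

pipeline_json = json.dumps(pipeline, indent=2)
print(pipeline_json)
```

Saved to a file such as `pipeline.json`, a description like this would typically be run with `pdal pipeline pipeline.json`, assuming PDAL is installed.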

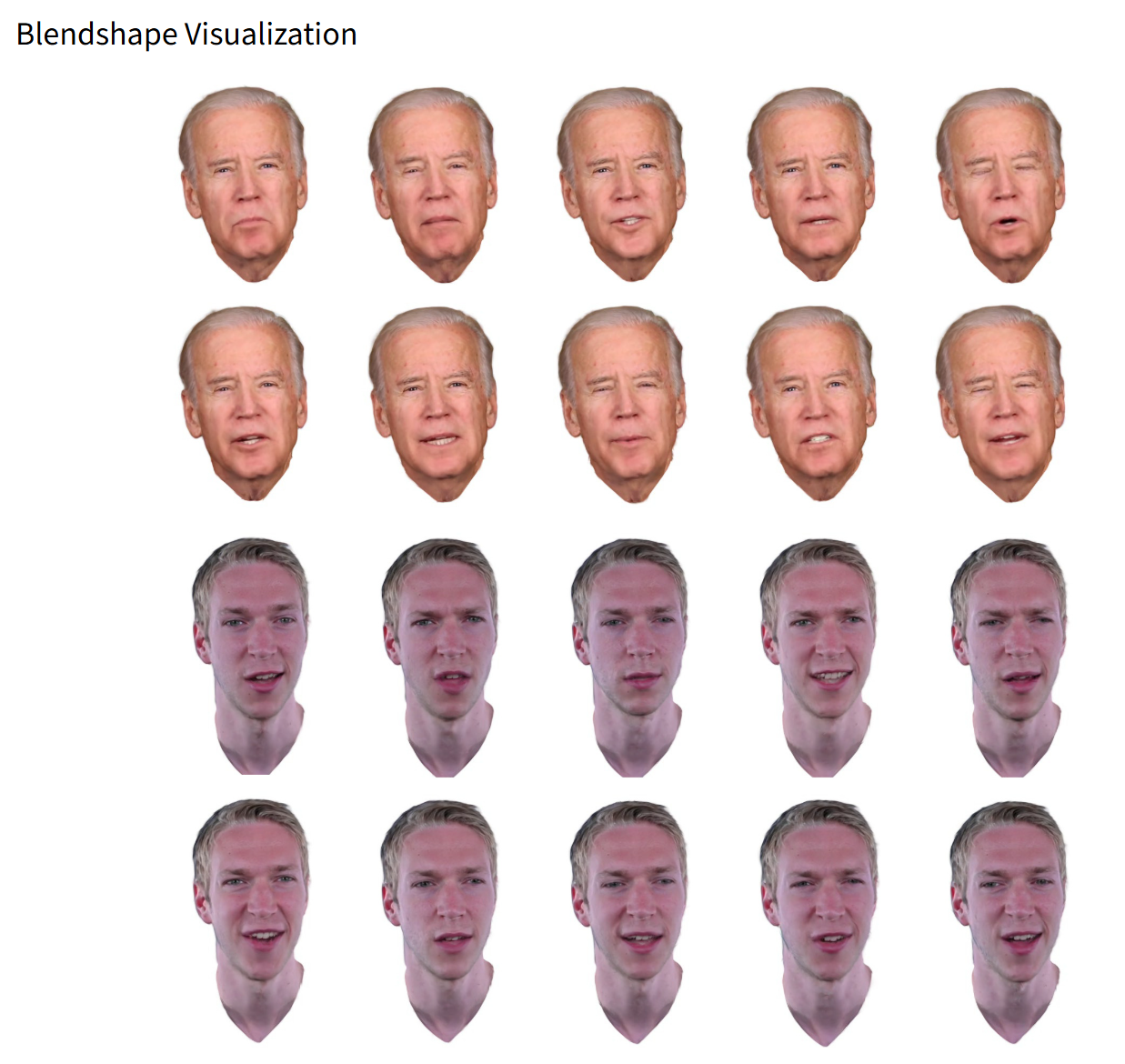

https://gapszju.github.io/RGBAvatar

A method for reconstructing photorealistic, animatable head avatars at speeds sufficient for on-the-fly reconstruction. Unlike prior approaches that utilize linear bases from 3D morphable models (3DMM) to model Gaussian blendshapes, our method maps tracked 3DMM parameters into reduced blendshape weights with an MLP, leading to a compact set of blendshape bases.
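The idea of mapping tracked 3DMM parameters to a small set of blendshape weights can be sketched in a few lines. This is not the authors' code: the layer sizes, the ReLU activation, the random weights, and the flat attribute vector are all assumptions made purely for illustration.

```python
import random

def linear(x, weights, bias):
    """y = W x + b for plain Python lists (W is a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def mlp(params_3dmm, layer1, layer2):
    """Map tracked 3DMM parameters to reduced blendshape weights."""
    hidden = [max(0.0, h) for h in linear(params_3dmm, *layer1)]  # ReLU (assumed)
    return linear(hidden, *layer2)

def blend(base, bases, weights):
    """Gaussian attributes = base + sum_k weights[k] * bases[k]."""
    out = list(base)
    for w, basis in zip(weights, bases):
        out = [o + w * b for o, b in zip(out, basis)]
    return out

def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
D, H, K, A = 6, 8, 3, 4  # illustrative sizes: 3DMM dims, hidden units, bases, attributes
layer1 = (rand_matrix(H, D), [0.0] * H)
layer2 = (rand_matrix(K, H), [0.0] * K)
base = [0.0] * A
bases = rand_matrix(K, A)

weights = mlp([0.1] * D, layer1, layer2)
attributes = blend(base, bases, weights)
print(len(weights), len(attributes))
```

The point of the sketch is the shape of the computation: a small network turns many tracked parameters into a few weights, and those weights linearly combine a compact set of bases.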

https://github.com/gapszju/RGBAvatar

For decades, LiDAR and 3D sensing systems have relied on mechanical mirrors and bulky optics to direct light and measure distance. But at CES 2025, Lumotive unveiled a breakthrough—a semiconductor-based programmable optic that removes the need for moving parts altogether.

LiDAR and 3D sensing systems work by sending out light and measuring when it returns, creating a precise depth map of the environment. However, traditional systems have relied on physically moving mirrors and lenses, which introduce several limitations:

To bring high-resolution depth sensing to wearables, smart devices, and autonomous systems, a new approach is needed.

Lumotive’s Light Control Metasurface (LCM) replaces mechanical mirrors with a semiconductor-based optical chip. This allows LiDAR and 3D sensing systems to steer light electronically, just like a processor manages data. The advantages are game-changing:

LCM technology works by controlling how light is directed using programmable metasurfaces. Unlike traditional optics that require physical movement, Lumotive’s approach enables light to be redirected with software-controlled precision.

This means:

At CES 2025, Lumotive showcased how their LCM-enabled sensor can scan a room in real time, creating an instant 3D point cloud. Unlike traditional LiDAR, which has a fixed scan pattern, this system can dynamically adjust to track people, objects, and even gestures on the fly.
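The underlying measurement, sending out light and timing its return, can be sketched as follows. This is generic time-of-flight geometry, not Lumotive's API; the function names and the axis convention (x forward, z up) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_to_range(round_trip_seconds):
    """Distance to the target: light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def beam_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert a range and steering angles into an (x, y, z) point.

    Assumed convention: x forward, y left, z up.
    """
    x = range_m * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = range_m * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)

# A return after roughly 33.4 nanoseconds corresponds to a target about 5 m away.
distance = tof_to_range(10.0 / SPEED_OF_LIGHT)
print(distance)
print(beam_to_point(distance, math.radians(30), math.radians(10)))
```

Repeating this for every steering direction yields a point cloud; an electronically steered beam can choose those directions in software rather than following a fixed mechanical sweep.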

This is a huge leap forward for AI-powered perception systems, allowing cameras and sensors to interpret their environment more intelligently than ever before.

Lumotive’s programmable optics have the potential to disrupt multiple industries, including:

Lumotive’s Light Control Metasurface represents a fundamental shift in how we think about optics and 3D sensing. By bringing programmability to light steering, it opens up new possibilities for faster, smarter, and more efficient depth-sensing technologies.

With traditional LiDAR now facing a serious challenge, the question is: Who will be the first to integrate programmable optics into their designs?

https://tobias-kirschstein.github.io/avat3r

Avat3r takes 4 input images of a person’s face and generates an animatable 3D head avatar in a single forward pass. The resulting 3D head representation can be animated at interactive rates. The entire creation process of the 3D avatar, from taking 4 smartphone pictures to the final result, can be executed within minutes.

https://www.uploadvr.com/meta-researchers-generate-photorealistic-avatars-from-just-four-selfies

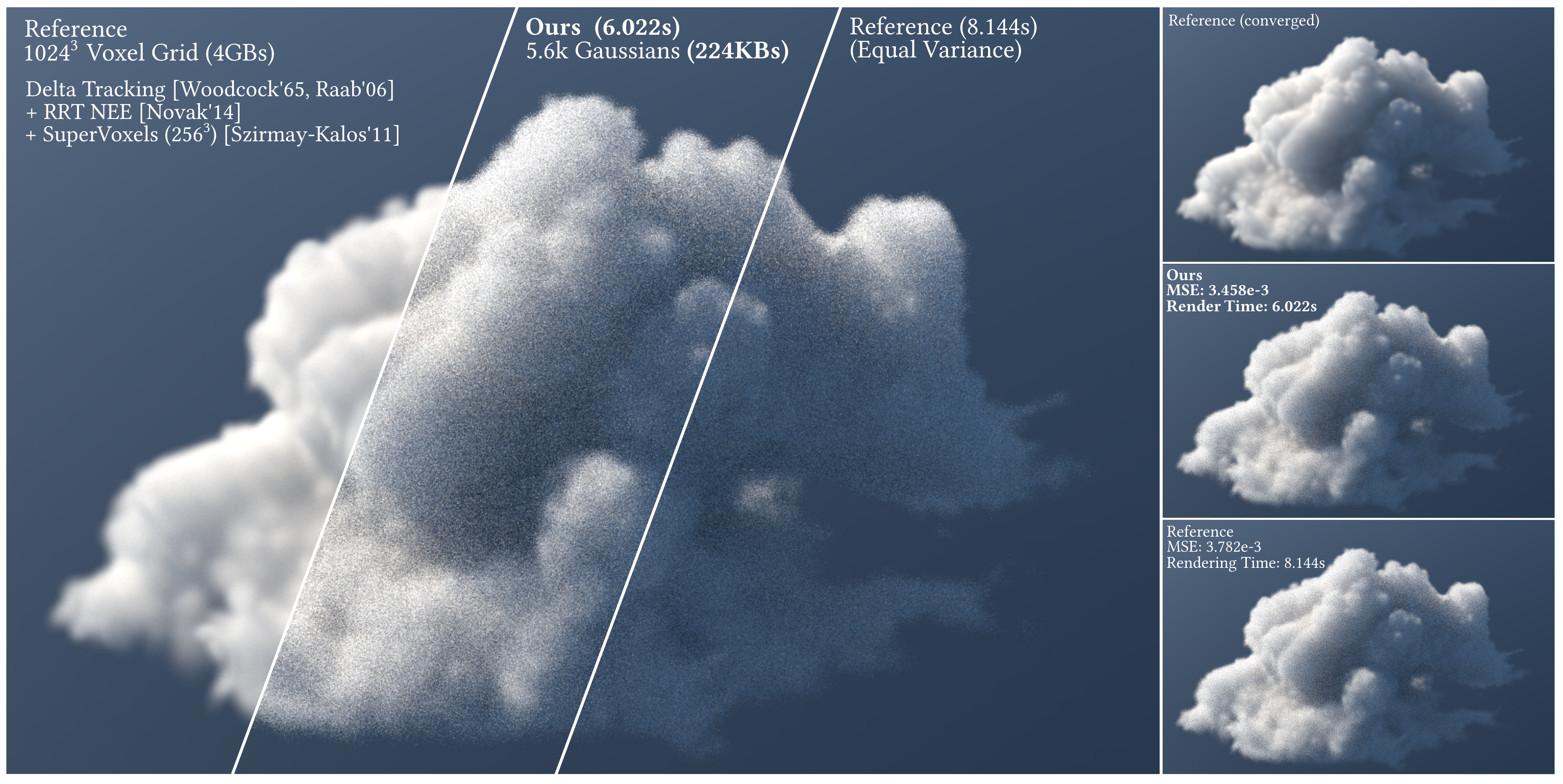

https://arcanous98.github.io/projectPages/gaussianVolumes.html

We propose a compact and efficient alternative to existing volumetric representations for rendering such as voxel grids.

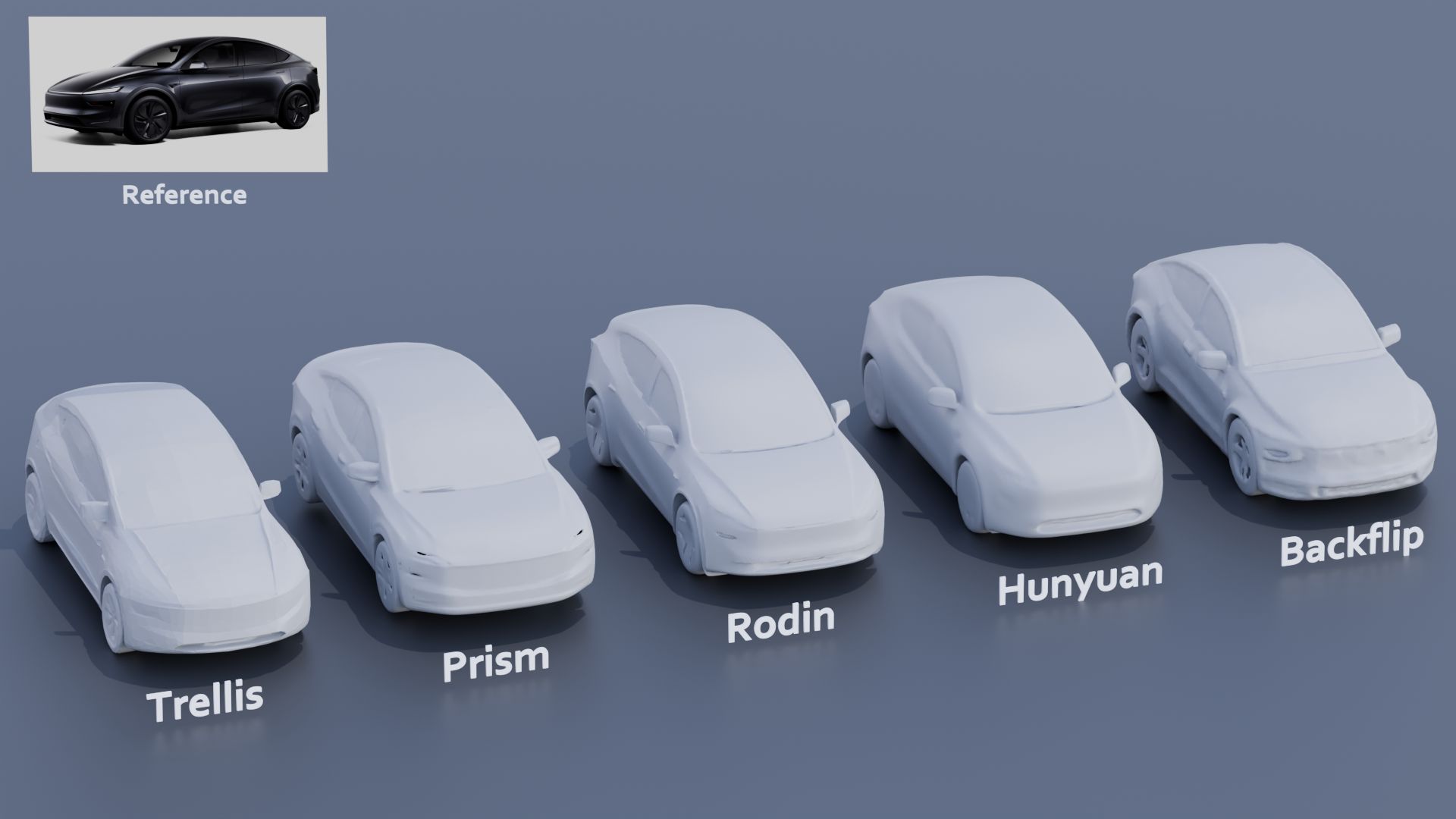

SPAR3D is a fast single-image 3D reconstructor with intermediate point cloud generation, which allows for interactive user edits and achieves state-of-the-art performance.

https://github.com/Stability-AI/stable-point-aware-3d

https://stability.ai/news/stable-point-aware-3d?utm_source=x&utm_medium=social&utm_campaign=SPAR3D

https://sonsang.github.io/dmesh2-project

An efficient differentiable mesh-based method that can effectively handle complex 2D and 3D shapes. For instance, it can be used for reconstructing complex shapes from point clouds and multi-view images.


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.