Category: production

-

Autodesk open sources RV playback tool to democratize access and drive open standards

https://github.com/AcademySoftwareFoundation/OpenRV

https://adsknews.autodesk.com/news/rv-open-source

“Autodesk is committed to helping creators envision a better world, and having access to great tools allows them do just that. So we are making RV, our Sci-Tech award-winning media review and playback software, open source. Code contributions from RV along with DNEG’s xStudio and Sony Pictures Imageworks’ itview will shape the Open Review Initiative, the Academy Software Foundation’s (ASWF) newest sandbox project to build a unified, open source toolset for playback, review, and approval. ”

-

Texel Density measurement unit

Texel density (also referred to as pixel density or texture density) is a measurement used to keep texture resolution consistent across assets throughout your entire world.

It’s measured in pixels per centimeter (e.g. 2.56px/cm) or pixels per meter (e.g. 256px/m).

https://www.beyondextent.com/deep-dives/deepdive-texeldensity
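As a rough illustration of the unit, the sketch below computes texel density for a texture edge stretched once across a surface, and the resolution needed to hit a target density. The function names are hypothetical, and it assumes the texture covers the surface exactly once (no tiling, UVs filling the 0-1 range).

```python
def texel_density(texture_px, surface_size_m):
    """Pixels per meter for a texture edge spanning a world-space length."""
    if surface_size_m <= 0:
        raise ValueError("surface size must be positive")
    return texture_px / surface_size_m


def required_resolution(target_px_per_m, surface_size_m):
    """Texture edge length in pixels needed to reach a target density."""
    return target_px_per_m * surface_size_m


# A 2048 px texture across an 8 m wall (made-up asset sizes)
density_m = texel_density(2048, 8.0)   # pixels per meter
density_cm = density_m / 100           # pixels per centimeter

print(f"{density_m} px/m = {density_cm} px/cm")
```

With these numbers the wall lands at 256px/m, which is the same density as 2.56px/cm.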

-

Mohsen Tabasi – Stable Diffusion for Houdini through DreamStudio

https://github.com/proceduralit/StableDiffusion_Houdini

https://github.com/proceduralit/StableDiffusion_Houdini/wiki/

This is a Houdini HDA that submits the render output as the init_image and, with help from PDG, lets artists easily define variations on Stable Diffusion parameters such as Sampling Method, Steps, Prompt Strength, and Noise Strength.

Right now DreamStudio is the only public server the HDA supports, so you need an account there and must connect the HDA to it.
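The idea of defining variations across parameters can be sketched in plain Python as a cartesian product of value lists, similar in spirit to a PDG wedge. This is not the HDA's code: the parameter keys and values below are illustrative assumptions, not names taken from the HDA or from DreamStudio.

```python
from itertools import product

# Hypothetical parameter values to vary (illustrative only)
wedges = {
    "sampler": ["k_euler", "ddim"],
    "steps": [20, 50],
    "prompt_strength": [7.0, 12.0],
    "noise_strength": [0.3, 0.6],
}


def expand_wedges(wedges):
    """Return one settings dict per combination of parameter values."""
    names = list(wedges)
    value_lists = (wedges[name] for name in names)
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


variations = expand_wedges(wedges)
print(len(variations), "variations, first:", variations[0])
```

Each resulting dict would correspond to one submission with the same init_image and a different parameter set.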

DreamStudio: https://beta.dreamstudio.ai/membership

-

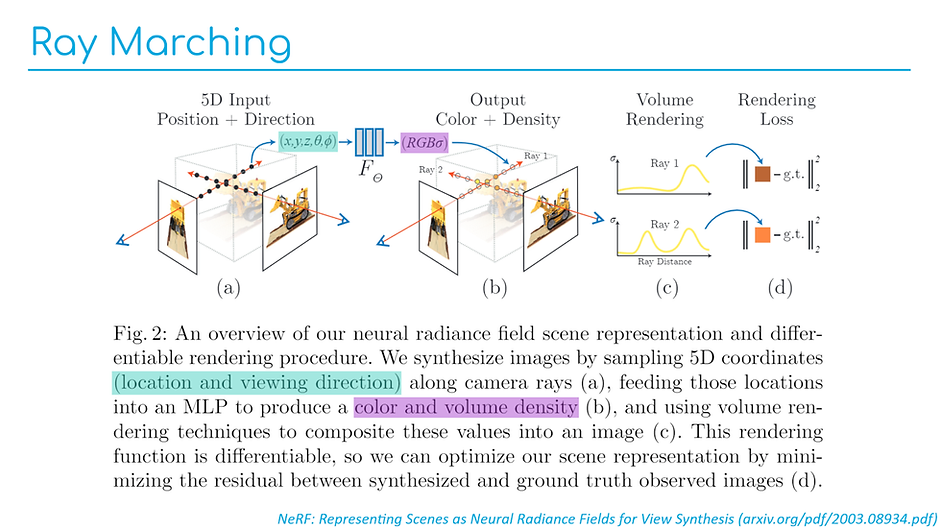

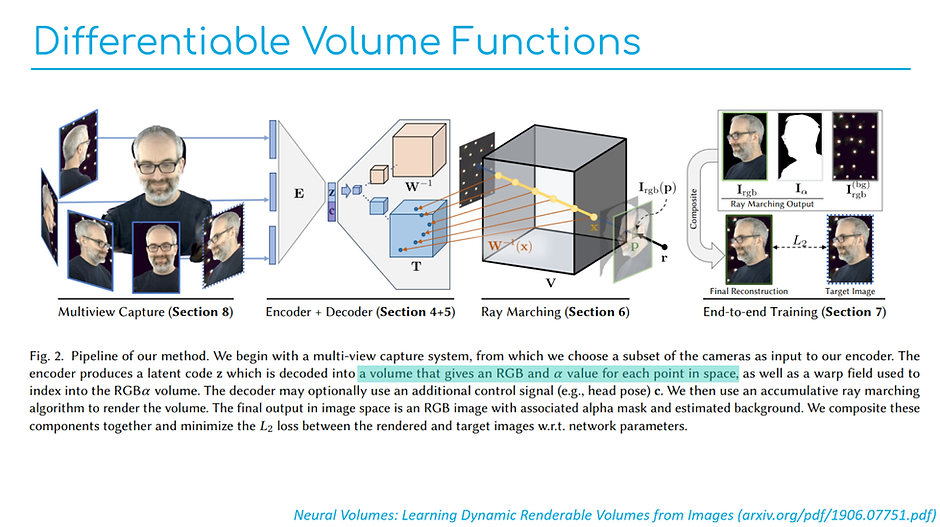

What is Neural Rendering?

https://www.zumolabs.ai/post/what-is-neural-rendering

“The key concept behind neural rendering approaches is that they are differentiable. A differentiable function is one whose derivative exists at each point in the domain. This is important because machine learning is basically the chain rule with extra steps: a differentiable rendering function can be learned with data, one gradient descent step at a time. Learning a rendering function statistically through data is fundamentally different from the classic rendering methods we described above, which calculate and extrapolate from the known laws of physics.”
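A toy sketch of "learning a rendering function one gradient descent step at a time": a single-parameter Lambertian shader whose albedo is recovered from observed intensities. This is an assumption-laden illustration rather than a neural renderer. There is no network, the observations are synthetic, and all names are hypothetical.

```python
def render(albedo, cos_theta):
    """Differentiable toy renderer: Lambertian intensity."""
    return albedo * max(0.0, cos_theta)


def loss_gradient(albedo, cos_theta, target):
    """d/d(albedo) of (render - target)^2, obtained with the chain rule."""
    shade = max(0.0, cos_theta)
    return 2.0 * (albedo * shade - target) * shade


# Synthetic observations generated with a "true" albedo of 0.6
observations = [(c, 0.6 * c) for c in (0.2, 0.5, 0.8, 1.0)]

albedo = 0.1          # initial guess
learning_rate = 0.1
for _ in range(200):
    grads = [loss_gradient(albedo, c, t) for c, t in observations]
    albedo -= learning_rate * sum(grads) / len(grads)

print(f"recovered albedo: {albedo:.4f}")
```

Because the renderer is differentiable with respect to its parameter, the loss gradient is available in closed form and the estimate converges toward the value that generated the data.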

-

Foundry Nuke Cattery – A library of open source machine learning models

The Cattery is a library of free third-party machine learning models converted to .cat files to run natively in Nuke, designed to bridge the gap between academia and production, providing all communities access to different ML models that all run in Nuke. Users will have access to state-of-the-art models addressing segmentation, depth estimation, optical flow, upscaling, denoising, and style transfer, with plans to expand the models hosted in the future.

https://www.foundry.com/insights/machine-learning/the-artists-guide-to-cattery

https://community.foundry.com/cattery

-

Scene Referred vs Display Referred color workflows

Display Referred is tied to the target hardware; as such, it bakes color requirements into every type of media output request.

Scene Referred instead uses a common, unified wide gamut and targets each audience through CDL and DI libraries, so that color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus
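The distinction can be sketched with a scene-linear pixel that is stored once and only encoded at view time, once per target display. This is a simplified illustration under stated assumptions: it ignores gamut conversion and tone mapping, uses the standard sRGB encoding curve plus a plain 2.4 gamma as a stand-in second display, and the function names are hypothetical. A production pipeline would use a color management system instead.

```python
def srgb_encode(c):
    """Standard sRGB encoding of a linear value, clipped to the display range."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def gamma24_encode(c):
    """Pure power-law encoding, used here as a simplified second display."""
    c = min(max(c, 0.0), 1.0)
    return c ** (1 / 2.4)


DISPLAY_TRANSFORMS = {"sRGB": srgb_encode, "gamma 2.4": gamma24_encode}


def view(scene_pixel, display):
    """Apply a display transform on output; the scene data is not modified."""
    encode = DISPLAY_TRANSFORMS[display]
    return tuple(encode(c) for c in scene_pixel)


# Scene-linear pixel: middle gray in two channels, one value above 1.0
scene_pixel = (0.18, 0.18, 2.5)
srgb_view = view(scene_pixel, "sRGB")
gamma_view = view(scene_pixel, "gamma 2.4")

print("scene:", scene_pixel)
print("sRGB:", srgb_view)
print("gamma 2.4:", gamma_view)
```

A display-referred workflow would instead store one of the encoded tuples, at which point the value above 1.0 is already clipped and cannot be recovered for a different output.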

-

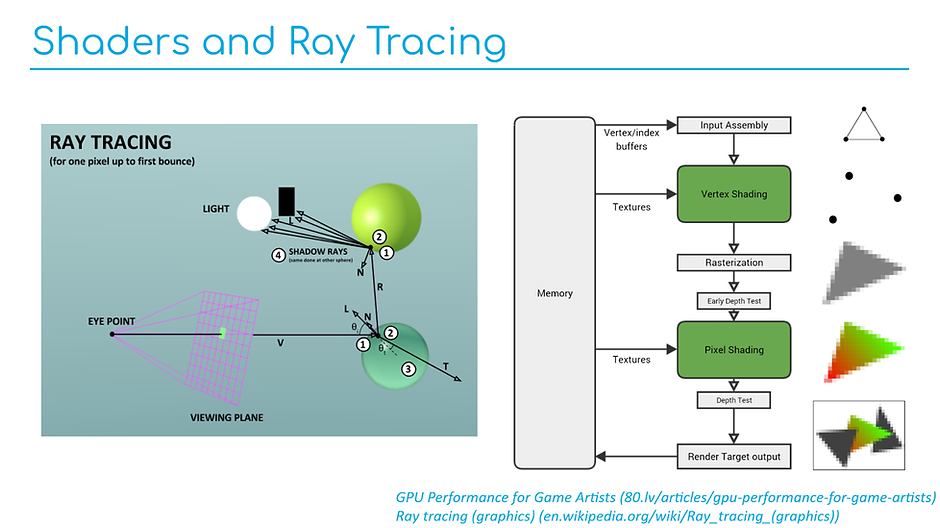

Autodesk open sources Aurora – an interactive path tracing renderer that leverages graphics processing unit (GPU) hardware ray tracing

https://github.com/autodesk/Aurora

Goals for Aurora

- Renders noise-free in 50 milliseconds or less per frame.

- Intended for design iteration (viewport, performance) rather than final frames (production, quality), which are produced from a renderer like Autodesk Arnold.

- OS-independent: Runs on Windows, Linux, macOS.

- Vendor-independent: Runs on GPUs from AMD, Apple, Intel, NVIDIA.

Features

- Path tracing and the global effects that come with it: soft shadows, reflections, refractions, bounced light, and others.

- Autodesk Standard Surface materials defined with MaterialX documents.

- Arbitrary blended layers of materials, which can be used to implement decals.

- Environment lighting with a wrap-around lat-long image.

- Triangle geometry with object instancing.

- Real-time denoising.

- Interactive performance for complex scenes.

- A USD Hydra render delegate called HdAurora.

-

Peregrine Bokeh moving to Foundry Nuke

After 12 years developing and supporting Bokeh we are excited to announce the product has found a new home with Foundry.

https://peregrinelabs.com/blogs/news/bokeh-has-a-new-home

-

FitPoly or polynomial regression plots for converting sparse data into a usable curve formula

https://www.geogebra.org/calculator

- enter the sparse data

- make a list out of it

- use the FitPoly(listname, 3) function to return a degree-3 polynomial curve and its formula
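The same steps can be sketched outside GeoGebra as a least-squares polynomial fit. The version below is a minimal pure-Python sketch that solves the normal equations directly. The sample points are made up, and `fit_poly` and `evaluate` are hypothetical helper names rather than GeoGebra functions.

```python
def fit_poly(points, degree):
    """Least-squares polynomial fit; returns coefficients, lowest power first."""
    n = degree + 1
    if len(points) < n:
        raise ValueError("need at least degree + 1 points")
    # Normal equations (A^T A) c = A^T y for the Vandermonde matrix A
    ata = [[sum(x ** (i + j) for x, _ in points) for j in range(n)]
           for i in range(n)]
    aty = [sum(y * x ** i for x, y in points) for i in range(n)]
    # Gaussian elimination with partial pivoting on the augmented matrix
    m = [row + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    coeffs = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(m[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (m[i][n] - known) / m[i][i]
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


# Sparse sample data (made up; it lies on y = x^3 + x + 1)
points = [(0, 1), (1, 3), (2, 11), (3, 31), (4, 69)]
coeffs = fit_poly(points, 3)

print("coefficients (constant term first):", [round(c, 6) for c in coeffs])
print("value at x = 2.5:", round(evaluate(coeffs, 2.5), 6))
```

Where NumPy is available, `numpy.polyfit(x, y, 3)` performs the same kind of fit and returns the coefficients highest power first.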

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.