COMPOSITION

-

Mastering Camera Shots and Angles: A Guide for Filmmakers

Read more: Mastering Camera Shots and Angles: A Guide for Filmmakershttps://website.ltx.studio/blog/mastering-camera-shots-and-angles

1. Extreme Wide Shot

2. Wide Shot

3. Medium Shot

4. Close Up

5. Extreme Close Up

DESIGN

COLOR

-

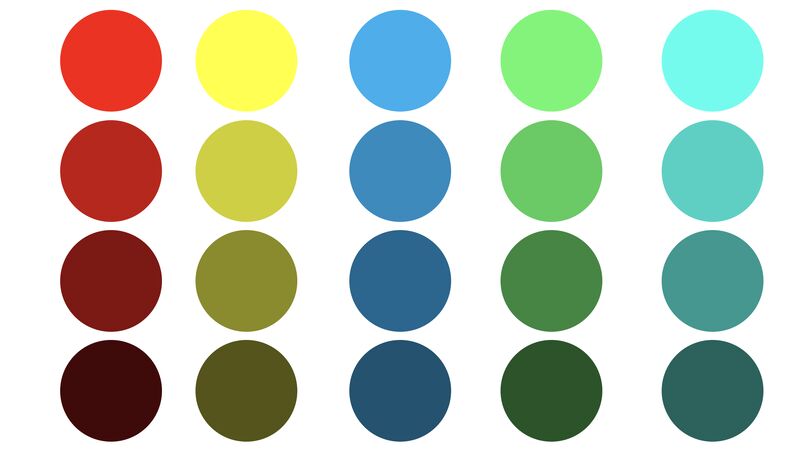

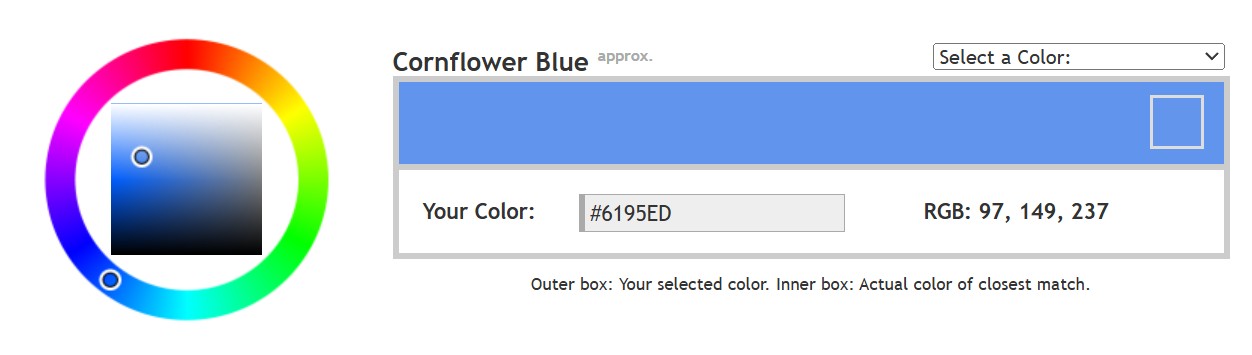

Is it possible to get a dark yellow

Read more: Is it possible to get a dark yellowhttps://www.patreon.com/posts/102660674

https://www.linkedin.com/posts/stephenwestland_here-is-a-post-about-the-dark-yellow-problem-activity-7187131643764092929-7uCL

-

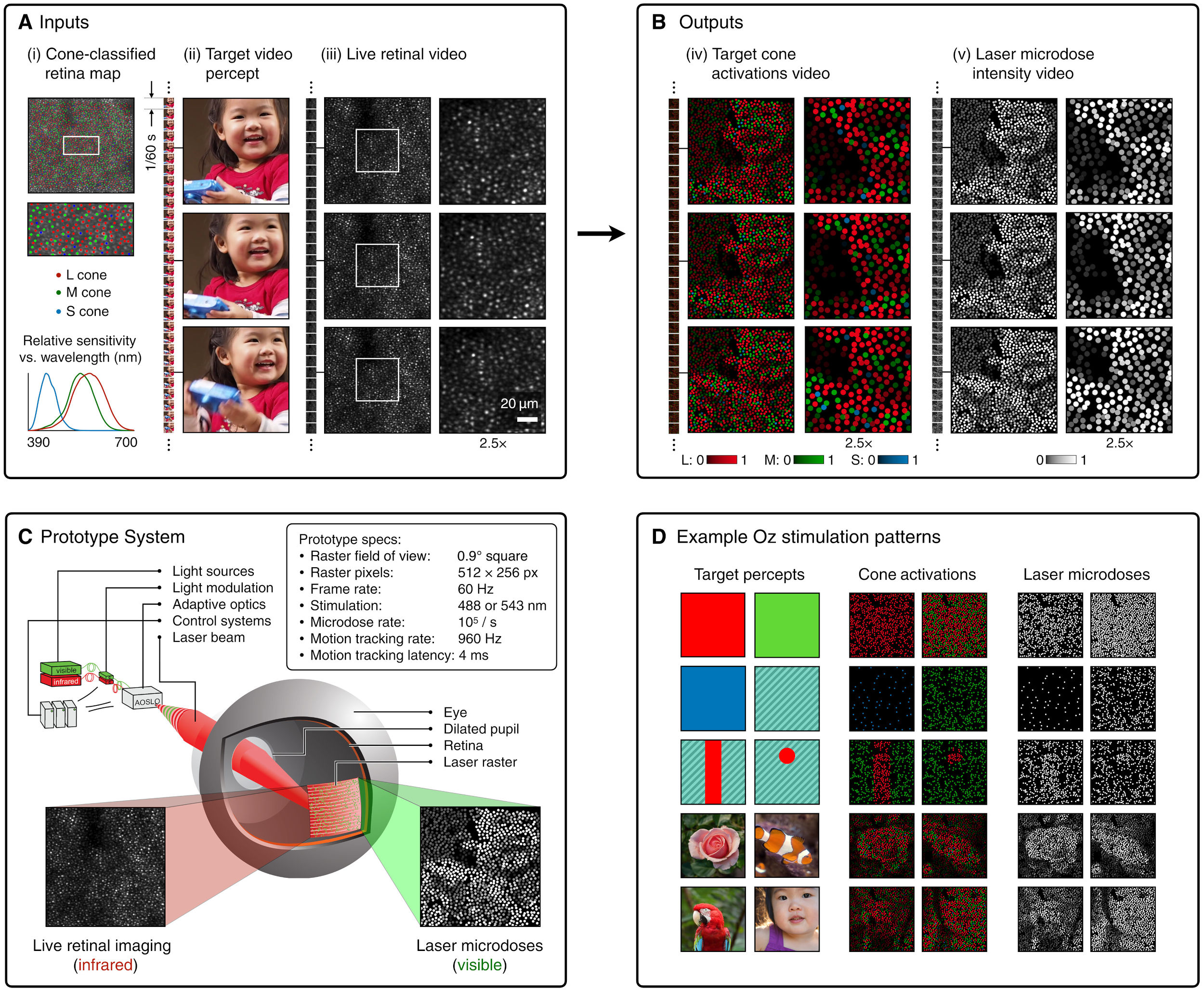

Scientists claim to have discovered ‘new colour’ no one has seen before: Olo

Read more: Scientists claim to have discovered ‘new colour’ no one has seen before: Olohttps://www.bbc.com/news/articles/clyq0n3em41o

By stimulating specific cells in the retina, the participants claim to have witnessed a blue-green colour that scientists have called “olo”, but some experts have said the existence of a new colour is “open to argument”.

The findings, published in the journal Science Advances on Friday, have been described by the study’s co-author, Prof Ren Ng from the University of California, as “remarkable”.

(A) System inputs. (i) Retina map of 103 cone cells preclassified by spectral type (7). (ii) Target visual percept (here, a video of a child, see movie S1 at 1:04). (iii) Infrared cellular-scale imaging of the retina with 60-frames-per-second rolling shutter. Fixational eye movement is visible over the three frames shown.

(B) System outputs. (iv) Real-time per-cone target activation levels to reproduce the target percept, computed by: extracting eye motion from the input video relative to the retina map; identifying the spectral type of every cone in the field of view; computing the per-cone activation the target percept would have produced. (v) Intensities of visible-wavelength 488-nm laser microdoses at each cone required to achieve its target activation level.

(C) Infrared imaging and visible-wavelength stimulation are physically accomplished in a raster scan across the retinal region using AOSLO. By modulating the visible-wavelength beam’s intensity, the laser microdoses shown in (v) are delivered. Drawing adapted with permission [Harmening and Sincich (54)].

(D) Examples of target percepts with corresponding cone activations and laser microdoses, ranging from colored squares to complex imagery. Teal-striped regions represent the color “olo” of stimulating only M cones.

-

Tobia Montanari – Memory Colors: an essential tool for Colorists

Read more: Tobia Montanari – Memory Colors: an essential tool for Coloristshttps://www.tobiamontanari.com/memory-colors-an-essential-tool-for-colorists/

“Memory colors are colors that are universally associated with specific objects, elements or scenes in our environment. They are the colors that we expect to see in specific situations: these colors are based on our expectation of how certain objects should look based on our past experiences and memories.

For instance, we associate specific hues, saturation and brightness values with human skintones and a slight variation can significantly affect the way we perceive a scene.

Similarly, we expect blue skies to have a particular hue, green trees to be a specific shade and so on.

Memory colors live inside of our brains and we often impose them onto what we see. By considering them during the grading process, the resulting image will be more visually appealing and won’t distract the viewer from the intended message of the story. Even a slight deviation from memory colors in a movie can create a sense of discordance, ultimately detracting from the viewer’s experience.”

-

Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through Projectors

Read more: Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through ProjectorsVideo Projection Tool Software

https://hcgilje.wordpress.com/vpt/https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

heavym

https://heavym.net/en/MadMapper

https://madmapper.com/ -

Composition – cinematography Cheat Sheet

Read more: Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by

a shaft of light.

Color. Both are black and white but the read on Mr. White’s shirt now really stands out.

(more…)

What type of lighting? -

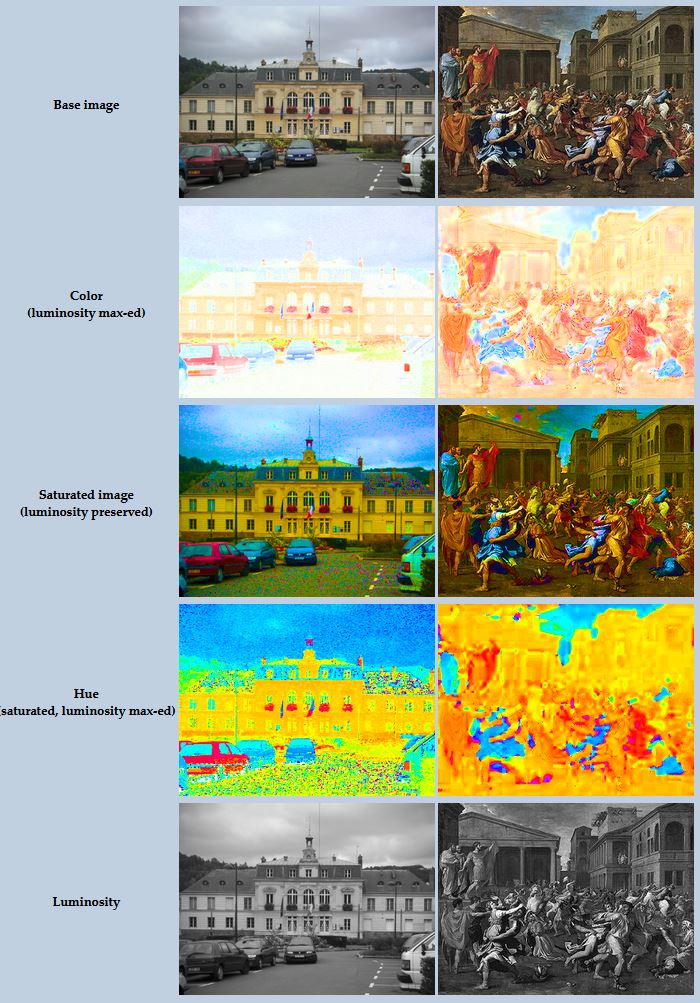

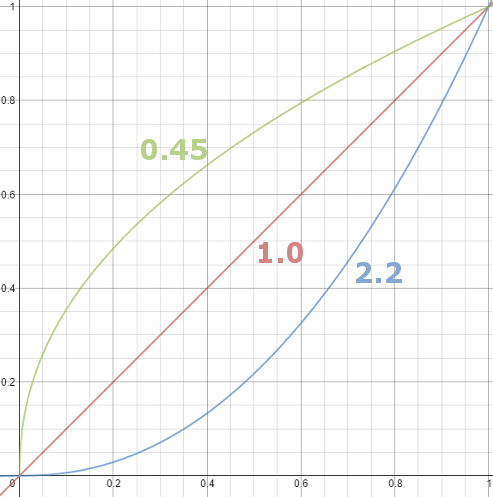

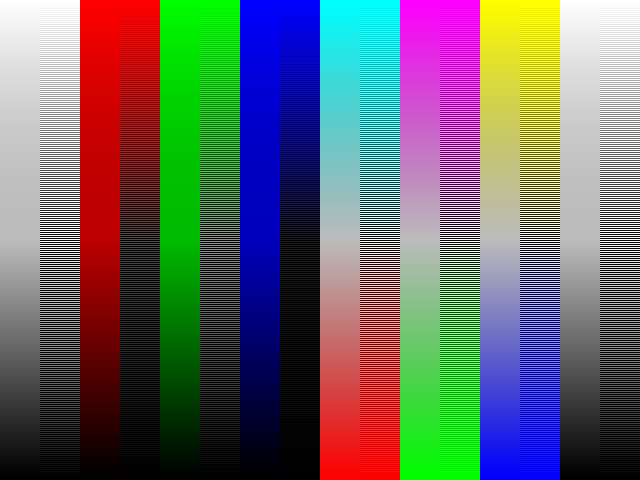

Gamma correction

Read more: Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen, and the numerical value of that pixel. Generally Gamma is just about defining relationships.

Three main types:

– Image Gamma encoded in images

– Display Gammas encoded in hardware and/or viewing time

– System or Viewing Gamma which is the net effect of all gammas when you look back at a final image. In theory this should flatten back to 1.0 gamma.

(more…) -

The Maya civilization and the color blue

Read more: The Maya civilization and the color blueMaya blue is a highly unusual pigment because it is a mix of organic indigo and an inorganic clay mineral called palygorskite.

Echoing the color of an azure sky, the indelible pigment was used to accentuate everything from ceramics to human sacrifices in the Late Preclassic period (300 B.C. to A.D. 300).

A team of researchers led by Dean Arnold, an adjunct curator of anthropology at the Field Museum in Chicago, determined that the key to Maya blue was actually a sacred incense called copal.

By heating the mixture of indigo, copal and palygorskite over a fire, the Maya produced the unique pigment, he reported at the time.

-

Thomas Mansencal – Colour Science for Python

Read more: Thomas Mansencal – Colour Science for Pythonhttps://thomasmansencal.substack.com/p/colour-science-for-python

https://www.colour-science.org/

Colour is an open-source Python package providing a comprehensive number of algorithms and datasets for colour science. It is freely available under the BSD-3-Clause terms.

LIGHTING

-

Magnific.ai Relight – change the entire lighting of a scene

Read more: Magnific.ai Relight – change the entire lighting of a sceneIt’s a new Magnific spell that allows you to change the entire lighting of a scene and, optionally, the background with just:

1/ A prompt OR

2/ A reference image OR

3/ A light map (drawing your own lights)https://x.com/javilopen/status/1805274155065176489

-

About green screens

Read more: About green screenshackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens is also known as Chroma Green and is valued at approximately 354C in the Pantone color matching system (PMS).

Chroma Green can be broken down in many different ways. Here is green screen green as other values useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933Chroma Key Green is reasonably close to an 18% gray reflectance.

Illuminate your green screen with an uniform source with less than 2/3 EV variation.

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting. -

7 Easy Portrait Lighting Setups

Read more: 7 Easy Portrait Lighting SetupsButterfly

Loop

Rembrandt

Split

Rim

Broad

Short

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

-

FFmpeg – examples and convenience lines

-

Python and TCL: Tips and Tricks for Foundry Nuke

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

-

JavaScript how-to free resources

-

AI Search – Find The Best AI Tools & Apps

-

Matt Gray – How to generate a profitable business

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent (and artists)

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)