COMPOSITION

-

Photography basics: Depth of Field and composition

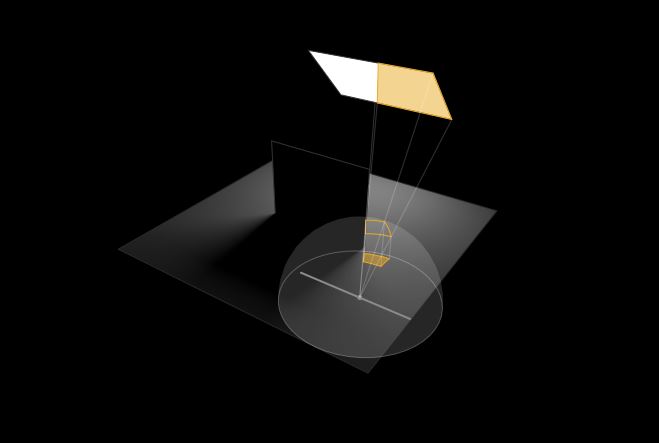

Depth of field (DOF) is the range of distances within which objects appear acceptably sharp in a photo.

Aperture has a huge effect on the depth of field. Changing the f-stop (f/#) of a lens changes the aperture and, as a result, the DOF.

An f-stop is simply a number that tells you the size of the aperture. That’s how the f-stop relates to aperture (and DOF).

Increasing the f-stop increases the DOF, the area in focus (and decreases the aperture). Conversely, decreasing the f-stop decreases the DOF (and increases the aperture).

The red cone in the figure is an angular representation of the resolution of the system, while the dotted lines indicate the aperture coverage. The points where the lines of the two cones intersect define the total range of the depth of field.

This image explains why the longer the depth of field, the greater the range of clarity.
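The link between f-stop and depth of field can be checked numerically. The sketch below is not from the original post: it assumes the standard thin-lens hyperfocal approximation and a circle of confusion of 0.03 mm (a common full-frame convention), and the function names are illustrative only.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance in millimetres (thin-lens approximation)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return (near_limit, far_limit) of acceptable sharpness in millimetres."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        # Focused at or beyond the hyperfocal distance: sharp out to infinity.
        far = float("inf")
    else:
        far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


if __name__ == "__main__":
    # 50 mm lens focused at 3 m, stepping through a few f-stops.
    for n in (2.8, 5.6, 8, 16):
        near, far = depth_of_field_mm(50, n, 3000)
        print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Running it shows the sharp zone widening as the f-stop goes up, which matches the rule of thumb above.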

-

HuggingFace ai-comic-factory – a FREE AI Comic Book Creator

https://huggingface.co/spaces/jbilcke-hf/ai-comic-factory

This is the epic story of a group of talented digital artists trying to overcome daily technical challenges to achieve incredibly photorealistic projects of monsters and aliens.

DESIGN

-

How to paint boardgame miniatures

Steps:

- soap wash cleaning

- primer

- base-coat layer (black/white)

- detailing

- washing aka shade (could be done after highlighting)

- highlights aka dry brushing (could be done after washing)

- varnish (gloss/satin/matte)

COLOR

-

If a blind person gained sight, could they recognize objects previously touched?

Blind people who regain their sight may find themselves in a world they don’t immediately comprehend. “It would be more like a sighted person trying to rely on tactile information,” says Prof Cathleen Moore.

Learning to see is a developmental process, just like learning language, Moore continues. “As far as vision goes, a three-and-a-half year old child is already a well-calibrated system.”

-

Björn Ottosson – How software gets color wrong

https://bottosson.github.io/posts/colorwrong/

Most software around us today is decent at accurately displaying colors. Processing of colors is another story unfortunately, and is often done badly.

To understand what the problem is, let’s start with an example of three ways of blending green and magenta:

- Perceptual blend – A smooth transition using a model designed to mimic human perception of color. The blending is done so that the perceived brightness and color varies smoothly and evenly.

- Linear blend – A model for blending color based on how light behaves physically. This type of blending can occur in many ways naturally, for example when colors are blended together by focus blur in a camera or when viewing a pattern of two colors at a distance.

- sRGB blend – This is how colors would normally be blended in computer software, using sRGB to represent the colors.
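The difference between the sRGB blend and the linear blend can be sketched in a few lines. This is an illustration rather than code from the linked post: it assumes colors are sRGB triplets in the 0 to 1 range and uses the standard sRGB transfer function. The perceptual blend is left out because it needs a full perceptual color model.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode one linear-light channel (0..1) back to sRGB."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def lerp(a, b, t):
    return a + (b - a) * t


def blend_srgb(c1, c2, t):
    """Naive blend: interpolate the encoded sRGB values directly."""
    return tuple(lerp(a, b, t) for a, b in zip(c1, c2))


def blend_linear(c1, c2, t):
    """Physically motivated blend: decode, interpolate, re-encode."""
    return tuple(
        linear_to_srgb(lerp(srgb_to_linear(a), srgb_to_linear(b), t))
        for a, b in zip(c1, c2)
    )


GREEN = (0.0, 1.0, 0.0)
MAGENTA = (1.0, 0.0, 1.0)

if __name__ == "__main__":
    print("sRGB midpoint:  ", blend_srgb(GREEN, MAGENTA, 0.5))
    print("linear midpoint:", blend_linear(GREEN, MAGENTA, 0.5))
```

The midpoint of the naive sRGB blend is a noticeably darker gray than the midpoint of the linear blend, which is why sRGB gradients between saturated colors tend to show a dark band in the middle.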

Let’s look at some more examples of blending of colors, to see how these problems surface more practically. The examples use strong colors since then the differences are more pronounced. This is using the same three ways of blending colors as the first example.

Instead of making it as easy as possible to work with color, most software makes it unnecessarily hard by doing image processing with representations not designed for it. Approximating the physical behavior of light with linear RGB models is one easy thing to do, but more work is needed to create image representations tailored for image processing and human perception.

-

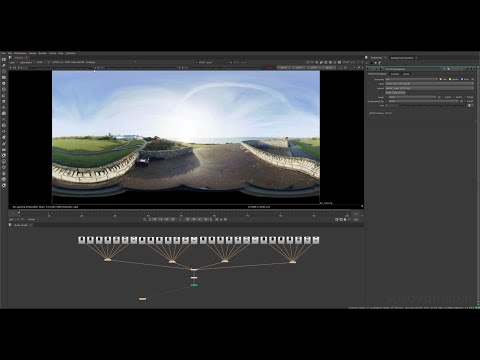

Photography basics: Why Use a (MacBeth) Color Chart?

Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In Lightroom

In Final Cut

In Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down gives you the next value on the aperture scale (f/8, f/11, f/16 etc.). One stop doubles or halves the amount of light that hits the filmback/CCD, so everything works in powers of 2.

So starting with 0.18 in your Constant, raising it by one stop gives you 0.36 as a floating point number (in linear space), while your f/stop reads f/11, and so on. If you set your center stop to 0 (see below) you get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words, setting the center f-stop to 0 means that in a neutral plate, the middle gray in the MacBeth chart will equal exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This usually puts the sun around EV 12-17 and the sky around EV 1-4, depending on cloud coverage.

To switch Nuke’s SpotMeter to return the EV of an image, click on the main viewport and press s to open the viewer’s properties. Set the center f-stop to 0 there, and the SpotMeter in the viewport will change from aperture and f-stops to EV.
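The stop arithmetic described above can be reproduced outside Nuke. The following is a minimal sketch, not Nuke's implementation: the function names are made up for illustration, and it only assumes that one stop is a factor of 2 in linear light and a factor of the square root of 2 on the f-number scale.

```python
import math

MIDDLE_GRAY = 0.18  # 18% gray in linear space


def apply_stops(linear_value, stops):
    """Raise or lower a linear value by a number of stops (powers of 2)."""
    return linear_value * 2.0 ** stops


def relative_ev(linear_value, reference=MIDDLE_GRAY):
    """Stops above or below the reference; 18% gray reads as EV 0."""
    return math.log2(linear_value / reference)


def f_number_after_stops(f_number, stops):
    """Step along the aperture scale: each stop multiplies by sqrt(2)."""
    return f_number * math.sqrt(2.0) ** stops


def exposure_value(f_number, shutter_seconds):
    """Camera-settings EV: EV 0 is f/1.0 at 1 second."""
    return math.log2(f_number ** 2 / shutter_seconds)


if __name__ == "__main__":
    for stops in (-2, -1, 0, 1, 2):
        value = apply_stops(MIDDLE_GRAY, stops)
        print(
            f"{stops:+d} stops: linear {value:.3f}, "
            f"relative EV {relative_ev(value):+.1f}, "
            f"f/{f_number_after_stops(8, stops):.1f}"
        )
```

The computed f-numbers are the exact values (for example 11.3), which the conventional aperture scale rounds to the familiar f/11, f/16 and so on.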

-

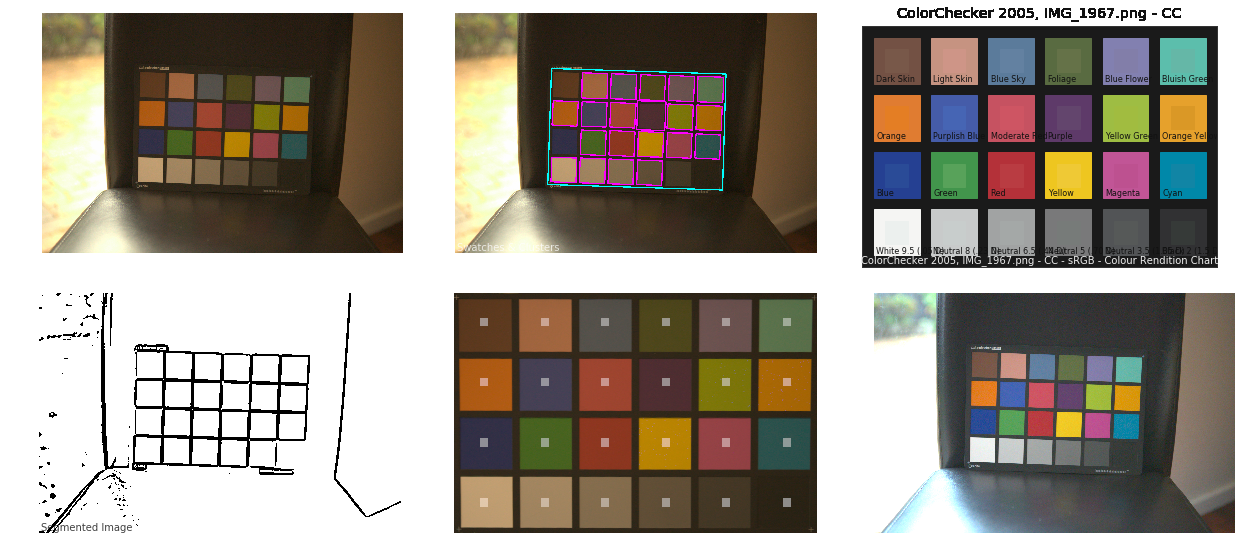

Colour – MacBeth Chart Checker Detection

github.com/colour-science/colour-checker-detection

A Python package implementing various colour checker detection algorithms and related utilities.

-

Willem Zwarthoed – Aces gamut in VFX production pdf

https://www.provideocoalition.com/color-management-part-12-introducing-aces/

Local copy:

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

-

Virtual Production volumes study

Color Fidelity in LED Volumes

https://theasc.com/articles/color-fidelity-in-led-volumes

Virtual Production Glossary

https://vpglossary.com/

What is Virtual Production – In depth analysis

https://www.leadingledtech.com/what-is-a-led-virtual-production-studio-in-depth-technical-analysis/

A comparison of LED panels for use in Virtual Production: Findings and recommendations

https://eprints.bournemouth.ac.uk/36826/1/LED_Comparison_White_Paper%281%29.pdf

LIGHTING

-

Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRI

https://github.com/Sosoyan/make-hdr

Feature notes

- Merge up to 16 inputs with 8, 10 or 12 bit depth processing

- User friendly logarithmic Tone Mapping controls within the tool

- Advanced controls such as Sampling rate and Smoothness

Available cross-platform on Linux, macOS and Windows. Works consistently in compositing applications like Nuke, Fusion and Natron.

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:

- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible
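For readers new to bracket merging, the general idea can be sketched per pixel. This is not MakeHDR's algorithm, which the source does not describe. It is a generic weighted average that assumes the brackets are already linear, normalized to 0 to 1, and tagged with their exposure times. The triangle ("hat") weighting is one common choice, picked here for illustration.

```python
def hat_weight(value):
    """Triangle weight: trust mid-tones, distrust near-black and clipped values."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_pixel(samples):
    """Merge one pixel from several brackets into a single radiance estimate.

    samples: list of (linear_value_0_to_1, exposure_time_seconds) tuples.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for value, seconds in samples:
        w = hat_weight(value)
        # Dividing by exposure time puts every bracket on the same scale.
        weighted_sum += w * (value / seconds)
        weight_total += w
    if weight_total == 0.0:
        # Every sample is fully black or fully clipped: fall back to the
        # shortest exposure, which is the least likely to be blown out.
        value, seconds = min(samples, key=lambda s: s[1])
        return value / seconds
    return weighted_sum / weight_total


if __name__ == "__main__":
    # A pixel with true relative radiance 2.0, shot at 1 s (clipped),
    # 1/4 s and 1/8 s.
    brackets = [(1.0, 1.0), (0.5, 0.25), (0.25, 0.125)]
    print(merge_pixel(brackets))
```

The clipped 1 s sample gets zero weight, so the estimate comes only from the two usable brackets. This is also why clean, well-exposed brackets matter before merging.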

-

IES Light Profiles and editing software

http://www.derekjenson.com/3d-blog/ies-light-profiles

https://ieslibrary.com/en/browse#ies

https://leomoon.com/store/shaders/ies-lights-pack

https://docs.arnoldrenderer.com/display/a5afmug/ai+photometric+light

IES profiles are useful for creating life-like lighting, as they can represent the physical distribution of light from any light source.

The IES format was created by the Illuminating Engineering Society, and most lighting manufacturers provide IES profiles for the lights they manufacture.

-

studiobinder.com – What is Tenebrism and Hard Lighting — The Art of Light and Shadow and chiaroscuro Explained

https://www.studiobinder.com/blog/what-is-tenebrism-art-definition/

https://www.studiobinder.com/blog/what-is-hard-light-photography/

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

This 2025 I decided to start learning how to code, so I installed Visual Studio and I started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program. And here we are after roughly 8 months of learning programming.

This is Galaxy Engine, and it is a simulation software I have been making ever since I started my learning journey. It currently can simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves.

It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and Open-Source so that anyone can build and compile it locally! You can find the source code in GitHub

https://github.com/NarcisCalin/Galaxy-Engine
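To show what "Morton curves" means here, the sketch below sorts 2D particles by Morton (Z-order) key, so that particles close in space tend to end up close in memory. It is not taken from Galaxy Engine, which is written in C++. The function names, the 16-bit grid and the unit-square bounds are all assumptions made for illustration.

```python
def interleave_bits(x, y, bits=16):
    """Build a Morton code by interleaving the bits of x (even) and y (odd)."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (2 * i)
        code |= ((y >> i) & 1) << (2 * i + 1)
    return code


def quantize(value, lo, hi, bits=16):
    """Map a float in [lo, hi] to an integer grid cell index."""
    cells = (1 << bits) - 1
    t = (value - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)  # clamp out-of-bounds positions
    return int(t * cells)


def morton_key(position, lo, hi, bits=16):
    """Morton key of a 2D position inside the square [lo, hi] x [lo, hi]."""
    x, y = position
    return interleave_bits(
        quantize(x, lo, hi, bits), quantize(y, lo, hi, bits), bits
    )


if __name__ == "__main__":
    particles = [(0.9, 0.9), (0.1, 0.1), (0.9, 0.1), (0.1, 0.9)]
    ordered = sorted(particles, key=lambda p: morton_key(p, 0.0, 1.0))
    print(ordered)
```

Sorting by such a key groups particles by quadrant in a Z-shaped pattern, and that ordering is what lets a Barnes-Hut style tree be built from contiguous runs of particles.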

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.