COMPOSITION

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: nofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot.”

Use CRI, luminous efficacy and color temperature controls to match your needs.

Color Temperature

Color temperature describes the “color” of white light from a source by comparing it to the light radiated by a perfect black body at a given temperature, measured in kelvins (K).

https://www.pixelsham.com/2019/10/18/color-temperature/
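As a rough illustration of the black-body definition above, the sketch below uses Wien's displacement law and Planck's law to show how the emission of an ideal black body shifts from red toward blue as its temperature rises. The function names and the 450 nm / 650 nm sample wavelengths are illustrative assumptions, not something taken from the linked post, and real fixtures only approximate a black body.

```python
import math

# Physical constants in SI units.
PLANCK_H = 6.62607015e-34    # Planck constant, J*s
LIGHT_C = 2.99792458e8       # speed of light, m/s
BOLTZMANN_K = 1.380649e-23   # Boltzmann constant, J/K
WIEN_B = 2.897771955e-3      # Wien displacement constant, m*K


def peak_wavelength_nm(kelvin: float) -> float:
    """Wavelength (nm) at which an ideal black body emits most strongly."""
    return WIEN_B / kelvin * 1e9


def planck_radiance(wavelength_nm: float, kelvin: float) -> float:
    """Spectral radiance of an ideal black body (Planck's law)."""
    lam = wavelength_nm * 1e-9
    exponent = PLANCK_H * LIGHT_C / (lam * BOLTZMANN_K * kelvin)
    return (2.0 * PLANCK_H * LIGHT_C ** 2) / (lam ** 5 * math.expm1(exponent))


def blue_red_ratio(kelvin: float) -> float:
    """Ratio of radiance at 450 nm (blue) to 650 nm (red); sample points are arbitrary."""
    return planck_radiance(450.0, kelvin) / planck_radiance(650.0, kelvin)


if __name__ == "__main__":
    # 3200 K and 5600 K are common tungsten and daylight presets; 6500 K is near D65.
    for kelvin in (3200.0, 5600.0, 6500.0):
        print(f"{kelvin:6.0f} K  peak {peak_wavelength_nm(kelvin):7.1f} nm  "
              f"blue/red {blue_red_ratio(kelvin):.2f}")
```

A ratio below 1 means the source emits more red than blue (a “warm” white); a ratio above 1 means the reverse (a “cool” white).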

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

https://www.studiobinder.com/blog/what-is-color-rendering-index
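To make the 0 to 100 scale more concrete, here is a minimal sketch of how the general index (Ra) is assembled in the standard CIE method: each of eight test color samples gets a special index R_i = 100 − 4.6·ΔE_i, where ΔE_i is the color shift between the test source and the reference illuminant, and Ra is their average. The ΔE values below are hypothetical numbers chosen for illustration; measuring real ones requires spectral data and is outside this sketch.

```python
from typing import Sequence


def special_index(delta_e: float) -> float:
    """Special color rendering index R_i for one test color sample."""
    return 100.0 - 4.6 * delta_e


def general_cri(delta_es: Sequence[float]) -> float:
    """General index Ra: the mean of R_i over the eight standard test samples."""
    if len(delta_es) != 8:
        raise ValueError("Ra is defined over exactly eight test color samples")
    return sum(special_index(d) for d in delta_es) / len(delta_es)


if __name__ == "__main__":
    # Hypothetical color shifts for an imaginary LED panel (not measured data).
    led_shifts = [2.0, 1.5, 3.0, 2.5, 2.0, 1.0, 2.5, 3.5]
    print(f"Ra = {general_cri(led_shifts):.2f}")

    # A source identical to the reference shifts nothing, so it scores 100.
    print(f"Ra = {general_cri([0.0] * 8):.2f}")
```

Because Ra is an average, a light can score well overall while still rendering one particular color (often saturated red) poorly.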


DESIGN

COLOR

-

The Forbidden colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t See

Read more: www.livescience.com/17948-red-green-blue-yellow-stunning-colors.html

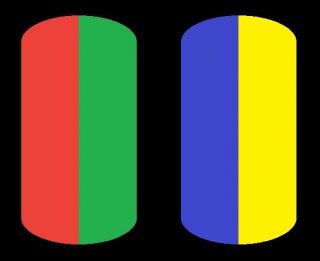

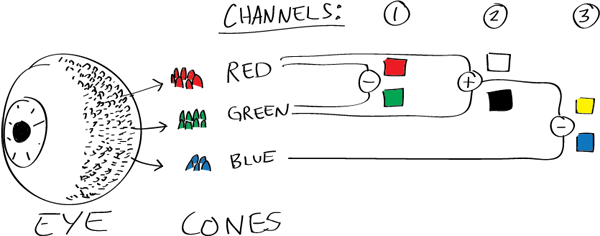

While the human eye has red, green, and blue-sensing cones, those cones are cross-wired in the retina to produce a luminance channel plus a red-green and a blue-yellow channel, and it’s data in that opponent color space (the same structure that the “LAB” color space models) that goes to the brain. That’s why we can’t perceive a reddish-green or a yellowish-blue, whereas such colors can be represented in the RGB color space used by digital cameras.

https://en.rockcontent.com/blog/the-use-of-yellow-in-data-design

The back of the retina is covered in light-sensitive neurons known as cone cells and rod cells. There are three types of cone cells, each sensitive to different ranges of light. These ranges overlap, but for convenience the cones are referred to as blue (short-wavelength), green (medium-wavelength), and red (long-wavelength). The rod cells are primarily used in low-light situations, so we’ll ignore those for now.

When light enters the eye and hits the cone cells, the cones get excited and send signals through the optic nerve to the brain’s visual cortex. Different wavelengths of light excite different combinations of cones to varying levels, which generates our perception of color. You can see that the red cones are most sensitive to light, and the blue cones are least sensitive. The sensitivity of green and red cones overlaps for most of the visible spectrum.

Here’s how your brain takes the signals of light intensity from the cones and turns them into color information. To see red or green, your brain finds the difference between the levels of excitement in your red and green cones. This is the red-green channel.

To get “brightness,” your brain combines the excitement of your red and green cones. This creates the luminance, or black-white, channel. To see yellow or blue, your brain then finds the difference between this luminance signal and the excitement of your blue cones. This is the yellow-blue channel.
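The three channels described above can be sketched as simple arithmetic on cone excitation levels. This is a simplified model that follows the text literally (plain sums and differences, no weighting); the function name, the 0 to 1 excitation scale, and the sample values are assumptions made for illustration, not physiological measurements.

```python
from typing import NamedTuple


class OpponentSignal(NamedTuple):
    red_green: float    # positive leans red, negative leans green
    luminance: float    # combined red + green excitement ("brightness")
    yellow_blue: float  # positive leans yellow, negative leans blue


def opponent_channels(red: float, green: float, blue: float) -> OpponentSignal:
    """Convert cone excitations (assumed 0..1) into the three opponent channels."""
    red_green = red - green
    luminance = red + green
    yellow_blue = luminance - blue
    return OpponentSignal(red_green, luminance, yellow_blue)


if __name__ == "__main__":
    # Hypothetical excitation levels for three kinds of light.
    samples = {
        "yellowish light": (0.9, 0.9, 0.1),
        "reddish light": (0.8, 0.2, 0.0),
        "bluish light": (0.1, 0.2, 0.9),
    }
    for name, cones in samples.items():
        print(name, opponent_channels(*cones))
```

Each opponent channel is a single signed number, so it can lean toward red or toward green but never both at once, which is one way to picture why a “reddish-green” is not perceived.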

From the calculations made in the brain along those three channels, we get four basic colors: blue, green, yellow, and red. Seeing blue is what you experience when short-wavelength light excites the blue cones more than the green and red.

Seeing green happens when light excites the green cones more than the red cones. Seeing red happens when only the red cones are excited by long-wavelength light.

Here’s where it gets interesting. Seeing yellow is what happens when BOTH the green AND red cones are highly excited near their peak sensitivity. This is the biggest collective excitement that your cones ever have, aside from seeing pure white.

Notice that yellow occurs at peak intensity in the graph to the right. Further, the lens and cornea of the eye happen to block shorter wavelengths, reducing sensitivity to blue and violet light.

-

Pattern generators

http://qrohlf.com/trianglify-generator/

https://halftonepro.com/app/polygons#

https://mattdesl.svbtle.com/generative-art-with-nodejs-and-canvas

https://www.patterncooler.com/

http://permadi.com/java/spaint/spaint.html

https://dribbble.com/shots/1847313-Kaleidoscope-Generator-PSD

http://eskimoblood.github.io/gerstnerizer/

http://www.stripegenerator.com/

http://btmills.github.io/geopattern/geopattern.html

http://fractalarchitect.net/FA4-Random-Generator.html

https://sciencevsmagic.net/fractal/#0605,0000,3,2,0,1,2

https://sites.google.com/site/mandelbulber/home

-

HDR and Color

Read more: https://www.soundandvision.com/content/nits-and-bits-hdr-and-color

In HD we often refer to the range of available colors as a color gamut. Such a color gamut is typically plotted on a two-dimensional diagram, called a CIE chart, as shown at the top of this blog. Each color is characterized by its x/y coordinates.

Good enough for government work, perhaps. But for HDR, with its higher luminance levels and wider color, the gamut becomes three-dimensional.

For HDR the color gamut therefore becomes a characteristic we now call the color volume. It isn’t easy to show color volume on a two-dimensional medium like the printed page or a computer screen, but one method is shown below. As the luminance becomes higher, the picture eventually turns to white. As it becomes darker, it fades to black. The traditional color gamut shown on the CIE chart is simply a slice through this color volume at a selected luminance level, such as 50%.

Three different color volumes—we still refer to them as color gamuts though their third dimension is important—are currently the most significant. The first is BT.709 (sometimes referred to as Rec.709), the color gamut used for pre-UHD/HDR formats, including standard HD.

The largest is known as BT.2020; it encompasses most, though not all, of the range of colors visible to the human eye (though ET might find it insufficient!).

Between these two is the color gamut used in digital cinema, known as DCI-P3.
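One crude way to compare the three gamuts is to compute the area of each triangle on the CIE xy chart from its red, green, and blue primaries. The sketch below does that with the shoelace formula, using the primary coordinates published in the respective standards. Note the limits of this comparison: xy area is not perceptually uniform, and it ignores luminance entirely, so it says nothing about color volume. The function and variable names are illustrative.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]

# CIE 1931 xy chromaticities of the red, green, and blue primaries.
GAMUT_PRIMARIES: Dict[str, Tuple[Point, Point, Point]] = {
    "BT.709": ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "BT.2020": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def triangle_area(red: Point, green: Point, blue: Point) -> float:
    """Area of the gamut triangle on the xy chart (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = red, green, blue
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def gamut_areas() -> Dict[str, float]:
    """Map each gamut name to its triangle area in xy units."""
    return {name: triangle_area(*pts) for name, pts in GAMUT_PRIMARIES.items()}


if __name__ == "__main__":
    areas = gamut_areas()
    reference = areas["BT.709"]
    for name, area in areas.items():
        print(f"{name:8s} area {area:.4f}  ({area / reference:.2f}x BT.709)")
```

The ordering that comes out (BT.709 smallest, DCI-P3 in between, BT.2020 largest) matches the description above.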

sRGB

D65

LIGHTING

-

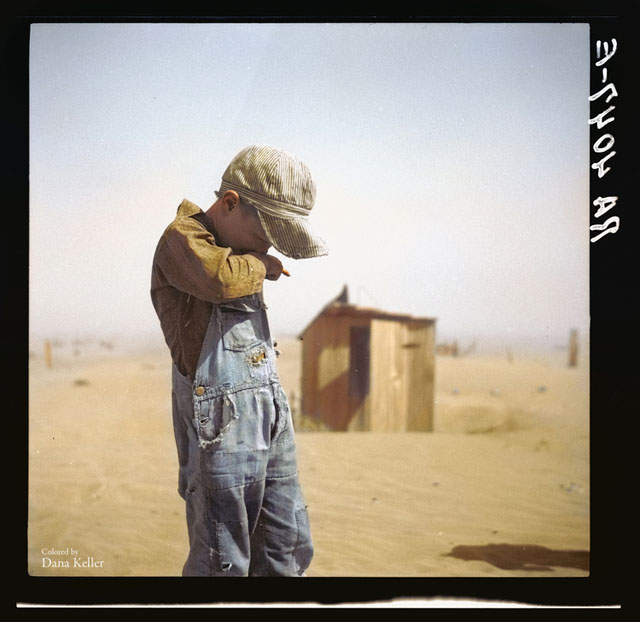

Composition and The Expressive Nature Of Light

Read more: http://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said, “The artist’s vocation is to send light into the human heart.”

-

Light properties

Read more: How It Works – Issue 114

https://www.howitworksdaily.com/

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

Read more: http://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

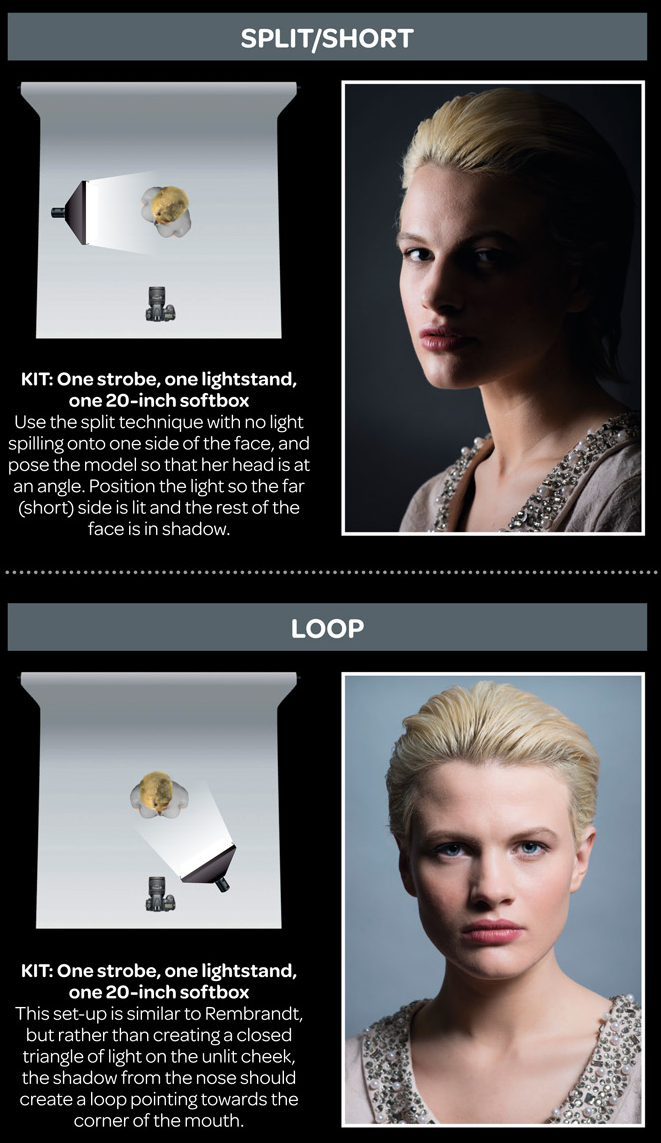

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

