COMPOSITION

-

Composition – cinematography Cheat Sheet

Read more: Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by

a shaft of light.

Color. Both are black and white but the read on Mr. White’s shirt now really stands out.

(more…)

What type of lighting? -

Photography basics: Camera Aspect Ratio, Sensor Size and Depth of Field – resolutions

Read more: Photography basics: Camera Aspect Ratio, Sensor Size and Depth of Field – resolutionshttp://www.shutterangle.com/2012/cinematic-look-aspect-ratio-sensor-size-depth-of-field/

http://www.shutterangle.com/2012/film-video-aspect-ratio-artistic-choice/

DESIGN

COLOR

-

Mysterious animation wins best illusion of 2011 – Motion silencing illusion

Read more: Mysterious animation wins best illusion of 2011 – Motion silencing illusionThe 2011 Best Illusion of the Year uses motion to render color changes invisible, and so reveals a quirk in our visual systems that is new to scientists.

https://en.wikipedia.org/wiki/Motion_silencing_illusion

“It is a really beautiful effect, revealing something about how our visual system works that we didn’t know before,” said Daniel Simons, a professor at the University of Illinois, Champaign-Urbana. Simons studies visual cognition, and did not work on this illusion. Before its creation, scientists didn’t know that motion had this effect on perception, Simons said.

A viewer stares at a speck at the center of a ring of colored dots, which continuously change color. When the ring begins to rotate around the speck, the color changes appear to stop. But this is an illusion. For some reason, the motion causes our visual system to ignore the color changes. (You can, however, see the color changes if you follow the rotating circles with your eyes.)

-

3D Lighting Tutorial by Amaan Kram

Read more: 3D Lighting Tutorial by Amaan Kramhttp://www.amaanakram.com/lightingT/part1.htm

The goals of lighting in 3D computer graphics are more or less the same as those of real world lighting.

Lighting serves a basic function of bringing out, or pushing back the shapes of objects visible from the camera’s view.

It gives a two-dimensional image on the monitor an illusion of the third dimension-depth.But it does not just stop there. It gives an image its personality, its character. A scene lit in different ways can give a feeling of happiness, of sorrow, of fear etc., and it can do so in dramatic or subtle ways. Along with personality and character, lighting fills a scene with emotion that is directly transmitted to the viewer.

Trying to simulate a real environment in an artificial one can be a daunting task. But even if you make your 3D rendering look absolutely photo-realistic, it doesn’t guarantee that the image carries enough emotion to elicit a “wow” from the people viewing it.

Making 3D renderings photo-realistic can be hard. Putting deep emotions in them can be even harder. However, if you plan out your lighting strategy for the mood and emotion that you want your rendering to express, you make the process easier for yourself.

Each light source can be broken down in to 4 distinct components and analyzed accordingly.

· Intensity

· Direction

· Color

· SizeThe overall thrust of this writing is to produce photo-realistic images by applying good lighting techniques.

-

mmColorTarget – Nuke Gizmo for color matching a MacBeth chart

Read more: mmColorTarget – Nuke Gizmo for color matching a MacBeth charthttps://www.marcomeyer-vfx.de/posts/2014-04-11-mmcolortarget-nuke-gizmo/

https://www.marcomeyer-vfx.de/posts/mmcolortarget-nuke-gizmo/

https://vimeo.com/9.1652466e+07

https://www.nukepedia.com/gizmos/colour/mmcolortarget

-

Photography basics: Color Temperature and White Balance

Read more: Photography basics: Color Temperature and White BalanceColor Temperature of a light source describes the spectrum of light which is radiated from a theoretical “blackbody” (an ideal physical body that absorbs all radiation and incident light – neither reflecting it nor allowing it to pass through) with a given surface temperature.

https://en.wikipedia.org/wiki/Color_temperature

Or. Most simply it is a method of describing the color characteristics of light through a numerical value that corresponds to the color emitted by a light source, measured in degrees of Kelvin (K) on a scale from 1,000 to 10,000.

More accurately. The color temperature of a light source is the temperature of an ideal backbody that radiates light of comparable hue to that of the light source.

(more…) -

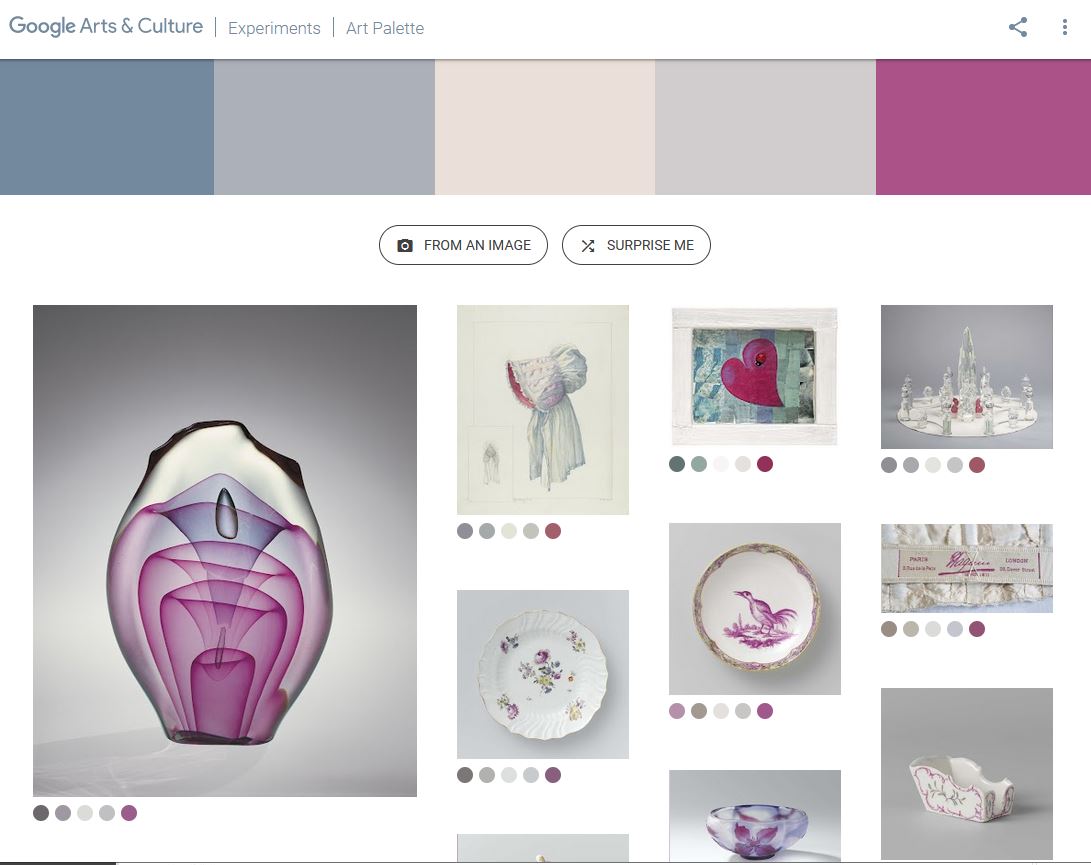

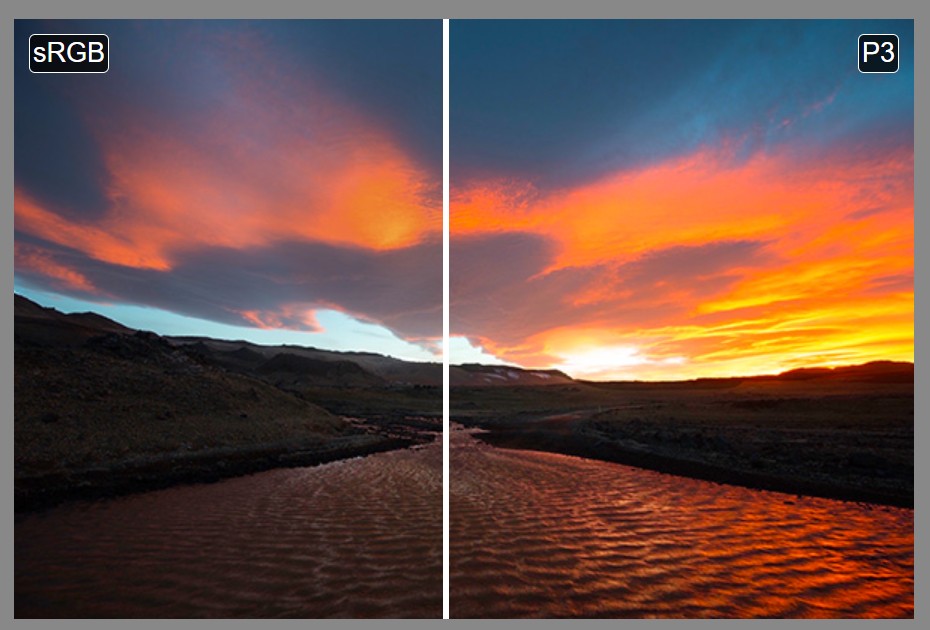

Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?

Read more: Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?https://karuna.dev/colormaxxing

https://webkit.org/blog-files/color-gamut/comparison.html

https://oklch.com/#70,0.1,197,100

-

Tobia Montanari – Memory Colors: an essential tool for Colorists

Read more: Tobia Montanari – Memory Colors: an essential tool for Coloristshttps://www.tobiamontanari.com/memory-colors-an-essential-tool-for-colorists/

“Memory colors are colors that are universally associated with specific objects, elements or scenes in our environment. They are the colors that we expect to see in specific situations: these colors are based on our expectation of how certain objects should look based on our past experiences and memories.

For instance, we associate specific hues, saturation and brightness values with human skintones and a slight variation can significantly affect the way we perceive a scene.

Similarly, we expect blue skies to have a particular hue, green trees to be a specific shade and so on.

Memory colors live inside of our brains and we often impose them onto what we see. By considering them during the grading process, the resulting image will be more visually appealing and won’t distract the viewer from the intended message of the story. Even a slight deviation from memory colors in a movie can create a sense of discordance, ultimately detracting from the viewer’s experience.”

LIGHTING

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

Read more: Ethan Roffler interviews CG Supervisor Daniele TostiEthan Roffler

I recently had the honor of interviewing this VFX genius and gained great insight into what it takes to work in the entertainment industry. Keep in mind, these questions are coming from an artist’s perspective but can be applied to any creative individual looking for some wisdom from a professional. So grab a drink, sit back, and enjoy this fun and insightful conversation.

Ethan

To start, I just wanted to say thank you so much for taking the time for this interview!Daniele

My pleasure.

When I started my career I struggled to find help. Even people in the industry at the time were not that helpful. Because of that, I decided very early on that I was going to do exactly the opposite. I spend most of my weekends talking or helping students. ;)Ethan

(more…)

That’s awesome! I have also come across the same struggle! Just a heads up, this will probably be the most informal interview you’ll ever have haha! Okay, so let’s start with a small introduction! -

Black Body color aka the Planckian Locus curve for white point eye perception

Read more: Black Body color aka the Planckian Locus curve for white point eye perceptionhttp://en.wikipedia.org/wiki/Black-body_radiation

Black-body radiation is the type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature. The radiation has a specific spectrum and intensity that depends only on the temperature of the body.

A black-body at room temperature appears black, as most of the energy it radiates is infra-red and cannot be perceived by the human eye. At higher temperatures, black bodies glow with increasing intensity and colors that range from dull red to blindingly brilliant blue-white as the temperature increases.

The Black Body Ultraviolet Catastrophe Experiment

In photography, color temperature describes the spectrum of light which is radiated from a “blackbody” with that surface temperature. A blackbody is an object which absorbs all incident light — neither reflecting it nor allowing it to pass through.

The Sun closely approximates a black-body radiator. Another rough analogue of blackbody radiation in our day to day experience might be in heating a metal or stone: these are said to become “red hot” when they attain one temperature, and then “white hot” for even higher temperatures. Similarly, black bodies at different temperatures also have varying color temperatures of “white light.”

Despite its name, light which may appear white does not necessarily contain an even distribution of colors across the visible spectrum.

Although planets and stars are neither in thermal equilibrium with their surroundings nor perfect black bodies, black-body radiation is used as a first approximation for the energy they emit. Black holes are near-perfect black bodies, and it is believed that they emit black-body radiation (called Hawking radiation), with a temperature that depends on the mass of the hole.

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. “

Use CRI, Luminous Efficacy and color temperature controls to match your needs.Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. “https://www.studiobinder.com/blog/what-is-color-rendering-index

(more…) -

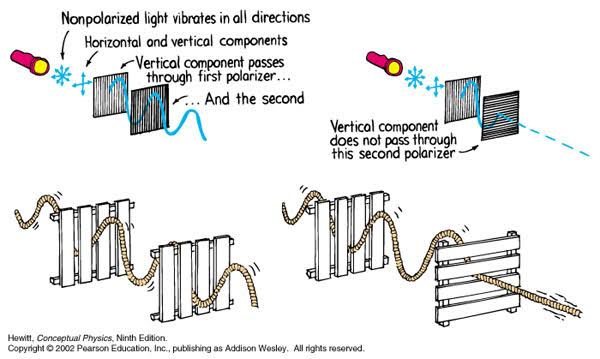

Polarised vs unpolarized filtering

Read more: Polarised vs unpolarized filteringA light wave that is vibrating in more than one plane is referred to as unpolarized light. …

Polarized light waves are light waves in which the vibrations occur in a single plane. The process of transforming unpolarized light into polarized light is known as polarization.

en.wikipedia.org/wiki/Polarizing_filter_(photography)

The most common use of polarized technology is to reduce lighting complexity on the subject.

(more…)

Details such as glare and hard edges are not removed, but greatly reduced. -

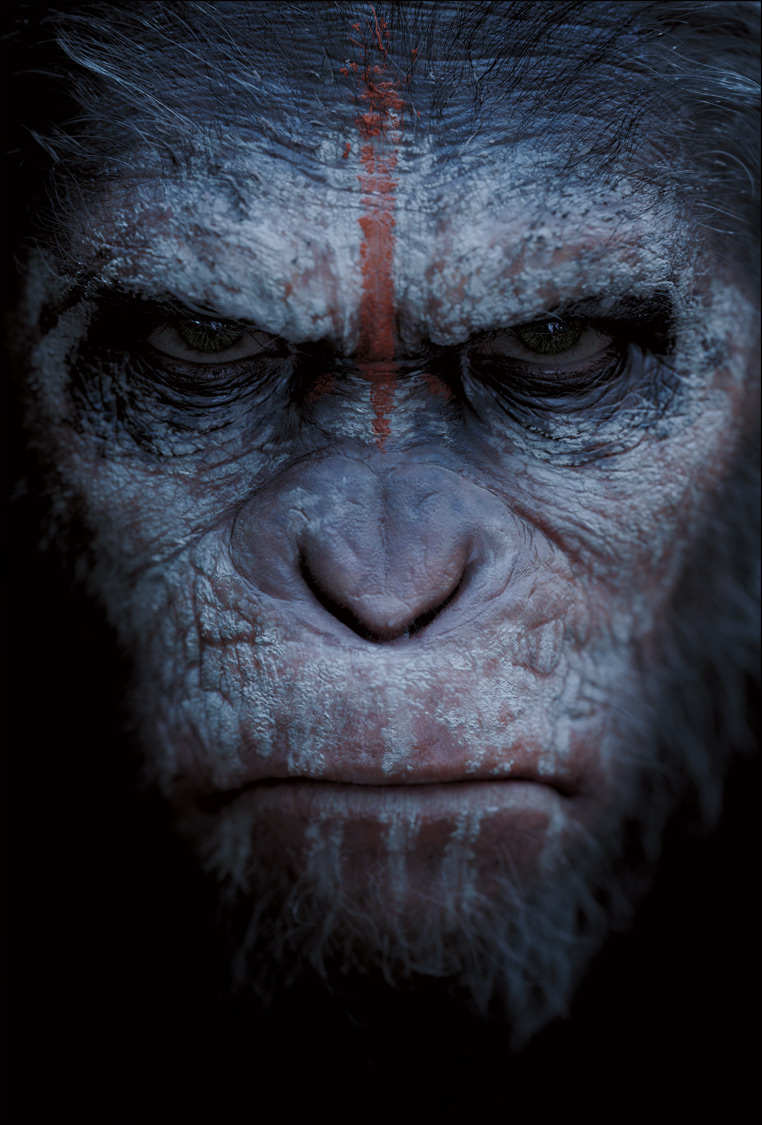

Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010

Read more: Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010 -

Rendering – BRDF – Bidirectional reflectance distribution function

Read more: Rendering – BRDF – Bidirectional reflectance distribution functionhttp://en.wikipedia.org/wiki/Bidirectional_reflectance_distribution_function

The bidirectional reflectance distribution function is a four-dimensional function that defines how light is reflected at an opaque surface

http://www.cs.ucla.edu/~zhu/tutorial/An_Introduction_to_BRDF-Based_Lighting.pdf

In general, when light interacts with matter, a complicated light-matter dynamic occurs. This interaction depends on the physical characteristics of the light as well as the physical composition and characteristics of the matter.

That is, some of the incident light is reflected, some of the light is transmitted, and another portion of the light is absorbed by the medium itself.

A BRDF describes how much light is reflected when light makes contact with a certain material. Similarly, a BTDF (Bi-directional Transmission Distribution Function) describes how much light is transmitted when light makes contact with a certain material

http://www.cs.princeton.edu/~smr/cs348c-97/surveypaper.html

It is difficult to establish exactly how far one should go in elaborating the surface model. A truly complete representation of the reflective behavior of a surface might take into account such phenomena as polarization, scattering, fluorescence, and phosphorescence, all of which might vary with position on the surface. Therefore, the variables in this complete function would be:

incoming and outgoing angle incoming and outgoing wavelength incoming and outgoing polarization (both linear and circular) incoming and outgoing position (which might differ due to subsurface scattering) time delay between the incoming and outgoing light ray

-

HDRI shooting and editing by Xuan Prada and Greg Zaal

Read more: HDRI shooting and editing by Xuan Prada and Greg Zaalwww.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required

Methodology

(more…)

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Kling 1.6 and competitors – advanced tests and comparisons

-

AI Search – Find The Best AI Tools & Apps

-

Photography basics: How Exposure Stops (Aperture, Shutter Speed, and ISO) Affect Your Photos – cheat sheet cards

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

-

Image rendering bit depth

-

What’s the Difference Between Ray Casting, Ray Tracing, Path Tracing and Rasterization? Physical light tracing…

-

Top 3D Printing Website Resources

-

Jesse Zumstein – Jobs in games

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.