COMPOSITION

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

nofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot.”

Use CRI, Luminous Efficacy and color temperature controls to match your needs.

Color Temperature

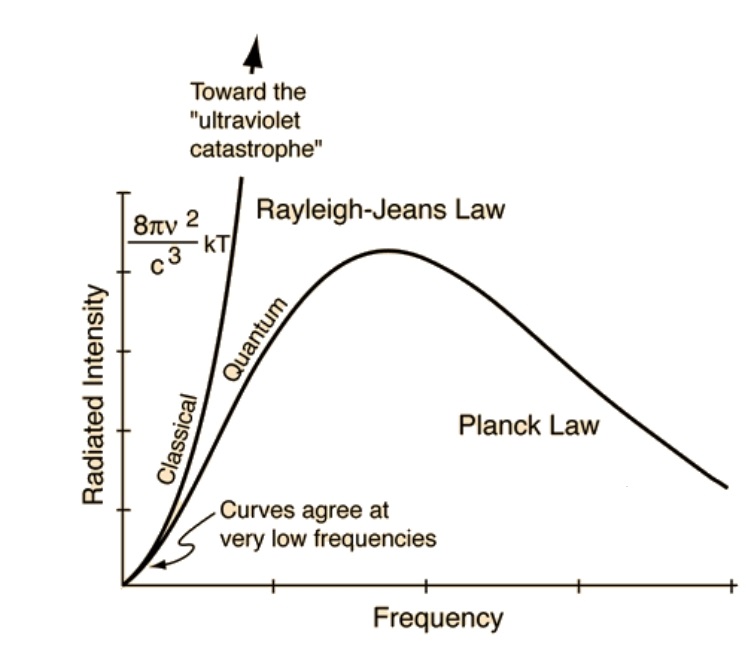

Color temperature describes the “color” of white light from a light source by comparing it to the light radiated by a perfect black body at a given temperature, measured in kelvins.

https://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

https://www.studiobinder.com/blog/what-is-color-rendering-index


DESIGN

-

Mike Mitchell x Marvel x Mondo – Iconic portraits of Marvel’s huge stable of heroes and villains

https://mondoshop.com/blogs/gallery/16910155-mike-mitchell-x-marvel-x-mondo

https://time.com/69659/marvel-comics-mike-mitchell-artist-portraits/

COLOR

-

About color: What is a LUT

http://www.lightillusion.com/luts.html

https://www.shutterstock.com/blog/how-use-luts-color-grading

A LUT (Lookup Table) is essentially the modifier between two images, the original image and the displayed image: a table of precomputed output values, indexed by input value, that stands in for a mathematical transform of varying complexity. There are different types of LUTs: viewing, transform, calibration, 1D and 3D.
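As a rough illustration of the 1D case, the sketch below maps a normalized value through a small table with linear interpolation between entries. The function name `apply_lut_1d` and the five-entry `example_lut` are made up for this example and are not taken from any grading tool; real LUTs usually have far more entries and are applied per channel.

```python
def apply_lut_1d(value, lut):
    """Map a normalized value in [0, 1] through a 1D LUT with linear interpolation."""
    # Clamp the input so it always falls inside the table.
    value = min(max(value, 0.0), 1.0)
    # Fractional position of the value along the table.
    position = value * (len(lut) - 1)
    lower = int(position)
    upper = min(lower + 1, len(lut) - 1)
    fraction = position - lower
    # Blend the two nearest table entries.
    return lut[lower] * (1.0 - fraction) + lut[upper] * fraction


# Hypothetical 5-entry LUT that lifts the midtones.
example_lut = [0.0, 0.35, 0.6, 0.82, 1.0]

for sample in (0.0, 0.25, 0.5, 1.0):
    print(sample, "->", apply_lut_1d(sample, example_lut))
```

A 3D LUT follows the same idea but indexes the table with all three channels at once, which lets it express cross-channel changes that three independent 1D tables cannot.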

-

OLED vs QLED – What TV is better?

LG, Philips, Panasonic and Sony sell OLED TVs.

OLED stands for “organic light emitting diode.”

It is a fundamentally different technology from LCD, the major type of TV today.

OLED is “emissive,” meaning the pixels emit their own light.

Samsung is branding its best TVs with a new acronym: “QLED”

QLED (according to Samsung) stands for “quantum dot LED TV.”

It is a variation of the common LED LCD, adding a quantum dot film to the LCD “sandwich.”

QLED, like LCD, is, in its current form, “transmissive” and relies on an LED backlight.

OLED is the only technology capable of absolute blacks and extremely bright whites on a per-pixel basis. LCD definitely can’t do that, and even the vaunted, beloved, dearly departed plasma couldn’t do absolute blacks.

QLED is positioned as an alternative to OLED rather than a successor to it, since it is still an LCD variant. Its proponents claim that it can produce a wider range of colors than OLED and up to 40% higher luminance efficiency, and some tests report lower power consumption than OLED.

-

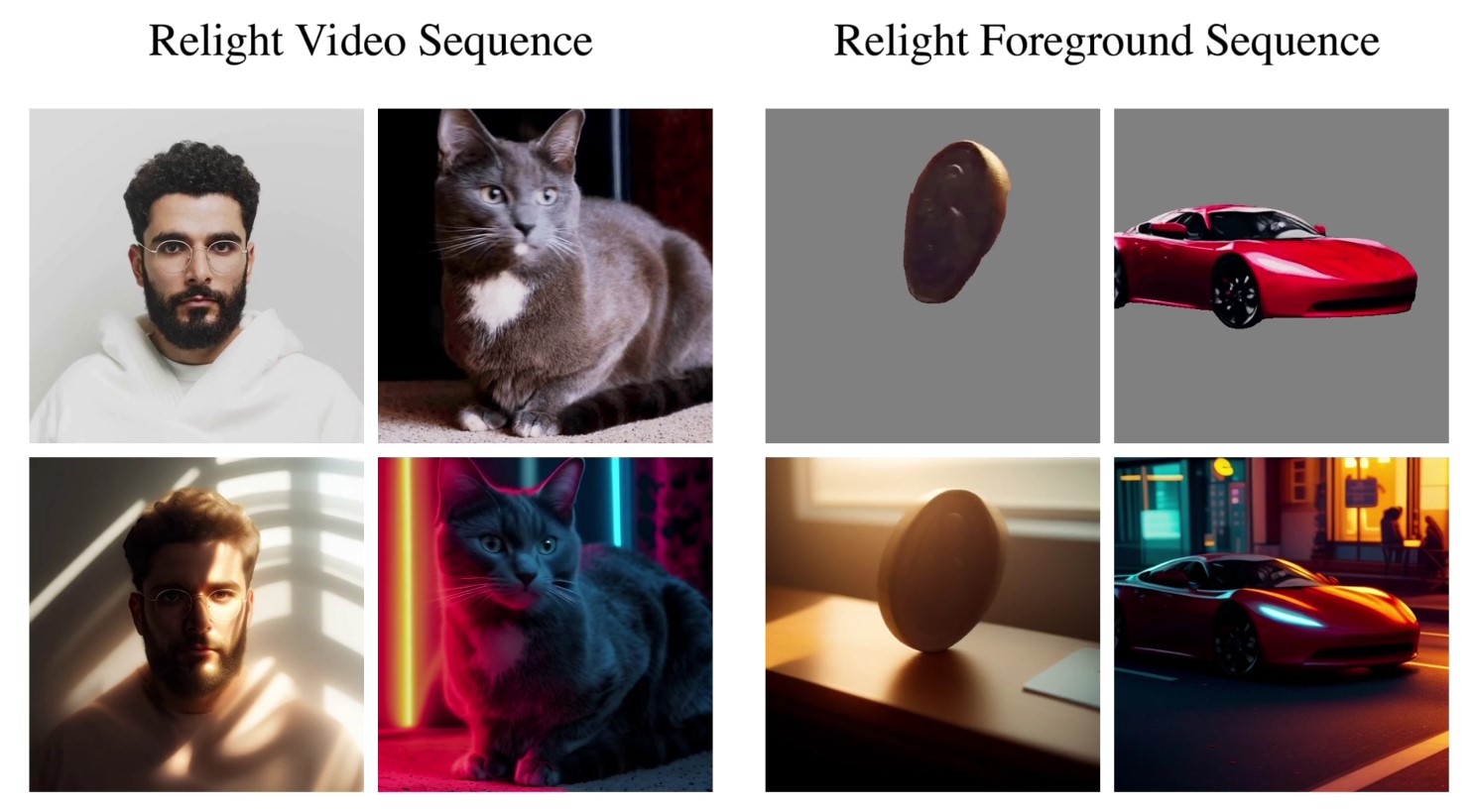

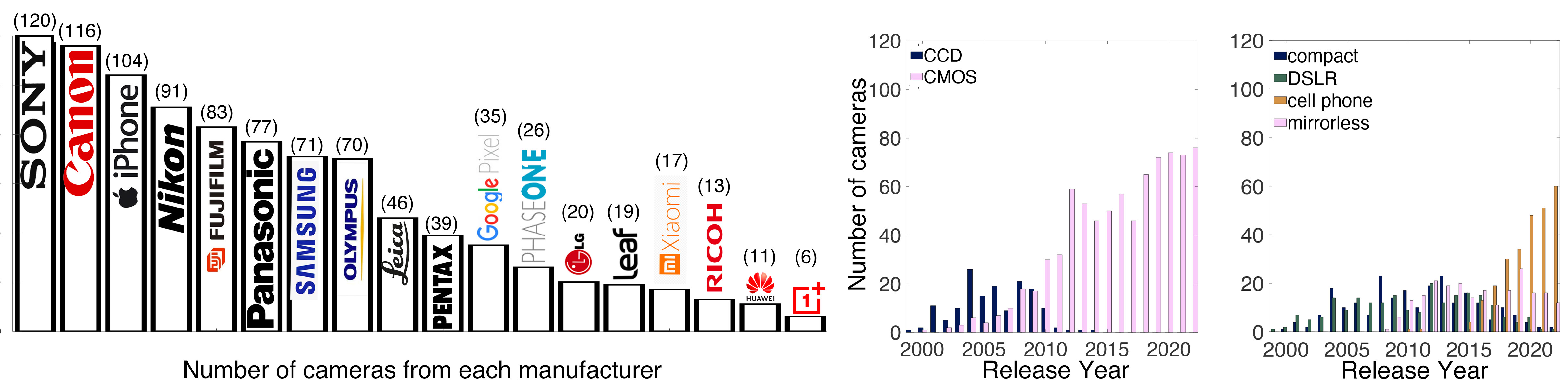

Photography Basics : Spectral Sensitivity Estimation Without a Camera

https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Their estimation requires a costly optical setup, which triggered researchers to come up with numerous indirect methods that aim to lower cost and complexity by using color targets. However, the use of color targets gives rise to new complications that make the estimation more difficult, and consequently, there currently exists no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even if not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Different than other work, we utilize publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.

-

Virtual Production volumes study

Color Fidelity in LED Volumes

https://theasc.com/articles/color-fidelity-in-led-volumes

Virtual Production Glossary

https://vpglossary.com/

What is Virtual Production – In depth analysis

https://www.leadingledtech.com/what-is-a-led-virtual-production-studio-in-depth-technical-analysis/

A comparison of LED panels for use in Virtual Production: Findings and recommendations

https://eprints.bournemouth.ac.uk/36826/1/LED_Comparison_White_Paper%281%29.pdf

-

Capturing the world in HDR for real time projects – Call of Duty: Advanced Warfare

Real-World Measurements for Call of Duty: Advanced Warfare

www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Local version

Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

-

Scene Referred vs Display Referred color workflows

Display Referred is tied to the target hardware; as such, it bakes color requirements into every type of media output request.

Scene Referred uses a common, unified wide gamut and targets each audience through CDL and DI libraries instead.

That way, color information stays untouched and is only “transformed” as and when needed.

Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus

-

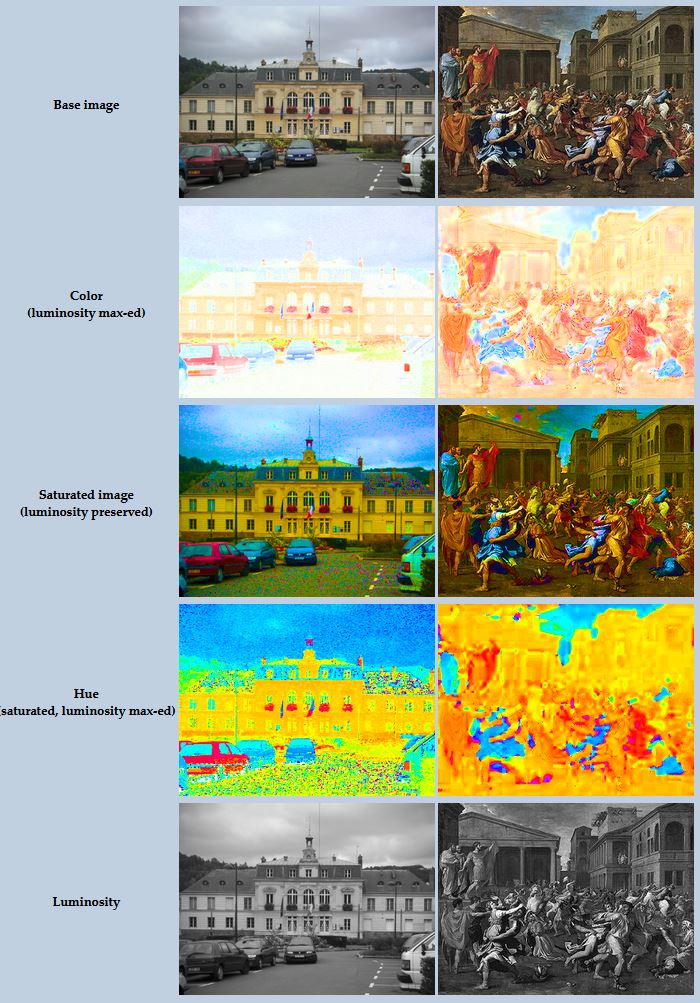

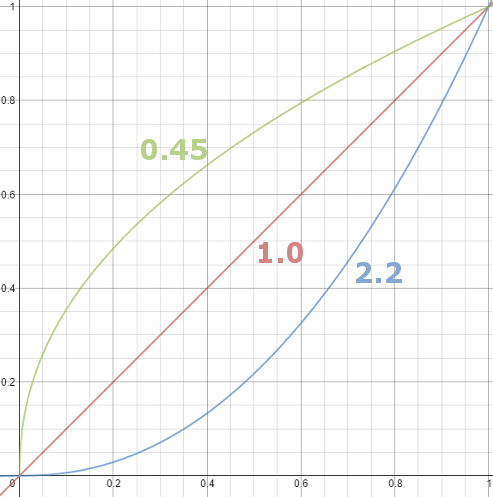

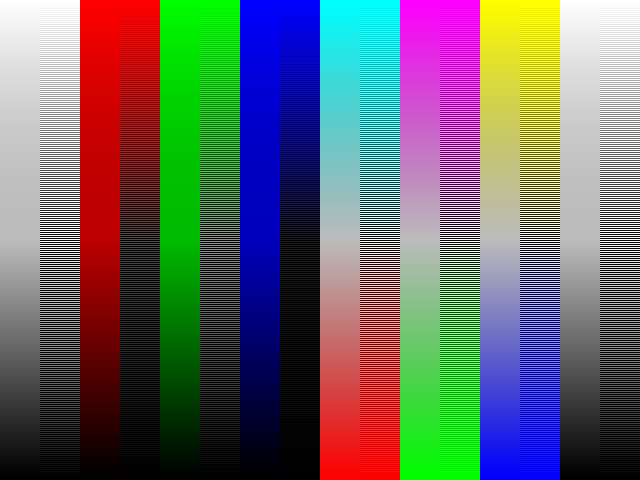

Gamma correction


http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen, and the numerical value of that pixel. Generally Gamma is just about defining relationships.

Three main types:

– Image Gamma encoded in images

– Display Gammas encoded in hardware and/or viewing time

– System or Viewing Gamma which is the net effect of all gammas when you look back at a final image. In theory this should flatten back to 1.0 gamma.
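The way these gammas cancel out can be sketched with a plain power law. This is a simplification: the value 2.2 and the function names below are assumptions for illustration, and real standards such as sRGB use a piecewise curve rather than a pure power function.

```python
def encode_gamma(linear, gamma=2.2):
    """Image gamma: compress a linear value with the inverse exponent."""
    return linear ** (1.0 / gamma)


def decode_gamma(encoded, gamma=2.2):
    """Display gamma: expand an encoded value back to linear light."""
    return encoded ** gamma


# Assumed exponents for this sketch only.
image_gamma = 1.0 / 2.2
display_gamma = 2.2

# System gamma is the net effect of every gamma in the chain.
system_gamma = image_gamma * display_gamma

middle_gray = 0.18
round_trip = decode_gamma(encode_gamma(middle_gray))
print("system gamma:", system_gamma)
print("round trip of 0.18:", round_trip)
```

When the display exponent matches the one used for encoding, the round trip returns the original linear value, which is the "flatten back to 1.0" case described in the list.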


LIGHTING

-

StudioBinder.com – CRI color rendering index

www.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun.”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency

-

Convert between light exposure and intensity

    import math

    def Exposure2Intensity(exposure):
        # Intensity doubles for every stop of exposure.
        exp = float(exposure)
        result = math.pow(2, exp)
        print(result)

    Exposure2Intensity(0)

    def Intensity2Exposure(intensity):
        inarg = float(intensity)
        if inarg == 0:
            print("Exposure of zero intensity is undefined.")
            return
        if inarg < 1e-323:
            # Negative values have no logarithm, so clamp before taking log2.
            inarg = max(inarg, 1e-323)
            print("Exposure of negative intensities is undefined. Clamping to a very small value instead (1e-323)")
        result = math.log2(inarg)
        print(result)

    Intensity2Exposure(0.1)

Why Exposure?

Exposure is a stop value that multiplies the intensity by 2 to the power of the stop. Increasing exposure by 1 results in double the amount of light.

Artists think in “stops.” Doubling or halving brightness is easy math and common in grading and look-dev.

Exposure counts doublings in whole stops:

- +1 stop = ×2 brightness

- −1 stop = ×0.5 brightness

This gives perceptually even controls across both bright and dark values.

Why Intensity?

Intensity is linear.

It’s what render engines and compositors expect when:

- Summing values

- Averaging pixels

- Multiplying or filtering pixel data

Use intensity when you need the actual math on pixel/light data.

Formulas (from the Python above)

- Intensity from exposure: intensity = 2**exposure

- Exposure from intensity: exposure = log₂(intensity)

Guardrails:

- Intensity must be > 0 to compute exposure.

- If intensity = 0 → exposure is undefined.

- Clamp tiny values (e.g. 1e−323) before using log₂.

Use Exposure (stops) when…

- You want artist-friendly sliders (−5…+5 stops)

- Adjusting look-dev or grading in even stops

- Matching plates with quick ±1 stop tweaks

- Tweening brightness changes smoothly across ranges

Use Intensity (linear) when…

- Storing raw pixel/light values

- Multiplying textures or lights by a gain

- Performing sums, averages, and filters

- Feeding values to render engines expecting linear data

Examples

- +2 stops → 2**2 = 4.0 (×4)

- +1 stop → 2**1 = 2.0 (×2)

- 0 stop → 2**0 = 1.0 (×1)

- −1 stop → 2**(−1) = 0.5 (×0.5)

- −2 stops → 2**(−2) = 0.25 (×0.25)

- Intensity 0.1 → exposure = log₂(0.1) ≈ −3.32
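The split between the two representations shows up as soon as values are averaged. The sketch below uses return-value variants of the two conversions (the snake_case names are this example's own, not the original functions) and compares averaging three samples in linear intensity with averaging the same samples in stops.

```python
import math


def exposure_to_intensity(stops):
    """Linear multiplier for a given number of stops."""
    return 2.0 ** stops


def intensity_to_exposure(intensity):
    """Stops for a linear multiplier; only defined for positive values."""
    if intensity <= 0:
        raise ValueError("Exposure is undefined for zero or negative intensity.")
    return math.log2(intensity)


stops = [-2, 0, 2]
linear = [exposure_to_intensity(s) for s in stops]

# Averaging in linear keeps the actual amount of light.
linear_mean = sum(linear) / len(linear)

# Averaging in stops is a geometric mean in linear terms.
stop_mean = sum(stops) / len(stops)
stop_mean_as_intensity = exposure_to_intensity(stop_mean)

print("linear values:", linear)
print("mean computed in linear:", linear_mean)
print("mean computed in stops, converted back:", stop_mean_as_intensity)
```

The two averages disagree because the mean of the stop values corresponds to a geometric mean of the intensities, which is why sums, averages and filters are done on linear data.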

Rule of thumb

Think in stops (exposure) for controls and matching.

Compute in linear (intensity) for rendering and math.

-

Sun cone angle (angular diameter) as perceived by earth viewers

Also see:

https://www.pixelsham.com/2020/08/01/solid-angle-measures/

The cone angle of the sun refers to the angular diameter of the sun as observed from Earth, which is related to the apparent size of the sun in the sky.

The angular diameter of the sun, or the cone angle of the sunlight as perceived from Earth, is approximately 0.53 degrees on average. This value can vary slightly due to the elliptical nature of Earth’s orbit around the sun, but it generally stays within a narrow range.

Here’s a more precise breakdown:


- Average Angular Diameter: About 0.53 degrees (roughly 32 arcminutes)

- Minimum Angular Diameter: Approximately 0.52 degrees (when Earth is at aphelion, the farthest point from the sun)

- Maximum Angular Diameter: Approximately 0.54 degrees (when Earth is at perihelion, the closest point to the sun)

This angular diameter remains relatively constant throughout the day because the sun’s distance from Earth does not change significantly over a single day.

To summarize, the cone angle of the sun’s light, or its angular diameter, is typically around 0.53 degrees, regardless of the time of day.
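These figures can be checked from geometry: a sphere of radius R seen from distance d subtends an angle of 2·asin(R/d). The sketch below uses rounded reference values for the solar radius and the Earth-sun distances; the constants and the function name are this example's own, so treat the printed digits as approximate.

```python
import math

# Rounded reference values, in kilometers (approximations for this sketch).
SUN_RADIUS_KM = 695_700.0
DISTANCES_KM = {
    "perihelion": 147.1e6,
    "mean (1 AU)": 149.6e6,
    "aphelion": 152.1e6,
}


def angular_diameter_deg(radius_km, distance_km):
    """Angular diameter, in degrees, of a sphere seen from a given distance."""
    return math.degrees(2.0 * math.asin(radius_km / distance_km))


for label, distance in DISTANCES_KM.items():
    angle = angular_diameter_deg(SUN_RADIUS_KM, distance)
    print(f"{label}: {angle:.3f} degrees ({angle * 60:.1f} arcminutes)")
```

In a renderer, this is the value to use for a sun light's cone or spread angle when physically plausible shadow softness is wanted.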

https://en.wikipedia.org/wiki/Angular_diameter

-


The Color of Infinite Temperature

This is the color of something infinitely hot.

Of course you’d instantly be fried by gamma rays of arbitrarily high frequency, but this would be its spectrum in the visible range.

johncarlosbaez.wordpress.com/2022/01/16/the-color-of-infinite-temperature/

This is also the color of a typical neutron star. They’re so hot they look the same.

It’s also the color of the early Universe!

This was worked out by David Madore.

The color he got is sRGB(148,177,255).

www.htmlcsscolor.com/hex/94B1FF

And according to the experts who sip latte all day and make up names for colors, this color is called ‘Perano’.

-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

This 2025 I decided to start learning how to code, so I installed Visual Studio and I started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program. And here we are after roughly 8 months of learning programming.

This is Galaxy Engine, and it is a simulation software I have been making ever since I started my learning journey. It currently can simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves.

It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and Open-Source so that anyone can build and compile it locally! You can find the source code in GitHub

https://github.com/NarcisCalin/Galaxy-Engine

-

Disney’s Moana Island Scene – Free data set

https://www.disneyanimation.com/resources/moana-island-scene/

This data set contains everything necessary to render a version of the Motunui island featured in the 2016 film Moana.


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)