COMPOSITION

DESIGN

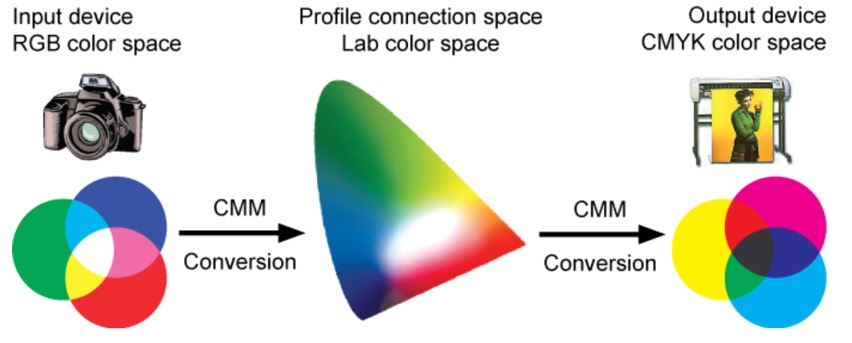

COLOR

-

THOMAS MANSENCAL – The Apparent Simplicity of RGB Rendering

Read more: https://thomasmansencal.substack.com/p/the-apparent-simplicity-of-rgb-rendering

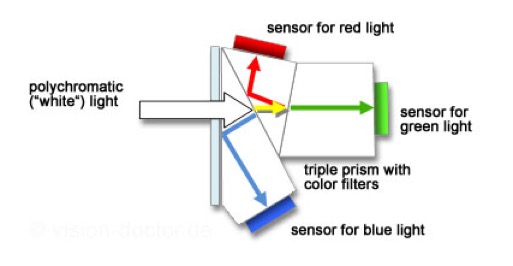

The primary goal of physically-based rendering (PBR) is to create a simulation that accurately reproduces the imaging process of electro-magnetic spectrum radiation incident to an observer. This simulation should be indistinguishable from reality for a similar observer.

Because a camera is not sensitive to incident light in the same way as a human observer, the images it captures are transformed to be colorimetric. A project might require infrared imaging simulation, a portion of the electro-magnetic spectrum that is invisible to us. Radically different observers might image the same scene, but the act of observing does not change the intrinsic properties of the objects being imaged. Consequently, the physical modelling of the virtual scene should be independent of the observer.

-

The Maya civilization and the color blue

Maya blue is a highly unusual pigment because it is a mix of organic indigo and an inorganic clay mineral called palygorskite.

Echoing the color of an azure sky, the indelible pigment was used to accentuate everything from ceramics to human sacrifices in the Late Preclassic period (300 B.C. to A.D. 300).

A team of researchers led by Dean Arnold, an adjunct curator of anthropology at the Field Museum in Chicago, determined that the key to Maya blue was actually a sacred incense called copal.

By heating the mixture of indigo, copal and palygorskite over a fire, the Maya produced the unique pigment, he reported at the time.

-

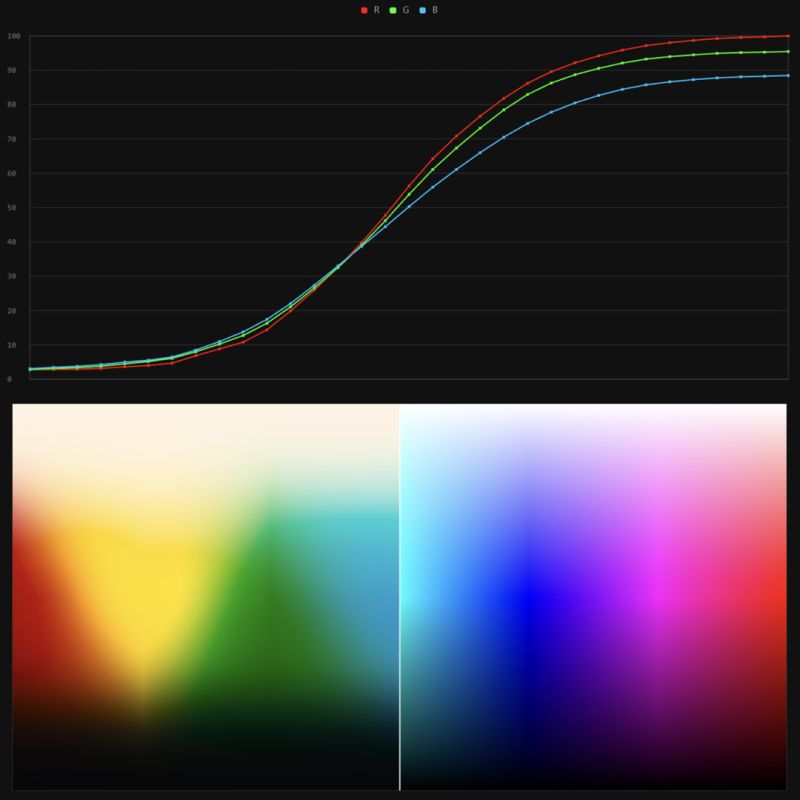

Stefan Ringelschwandtner – LUT Inspector tool

It lets you load any .cube LUT right in your browser, see the RGB curves, and use a split view on the Granger Test Image to compare the original vs. LUT-applied version in real time. It is well suited to spotting hue shifts, saturation changes, and contrast tweaks.

https://mononodes.com/lut-inspector/
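As a rough illustration of what "seeing the RGB curves" of a .cube LUT can involve, the sketch below parses the text of a 3D .cube file and reads the LUT output along the neutral (gray) axis. This is not the LUT Inspector's code; the function names `parse_cube` and `neutral_axis_curves` are hypothetical, and the parser assumes a plain 3D LUT with the usual `LUT_3D_SIZE` keyword and ignores other keywords such as `TITLE` or `DOMAIN_MIN`.

```python
def parse_cube(text):
    """Parse the text of a 3D .cube LUT into (size, list of (r, g, b) rows)."""
    size = None
    rows = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        parts = line.split()
        if parts[0] == "LUT_3D_SIZE":
            size = int(parts[1])
        elif parts[0][0].isalpha():
            continue  # other keywords (TITLE, DOMAIN_MIN, ...) are ignored here
        else:
            rows.append(tuple(float(p) for p in parts[:3]))
    if size is None or len(rows) != size ** 3:
        raise ValueError("not a complete 3D .cube LUT")
    return size, rows


def neutral_axis_curves(size, rows):
    """Return (input, (r, g, b) output) pairs for gray inputs on the LUT grid."""
    curves = []
    for i in range(size):
        # In .cube files the red index varies fastest, then green, then blue.
        index = i + i * size + i * size * size
        curves.append((i / (size - 1), rows[index]))
    return curves
```

Plotting the three output channels against the input value gives one simple set of per-channel curves; a channel that drifts away from the others along the gray axis indicates a color cast introduced by the LUT.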

-

OLED vs QLED – What TV is better?

OLED is supported by LG; Philips, Panasonic and Sony also sell OLED TVs.

OLED stands for “organic light emitting diode.”

It is a fundamentally different technology from LCD, the major type of TV today.

OLED is “emissive,” meaning the pixels emit their own light.

Samsung is branding its best TVs with a new acronym: “QLED.”

QLED (according to Samsung) stands for “quantum dot LED TV.”

It is a variation of the common LED LCD, adding a quantum dot film to the LCD “sandwich.”

QLED, like LCD, is, in its current form, “transmissive” and relies on an LED backlight.

OLED is the only technology capable of absolute blacks and extremely bright whites on a per-pixel basis. LCD definitely can’t do that, and even the vaunted, beloved, dearly departed plasma couldn’t do absolute blacks.

QLED is an improvement over conventional LED LCD rather than over OLED, and Samsung positions it as a competitor to OLED. It is claimed to produce a wider range of colors than OLED and up to 40% higher luminance efficiency. Some tests also report that QLED consumes less power than OLED.

-

Photography basics: Why Use a (MacBeth) Color Chart?

Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In Lightroom

In Final Cut

In Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down gives you the next value on the aperture scale (f/8, f/11, f/16, etc.).

One stop doubles or halves the amount of light that hits the film back or CCD, so everything works in powers of 2.

So, starting with 0.18 in your Constant, you will see that raising it by a stop gives you 0.36 as a floating-point number (in linear space), while your f-stop will be f/11, and so on.

If you set your center stop to 0 (see below), you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words, setting the center f-stop to 0 means that, in a neutral plate, the middle gray in the Macbeth chart will equal exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will usually set the sun around EV 12-17 and the sky around EV 1-4, depending on cloud coverage.

To switch the SpotMeter in Foundry’s Nuke to return the EV of an image, click on the main viewport and press s to open the viewer’s properties. Set the center f-stop to 0 there, and the SpotMeter in the viewport will change from aperture f-stops to EV.
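The stop arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the powers-of-2 relationship, not Nuke's internal implementation; the function names are hypothetical, and `f_stop_readout` simply mirrors the readout as described in the text (one stop up from a center of f/8 reads f/11).

```python
import math

MIDDLE_GRAY = 0.18  # linear value of 18% gray, as used in the text


def apply_stops(value, stops):
    """Scale a linear value by a number of stops (each stop is a factor of 2)."""
    return value * 2.0 ** stops


def relative_ev(value, reference=MIDDLE_GRAY):
    """Offset in stops from the reference; 0 when value equals middle gray."""
    return math.log2(value / reference)


def f_stop_readout(stops, center=8.0):
    """Aperture-scale label for an offset in stops from the center f-stop.

    Assumption: follows the readout described in the text; the f-number
    changes by a factor of sqrt(2) per stop.
    """
    return center * math.sqrt(2.0) ** stops


def exposure_value(f_number, shutter_seconds):
    """Standard photographic exposure value: EV = log2(N^2 / t)."""
    return math.log2(f_number ** 2 / shutter_seconds)


one_stop_up = apply_stops(MIDDLE_GRAY, 1)
print(one_stop_up)                   # linear value one stop above middle gray
print(relative_ev(one_stop_up))      # offset in stops from middle gray
print(round(f_stop_readout(1), 1))   # aperture label one stop from f/8
print(exposure_value(1.0, 1.0))      # EV for f/1.0 at 1 second
```

The aperture labels on a lens (f/8, f/11, f/16) are rounded values of this sqrt(2) progression, which is why the computed number one stop from f/8 is close to, but not exactly, 11.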

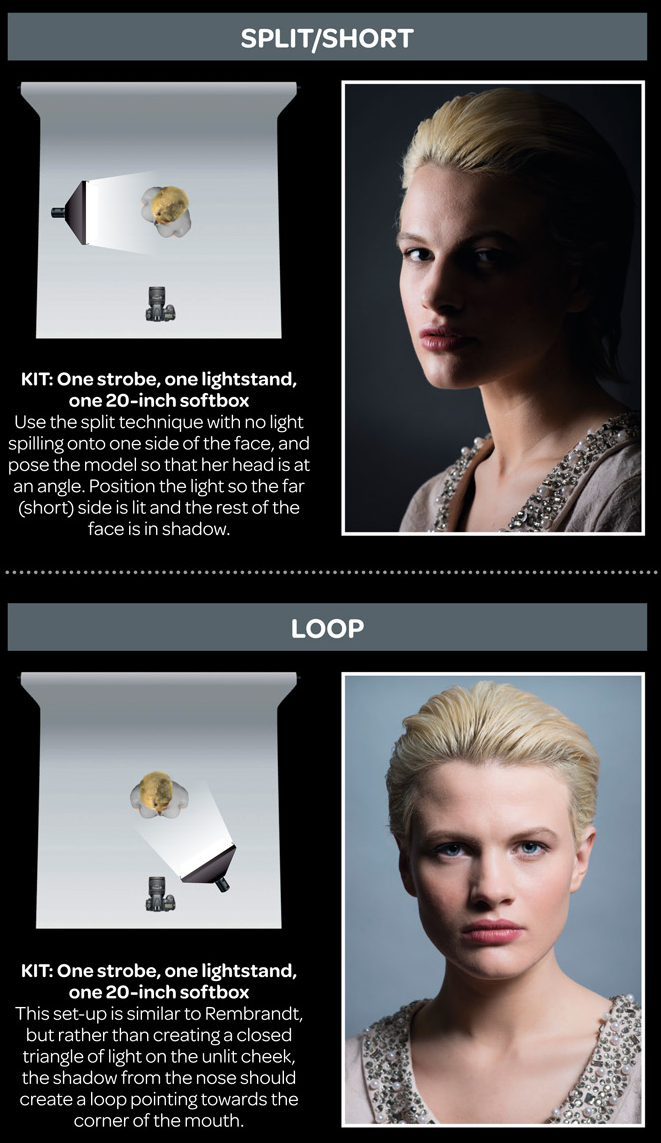

LIGHTING

-

9 Best Hacks to Make a Cinematic Video with Any Camera

Read more: https://www.flexclip.com/learn/cinematic-video.html

- Frame Your Shots to Create Depth

- Create Shallow Depth of Field

- Avoid Shaky Footage and Use Flexible Camera Movements

- Properly Use Slow Motion

- Use Cinematic Lighting Techniques

- Apply Color Grading

- Use Cinematic Music and SFX

- Add Cinematic Fonts and Text Effects

- Create the Cinematic Bar at the Top and the Bottom

-

Photography basics: Solid Angle measures

Read more: http://www.calculator.org/property.aspx?name=solid+angle

A solid angle is a measure of how large an object appears to an observer looking from a given point. It is therefore a natural measure for objects in the sky: it is useful for returning the apparent size of the sun and moon and, in perspective, how much they contribute to lighting. A solid angle can also be expressed as an ‘angular diameter’.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). In terms of the total celestial sphere, and before atmospheric scattering, the Sun and the Moon subtend fractional areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On Earth, the sun is likely closer to 0.00011 sr after atmospheric scattering. The sun as perceived from Earth has an angular diameter of 0.53 degrees, which corresponds to a solid angle of about 0.000067 sr.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2θ = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.
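The conversion from angular diameter to solid angle can be checked with a short Python sketch. It uses the standard spherical-cap formula for a circular disc, Ω = 2π(1 − cos θ), where θ is half the angular diameter. The function names are hypothetical, and treating the "whole night sky" as a hemisphere of 2π sr is an assumption.

```python
import math


def solid_angle_from_angular_diameter(diameter_deg):
    """Solid angle (sr) of a circular disc seen under the given angular diameter."""
    half_angle = math.radians(diameter_deg) / 2.0
    # Spherical cap formula: omega = 2 * pi * (1 - cos(half_angle))
    return 2.0 * math.pi * (1.0 - math.cos(half_angle))


def fraction_of_sphere(solid_angle_sr):
    """Fraction of the full celestial sphere (4 * pi sr) that is covered."""
    return solid_angle_sr / (4.0 * math.pi)


moon = solid_angle_from_angular_diameter(0.52)
sun = solid_angle_from_angular_diameter(0.53)
hemisphere = 2.0 * math.pi  # assumption: the visible sky is half the sphere

print(f"moon: {moon:.3e} sr, {fraction_of_sphere(moon) * 100:.6f}% of the sphere")
print(f"sun:  {sun:.3e} sr, {fraction_of_sphere(sun) * 100:.6f}% of the sphere")
print(f"sky / moon ratio: {hemisphere / moon:.0f}")
```

For such small angles the result is very close to the flat-disc approximation π·θ², which is why the solid angle scales with the square of the angular diameter.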

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

Willem Zwarthoed – Aces gamut in VFX production pdf

Read more: https://www.provideocoalition.com/color-management-part-12-introducing-aces/

Local copy:

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

-

HDRI Resources

Text2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360

-

How are Energy and Matter the Same?

Read more: www.turnerpublishing.com/blog/detail/everything-is-energy-everything-is-one-everything-is-possible/

www.universetoday.com/116615/how-are-energy-and-matter-the-same/

As Einstein showed us, light and matter are just aspects of the same thing. Matter is just frozen light. And light is matter on the move. Albert Einstein’s most famous equation says that energy and matter are two sides of the same coin. How does one become the other?

Relativity requires that the faster an object moves, the more mass it appears to have. This means that somehow part of the energy of the object’s motion appears to transform into mass. Hence the origin of Einstein’s equation. How does that happen? We don’t really know. We only know that it does.

Matter is 99.999999999999 percent empty space. Not only do the atom and solid matter consist mainly of empty space; the same is true of outer space.

Quantum theory researchers discovered the answer: not only do particles consist of energy, but so does the space between them. This is the so-called zero-point energy. Therefore it is true: everything consists of energy.

Energy is the basis of material reality. Every type of particle is conceived of as a quantum vibration in a field: Electrons are vibrations in electron fields, protons vibrate in a proton field, and so on. Everything is energy, and everything is connected to everything else through fields.

-

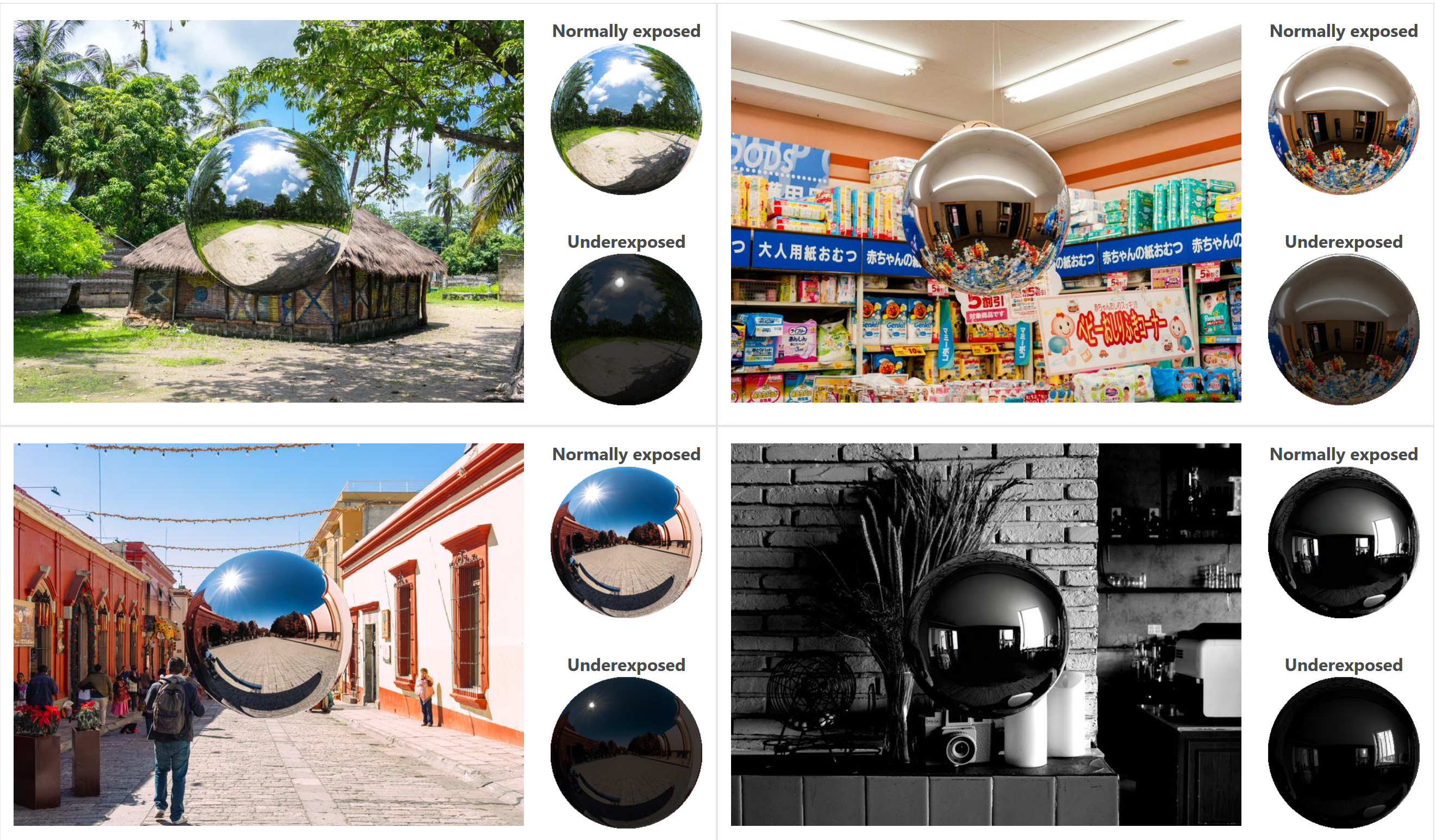

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

Read more: https://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR diffusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.