COMPOSITION

-

9 Best Hacks to Make a Cinematic Video with Any Camera

Read more: https://www.flexclip.com/learn/cinematic-video.html

- Frame Your Shots to Create Depth

- Create Shallow Depth of Field

- Avoid Shaky Footage and Use Flexible Camera Movements

- Properly Use Slow Motion

- Use Cinematic Lighting Techniques

- Apply Color Grading

- Use Cinematic Music and SFX

- Add Cinematic Fonts and Text Effects

- Create the Cinematic Bar at the Top and the Bottom

-

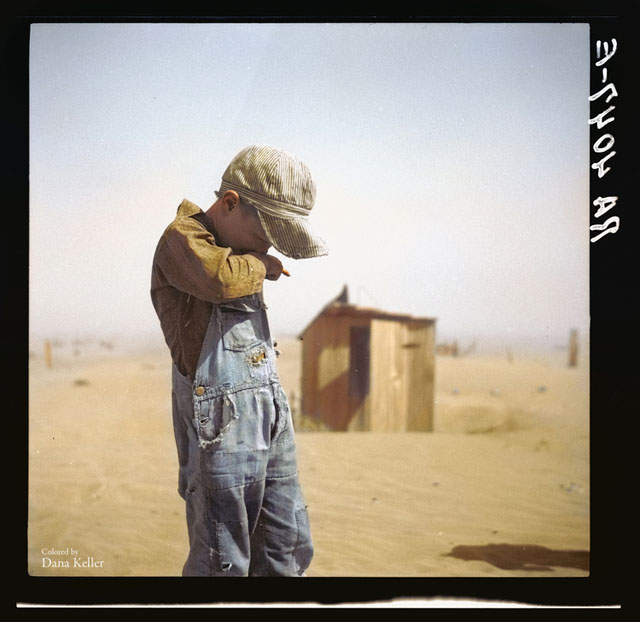

Photography basics: Depth of Field and composition

Depth of field (DOF) is the range of distances within which a photo appears acceptably sharp.

Aperture has a huge effect on the depth of field. Changing the f-stop (f/#) of a lens changes the aperture and, with it, the DOF.

An f-stop is simply a number that tells you the size of the aperture relative to the focal length. That is how the f-stop relates to aperture (and DOF).

Increasing the f-stop decreases the aperture and increases the DOF, the area in focus. Conversely, decreasing the f-stop increases the aperture and decreases the DOF.

The red cone in the figure is an angular representation of the resolution of the system, while the dotted lines indicate the aperture coverage. Where the lines of the two cones intersect defines the total range of the depth of field.

This image explains why the longer the depth of field, the greater the range of clarity.
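The link between f-stop and depth of field can be checked with the usual hyperfocal-distance approximation. The sketch below is not from the original post: the function name, the 0.03 mm circle of confusion (a common full-frame assumption), and the sample lens values are illustrative assumptions only.

```python
import math


def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return (near, far) limits of acceptable sharpness in millimetres.

    Uses the common hyperfocal-distance approximation. coc_mm is the
    assumed circle of confusion (0.03 mm is a typical full-frame value).
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# Hypothetical setup: 50 mm lens focused at 3 m, at three different f-stops.
for n in (2.0, 5.6, 16.0):
    near, far = depth_of_field(50, n, 3000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

With these assumed values, the sharp range widens as the f-number goes up, which matches the rule stated above.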

-

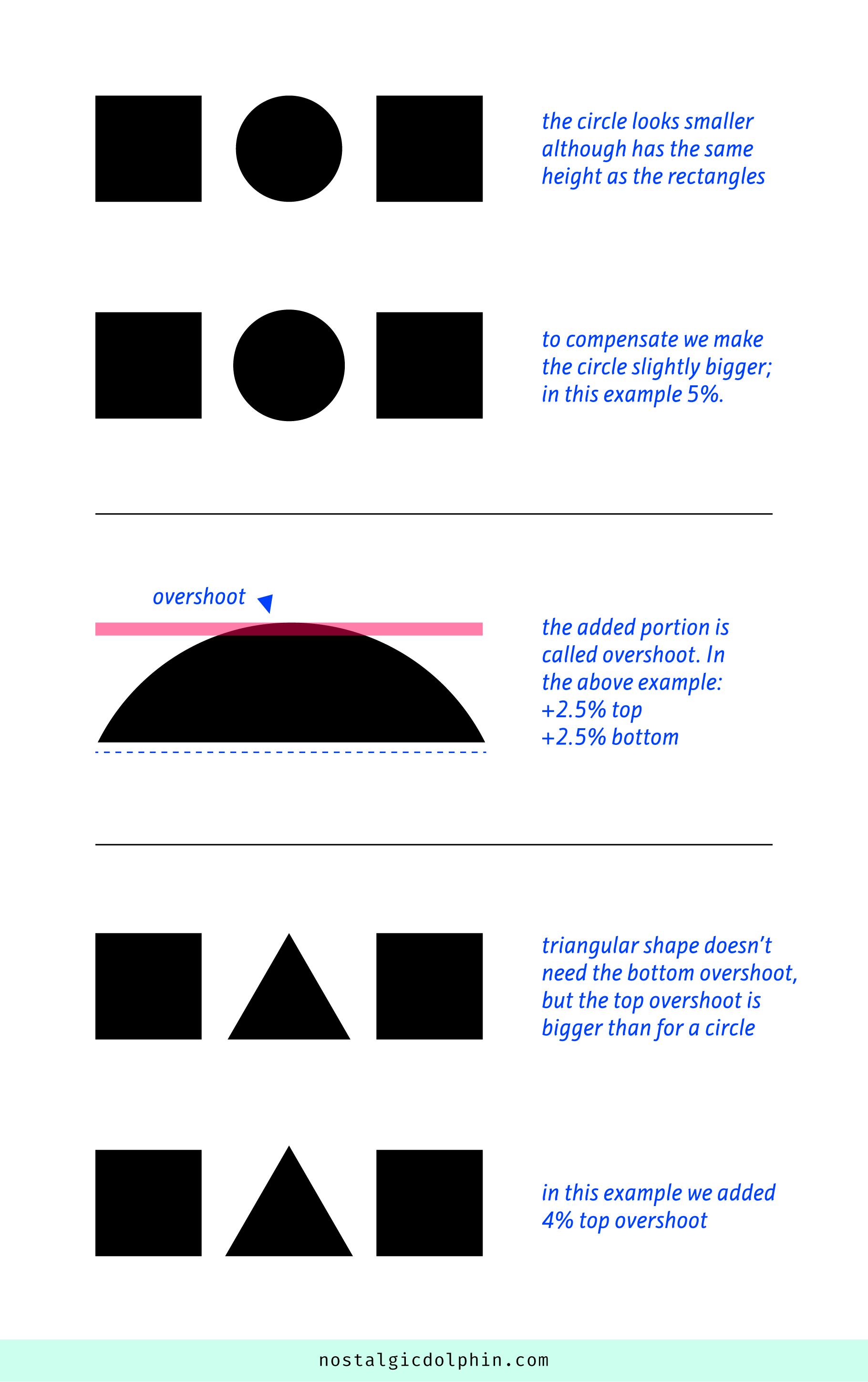

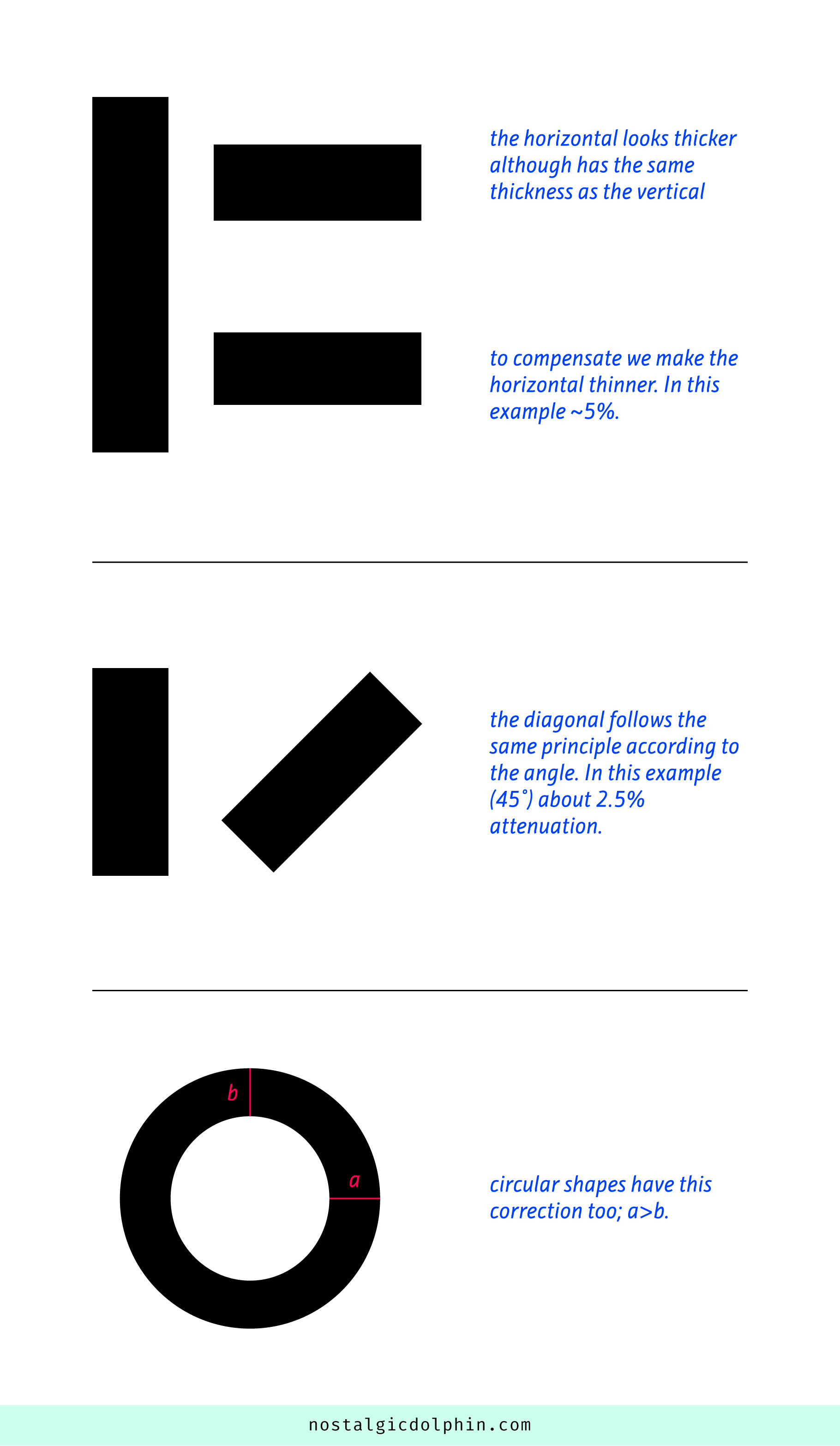

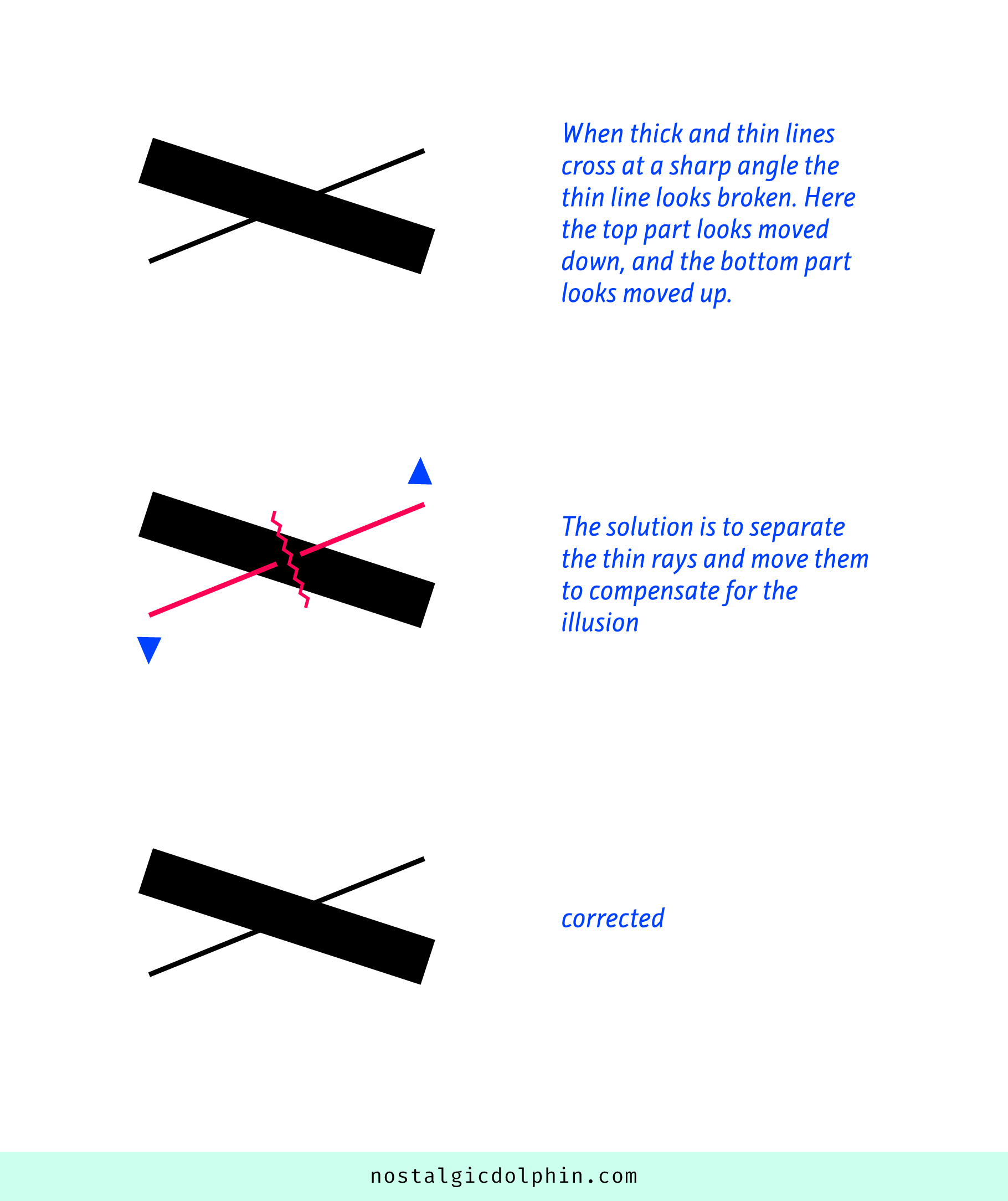

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

DESIGN

-

Reuben Wu – Glowing Geometric Light Paintings

Read more: www.thisiscolossal.com/2021/04/reuben-wu-ex-stasis/

Wu programmed a stick of 200 LED lights to shift in color and shape above the calm landscapes. He captured the mesmerizing movements in-camera, and through a combination of stills, timelapse, and real-time footage, produced four audiovisual works that juxtapose the natural scenery with the artificially produced light and electronic sounds.

-

This legendary DC Comics style guide was nearly lost for years – now you can buy it

Read more: https://www.fastcompany.com/91133306/dc-comics-style-guide-was-lost-for-years-now-you-can-buy-it

Reproduced from a rare original copy, the book features over 165 highly-detailed scans of the legendary art by José Luis García-López, with an introduction by Paul Levitz, former president of DC Comics.

https://standardsmanual.com/products/1982-dc-comics-style-guide

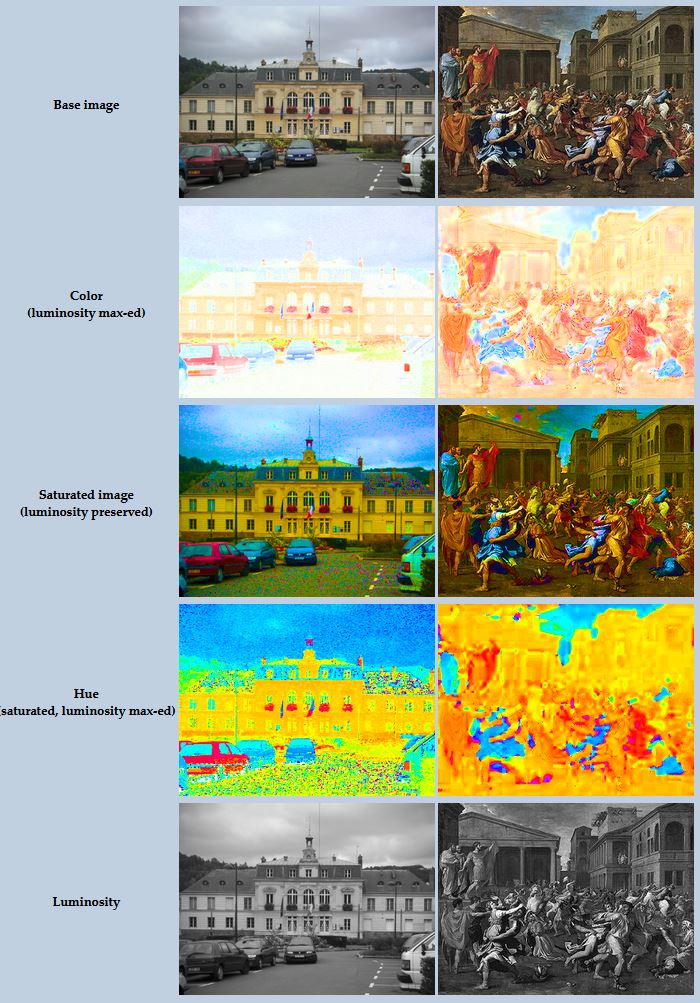

COLOR

-

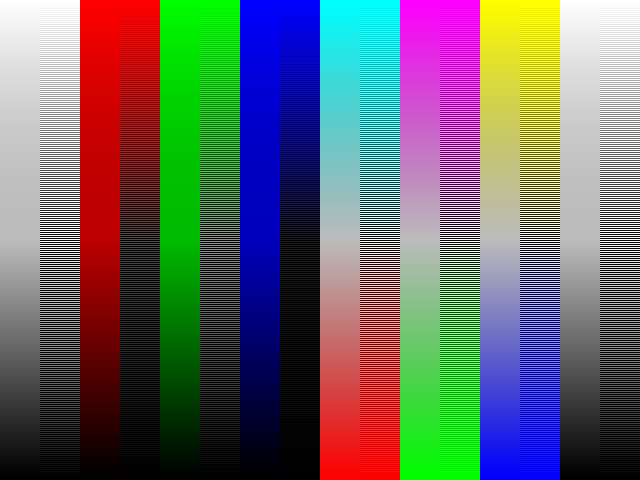

HDR and Color

Read more: https://www.soundandvision.com/content/nits-and-bits-hdr-and-color

In HD we often refer to the range of available colors as a color gamut. Such a color gamut is typically plotted on a two-dimensional diagram, called a CIE chart, as shown at the top of this post. Each color is characterized by its x/y coordinates.

Good enough for government work, perhaps. But for HDR, with its higher luminance levels and wider color, the gamut becomes three-dimensional.

For HDR the color gamut therefore becomes a characteristic we now call the color volume. It isn’t easy to show color volume on a two-dimensional medium like the printed page or a computer screen, but one method is shown below. As the luminance becomes higher, the picture eventually turns to white. As it becomes darker, it fades to black. The traditional color gamut shown on the CIE chart is simply a slice through this color volume at a selected luminance level, such as 50%.

Three different color volumes—we still refer to them as color gamuts though their third dimension is important—are currently the most significant. The first is BT.709 (sometimes referred to as Rec.709), the color gamut used for pre-UHD/HDR formats, including standard HD.

The largest is known as BT.2020; it encompasses (roughly) the range of colors visible to the human eye (though ET might find it insufficient!).

Between these two is the color gamut used in digital cinema, known as DCI-P3.
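As a rough illustration of how the three gamuts nest, the sketch below compares the area of each primaries triangle on the CIE 1931 xy chart. It is not from the linked article: the chromaticity values are the commonly published primaries, quoted here from memory as an assumption worth checking against the standards, and area on the xy chart is neither perceptually uniform nor a measure of color volume.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries
# (assumed values, to be verified against the published standards).
PRIMARIES = {
    "BT.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of the gamut triangle on the xy diagram (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


areas = {name: triangle_area(p) for name, p in PRIMARIES.items()}
for name, area in areas.items():
    print(f"{name}: {area / areas['BT.709']:.2f}x the BT.709 triangle")
```

This only compares the two-dimensional slices. The luminance axis that turns a gamut into a color volume is ignored.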

sRGB

D65

-

Akiyoshi Kitaoka – Surround biased illumination perception

Read more: https://x.com/AkiyoshiKitaoka/status/1798705648001327209

The left face appears whitish and the right one blackish, but they are made up of the same luminance.

https://community.wolfram.com/groups/-/m/t/3191015

Illusory staircase Gelb effect

https://www.psy.ritsumei.ac.jp/akitaoka/illgelbe.html

-

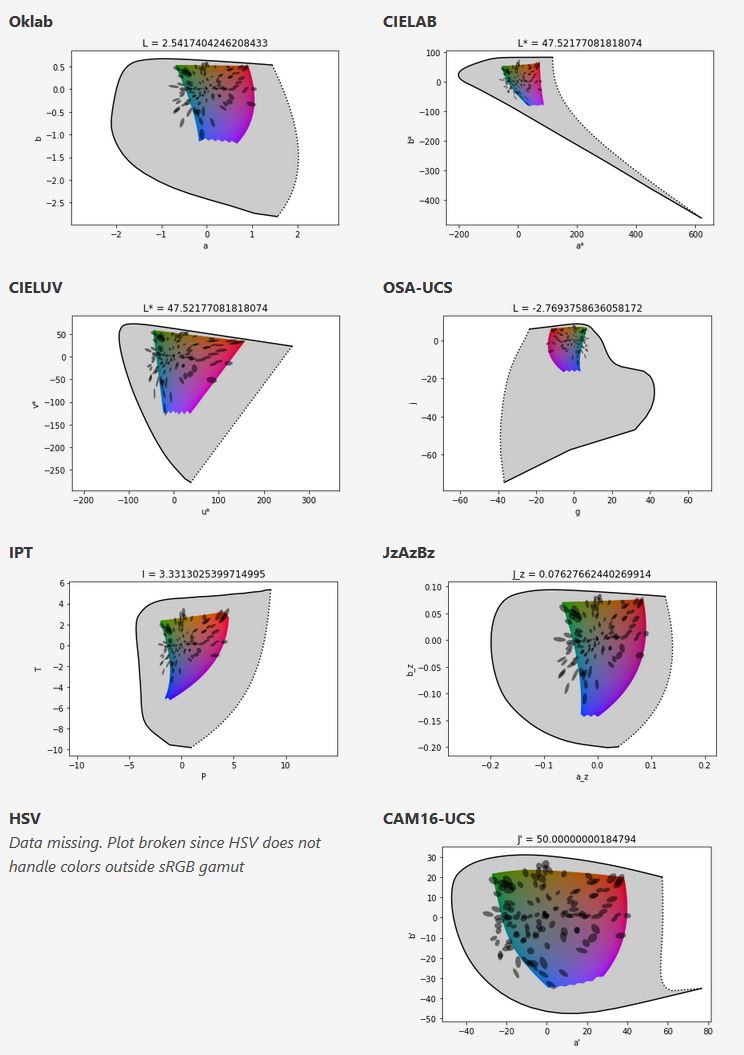

Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color picking

Read more: https://bottosson.github.io/misc/colorpicker

https://bottosson.github.io/posts/colorpicker/

https://www.smashingmagazine.com/2024/10/interview-bjorn-ottosson-creator-oklab-color-space/

One problem with sRGB is that in a gradient between blue and white, it becomes a bit purple in the middle of the transition. That’s because sRGB really isn’t created to mimic how the eye sees colors; rather, it is based on how CRT monitors work. That means it works with certain frequencies of red, green, and blue, and also the non-linear coding called gamma. It’s a miracle it works as well as it does, but it’s not connected to color perception. When using those tools, you sometimes get surprising results, like purple in the gradient.

There were also attempts to create simple models matching human perception based on XYZ, but as it turned out, it’s not possible to model all color vision that way. Perception of color is incredibly complex and depends, among other things, on whether it is dark or light in the room and the background color it is against. When you look at a photograph, it also depends on what you think the color of the light source is. The dress is a typical example of color vision being very context-dependent. It is almost impossible to model this perfectly.

I based Oklab on two other color spaces, CIECAM16 and IPT. I used the lightness and saturation prediction from CIECAM16, which is a color appearance model, as a target. I actually wanted to use the datasets used to create CIECAM16, but I couldn’t find them.

IPT was designed to have better hue uniformity. In experiments, they asked people to match light and dark colors, saturated and unsaturated colors, which resulted in a dataset for which colors, subjectively, have the same hue. IPT has a few other issues but is the basis for hue in Oklab.
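For readers who want to experiment, here is a minimal sketch of the sRGB to Oklab conversion. The coefficients are transcribed from Ottosson's published reference implementation as best I recall them, so treat them as an assumption to verify against his post. The function names are hypothetical.

```python
def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel in the 0..1 range."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_oklab(r, g, b):
    """Convert a non-linear sRGB color (0..1 per channel) to Oklab (L, a, b)."""
    r, g, b = (srgb_to_linear(c) for c in (r, g, b))
    # Linear sRGB -> approximate cone responses.
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    # Cube-root non-linearity, then the mix into lightness and two opponent axes.
    l_, m_, s_ = l ** (1 / 3), m ** (1 / 3), s ** (1 / 3)
    return (
        0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_,
        1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_,
        0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_,
    )


for name, rgb in (("white", (1, 1, 1)), ("yellow", (1, 1, 0)), ("blue", (0, 0, 1))):
    L, a, b = srgb_to_oklab(*rgb)
    print(f"{name}: L={L:.3f} a={a:.3f} b={b:.3f}")
```

White should land close to L = 1 with a and b near zero, which is a quick sanity check on the transcription.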

In the Munsell color system, colors are described with three parameters, designed to match the perceived appearance of colors: Hue, Chroma and Value. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. Modern color spaces and models, such as CIELAB, CAM16 and Björn Ottosson’s own Oklab, are very similar in their construction.

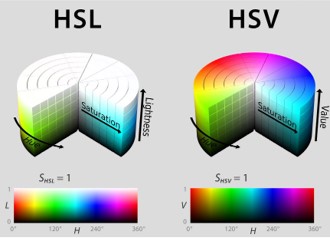

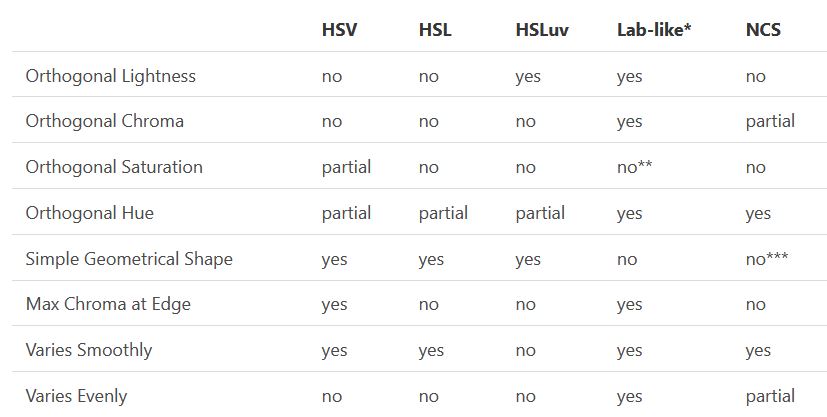

By far the most used color spaces today for color picking are HSL and HSV, two representations introduced in the classic 1978 paper “Color Spaces for Computer Graphics”. HSL and HSV were designed to roughly correlate with perceptual color properties while being very simple and cheap to compute.

Today HSL and HSV are most commonly used together with the sRGB color space.

One of the main advantages of HSL and HSV over the different Lab color spaces is that they map the sRGB gamut to a cylinder. This makes them easy to use since all parameters can be changed independently, without the risk of creating colors outside of the target gamut.

The main drawback on the other hand is that their properties don’t match human perception particularly well.
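A quick way to see this mismatch is to compare two colors that HSL treats as equally light. The sketch below uses Python's standard `colorsys` module. The `relative_luminance` helper is a hypothetical name and assumes the usual Rec.709 luminance weights for linear sRGB.

```python
import colorsys


def relative_luminance(r, g, b):
    """Relative luminance of an sRGB color (0..1 per channel)."""
    def to_linear(c):
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
    return 0.2126 * to_linear(r) + 0.7152 * to_linear(g) + 0.0722 * to_linear(b)


yellow, blue = (1.0, 1.0, 0.0), (0.0, 0.0, 1.0)
for name, rgb in (("yellow", yellow), ("blue", blue)):
    _, lightness, _ = colorsys.rgb_to_hls(*rgb)
    print(f"{name}: HSL lightness {lightness:.2f}, luminance {relative_luminance(*rgb):.3f}")
```

Both colors report the same HSL lightness, yet their luminance differs by more than a factor of ten, which is the kind of gap Okhsl and Okhsv aim to reduce.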

Reconciling these conflicting goals perfectly isn’t possible, but given that HSV and HSL don’t use anything derived from experiments relating to human perception, creating something that makes a better tradeoff does not seem unreasonable.

With this new lightness estimate, we are ready to look into the construction of Okhsv and Okhsl.

LIGHTING

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

Read more: https://www.translatorscafe.com/unit-converter/en-US/illumination/1-11/

The power of a light source is measured in watts (W). This tells you how much power the light draws from the socket; it does not directly describe how bright the light appears.

Power is energy per second. The emitted energy comes out in the form of photons, which we can crudely represent as rays of light leaving the source. The higher the power, the more rays the source emits per unit of time.

Not all emitted energy is visible to the human eye, so we often rely on photometric measurements, which take into account the sensitivity of the human eye to different wavelengths.
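The photometric units in the title are related by simple geometry. The sketch below assumes an idealized point source that radiates equally in all directions, which real fixtures do not. The function names and the 800 lm bulb are hypothetical.

```python
import math

LUX_PER_FOOTCANDLE = 10.764  # 1 footcandle = 1 lumen per square foot


def lumens_to_candelas(lumens):
    """Luminous intensity of a source radiating equally over a full sphere (4*pi sr)."""
    return lumens / (4 * math.pi)


def illuminance_lux(candelas, distance_m):
    """Illuminance on a surface facing the source (inverse-square law)."""
    return candelas / distance_m ** 2


def lux_to_footcandles(lux):
    """Convert lumens per square metre to lumens per square foot."""
    return lux / LUX_PER_FOOTCANDLE


# Hypothetical 800 lm bulb, measured 2 m away.
cd = lumens_to_candelas(800)
lux = illuminance_lux(cd, 2.0)
print(f"{cd:.1f} cd, {lux:.1f} lx, {lux_to_footcandles(lux):.2f} fc")
```

Lumens describe the total visible output, candelas the output in one direction, and lux or footcandles what actually arrives at a surface.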

Details in the post

-

Convert between light exposure and intensity

    import math


    def Exposure2Intensity(exposure):
        """Convert exposure in stops to a linear intensity multiplier."""
        result = math.pow(2, float(exposure))
        print(result)
        return result


    def Intensity2Exposure(intensity):
        """Convert a linear intensity to exposure in stops (log base 2)."""
        inarg = float(intensity)
        if inarg == 0:
            print("Exposure of zero intensity is undefined.")
            return None
        if inarg < 1e-323:
            # Negative (or vanishingly small) input: clamp before taking the log.
            inarg = 1e-323
            print("Exposure of negative intensities is undefined. Clamping to a very small value instead (1e-323)")
        result = math.log2(inarg)
        print(result)
        return result


    Exposure2Intensity(0)
    Intensity2Exposure(0.1)

Why Exposure?

Exposure is a stop value that multiplies the intensity by 2 to the power of the stop. Increasing exposure by 1 results in double the amount of light.

Artists think in “stops.” Doubling or halving brightness is easy math and common in grading and look-dev.

Exposure counts doublings in whole stops:

- +1 stop = ×2 brightness

- −1 stop = ×0.5 brightness

This gives perceptually even controls across both bright and dark values.

Why Intensity?

Intensity is linear.

It’s what render engines and compositors expect when:

- Summing values

- Averaging pixels

- Multiplying or filtering pixel data

Use intensity when you need the actual math on pixel/light data.

Formulas (from the Python above)

- Intensity from exposure: intensity = 2**exposure

- Exposure from intensity: exposure = log₂(intensity)

Guardrails:

- Intensity must be > 0 to compute exposure.

- If intensity = 0 → exposure is undefined.

- Clamp tiny values (e.g. 1e−323) before using log₂.

Use Exposure (stops) when…

- You want artist-friendly sliders (−5…+5 stops)

- Adjusting look-dev or grading in even stops

- Matching plates with quick ±1 stop tweaks

- Tweening brightness changes smoothly across ranges

Use Intensity (linear) when…

- Storing raw pixel/light values

- Multiplying textures or lights by a gain

- Performing sums, averages, and filters

- Feeding values to render engines expecting linear data

Examples

- +2 stops → 2**2 = 4.0 (×4)

- +1 stop → 2**1 = 2.0 (×2)

- 0 stop → 2**0 = 1.0 (×1)

- −1 stop → 2**(−1) = 0.5 (×0.5)

- −2 stops → 2**(−2) = 0.25 (×0.25)

- Intensity 0.1 → exposure = log₂(0.1) ≈ −3.32
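Two of the uses listed earlier, matching plates and tweening brightness, can be sketched with the same two formulas. The helper names below are hypothetical and assume strictly positive linear intensities.

```python
import math


def stops_between(intensity_a, intensity_b):
    """How many stops intensity_b sits above (+) or below (-) intensity_a."""
    return math.log2(intensity_b / intensity_a)


def tween_in_stops(start, end, t):
    """Blend two positive linear intensities evenly in exposure space (t in 0..1)."""
    exposure = (1 - t) * math.log2(start) + t * math.log2(end)
    return 2 ** exposure


print(stops_between(1.0, 4.0))        # stop difference between the two plates
print(tween_in_stops(1.0, 4.0, 0.5))  # halfway point when blending in stops
print((1.0 + 4.0) / 2)                # halfway point when blending linearly
```

Blending in stops puts the midpoint one doubling above the start, whereas a linear blend lands noticeably brighter. That is why exposure is the friendlier space for sliders and animation.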

Rule of thumb

Think in stops (exposure) for controls and matching.

Compute in linear (intensity) for rendering and math.

-

Free HDRI libraries

- noahwitchell.com: http://www.noahwitchell.com/freebies

- locationtextures.com: https://locationtextures.com/panoramas/

- maxroz.com: https://www.maxroz.com/hdri/list

- HDRI Haven: https://hdrihaven.com/

- Poly Haven: https://polyhaven.com/hdris

- Domeble: https://www.domeble.com/

- IHDRI: https://www.ihdri.com/

- HDRMaps: https://hdrmaps.com/

- NoEmotionHdrs.net: http://noemotionhdrs.net/hdrday.html

- OpenFootage.net: https://www.openfootage.net/hdri-panorama/

- HDRI-hub: https://www.hdri-hub.com/hdrishop/hdri

- zwischendrin: https://www.zwischendrin.com/en/browse/hdri

Longer list here:

https://cgtricks.com/list-sites-free-hdri/

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

Read more: http://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)