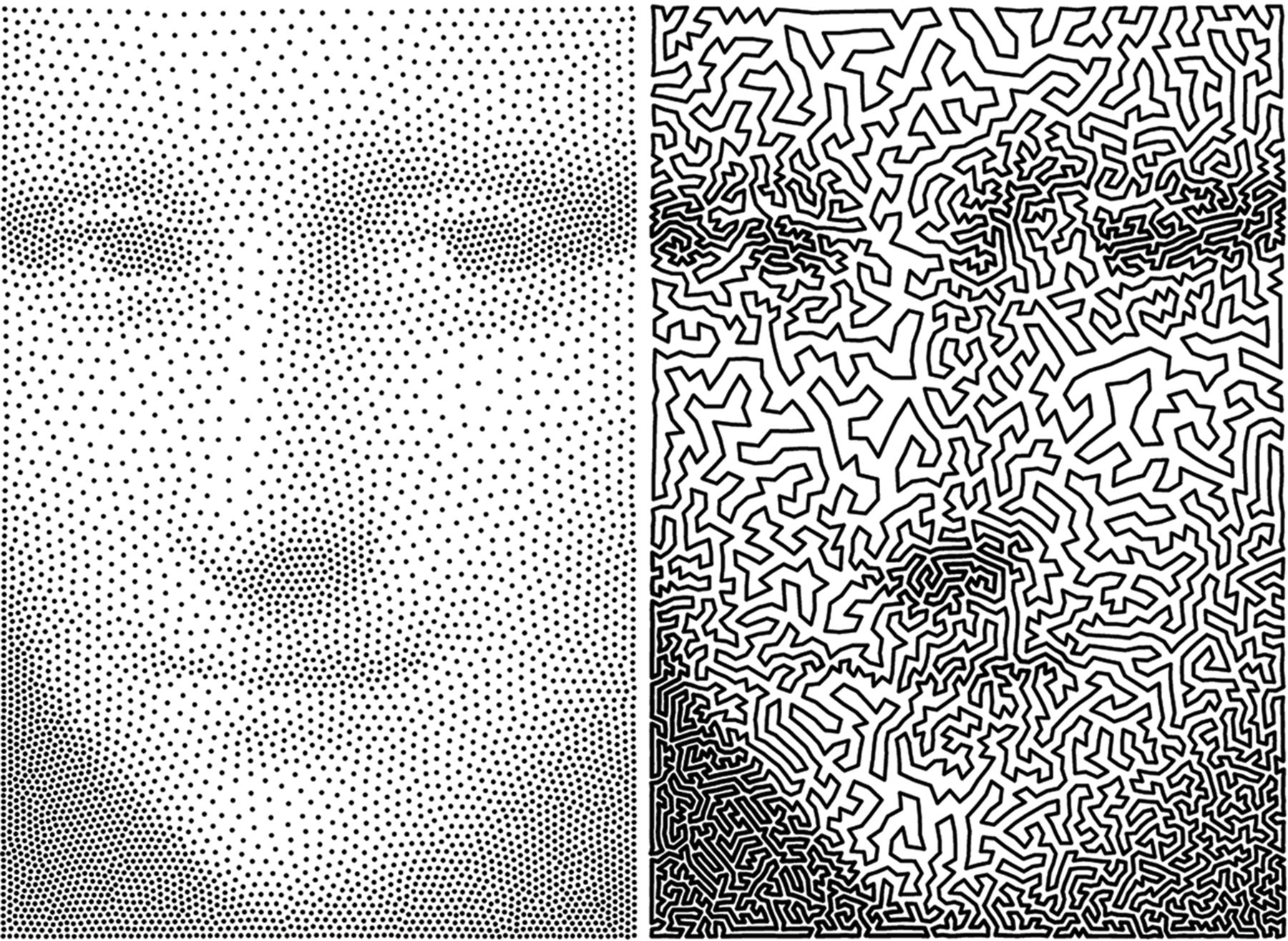

COMPOSITION

DESIGN


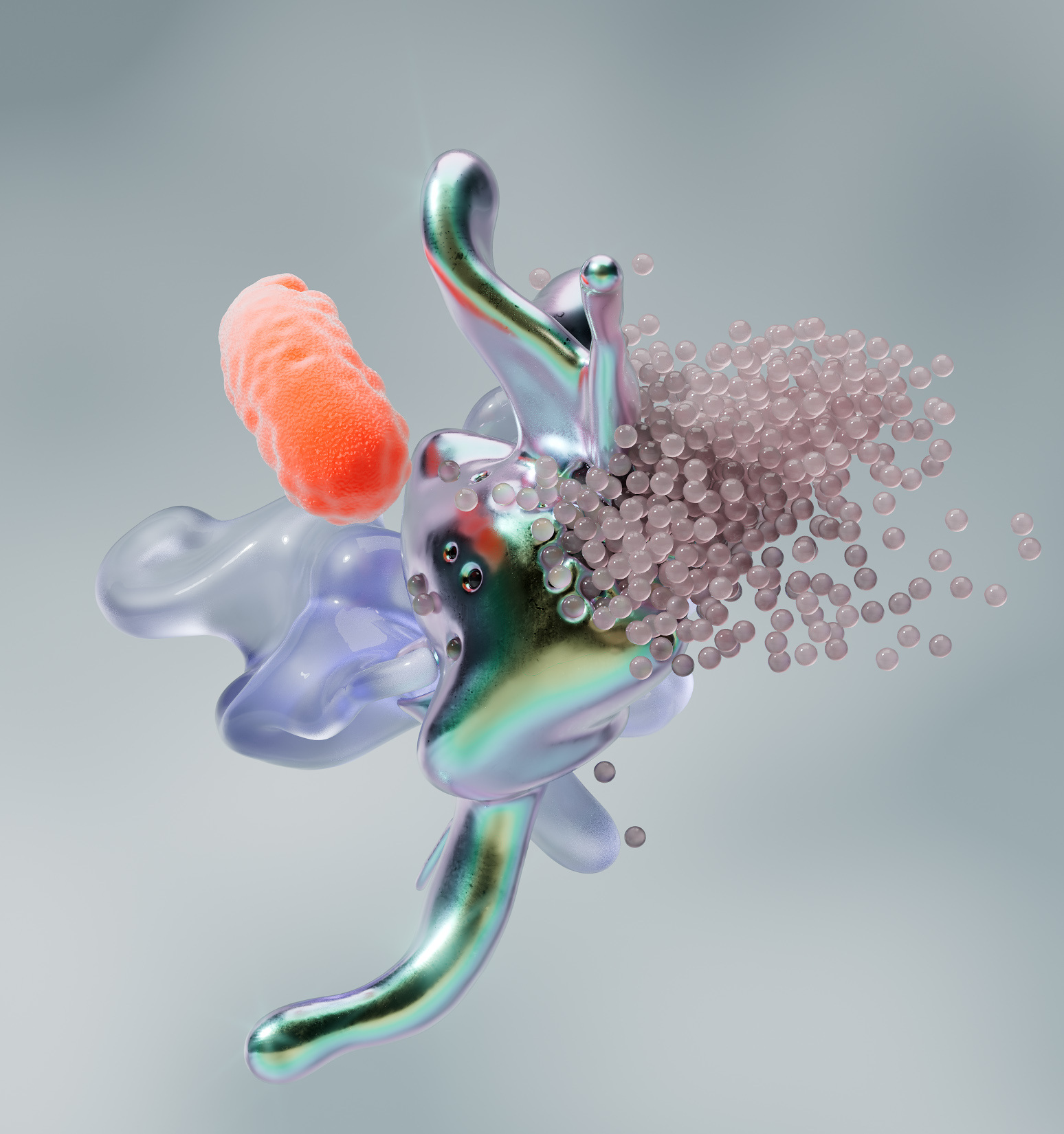

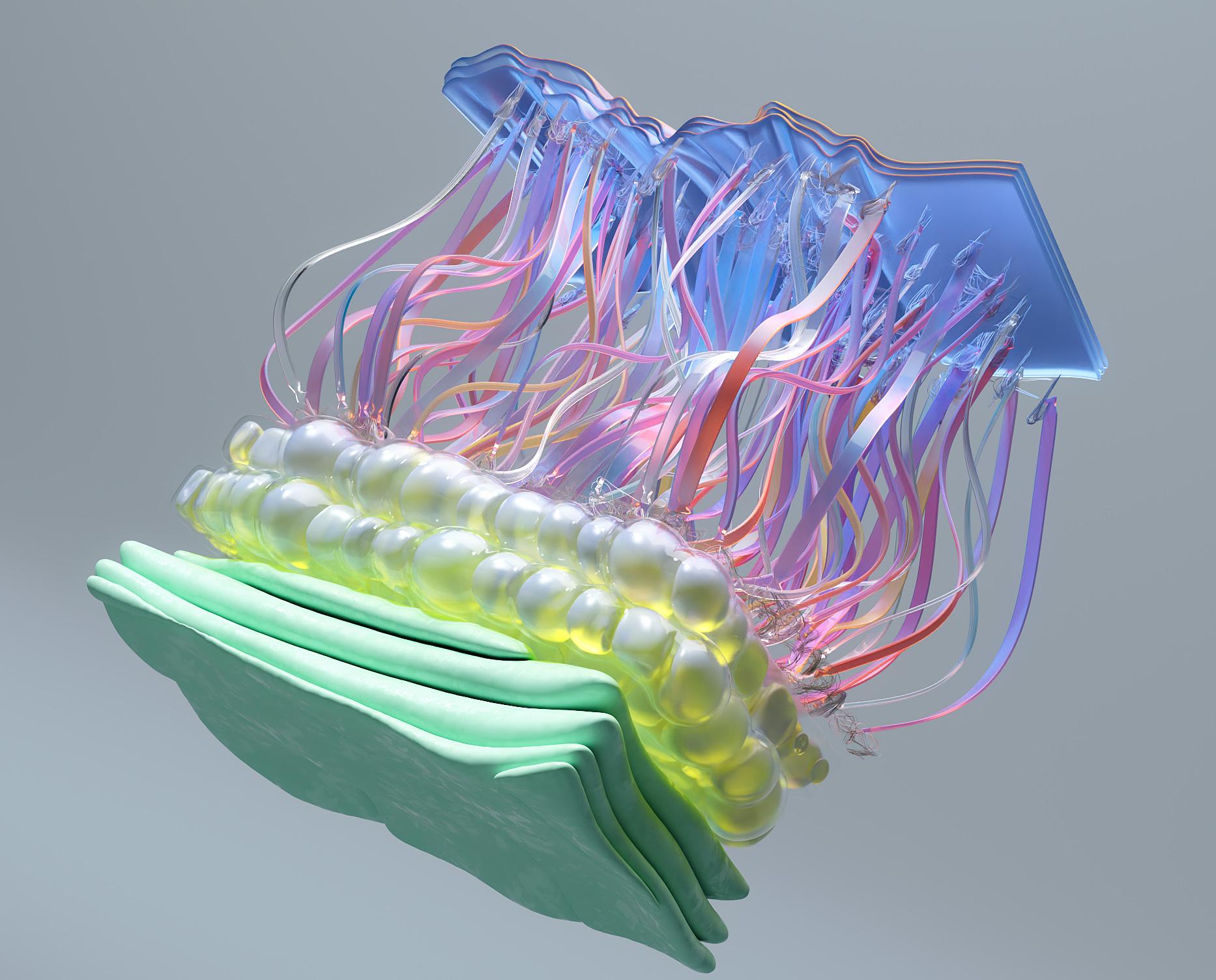

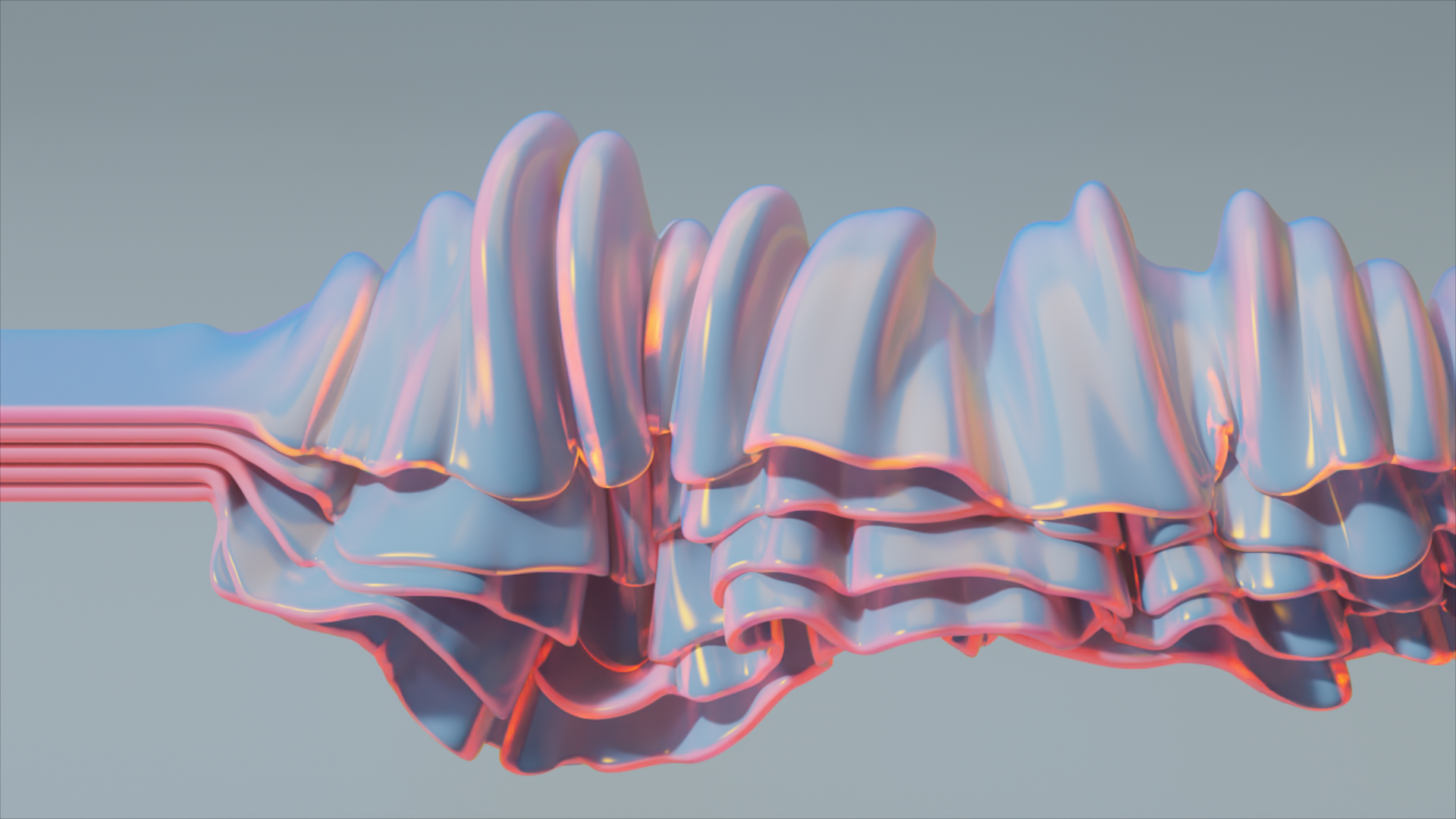

James Gerde – The way the leaves dance in the rain

Read more: https://www.instagram.com/gerdegotit/reel/C6s-2r2RgSu/

After spending a lot of time recently with SDXL, I’ve made my way back to SD 1.5.

While the models have less fidelity overall, nothing compares to the motion models currently available for AnimateDiff with 1.5 models.

To date, this is one of my favorite pieces, not because I think it’s the best it can be, but because the workflow adjustments unlocked some very important ideas I can’t wait to try out.

Performance by @silkenkelly and @itxtheballerina on IG

COLOR

LIGHTING


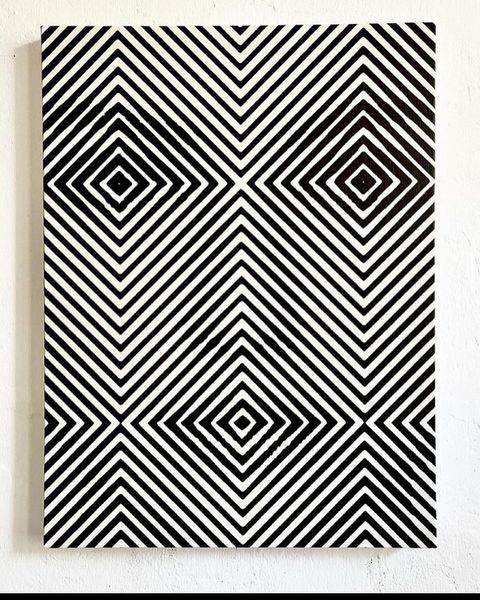

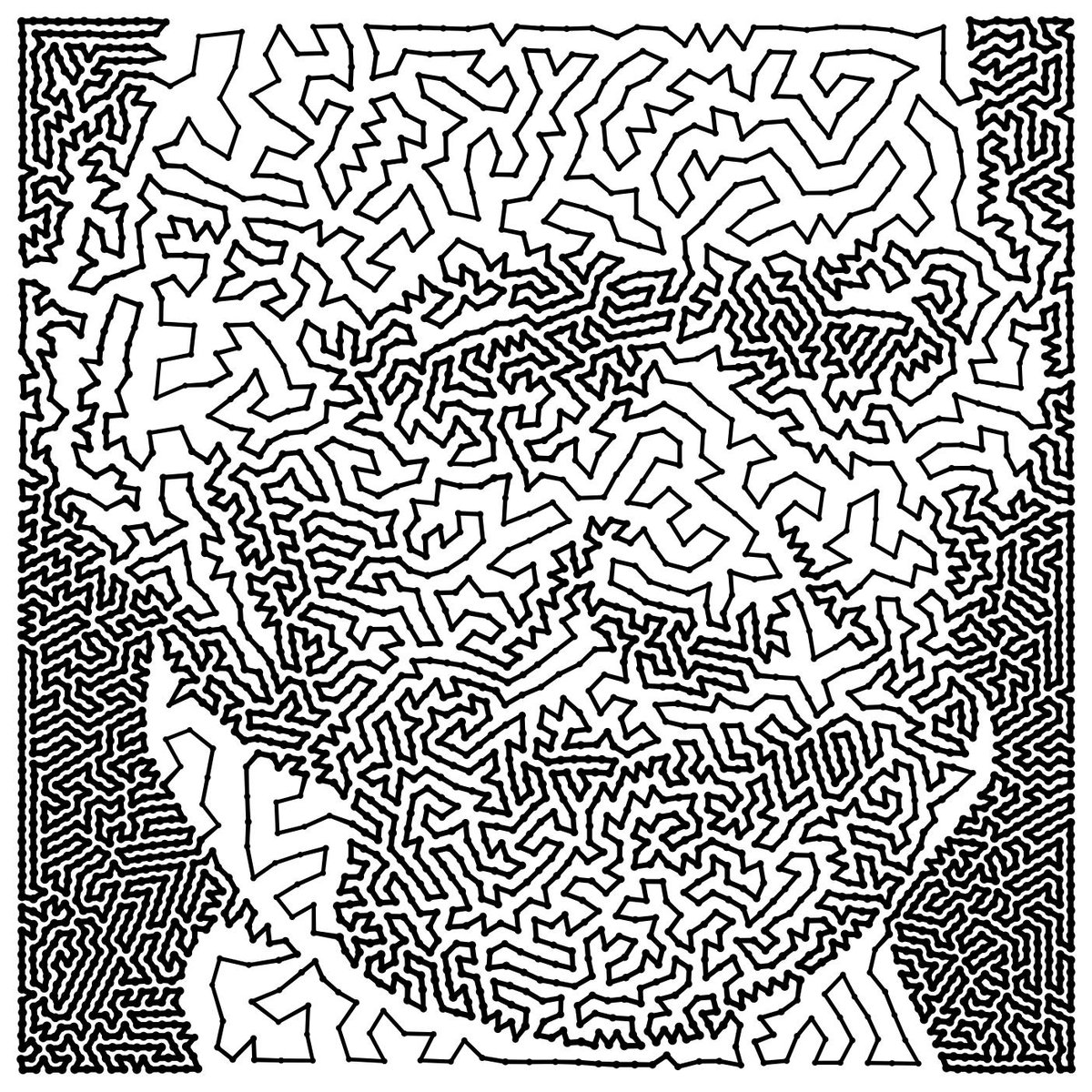

Composition – cinematography Cheat Sheet


Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus, and Mr. Pink (Steve Buscemi) is highlighted by a shaft of light.

Color. Both are in black and white, but the red on Mr. White’s shirt really stands out.


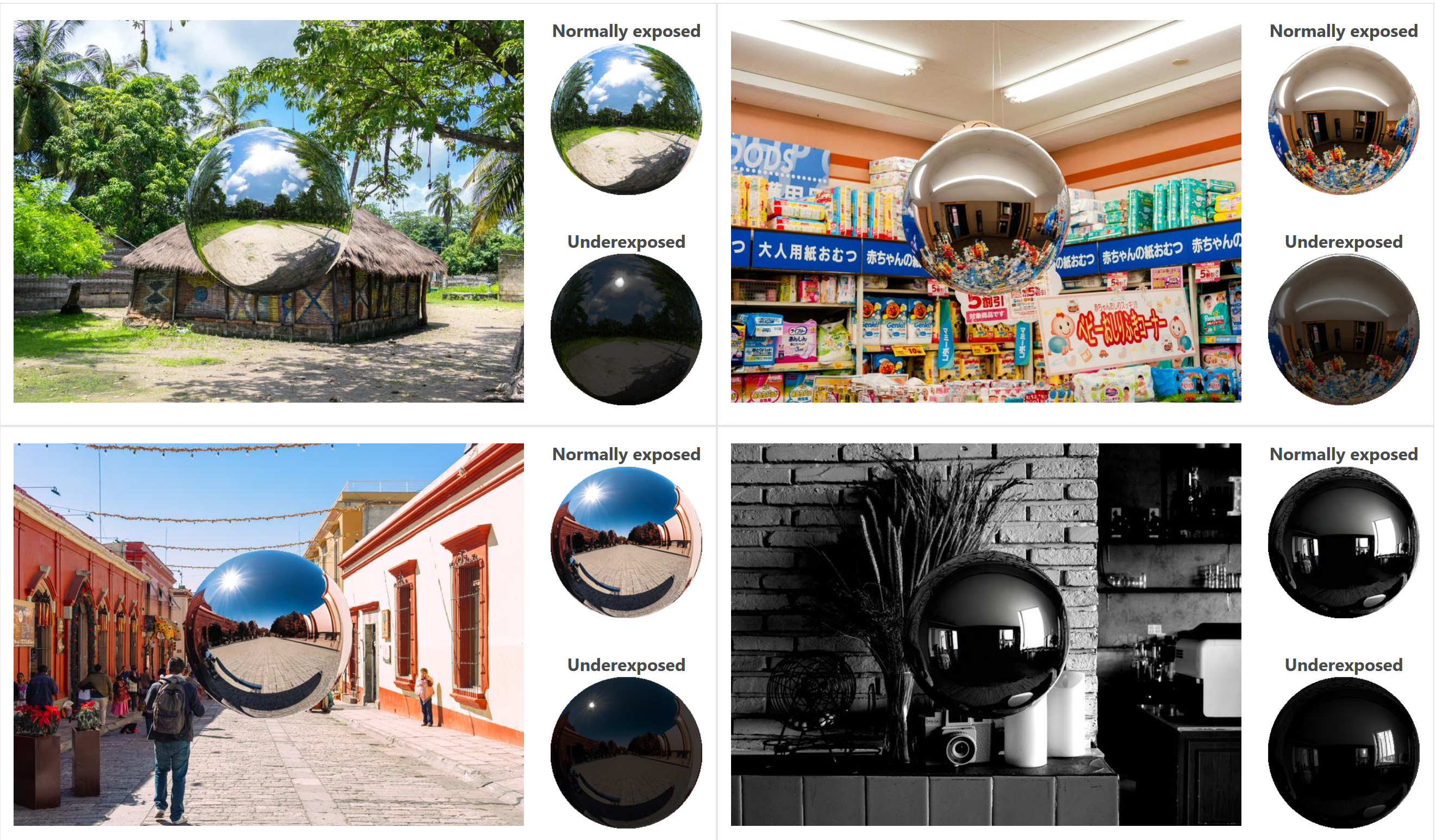

What type of lighting?

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

Read more: https://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR diffusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”
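The exposure bracketing mentioned in the abstract can be illustrated with a generic merge step. The sketch below is not DiffusionLight's code: it is a minimal, pure-Python example of how several LDR renders of the same chrome ball, each at a different exposure, might be combined into linear HDR values. The function names `merge_exposures` and `simulate_ldr`, the gamma of 2.4, the EV offsets, and the hat-shaped weighting are all assumptions chosen for illustration.

```python
def simulate_ldr(radiance, ev, gamma=2.4):
    """Hypothetical helper: expose linear radiance at `ev` stops,
    clip to the displayable range, and apply a simple display gamma."""
    out = []
    for r in radiance:
        exposed = min(max(r * (2.0 ** ev), 0.0), 1.0)  # clipping loses highlights
        out.append(exposed ** (1.0 / gamma))
    return out


def merge_exposures(brackets, evs, gamma=2.4, eps=1e-6):
    """Merge bracketed LDR pixel lists (values in [0, 1]) into linear HDR values.

    brackets: one list of pixel values per exposure, all the same length.
    evs:      exposure offset in stops for each bracket (0 = base exposure).
    """
    hdr = []
    for i in range(len(brackets[0])):
        num = 0.0
        den = 0.0
        for img, ev in zip(brackets, evs):
            v = img[i]
            linear = v ** gamma               # undo the assumed display gamma
            radiance = linear / (2.0 ** ev)   # rescale to the base exposure
            # Hat weight: trust mid-tones, nearly ignore clipped or black pixels.
            weight = 1.0 - abs(2.0 * v - 1.0) + eps
            num += weight * radiance
            den += weight
        hdr.append(num / den)
    return hdr


if __name__ == "__main__":
    scene = [0.05, 0.5, 4.0, 20.0]   # linear radiance, partly brighter than 1.0
    evs = [0.0, -2.5, -5.0]          # assumed bracket spacing
    brackets = [simulate_ldr(scene, ev) for ev in evs]
    print(merge_exposures(brackets, evs))
```

Darker brackets keep highlight detail that the base exposure clips, which is why the merged result can hold values above 1.0. A real pipeline would run the same arithmetic on image arrays rather than Python lists.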


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.