COMPOSITION

DESIGN

COLOR

LIGHTING

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental processhttps://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

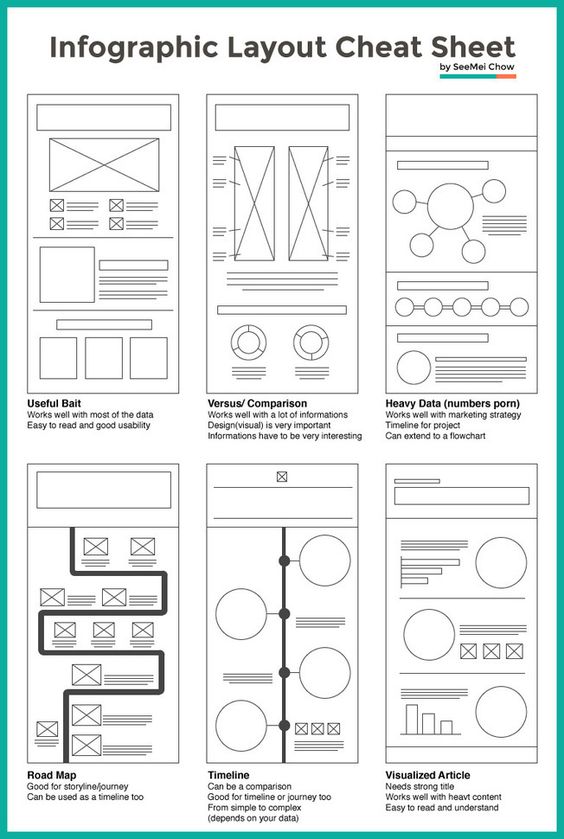

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

Sun cone angle (angular diameter) as perceived by earth viewers

Read more: Sun cone angle (angular diameter) as perceived by earth viewersAlso see:

https://www.pixelsham.com/2020/08/01/solid-angle-measures/

The cone angle of the sun refers to the angular diameter of the sun as observed from Earth, which is related to the apparent size of the sun in the sky.

The angular diameter of the sun, or the cone angle of the sunlight as perceived from Earth, is approximately 0.53 degrees on average. This value can vary slightly due to the elliptical nature of Earth’s orbit around the sun, but it generally stays within a narrow range.

Here’s a more precise breakdown:

-

- Average Angular Diameter: About 0.53 degrees (31 arcminutes)

- Minimum Angular Diameter: Approximately 0.52 degrees (when Earth is at aphelion, the farthest point from the sun)

- Maximum Angular Diameter: Approximately 0.54 degrees (when Earth is at perihelion, the closest point to the sun)

This angular diameter remains relatively constant throughout the day because the sun’s distance from Earth does not change significantly over a single day.

To summarize, the cone angle of the sun’s light, or its angular diameter, is typically around 0.53 degrees, regardless of the time of day.

https://en.wikipedia.org/wiki/Angular_diameter

-

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Web vs Printing or digital RGB vs CMYK

-

NVidia – High-Fidelity 3D Mesh Generation at Scale with Meshtron

-

Canva bought Affinity – Now Affinity Photo and Affinity Designer are… GONE?!

-

Eyeline Labs VChain – Chain-of-Visual-Thought for Reasoning in Video Generation for better AI physics

-

How do LLMs like ChatGPT (Generative Pre-Trained Transformer) work? Explained by Deep-Fake Ryan Gosling

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

-

4dv.ai – Remote Interactive 3D Holographic Presentation Technology and System running on the PlayCanvas engine

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.