COMPOSITION

-

Composition and The Expressive Nature Of Light

Read more: Composition and The Expressive Nature Of Lighthttp://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said “ The artist vocation is to send light into the human heart.”

DESIGN

COLOR

-

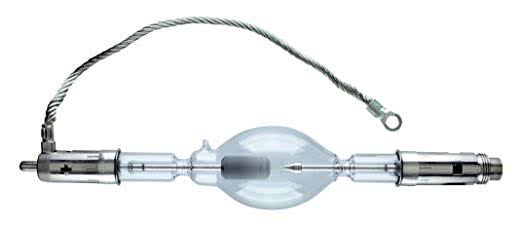

What light is best to illuminate gems for resale

Read more: What light is best to illuminate gems for resalewww.palagems.com/gem-lighting2

Artificial light sources, not unlike the diverse phases of natural light, vary considerably in their properties. As a result, some lamps render an object’s color better than others do.

The most important criterion for assessing the color-rendering ability of any lamp is its spectral power distribution curve.

Natural daylight varies too much in strength and spectral composition to be taken seriously as a lighting standard for grading and dealing colored stones. For anything to be a standard, it must be constant in its properties, which natural light is not.

For dealers in particular to make the transition from natural light to an artificial light source, that source must offer:

1- A degree of illuminance at least as strong as the common phases of natural daylight.

2- Spectral properties identical or comparable to a phase of natural daylight.A source combining these two things makes gems appear much the same as when viewed under a given phase of natural light. From the viewpoint of many dealers, this corresponds to a naturalappearance.

The 6000° Kelvin xenon short-arc lamp appears closest to meeting the criteria for a standard light source. Besides the strong illuminance this lamp affords, its spectrum is very similar to CIE standard illuminants of similar color temperature.

-

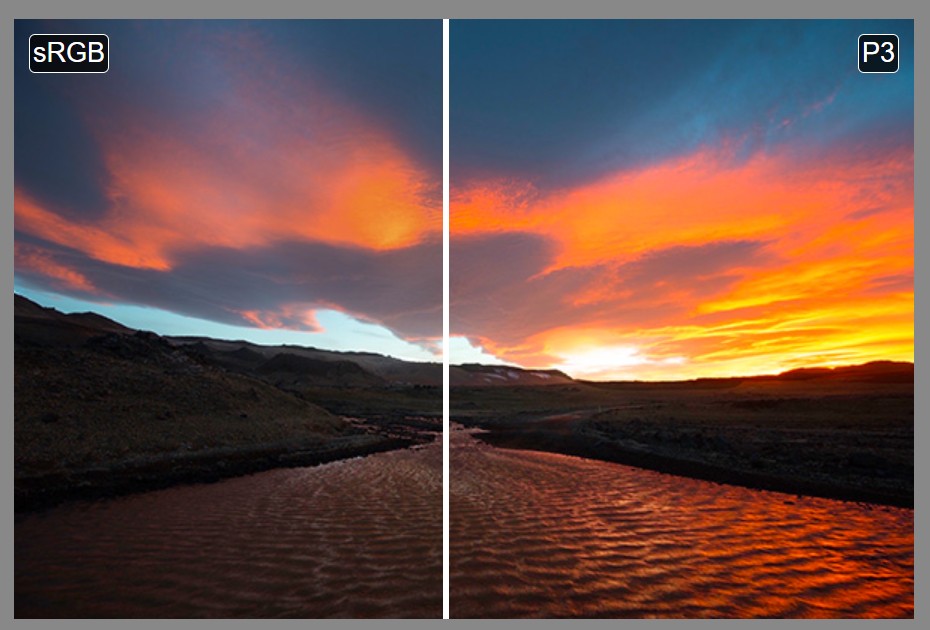

Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?

Read more: Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?https://karuna.dev/colormaxxing

https://webkit.org/blog-files/color-gamut/comparison.html

https://oklch.com/#70,0.1,197,100

-

Paul Debevec, Chloe LeGendre, Lukas Lepicovsky – Jointly Optimizing Color Rendition and In-Camera Backgrounds in an RGB Virtual Production Stage

Read more: Paul Debevec, Chloe LeGendre, Lukas Lepicovsky – Jointly Optimizing Color Rendition and In-Camera Backgrounds in an RGB Virtual Production Stagehttps://arxiv.org/pdf/2205.12403.pdf

RGB LEDs vs RGBWP (RGB + lime + phospor converted amber) LEDs

Local copy:

-

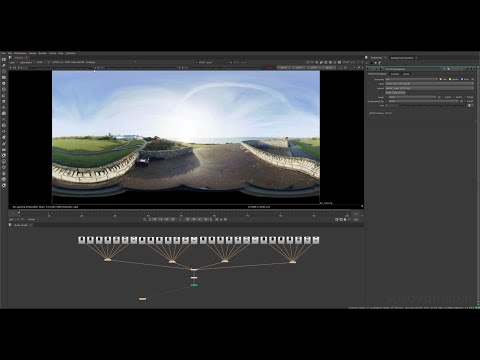

PTGui 13 beta adds control through a Patch Editor

Read more: PTGui 13 beta adds control through a Patch EditorAdditions:

- Patch Editor (PTGui Pro)

- DNG output

- Improved RAW / DNG handling

- JPEG 2000 support

- Performance improvements

-

Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through Projectors

Read more: Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through ProjectorsVideo Projection Tool Software

https://hcgilje.wordpress.com/vpt/https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

heavym

https://heavym.net/en/MadMapper

https://madmapper.com/

LIGHTING

-

Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRI

Read more: Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRIhttps://github.com/Sosoyan/make-hdr

Feature notes

- Merge up to 16 inputs with 8, 10 or 12 bit depth processing

- User friendly logarithmic Tone Mapping controls within the tool

- Advanced controls such as Sampling rate and Smoothness

Available at cross platform on Linux, MacOS and Windows Works consistent in compositing applications like Nuke, Fusion, Natron.

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

-

Custom bokeh in a raytraced DOF render

Read more: Custom bokeh in a raytraced DOF renderTo achieve a custom pinhole camera effect with a custom bokeh in Arnold Raytracer, you can follow these steps:

- Set the render camera with a focal length around 50 (or as needed)

- Set the F-Stop to a high value (e.g., 22).

- Set the focus distance as you require

- Turn on DOF

- Place a plane a few cm in front of the camera.

- Texture the plane with a transparent shape at the center of it. (Transmission with no specular roughness)

-

Capturing the world in HDR for real time projects – Call of Duty: Advanced Warfare

Read more: Capturing the world in HDR for real time projects – Call of Duty: Advanced WarfareReal-World Measurements for Call of Duty: Advanced Warfare

www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Local version

Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

-

Neural Microfacet Fields for Inverse Rendering

Read more: Neural Microfacet Fields for Inverse Renderinghttps://half-potato.gitlab.io/posts/nmf/

-

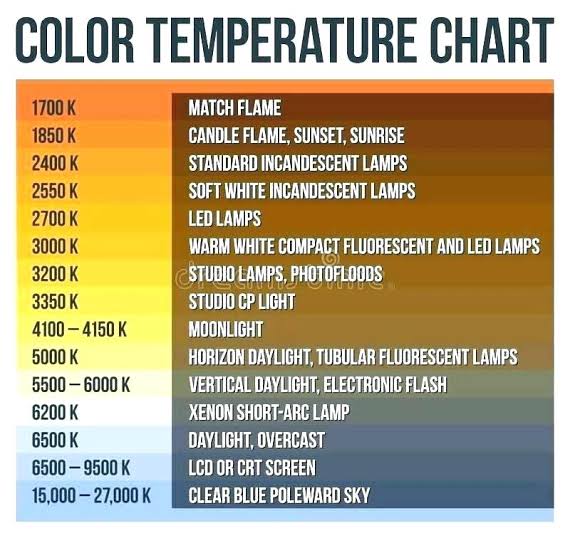

Photography basics: Color Temperature and White Balance

Read more: Photography basics: Color Temperature and White BalanceColor Temperature of a light source describes the spectrum of light which is radiated from a theoretical “blackbody” (an ideal physical body that absorbs all radiation and incident light – neither reflecting it nor allowing it to pass through) with a given surface temperature.

https://en.wikipedia.org/wiki/Color_temperature

Or. Most simply it is a method of describing the color characteristics of light through a numerical value that corresponds to the color emitted by a light source, measured in degrees of Kelvin (K) on a scale from 1,000 to 10,000.

More accurately. The color temperature of a light source is the temperature of an ideal backbody that radiates light of comparable hue to that of the light source.

(more…) -

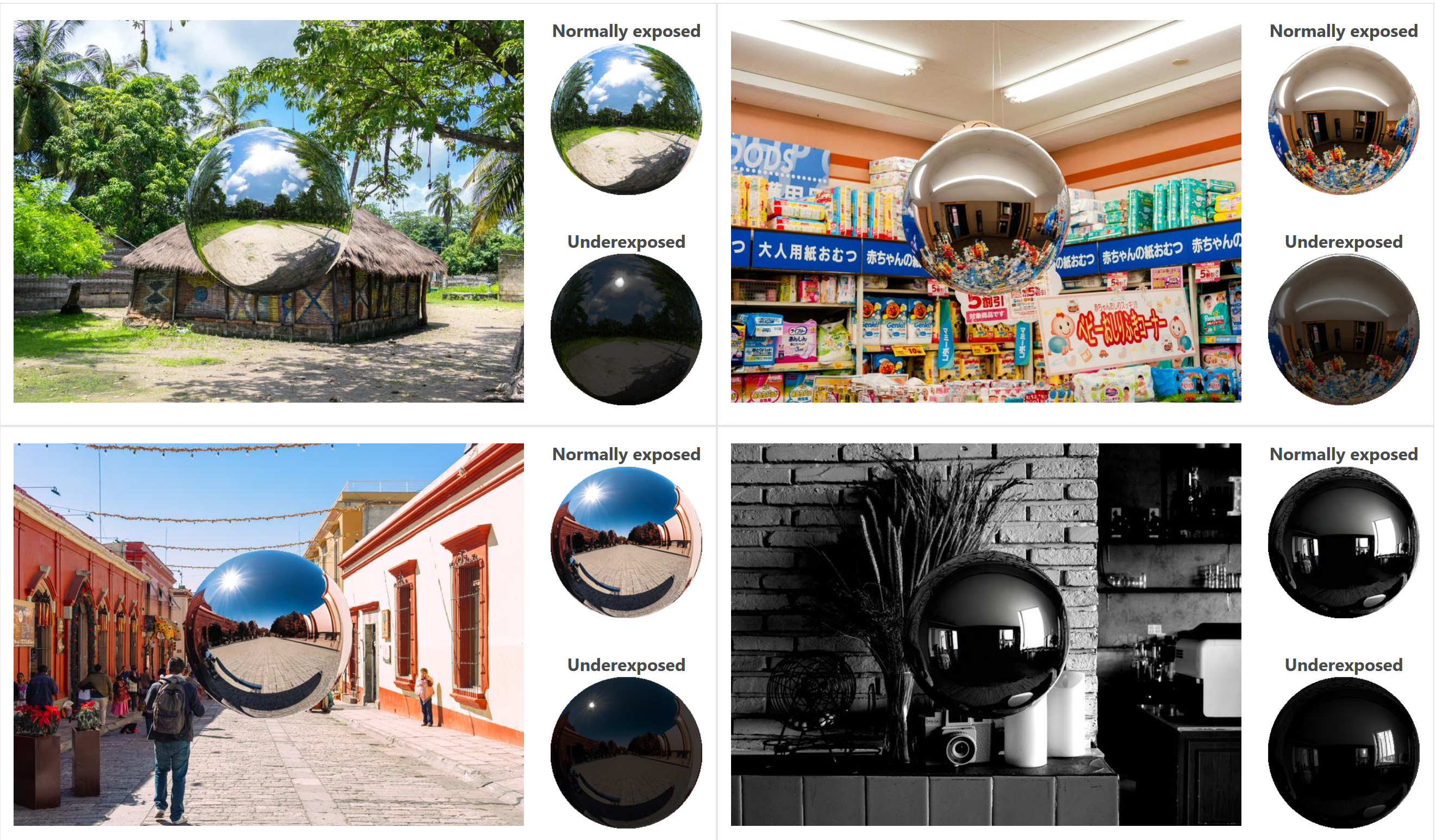

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

Read more: DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ballhttps://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR difusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. “

Use CRI, Luminous Efficacy and color temperature controls to match your needs.Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. “https://www.studiobinder.com/blog/what-is-color-rendering-index

(more…)

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

-

Google – Artificial Intelligence free courses

-

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

-

How does Stable Diffusion work?

-

Convert 2D Images or Text to 3D Models

-

Jesse Zumstein – Jobs in games

-

MiniMax-Remover – Taming Bad Noise Helps Video Object Removal Rotoscoping

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.