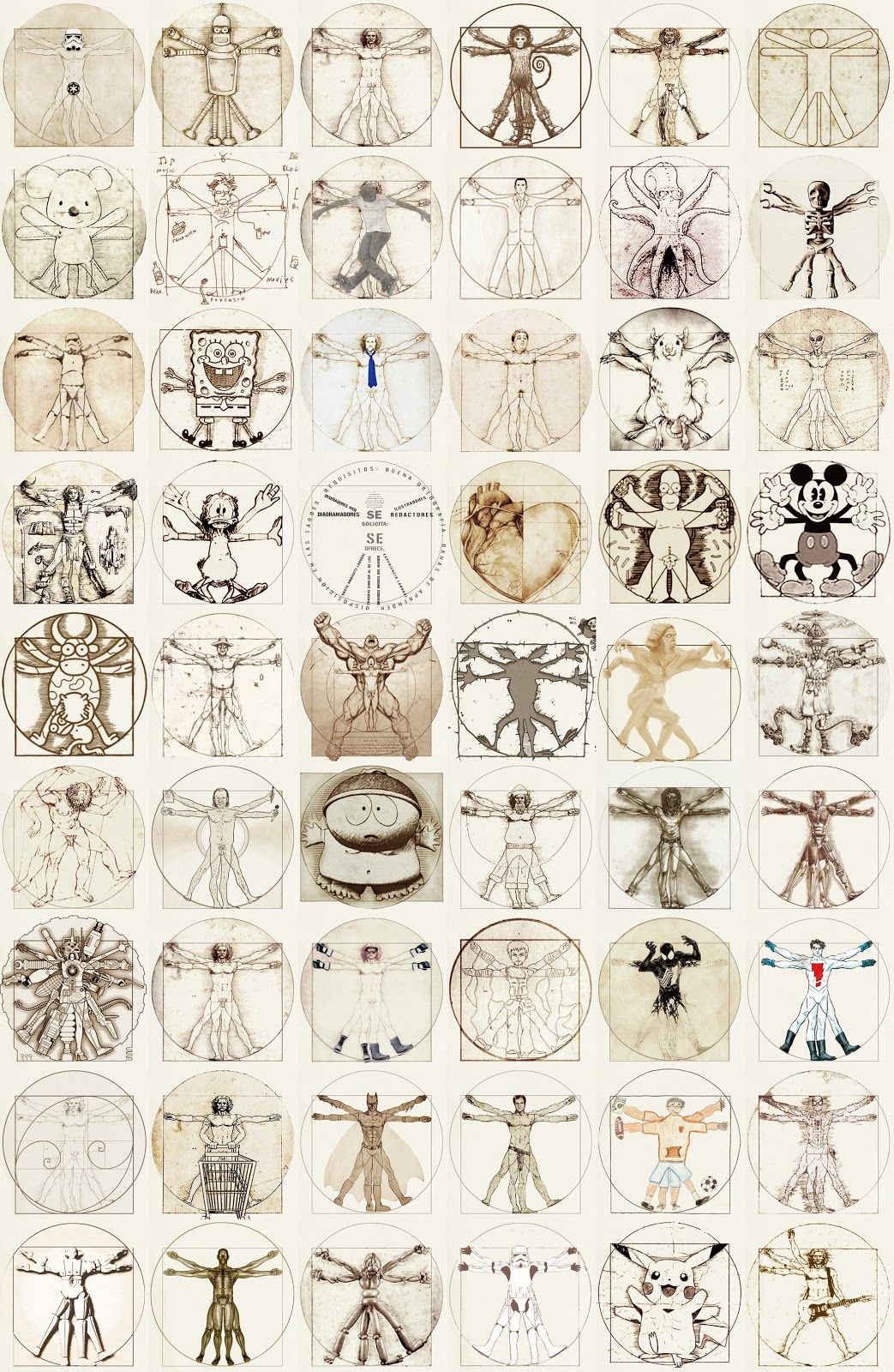

COMPOSITION

-

HuggingFace ai-comic-factory – a FREE AI Comic Book Creator

Read more: HuggingFace ai-comic-factory – a FREE AI Comic Book Creatorhttps://huggingface.co/spaces/jbilcke-hf/ai-comic-factory

this is the epic story of a group of talented digital artists trying to overcame daily technical challenges to achieve incredibly photorealistic projects of monsters and aliens

DESIGN

-

Mike Wong – AtoMeow – A Blue noise image stippling in Processing

Read more: Mike Wong – AtoMeow – A Blue noise image stippling in Processing

https://github.com/mwkm/atoMeow

https://www.shadertoy.com/view/7s3XzX

This demo is created for coders who are familiar with this awesome creative coding platform. You may quickly modify the code to work for video or to stipple your own Procssing drawings by turning them into

PImageand run the simulation. This demo code also serves as a reference implementation of my article Blue noise sampling using an N-body simulation-based method. If you are interested in 2.5D, you may mod the code to achieve what I discussed in this artist friendly article.Convert your video to a dotted noise.

-

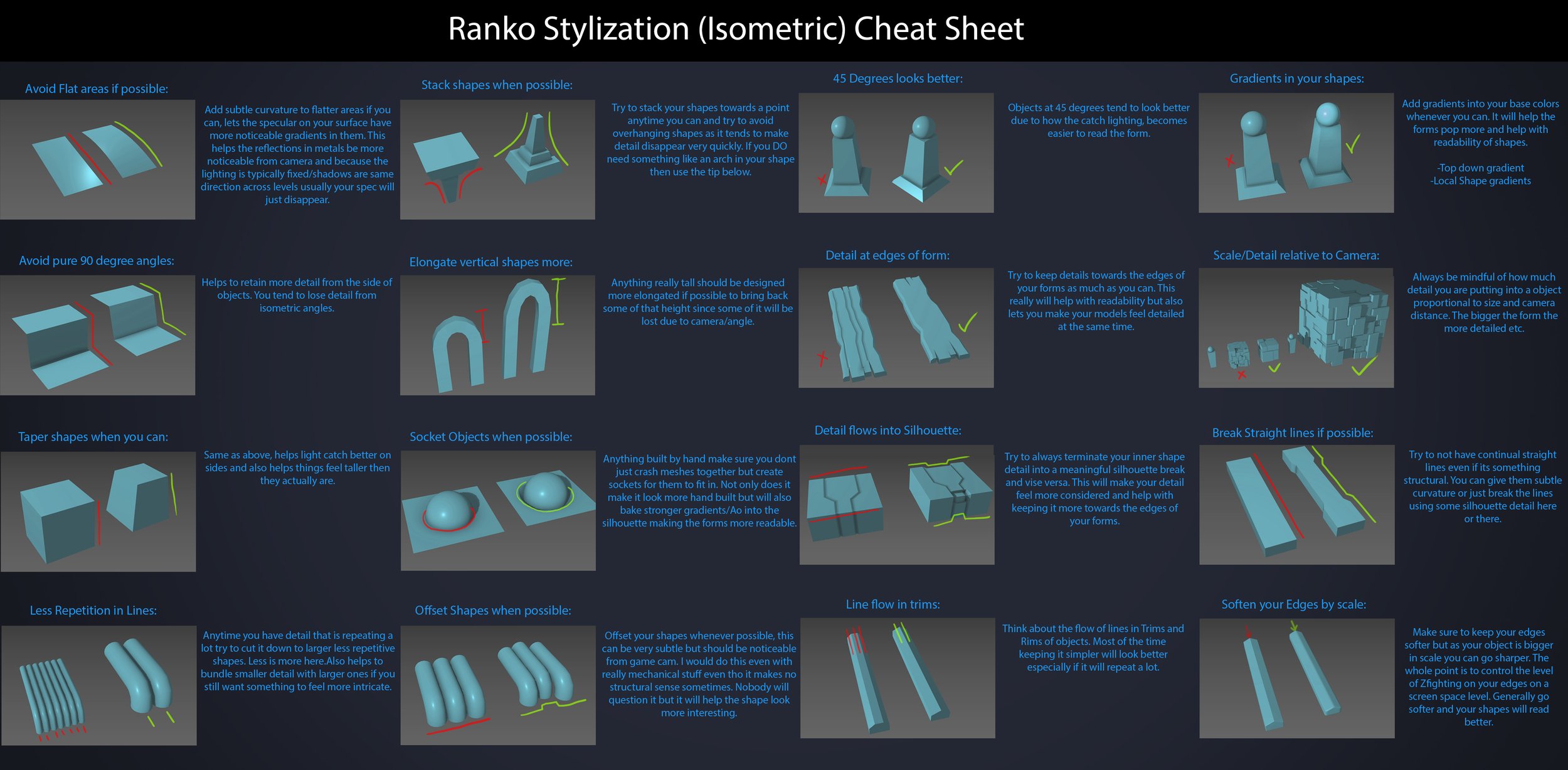

Ranko Prozo – Modelling design tips

Read more: Ranko Prozo – Modelling design tipsEvery Project I work on I always create a stylization Cheat sheet. Every project is unique but some principles carry over no matter what. This is a sheet I use a lot when I work on isometric stylized projects to help keep my assets consistent and interesting. None of these concepts are my own, just lots of tips I learned over the years. I have also added this to a page on my website, will continue to update with more tips and tricks, just need time to compile it all :)

COLOR

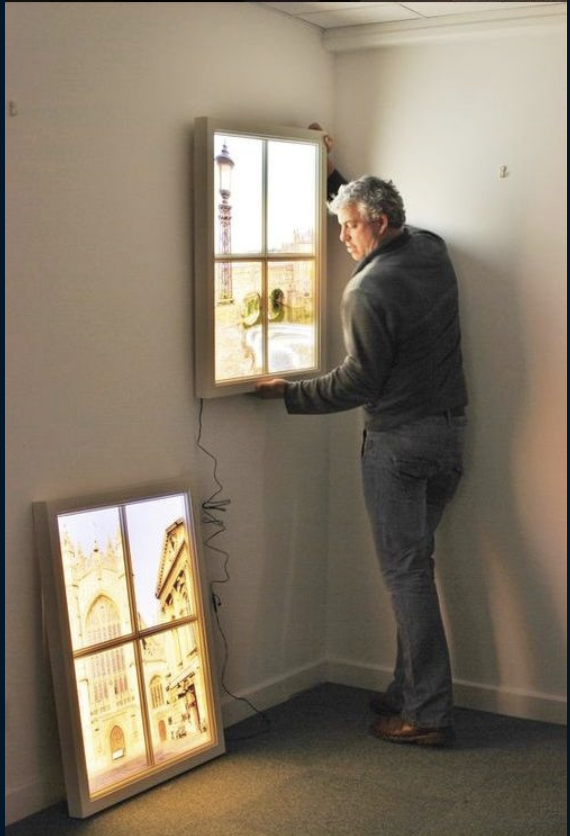

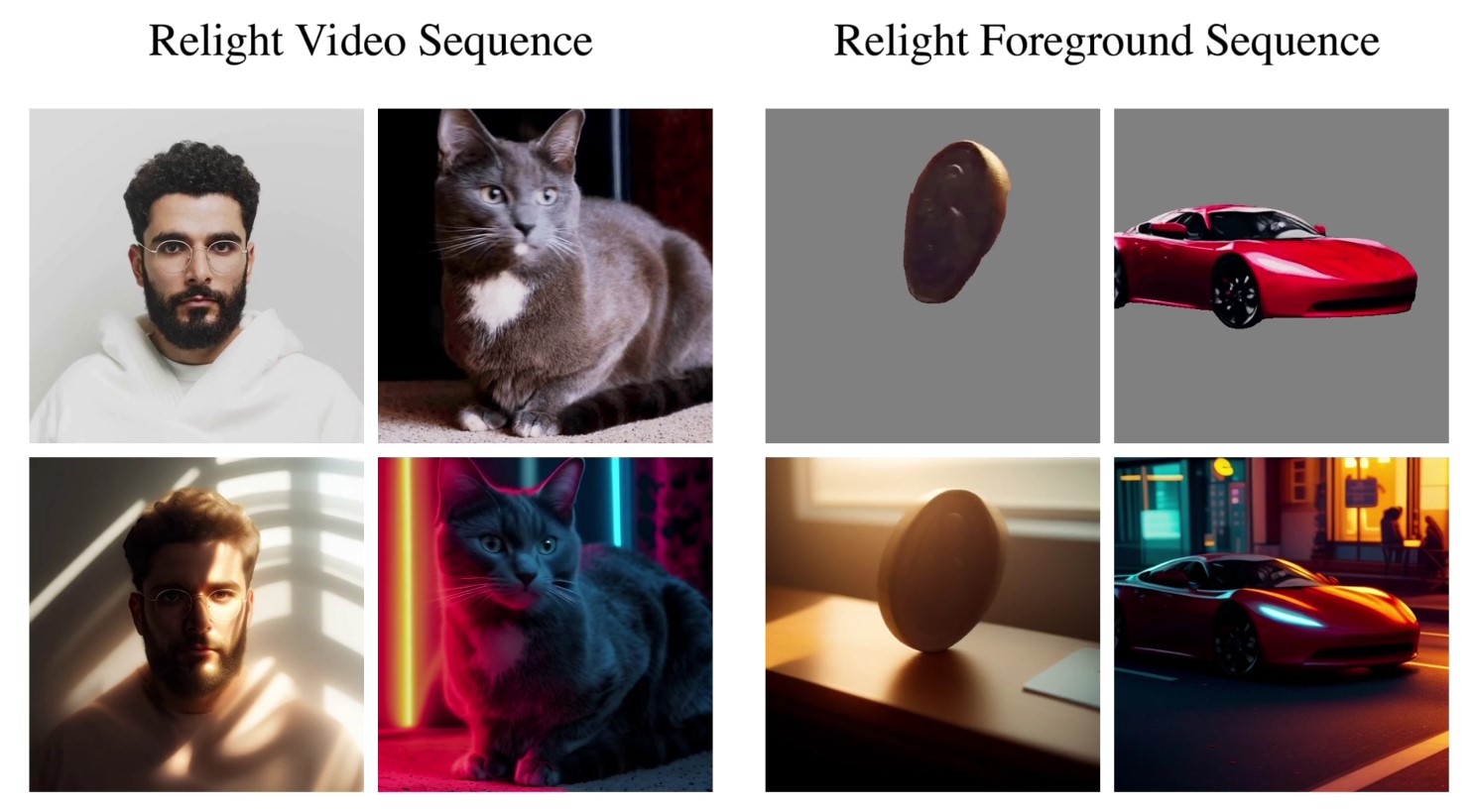

LIGHTING

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. “

Use CRI, Luminous Efficacy and color temperature controls to match your needs.Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. “https://www.studiobinder.com/blog/what-is-color-rendering-index

(more…)

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

ComfyDock – The Easiest (Free) Way to Safely Run ComfyUI Sessions in a Boxed Container

-

Steven Stahlberg – Perception and Composition

-

Eyeline Labs VChain – Chain-of-Visual-Thought for Reasoning in Video Generation for better AI physics

-

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent (and artists)

-

Sensitivity of human eye

-

Kling 1.6 and competitors – advanced tests and comparisons

-

What Is The Resolution and view coverage Of The human Eye. And what distance is TV at best?

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.