COMPOSITION

-

9 Best Hacks to Make a Cinematic Video with Any Camera

Read more: https://www.flexclip.com/learn/cinematic-video.html

- Frame Your Shots to Create Depth

- Create Shallow Depth of Field

- Avoid Shaky Footage and Use Flexible Camera Movements

- Properly Use Slow Motion

- Use Cinematic Lighting Techniques

- Apply Color Grading

- Use Cinematic Music and SFX

- Add Cinematic Fonts and Text Effects

- Create the Cinematic Bar at the Top and the Bottom
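For the last tip, the size of the bars follows from simple arithmetic. The sketch below is only an illustration: the function name `letterbox_bar_height` and the default target aspect ratio of 2.39:1 are assumptions (the linked article may suggest a different ratio).

```python
def letterbox_bar_height(frame_width: int, frame_height: int,
                         target_aspect: float = 2.39) -> int:
    """Return the height in pixels of EACH black bar (top and bottom).

    target_aspect is an assumed widescreen ratio (width / height).
    """
    # Height of the picture area that stays visible between the bars.
    visible_height = round(frame_width / target_aspect)
    if visible_height >= frame_height:
        # The frame is already as wide as (or wider than) the target.
        return 0
    # Split the leftover rows evenly between top and bottom.
    return (frame_height - visible_height) // 2


if __name__ == "__main__":
    print(letterbox_bar_height(1920, 1080))  # bar height for a 1080p frame
```

Under these assumptions, a 1920 x 1080 frame gets a bar of 138 pixels at the top and another at the bottom.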

DESIGN

COLOR

LIGHTING

-

IES Light Profiles and editing software

Read more: http://www.derekjenson.com/3d-blog/ies-light-profiles

https://ieslibrary.com/en/browse#ies

https://leomoon.com/store/shaders/ies-lights-pack

https://docs.arnoldrenderer.com/display/a5afmug/ai+photometric+light

IES profiles are useful for creating life-like lighting, as they can represent the physical distribution of light from any light source.

The IES format was created by the Illuminating Engineering Society, and most lighting manufacturers provide IES profiles for the lights they manufacture.
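To make "physical distribution of light" concrete, here is a minimal sketch of reading the angle and candela tables from an IES (LM-63 style) text file. It assumes the common layout: keyword lines, a `TILT=NONE` line, ten numbers, three numbers, then vertical angles, horizontal angles, and one row of candela values per horizontal angle. The `parse_ies` function and the sample data are hypothetical, and real files can differ (for example `TILT=INCLUDE`), so treat this as an illustration rather than a complete parser.

```python
def parse_ies(text: str) -> dict:
    """Parse the photometric tables of a simple IES file (TILT=NONE only)."""
    lines = text.splitlines()
    # Numeric data starts on the line after the TILT declaration.
    tilt_index = next(i for i, line in enumerate(lines)
                      if line.strip().upper().startswith("TILT="))
    if lines[tilt_index].strip().upper() != "TILT=NONE":
        raise ValueError("this sketch only handles TILT=NONE")

    tokens = " ".join(lines[tilt_index + 1:]).replace(",", " ").split()
    values = [float(token) for token in tokens]

    multiplier = values[2]          # candela multiplier
    n_vertical = int(values[3])     # number of vertical angles
    n_horizontal = int(values[4])   # number of horizontal angles

    pos = 13  # skip the 10 + 3 header numbers (assumed layout)
    vertical = values[pos:pos + n_vertical]
    pos += n_vertical
    horizontal = values[pos:pos + n_horizontal]
    pos += n_horizontal

    # One row of candela values per horizontal angle.
    candela = []
    for _ in range(n_horizontal):
        candela.append([v * multiplier for v in values[pos:pos + n_vertical]])
        pos += n_vertical

    return {"vertical_angles": vertical,
            "horizontal_angles": horizontal,
            "candela": candela}


# Hypothetical, hand-written sample (not a real luminaire).
SAMPLE = """IESNA:LM-63-2002
[TEST] hypothetical sample
TILT=NONE
1 1000 1 3 1 1 2 0 0 0
1 1 10
0 45 90
0
100 80 10
"""

profile = parse_ies(SAMPLE)
print(profile["candela"])
```

Each candela row describes how intensity falls off across the vertical angles for one horizontal plane, which is the distribution a renderer samples when it applies the profile to a light.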

-

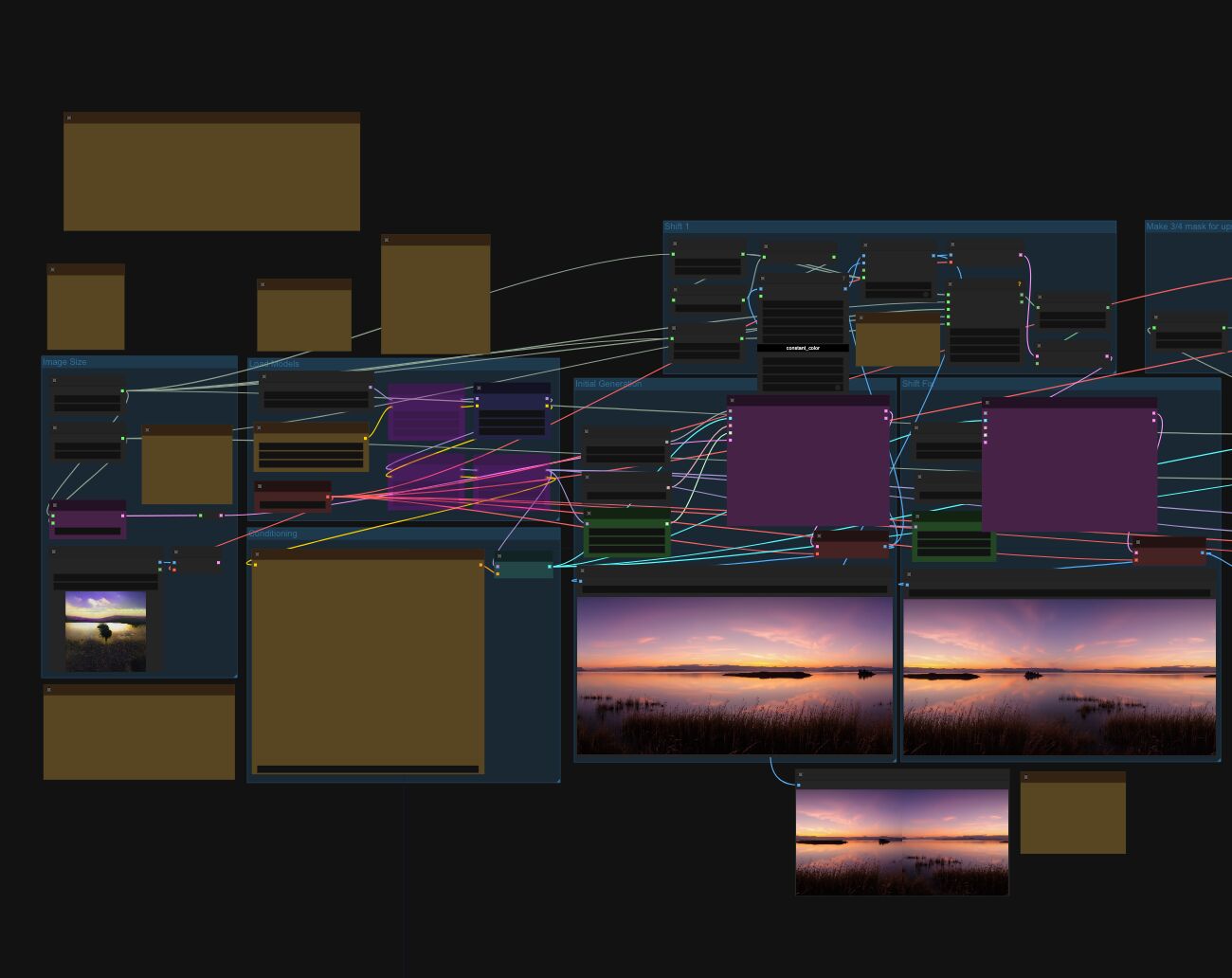

Arto T. – A workflow for creating photorealistic, equirectangular 360° panoramas in ComfyUI using Flux

Read more: https://civitai.com/models/735980/flux-equirectangular-360-panorama

https://civitai.com/models/745010?modelVersionId=833115

The trigger phrase is “equirectangular 360 degree panorama”. I would avoid saying “spherical projection” since that tends to result in non-equirectangular spherical images.

Image resolution should always have a 2:1 aspect ratio. 1024 x 512 and 1408 x 704 work quite well and were used in the training data. 2048 x 1024 also works.
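A quick way to avoid accidental non-2:1 sizes is to derive the height from the width. This is a small sketch; the helper names `equirect_size` and `is_equirect` are hypothetical and not part of the workflow itself.

```python
def equirect_size(width: int) -> tuple[int, int]:
    """Return (width, height) with an exact 2:1 aspect ratio."""
    if width <= 0 or width % 2 != 0:
        raise ValueError("width must be a positive even number")
    return width, width // 2


def is_equirect(width: int, height: int) -> bool:
    """Check whether a resolution has the 2:1 ratio of an equirectangular image."""
    return height > 0 and width == 2 * height


if __name__ == "__main__":
    # The three resolutions mentioned in the text.
    for w in (1024, 1408, 2048):
        print(equirect_size(w))
```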

I suggest using a weight of 0.5 – 1.5. If the image comes out too flat, without the necessary spherical distortion, try increasing the weight above 1, though this could negatively impact small details of the image. For Flux guidance, I recommend a value of about 2.5 for realistic scenes.

Output is 8-bit only at the moment.

-

HDRI Resources

Text2Light

- https://www.cgtrader.com/free-3d-models/exterior/other/10-free-hdr-panoramas-created-with-text2light-zero-shot

- https://frozenburning.github.io/projects/text2light/

- https://github.com/FrozenBurning/Text2Light

Royalty free links

- https://locationtextures.com/panoramas/

- http://www.noahwitchell.com/freebies

- https://polyhaven.com/hdris

- https://hdrmaps.com/

- https://www.ihdri.com/

- https://hdrihaven.com/

- https://www.domeble.com/

- http://www.hdrlabs.com/sibl/archive.html

- https://www.hdri-hub.com/hdrishop/hdri

- http://noemotionhdrs.net/hdrevening.html

- https://www.openfootage.net/hdri-panorama/

- https://www.zwischendrin.com/en/browse/hdri

Nvidia GauGAN360

