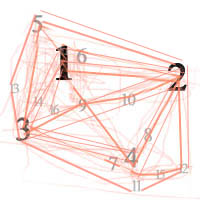

COMPOSITION

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. “

Use CRI, Luminous Efficacy and color temperature controls to match your needs.Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. “https://www.studiobinder.com/blog/what-is-color-rendering-index

(more…) -

Mastering Camera Shots and Angles: A Guide for Filmmakers

Read more: Mastering Camera Shots and Angles: A Guide for Filmmakershttps://website.ltx.studio/blog/mastering-camera-shots-and-angles

1. Extreme Wide Shot

2. Wide Shot

3. Medium Shot

4. Close Up

5. Extreme Close Up

-

StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniques

Read more: StudioBinder – Roger Deakins on How to Choose a Camera Lens — Cinematography Composition Techniqueshttps://www.studiobinder.com/blog/camera-lens-buying-guide/

https://www.studiobinder.com/blog/e-books/camera-lenses-explained-volume-1-ebook

DESIGN

-

A.I. Algorithm art fetches US$432,500 at Christie auction

Read more: A.I. Algorithm art fetches US$432,500 at Christie auctionwww.ctvnews.ca/entertainment/algorithm-art-fetches-us-432-500-at-christie-s-auction-1.4150620

www.christies.com/features/A-collaboration-between-two-artists-one-human-one-a-machine-9332-1.aspx

-

Myriam Catrin – amazing design

Read more: Myriam Catrin – amazing designhttps://www.artstation.com/myriamcatrin

Creator of the comic book ” Passages. Book I” released with @therealarttitude

https://arttitudebootleg.bigcartel.com/product/passages-myriam-catrin

instagram/ FB page: @myriamcatrin / @MyriamCatrinComics

COLOR

-

FXGuide – ACES 2.0 with ILM’s Alex Fry

Read more: FXGuide – ACES 2.0 with ILM’s Alex Fryhttps://draftdocs.acescentral.com/background/whats-new/

ACES 2.0 is the second major release of the components that make up the ACES system. The most significant change is a new suite of rendering transforms whose design was informed by collected feedback and requests from users of ACES 1. The changes aim to improve the appearance of perceived artifacts and to complete previously unfinished components of the system, resulting in a more complete, robust, and consistent product.

Highlights of the key changes in ACES 2.0 are as follows:

- New output transforms, including:

- A less aggressive tone scale

- More intuitive controls to create custom outputs to non-standard displays

- Robust gamut mapping to improve perceptual uniformity

- Improved performance of the inverse transforms

- Enhanced AMF specification

- An updated specification for ACES Transform IDs

- OpenEXR compression recommendations

- Enhanced tools for generating Input Transforms and recommended procedures for characterizing prosumer cameras

- Look Transform Library

- Expanded documentation

Rendering Transform

The most substantial change in ACES 2.0 is a complete redesign of the rendering transform.

ACES 2.0 was built as a unified system, rather than through piecemeal additions. Different deliverable outputs “match” better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts “out of the box”, which means less time can be spent fixing problematic images and more time making pictures look the way you want.

Key design goals

- Improve consistency of tone scale and provide an easy to use parameter to allow for outputs between preset dynamic ranges

- Minimize hue skews across exposure range in a region of same hue

- Unify for structural consistency across transform type

- Easy to use parameters to create outputs other than the presets

- Robust gamut mapping to improve harsh clipping artifacts

- Fill extents of output code value cube (where appropriate and expected)

- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible

- Accomplish all of the above while maintaining an acceptable “out-of-the box” rendering

- New output transforms, including:

-

About color: What is a LUT

Read more: About color: What is a LUThttp://www.lightillusion.com/luts.html

https://www.shutterstock.com/blog/how-use-luts-color-grading

A LUT (Lookup Table) is essentially the modifier between two images, the original image and the displayed image, based on a mathematical formula. Basically conversion matrices of different complexities. There are different types of LUTS – viewing, transform, calibration, 1D and 3D.

-

Scene Referred vs Display Referred color workflows

Read more: Scene Referred vs Display Referred color workflowsDisplay Referred it is tied to the target hardware, as such it bakes color requirements into every type of media output request.

Scene Referred uses a common unified wide gamut and targeting audience through CDL and DI libraries instead.

So that color information stays untouched and only “transformed” as/when needed.Sources:

– Victor Perez – Color Management Fundamentals & ACES Workflows in Nuke

– https://z-fx.nl/ColorspACES.pdf

– Wicus

LIGHTING

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

Read more: Composition – 5 tips for creating perfect cinematic lighting and making your work look stunninghttp://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

-

Magnific.ai Relight – change the entire lighting of a scene

Read more: Magnific.ai Relight – change the entire lighting of a sceneIt’s a new Magnific spell that allows you to change the entire lighting of a scene and, optionally, the background with just:

1/ A prompt OR

2/ A reference image OR

3/ A light map (drawing your own lights)https://x.com/javilopen/status/1805274155065176489

-

What is the Light Field?

Read more: What is the Light Field?http://lightfield-forum.com/what-is-the-lightfield/

The light field consists of the total of all light rays in 3D space, flowing through every point and in every direction.

How to Record a Light Field

- a single, robotically controlled camera

- a rotating arc of cameras

- an array of cameras or camera modules

- a single camera or camera lens fitted with a microlens array

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Black Body color aka the Planckian Locus curve for white point eye perception

-

Guide to Prompt Engineering

-

Black Forest Labs released FLUX.1 Kontext

-

PixelSham – Introduction to Python 2022

-

NVidia – High-Fidelity 3D Mesh Generation at Scale with Meshtron

-

Alejandro Villabón and Rafał Kaniewski – Recover Highlights With 8-Bit to High Dynamic Range Half Float Copycat – Nuke

-

Photography basics: Color Temperature and White Balance

-

Generative AI Glossary / AI Dictionary / AI Terminology

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.