COMPOSITION

-

Mastering Camera Shots and Angles: A Guide for Filmmakers

Read more: Mastering Camera Shots and Angles: A Guide for Filmmakershttps://website.ltx.studio/blog/mastering-camera-shots-and-angles

1. Extreme Wide Shot

2. Wide Shot

3. Medium Shot

4. Close Up

5. Extreme Close Up

-

Composition and The Expressive Nature Of Light

Read more: Composition and The Expressive Nature Of Lighthttp://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said “ The artist vocation is to send light into the human heart.”

DESIGN

-

Create striked-out text

Read more: Create striked-out texthttp://fsymbols.com/generators/strikethrough/

s̶t̶r̶i̶k̶e̶ ̶i̶t̶ ̶l̶i̶k̶̶e̶ ̶i̶t̶s̶ ̶h̶o̶t

-

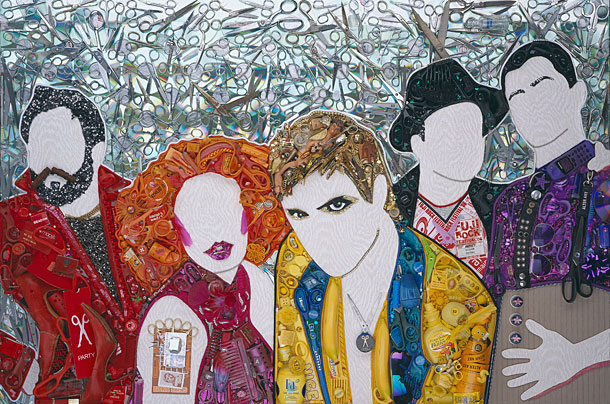

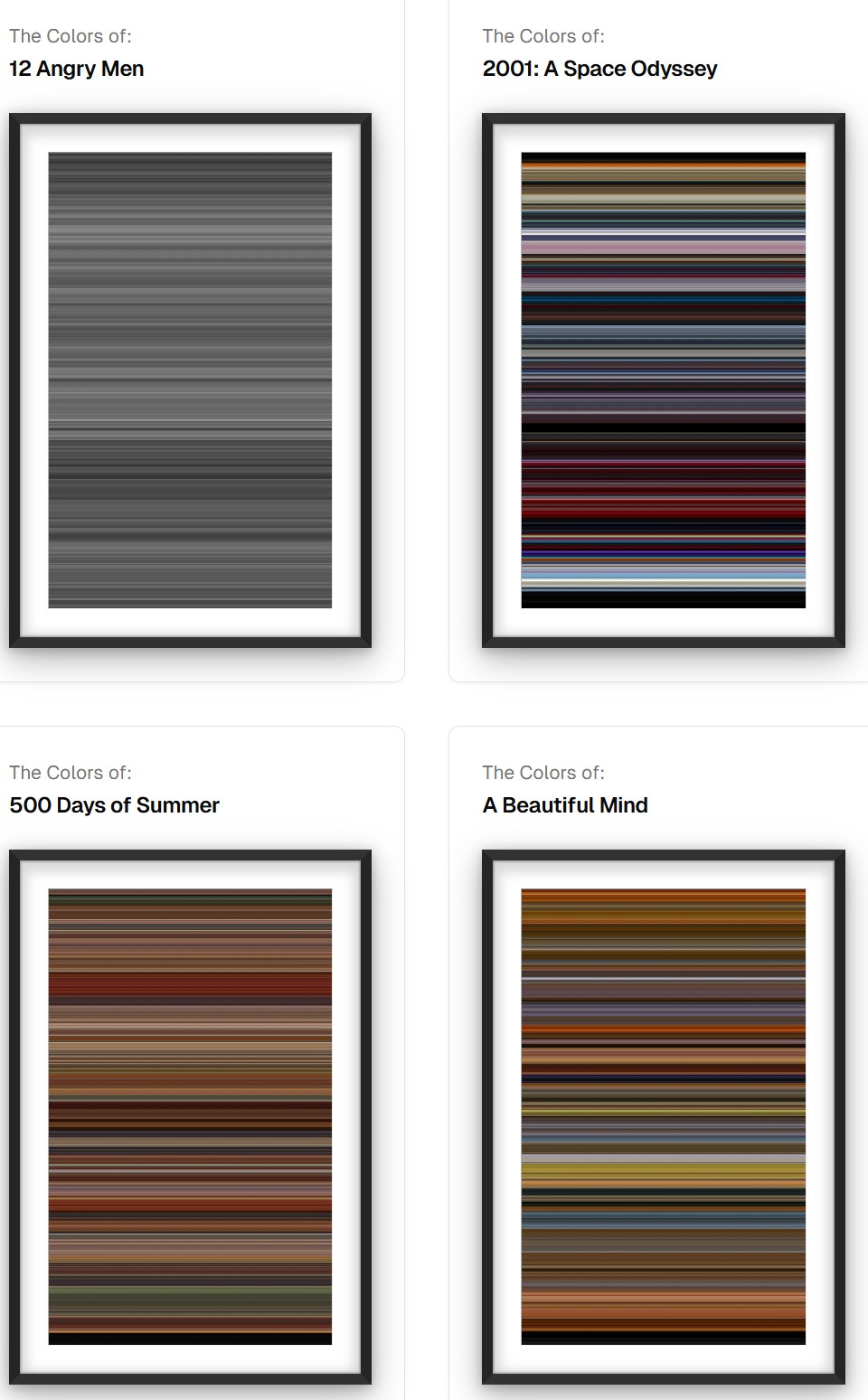

Creative duo Joseph Lattimer and Caitlin Derer Creates Absolutely Amazing The Beatles Collectable Toys

Read more: Creative duo Joseph Lattimer and Caitlin Derer Creates Absolutely Amazing The Beatles Collectable Toyshttps://designyoutrust.com/2024/11/artist-duo-creates-absolutely-amazing-the-beatles-collectable-toys

COLOR

-

THOMAS MANSENCAL – The Apparent Simplicity of RGB Rendering

Read more: THOMAS MANSENCAL – The Apparent Simplicity of RGB Renderinghttps://thomasmansencal.substack.com/p/the-apparent-simplicity-of-rgb-rendering

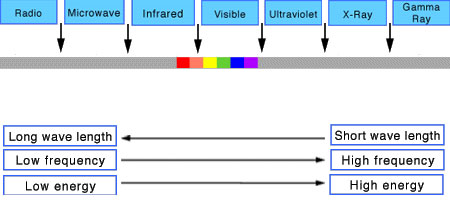

The primary goal of physically-based rendering (PBR) is to create a simulation that accurately reproduces the imaging process of electro-magnetic spectrum radiation incident to an observer. This simulation should be indistinguishable from reality for a similar observer.

Because a camera is not sensitive to incident light the same way than a human observer, the images it captures are transformed to be colorimetric. A project might require infrared imaging simulation, a portion of the electro-magnetic spectrum that is invisible to us. Radically different observers might image the same scene but the act of observing does not change the intrinsic properties of the objects being imaged. Consequently, the physical modelling of the virtual scene should be independent of the observer.

-

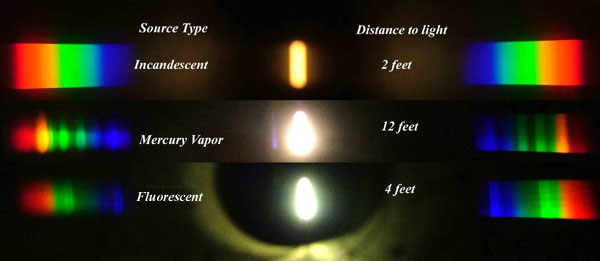

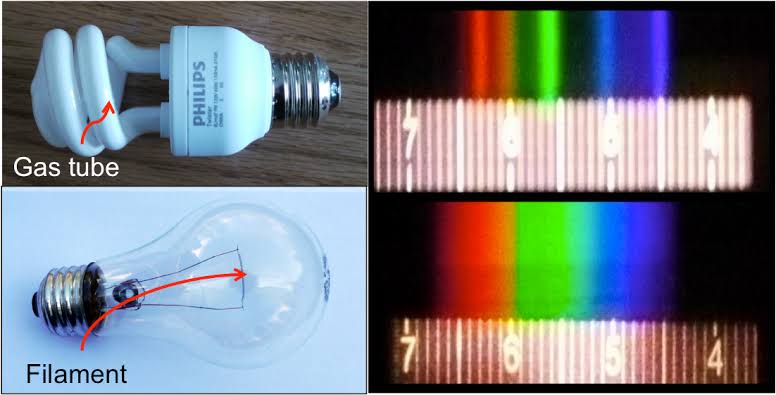

StudioBinder.com – CRI color rendering index

Read more: StudioBinder.com – CRI color rendering indexwww.studiobinder.com/blog/what-is-color-rendering-index

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency

-

Photography basics: Color Temperature and White Balance

Read more: Photography basics: Color Temperature and White BalanceColor Temperature of a light source describes the spectrum of light which is radiated from a theoretical “blackbody” (an ideal physical body that absorbs all radiation and incident light – neither reflecting it nor allowing it to pass through) with a given surface temperature.

https://en.wikipedia.org/wiki/Color_temperature

Or. Most simply it is a method of describing the color characteristics of light through a numerical value that corresponds to the color emitted by a light source, measured in degrees of Kelvin (K) on a scale from 1,000 to 10,000.

More accurately. The color temperature of a light source is the temperature of an ideal backbody that radiates light of comparable hue to that of the light source.

(more…) -

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

Read more: RawTherapee – a free, open source, cross-platform raw image and HDRi processing program5.10 of this tool includes excellent tools to clean up cr2 and cr3 used on set to support HDRI processing.

Converting raw to AcesCG 32 bit tiffs with metadata. -

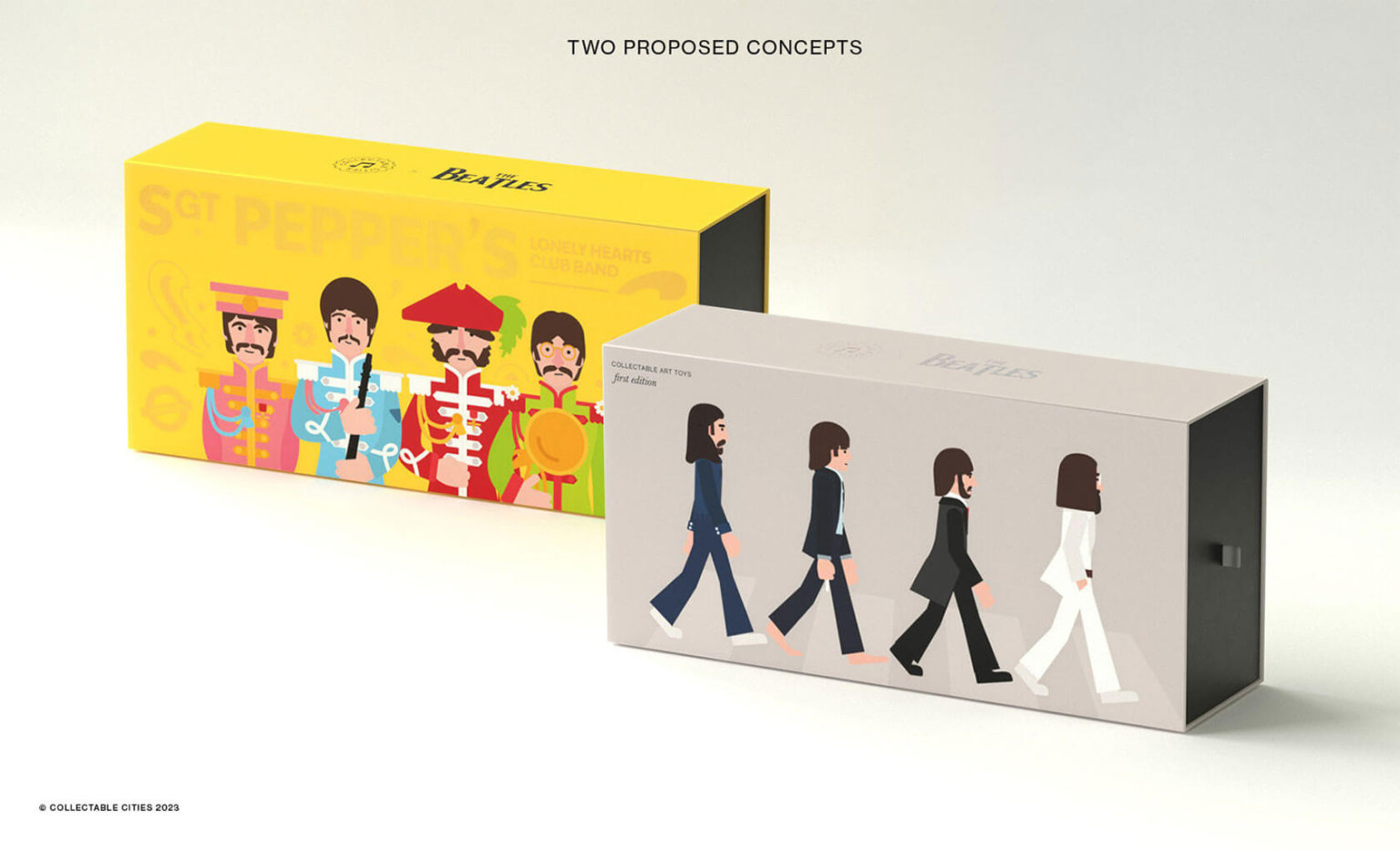

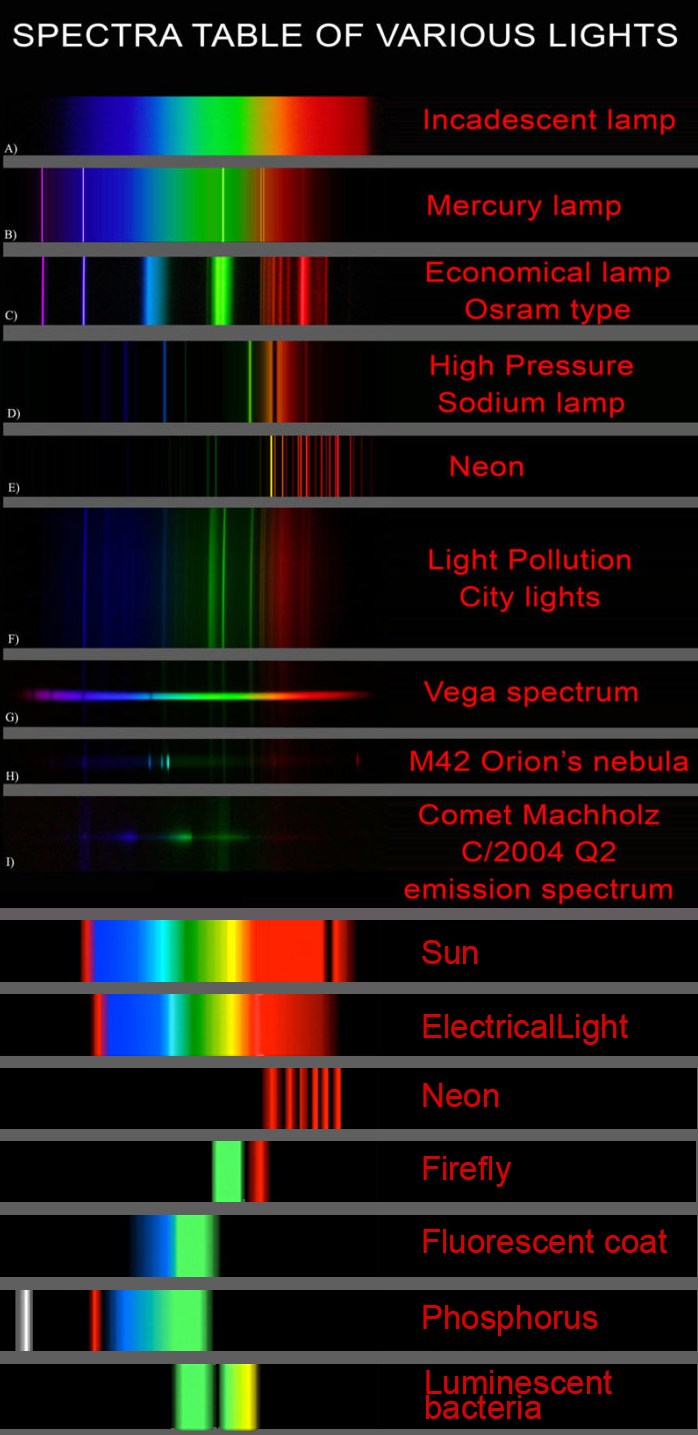

Space bodies’ components and light spectroscopy

Read more: Space bodies’ components and light spectroscopywww.plutorules.com/page-111-space-rocks.html

This help’s us understand the composition of components in/on solar system bodies.

Dips in the observed light spectrum, also known as, lines of absorption occur as gasses absorb energy from light at specific points along the light spectrum.

These dips or darkened zones (lines of absorption) leave a finger print which identify elements and compounds.

In this image the dark absorption bands appear as lines of emission which occur as the result of emitted not reflected (absorbed) light.

Lines of absorption

Lines of emission

Lines of emission

-

Thomas Mansencal – Colour Science for Python

Read more: Thomas Mansencal – Colour Science for Pythonhttps://thomasmansencal.substack.com/p/colour-science-for-python

https://www.colour-science.org/

Colour is an open-source Python package providing a comprehensive number of algorithms and datasets for colour science. It is freely available under the BSD-3-Clause terms.

LIGHTING

-

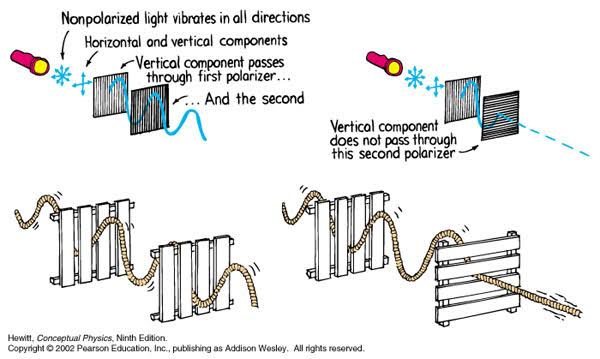

Polarised vs unpolarized filtering

Read more: Polarised vs unpolarized filteringA light wave that is vibrating in more than one plane is referred to as unpolarized light. …

Polarized light waves are light waves in which the vibrations occur in a single plane. The process of transforming unpolarized light into polarized light is known as polarization.

en.wikipedia.org/wiki/Polarizing_filter_(photography)

The most common use of polarized technology is to reduce lighting complexity on the subject.

(more…)

Details such as glare and hard edges are not removed, but greatly reduced. -

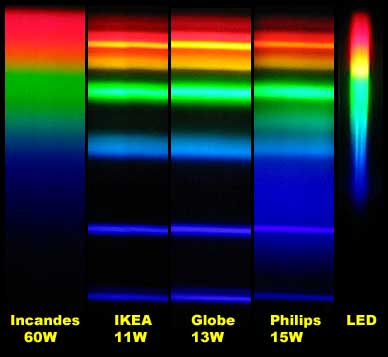

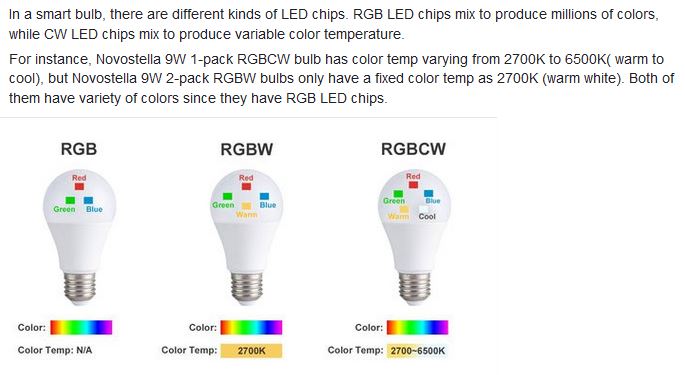

Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022

Read more: Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022Comparison to the commercial side

https://www.ecolorled.com/blog/detail/what-is-rgb-rgbw-rgbic-strip-lights

RGBW (RGB + White) LED strip uses a 4-in-1 LED chip made up of red, green, blue, and white.

RGBWW (RGB + White + Warm White) LED strip uses either a 5-in-1 LED chip with red, green, blue, white, and warm white for color mixing. The only difference between RGBW and RGBWW is the intensity of the white color. The term RGBCCT consists of RGB and CCT. CCT (Correlated Color Temperature) means that the color temperature of the led strip light can be adjusted to change between warm white and white. Thus, RGBWW strip light is another name of RGBCCT strip.

RGBCW is the acronym for Red, Green, Blue, Cold, and Warm. These 5-in-1 chips are used in supper bright smart LED lighting products

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Eddie Yoon – There’s a big misconception about AI creative

-

AnimationXpress.com interviews Daniele Tosti for TheCgCareer.com channel

-

PixelSham – Introduction to Python 2022

-

N8N.io – From Zero to Your First AI Agent in 25 Minutes

-

Web vs Printing or digital RGB vs CMYK

-

Photography basics: Production Rendering Resolution Charts

-

VFX pipeline – Render Wall Farm management topics

-

What the Boeing 737 MAX’s crashes can teach us about production business – the effects of commoditisation

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.