COMPOSITION

-

SlowMoVideo – How to make a slow motion shot with the open source program

http://slowmovideo.granjow.net/

slowmoVideo is an open-source program that creates slow-motion videos from your footage.

Slow motion cinematography is the result of playing back frames for a longer duration than they were exposed. For example, if you expose 240 frames of film in one second, then play them back at 24 fps, the resulting movie is 10 times longer (slower) than the original filmed event.
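The arithmetic behind that example can be written as a small sketch. The function names below are illustrative and not part of slowmoVideo.

```python
def slowdown_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times longer the footage runs when played back."""
    if capture_fps <= 0 or playback_fps <= 0:
        raise ValueError("frame rates must be positive")
    return capture_fps / playback_fps


def playback_duration(event_seconds: float, capture_fps: float,
                      playback_fps: float) -> float:
    """Screen time, in seconds, of an event filmed at capture_fps."""
    frames = event_seconds * capture_fps  # frames exposed during the event
    return frames / playback_fps


print(slowdown_factor(240, 24))         # 10.0
print(playback_duration(1.0, 240, 24))  # 10.0
```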

Film cameras are relatively simple mechanical devices that allow you to crank up the speed to whatever rate the shutter and pull-down mechanism allow. Some film cameras can operate at 2,500 fps or higher (although film shot in these cameras often needs some readjustment in postproduction). Video, on the other hand, is always captured, recorded, and played back at a fixed rate, with a current limit around 60 fps. This makes extreme slow motion effects harder to achieve (and less elegant) on video, because slowing down the video results in each frame being held still on the screen for a long time, whereas with high-frame-rate film there are plenty of frames to fill the longer durations of time. On video, the slow motion effect is more like a slide show than smooth, continuous motion.

One obvious solution is to shoot film at high speed, then transfer it to video (a case where film still has a clear advantage, sorry George). Another possibility is to cross dissolve or blur from one frame to the next. This adds a smooth transition from one still frame to the next. The blur reduces the sharpness of the image, and compared to slowing down images shot at a high frame rate, this is somewhat of a cheat. However, there isn’t much you can do about it until video can be recorded at much higher rates. Of course, many film cameras can’t shoot at high frame rates either, so the whole super-slow-motion endeavor is somewhat specialized no matter what medium you are using. (There are some high speed digital cameras available now that allow you to capture lots of digital frames directly to your computer, so technology is starting to catch up with film. However, this feature isn’t going to appear in consumer camcorders any time soon.)
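The cross-dissolve idea described above can be sketched in a few lines. This is a minimal illustration, assuming each frame is a flat list of pixel intensities; the names `cross_dissolve` and `slow_down` are hypothetical and this is not how any particular tool implements it.

```python
from typing import List

Frame = List[float]  # one frame as a flat list of pixel intensities


def cross_dissolve(a: Frame, b: Frame, t: float) -> Frame:
    """Blend frame a into frame b; t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def slow_down(frames: List[Frame], factor: int) -> List[Frame]:
    """Stretch a clip by an integer factor, filling the gaps with dissolves."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    if not frames:
        return []
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        for step in range(factor):
            out.append(cross_dissolve(a, b, step / factor))
    out.append(frames[-1])  # keep the final source frame
    return out


clip = [[0.0], [1.0]]      # two one-pixel frames: black, then white
print(slow_down(clip, 4))  # [[0.0], [0.25], [0.5], [0.75], [1.0]]
```

The in-between frames are mixtures of their neighbours, which is why the result looks soft compared with footage actually shot at a high frame rate.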

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

DESIGN

-

Goga Tandashvili – bas-relief master

Goga Tandashvili is a master of the art of bas-relief. Using this technique, he creates stunning figures that are slightly raised from a flat surface, bringing scenes inspired by the natural world to life. (Source: @moltenimmersiveart, Molten Immersive Art)

-

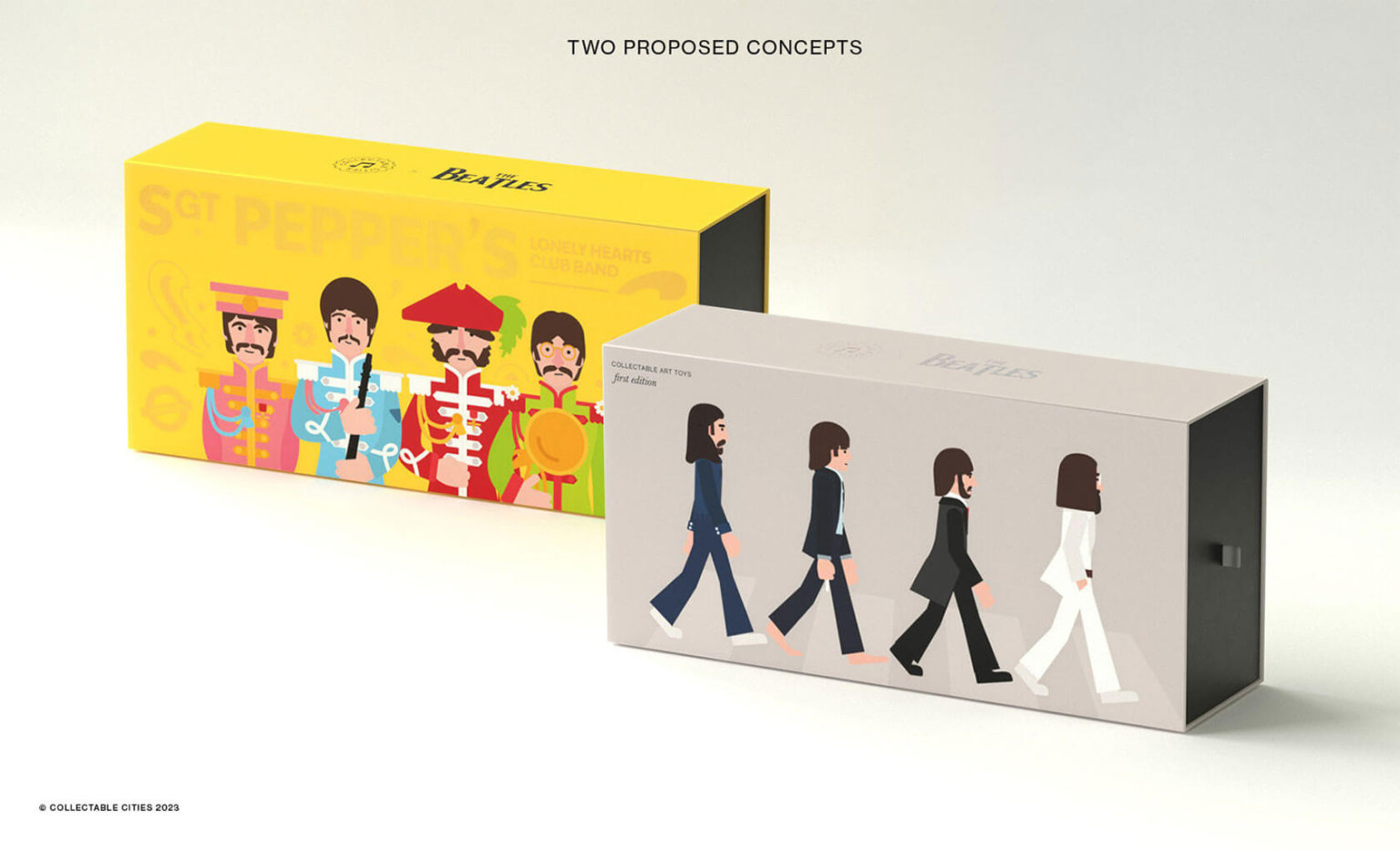

Creative duo Joseph Lattimer and Caitlin Derer Create Absolutely Amazing The Beatles Collectable Toys

https://designyoutrust.com/2024/11/artist-duo-creates-absolutely-amazing-the-beatles-collectable-toys

COLOR

-

Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022

Comparison to the commercial side

https://www.ecolorled.com/blog/detail/what-is-rgb-rgbw-rgbic-strip-lights

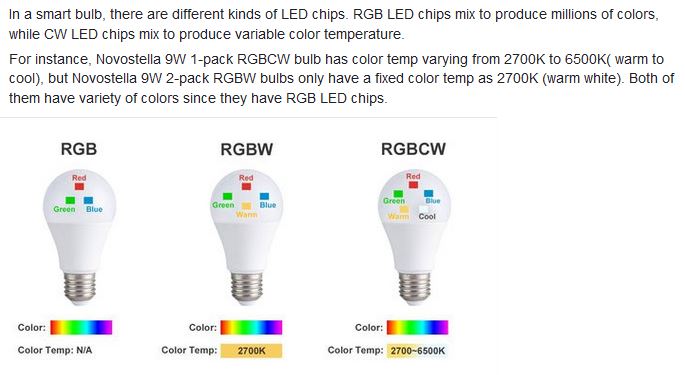

RGBW (RGB + White) LED strip uses a 4-in-1 LED chip made up of red, green, blue, and white.

RGBWW (RGB + White + Warm White) LED strip uses a 5-in-1 LED chip with red, green, blue, white, and warm white for color mixing. The only difference between RGBW and RGBWW is the intensity of the white color. The term RGBCCT consists of RGB and CCT. CCT (Correlated Color Temperature) means that the color temperature of the LED strip light can be adjusted to change between warm white and white. Thus, RGBWW strip light is another name for RGBCCT strip.

RGBCW is the acronym for Red, Green, Blue, Cold, and Warm. These 5-in-1 chips are used in super bright smart LED lighting products.

-

Capturing the world in HDR for real time projects – Call of Duty: Advanced Warfare

Real-World Measurements for Call of Duty: Advanced Warfare

www.activision.com/cdn/research/Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

Local version

Real_World_Measurements_for_Call_of_Duty_Advanced_Warfare.pdf

-

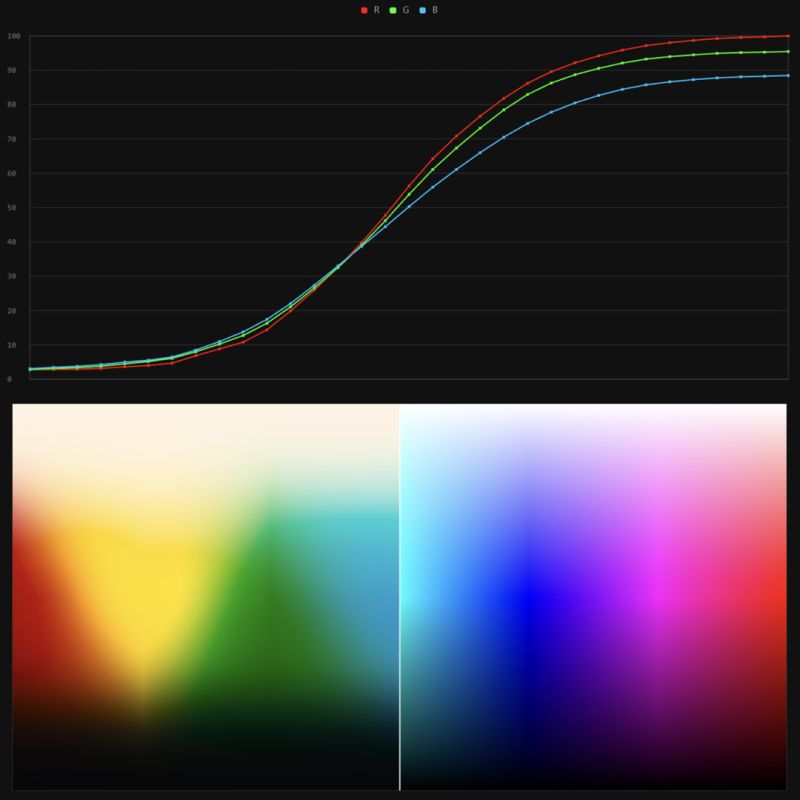

Stefan Ringelschwandtner – LUT Inspector tool

It lets you load any .cube LUT right in your browser, see the RGB curves, and use a split view on the Granger Test Image to compare the original vs. LUT-applied version in real time. It is well suited to spotting hue shifts, saturation changes, and contrast tweaks.

https://mononodes.com/lut-inspector/
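To see what a .cube file holds, here is a minimal sketch of a parser and a nearest-neighbour lookup. It is not the LUT Inspector's code: the function names `parse_cube` and `lookup` are made up for illustration, and it assumes a 3D LUT with the default 0 to 1 domain whose rows are ordered with red changing fastest.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def parse_cube(text: str) -> Tuple[int, List[RGB]]:
    """Parse the text of a 3D .cube LUT into (size, table)."""
    size = 0
    table: List[RGB] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        if parts[0] == "LUT_3D_SIZE":
            size = int(parts[1])
        elif parts[0][0].isalpha():
            continue  # other keywords (TITLE, DOMAIN_MIN, ...) are ignored here
        else:
            r, g, b = (float(p) for p in parts[:3])
            table.append((r, g, b))
    if size == 0 or len(table) != size ** 3:
        raise ValueError("not a complete 3D LUT")
    return size, table


def lookup(size: int, table: List[RGB], r: float, g: float, b: float) -> RGB:
    """Nearest-neighbour lookup for inputs in the 0..1 range."""
    def idx(v: float) -> int:
        return min(size - 1, max(0, round(v * (size - 1))))
    # Red varies fastest, then green, then blue.
    return table[idx(r) + size * idx(g) + size * size * idx(b)]
```

A real viewer would interpolate between neighbouring entries (trilinear or tetrahedral) instead of snapping to the nearest one; the nearest-neighbour version just keeps the sketch short.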

-

If a blind person gained sight, could they recognize objects previously touched?

Blind people who regain their sight may find themselves in a world they don’t immediately comprehend. “It would be more like a sighted person trying to rely on tactile information,” Moore says.

Learning to see is a developmental process, just like learning language, Prof Cathleen Moore continues. “As far as vision goes, a three-and-a-half year old child is already a well-calibrated system.”

LIGHTING

-

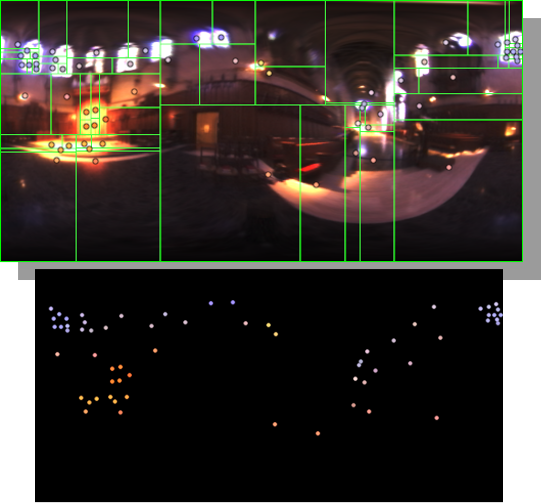

HDRI Median Cut plugin

www.hdrlabs.com/picturenaut/plugins.html

Note: The Median Cut algorithm is typically used for color quantization, which involves reducing the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. If you’re interested in identifying the brightest areas, you might want to look into other methods such as thresholding, histogram analysis, or edge detection, for example through OpenCV.
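As a rough illustration of the thresholding approach, here is a dependency-free sketch in plain Python. It assumes the image is a nested list of linear RGB tuples and uses Rec. 709 luminance weights; the function names are hypothetical. With OpenCV, `cv2.threshold` and `cv2.minMaxLoc` cover similar ground on real image arrays.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


def luminance(p: Pixel) -> float:
    """Relative luminance of a linear RGB pixel (Rec. 709 weights)."""
    r, g, b = p
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def brightest_mask(image: Image, fraction: float = 0.9) -> List[List[bool]]:
    """Mark pixels whose luminance is at least `fraction` of the image peak."""
    lum = [[luminance(p) for p in row] for row in image]
    peak = max(max(row) for row in lum)
    return [[v >= fraction * peak for v in row] for row in lum]


def brightest_pixel(image: Image) -> Tuple[int, int]:
    """(row, column) of the single brightest pixel."""
    best = max(
        (luminance(p), y, x)
        for y, row in enumerate(image)
        for x, p in enumerate(row)
    )
    return best[1], best[2]


# A tiny 2x2 HDR-like image with one very bright "sun" pixel.
img = [
    [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2)],
    [(50.0, 45.0, 40.0), (0.3, 0.3, 0.3)],
]
print(brightest_pixel(img))  # (1, 0)
```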

-

domeble – Hi-Resolution CGI Backplates and 360° HDRI

When collecting HDRIs, make sure the data includes basic metadata, such as:

- ISO

- Aperture

- Exposure time or shutter time

- Color temperature

- Color space

- Exposure value (what the sensor receives of the sun intensity in lux)

- 7+ brackets (with 5 or 6 being the perceived balanced exposure)
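For the bracketing item, a set of shutter times can be generated around a base exposure. This is only a sketch: the function name, the default of 7 brackets, and the spacing in stops are assumptions for illustration, not a capture recommendation from the original post.

```python
from typing import List


def bracket_shutter_times(base_seconds: float, count: int = 7,
                          step_stops: float = 2.0) -> List[float]:
    """Shutter times centred on base_seconds, spaced step_stops apart."""
    if count < 1:
        raise ValueError("count must be at least 1")
    middle = (count - 1) / 2
    # Each stop doubles (or halves) the exposure time.
    return [base_seconds * 2.0 ** ((i - middle) * step_stops)
            for i in range(count)]


print(bracket_shutter_times(1.0, count=7, step_stops=1.0))
# [0.125, 0.25, 0.5, 1.0, 2.0, 4.0, 8.0]
```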

In image processing, computer graphics, and photography, high dynamic range imaging (HDRI or just HDR) is a set of techniques that allow a greater dynamic range of luminance between the lightest and darkest areas of an image than standard digital imaging techniques or photographic methods. Luminance is a photometric measure of the luminous intensity per unit area of light travelling in a given direction; it describes the amount of light that passes through or is emitted from a particular area and falls within a given solid angle. This wider dynamic range allows HDR images to represent more accurately the wide range of intensity levels found in real scenes, ranging from direct sunlight to faint starlight and to the deepest shadows.

The two main sources of HDR imagery are computer renderings and the merging of multiple photographs, each of which is a low dynamic range (LDR) or standard dynamic range (SDR) image. Tone mapping (look-up) techniques, which reduce overall contrast to facilitate display of HDR images on devices with lower dynamic range, can be applied to produce images with preserved or exaggerated local contrast for artistic effect.

Photography

In photography, dynamic range is measured in exposure value (EV) differences, or stops, between the brightest and darkest parts of the image that show detail. An exposure value denotes all combinations of camera shutter speed and relative aperture that give the same exposure; the concept was developed in Germany in the 1950s. An increase of one EV or one stop is a doubling of the amount of light.
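The relationship between stops and doublings of light can be sketched as follows. The EV formula used is the standard definition, EV = log2(N² / t) for f-number N and exposure time t in seconds; the function names are illustrative.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float) -> float:
    """EV = log2(N^2 / t) for f-number N and exposure time t in seconds."""
    return math.log2(f_number ** 2 / shutter_seconds)


def stops_between(brighter: float, darker: float) -> float:
    """Dynamic range in stops between two linear light levels."""
    return math.log2(brighter / darker)


print(exposure_value(1.0, 1.0))    # 0.0  (f/1 at 1 second)
print(stops_between(1024.0, 1.0))  # 10.0 (ten doublings of light)
```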

The human response to brightness is well approximated by Stevens’ power law, which over a reasonable range is close to logarithmic, as described by the Weber–Fechner law. This is one reason that logarithmic measures of light intensity are often used as well.
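For reference, the two laws are commonly written as shown here, where $I$ is the physical stimulus intensity, $I_0$ is a threshold intensity, and $k_S$, $k_W$, and $a$ are fitted constants. The symbols are chosen for illustration and do not come from the original post.

$$
\psi(I) = k_S\, I^{a} \quad \text{(Stevens' power law)}, \qquad
p(I) = k_W \ln\frac{I}{I_0} \quad \text{(Weber–Fechner law)}
$$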

HDR is short for High Dynamic Range. It’s a term used to describe an image that contains a greater exposure range than the “black” to “white” that 8-bit or 16-bit integer formats (JPEG, TIFF, PNG) can describe. Whereas these Low Dynamic Range (LDR) images can hold perhaps 8 to 10 f-stops of image information, HDR images can describe more than 30 stops and are stored in 32-bit images.

-

Is a MacBeth Colour Rendition Chart the Safest Way to Calibrate a Camera?

www.colour-science.org/posts/the-colorchecker-considered-mostly-harmless/

“Unless you have all the relevant spectral measurements, a colour rendition chart should not be used to perform colour-correction of camera imagery but only for white balancing and relative exposure adjustments.”

“Using a colour rendition chart for colour-correction might dramatically increase error if the scene light source spectrum is different from the illuminant used to compute the colour rendition chart’s reference values.”

“other factors make using a colour rendition chart unsuitable for camera calibration:

– Uncontrolled geometry of the colour rendition chart with the incident illumination and the camera.

– Unknown sample reflectances and ageing as the colour of the samples vary with time.

– Low samples count.

– Camera noise and flare.

– Etc…”

“Those issues are well understood in the VFX industry, and when receiving plates, we almost exclusively use colour rendition charts to white balance and perform relative exposure adjustments, i.e. plate neutralisation.”

-

Magnific.ai Relight – change the entire lighting of a scene

It’s a new Magnific spell that allows you to change the entire lighting of a scene and, optionally, the background with just:

1/ A prompt OR

2/ A reference image OR

3/ A light map (drawing your own lights)

https://x.com/javilopen/status/1805274155065176489


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.