COMPOSITION

-

7 Commandments of Film Editing and composition

Read more: 7 Commandments of Film Editing and composition1. Watch every frame of raw footage twice. On the second time, take notes. If you don’t do this and try to start developing a scene premature, then it’s a big disservice to yourself and to the director, actors and production crew.

2. Nurture the relationships with the director. You are the secondary person in the relationship. Be calm and continually offer solutions. Get the main intention of the film as soon as possible from the director.

3. Organize your media so that you can find any shot instantly.

4. Factor in extra time for renders, exports, errors and crashes.

5. Attempt edits and ideas that shouldn’t work. It just might work. Until you do it and watch it, you won’t know. Don’t rule out ideas just because they don’t make sense in your mind.

6. Spend more time on your audio. It’s the glue of your edit. AUDIO SAVES EVERYTHING. Create fluid and seamless audio under your video.

7. Make cuts for the scene, but always in context for the whole film. Have a macro and a micro view at all times.

-

Composition and The Expressive Nature Of Light

Read more: Composition and The Expressive Nature Of Lighthttp://www.huffingtonpost.com/bill-danskin/post_12457_b_10777222.html

George Sand once said “ The artist vocation is to send light into the human heart.”

DESIGN

-

Reuben Wu – Glowing Geometric Light Paintings

Read more: Reuben Wu – Glowing Geometric Light Paintingswww.thisiscolossal.com/2021/04/reuben-wu-ex-stasis/

Wu programmed a stick of 200 LED lights to shift in color and shape above the calm landscapes. He captured the mesmerizing movements in-camera, and through a combination of stills, timelapse, and real-time footage, produced four audiovisual works that juxtapose the natural scenery with the artificially produced light and electronic sounds.

-

COLOR

-

Tim Kang – calibrated white light values in sRGB color space

Read more: Tim Kang – calibrated white light values in sRGB color space8bit sRGB encoded

2000K 255 139 22

2700K 255 172 89

3000K 255 184 109

3200K 255 190 122

4000K 255 211 165

4300K 255 219 178

D50 255 235 205

D55 255 243 224

D5600 255 244 227

D6000 255 249 240

D65 255 255 255

D10000 202 221 255

D20000 166 196 2558bit Rec709 Gamma 2.4

2000K 255 145 34

2700K 255 177 97

3000K 255 187 117

3200K 255 193 129

4000K 255 214 170

4300K 255 221 182

D50 255 236 208

D55 255 243 226

D5600 255 245 229

D6000 255 250 241

D65 255 255 255

D10000 204 222 255

D20000 170 199 2558bit Display P3 encoded

2000K 255 154 63

2700K 255 185 109

3000K 255 195 127

3200K 255 201 138

4000K 255 219 176

4300K 255 225 187

D50 255 239 212

D55 255 245 228

D5600 255 246 231

D6000 255 251 242

D65 255 255 255

D10000 208 223 255

D20000 175 199 25510bit Rec2020 PQ (100 nits)

2000K 520 435 273

2700K 520 466 358

3000K 520 475 384

3200K 520 480 399

4000K 520 495 446

4300K 520 500 458

D50 520 510 482

D55 520 514 497

D5600 520 514 500

D6000 520 517 509

D65 520 520 520

D10000 479 489 520

D20000 448 464 520 -

Pattern generators

Read more: Pattern generatorshttp://qrohlf.com/trianglify-generator/

https://halftonepro.com/app/polygons#

https://mattdesl.svbtle.com/generative-art-with-nodejs-and-canvas

https://www.patterncooler.com/

http://permadi.com/java/spaint/spaint.html

https://dribbble.com/shots/1847313-Kaleidoscope-Generator-PSD

http://eskimoblood.github.io/gerstnerizer/

http://www.stripegenerator.com/

http://btmills.github.io/geopattern/geopattern.html

http://fractalarchitect.net/FA4-Random-Generator.html

https://sciencevsmagic.net/fractal/#0605,0000,3,2,0,1,2

https://sites.google.com/site/mandelbulber/home

-

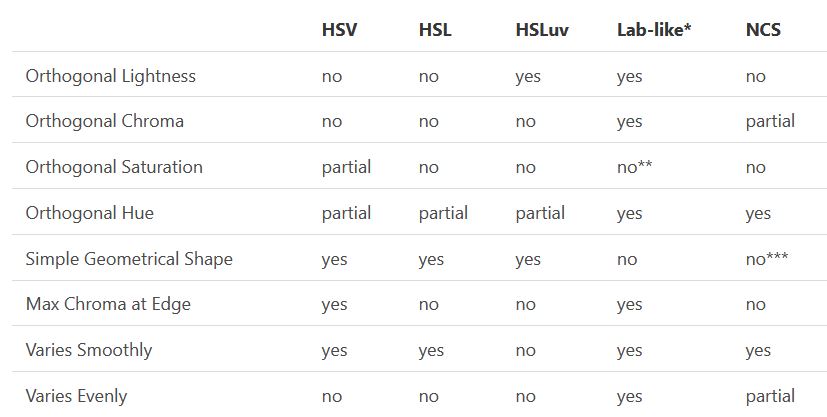

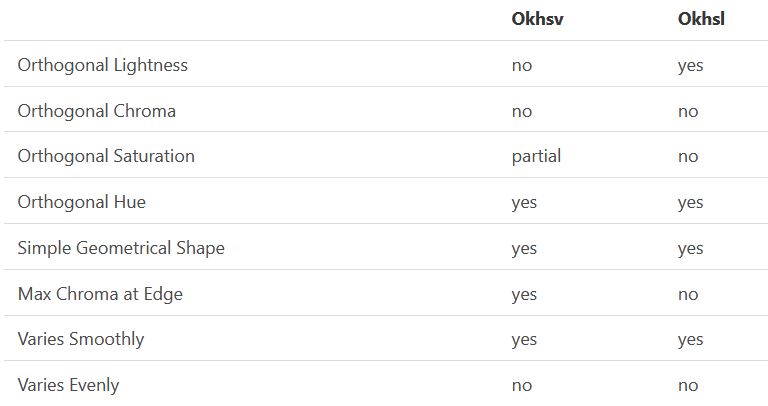

Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color picking

Read more: Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color pickinghttps://bottosson.github.io/misc/colorpicker

https://bottosson.github.io/posts/colorpicker/

https://www.smashingmagazine.com/2024/10/interview-bjorn-ottosson-creator-oklab-color-space/

One problem with sRGB is that in a gradient between blue and white, it becomes a bit purple in the middle of the transition. That’s because sRGB really isn’t created to mimic how the eye sees colors; rather, it is based on how CRT monitors work. That means it works with certain frequencies of red, green, and blue, and also the non-linear coding called gamma. It’s a miracle it works as well as it does, but it’s not connected to color perception. When using those tools, you sometimes get surprising results, like purple in the gradient.

There were also attempts to create simple models matching human perception based on XYZ, but as it turned out, it’s not possible to model all color vision that way. Perception of color is incredibly complex and depends, among other things, on whether it is dark or light in the room and the background color it is against. When you look at a photograph, it also depends on what you think the color of the light source is. The dress is a typical example of color vision being very context-dependent. It is almost impossible to model this perfectly.

I based Oklab on two other color spaces, CIECAM16 and IPT. I used the lightness and saturation prediction from CIECAM16, which is a color appearance model, as a target. I actually wanted to use the datasets used to create CIECAM16, but I couldn’t find them.

IPT was designed to have better hue uniformity. In experiments, they asked people to match light and dark colors, saturated and unsaturated colors, which resulted in a dataset for which colors, subjectively, have the same hue. IPT has a few other issues but is the basis for hue in Oklab.

In the Munsell color system, colors are described with three parameters, designed to match the perceived appearance of colors: Hue, Chroma and Value. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. Modern color spaces and models, such as CIELAB, Cam16 and Björn Ottosson own Oklab, are very similar in their construction.

By far the most used color spaces today for color picking are HSL and HSV, two representations introduced in the classic 1978 paper “Color Spaces for Computer Graphics”. HSL and HSV designed to roughly correlate with perceptual color properties while being very simple and cheap to compute.

Today HSL and HSV are most commonly used together with the sRGB color space.

One of the main advantages of HSL and HSV over the different Lab color spaces is that they map the sRGB gamut to a cylinder. This makes them easy to use since all parameters can be changed independently, without the risk of creating colors outside of the target gamut.

The main drawback on the other hand is that their properties don’t match human perception particularly well.

Reconciling these conflicting goals perfectly isn’t possible, but given that HSV and HSL don’t use anything derived from experiments relating to human perception, creating something that makes a better tradeoff does not seem unreasonable.

With this new lightness estimate, we are ready to look into the construction of Okhsv and Okhsl.

LIGHTING

-

Cinematographers Blueprint 300dpi poster

Read more: Cinematographers Blueprint 300dpi posterThe 300dpi digital poster is now available to all PixelSham.com subscribers.

If you have already subscribed and wish a copy, please send me a note through the contact page.

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental processhttps://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRI

Read more: Vahan Sosoyan MakeHDR – an OpenFX open source plug-in for merging multiple LDR images into a single HDRIhttps://github.com/Sosoyan/make-hdr

Feature notes

- Merge up to 16 inputs with 8, 10 or 12 bit depth processing

- User friendly logarithmic Tone Mapping controls within the tool

- Advanced controls such as Sampling rate and Smoothness

Available at cross platform on Linux, MacOS and Windows Works consistent in compositing applications like Nuke, Fusion, Natron.

NOTE: The goal is to clean the initial individual brackets before or at merging time as much as possible.

This means:- keeping original shooting metadata

- de-fringing

- removing aberration (through camera lens data or automatically)

- at 32 bit

- in ACEScg (or ACES) wherever possible

-

Disney’s Moana Island Scene – Free data set

Read more: Disney’s Moana Island Scene – Free data sethttps://www.disneyanimation.com/resources/moana-island-scene/

This data set contains everything necessary to render a version of the Motunui island featured in the 2016 film Moana.

-

Rendering – BRDF – Bidirectional reflectance distribution function

Read more: Rendering – BRDF – Bidirectional reflectance distribution functionhttp://en.wikipedia.org/wiki/Bidirectional_reflectance_distribution_function

The bidirectional reflectance distribution function is a four-dimensional function that defines how light is reflected at an opaque surface

http://www.cs.ucla.edu/~zhu/tutorial/An_Introduction_to_BRDF-Based_Lighting.pdf

In general, when light interacts with matter, a complicated light-matter dynamic occurs. This interaction depends on the physical characteristics of the light as well as the physical composition and characteristics of the matter.

That is, some of the incident light is reflected, some of the light is transmitted, and another portion of the light is absorbed by the medium itself.

A BRDF describes how much light is reflected when light makes contact with a certain material. Similarly, a BTDF (Bi-directional Transmission Distribution Function) describes how much light is transmitted when light makes contact with a certain material

http://www.cs.princeton.edu/~smr/cs348c-97/surveypaper.html

It is difficult to establish exactly how far one should go in elaborating the surface model. A truly complete representation of the reflective behavior of a surface might take into account such phenomena as polarization, scattering, fluorescence, and phosphorescence, all of which might vary with position on the surface. Therefore, the variables in this complete function would be:

incoming and outgoing angle incoming and outgoing wavelength incoming and outgoing polarization (both linear and circular) incoming and outgoing position (which might differ due to subsurface scattering) time delay between the incoming and outgoing light ray

-

Custom bokeh in a raytraced DOF render

Read more: Custom bokeh in a raytraced DOF renderTo achieve a custom pinhole camera effect with a custom bokeh in Arnold Raytracer, you can follow these steps:

- Set the render camera with a focal length around 50 (or as needed)

- Set the F-Stop to a high value (e.g., 22).

- Set the focus distance as you require

- Turn on DOF

- Place a plane a few cm in front of the camera.

- Texture the plane with a transparent shape at the center of it. (Transmission with no specular roughness)

-

About green screens

Read more: About green screenshackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens is also known as Chroma Green and is valued at approximately 354C in the Pantone color matching system (PMS).

Chroma Green can be broken down in many different ways. Here is green screen green as other values useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933Chroma Key Green is reasonably close to an 18% gray reflectance.

Illuminate your green screen with an uniform source with less than 2/3 EV variation.

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting.

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

What’s the Difference Between Ray Casting, Ray Tracing, Path Tracing and Rasterization? Physical light tracing…

-

FFmpeg – examples and convenience lines

-

Daniele Tosti Interview for the magazine InCG, Taiwan, Issue 28, 201609

-

UV maps

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent (and artists)

-

Top 3D Printing Website Resources

-

Python and TCL: Tips and Tricks for Foundry Nuke

-

White Balance is Broken!

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.