COMPOSITION

DESIGN

COLOR

-

No one could see the colour blue until modern times

Read more: No one could see the colour blue until modern timeshttps://www.businessinsider.com/what-is-blue-and-how-do-we-see-color-2015-2

The way humans see the world… until we have a way to describe something, even something so fundamental as a colour, we may not even notice that something it’s there.

Ancient languages didn’t have a word for blue — not Greek, not Chinese, not Japanese, not Hebrew, not Icelandic cultures. And without a word for the colour, there’s evidence that they may not have seen it at all.

https://www.wnycstudios.org/story/211119-colorsEvery language first had a word for black and for white, or dark and light. The next word for a colour to come into existence — in every language studied around the world — was red, the colour of blood and wine.

After red, historically, yellow appears, and later, green (though in a couple of languages, yellow and green switch places). The last of these colours to appear in every language is blue.The only ancient culture to develop a word for blue was the Egyptians — and as it happens, they were also the only culture that had a way to produce a blue dye.

https://mymodernmet.com/shades-of-blue-color-history/True blue hues are rare in the natural world because synthesizing pigments that absorb longer-wavelength light (reds and yellows) while reflecting shorter-wavelength blue light requires exceptionally elaborate molecular structures—biochemical feats that most plants and animals simply don’t undertake.

When you gaze at a blueberry’s deep blue surface, you’re actually seeing structural coloration rather than a true blue pigment. A fine, waxy bloom on the berry’s skin contains nanostructures that preferentially scatter blue and violet light, giving the fruit its signature blue sheen even though its inherent pigment is reddish.

Similarly, many of nature’s most striking blues—like those of blue jays and morpho butterflies—arise not from blue pigments but from microscopic architectures in feathers or wing scales. These tiny ridges and air pockets manipulate incoming light so that blue wavelengths emerge most prominently, creating vivid, angle-dependent colors through scattering rather than pigment alone.

(more…) -

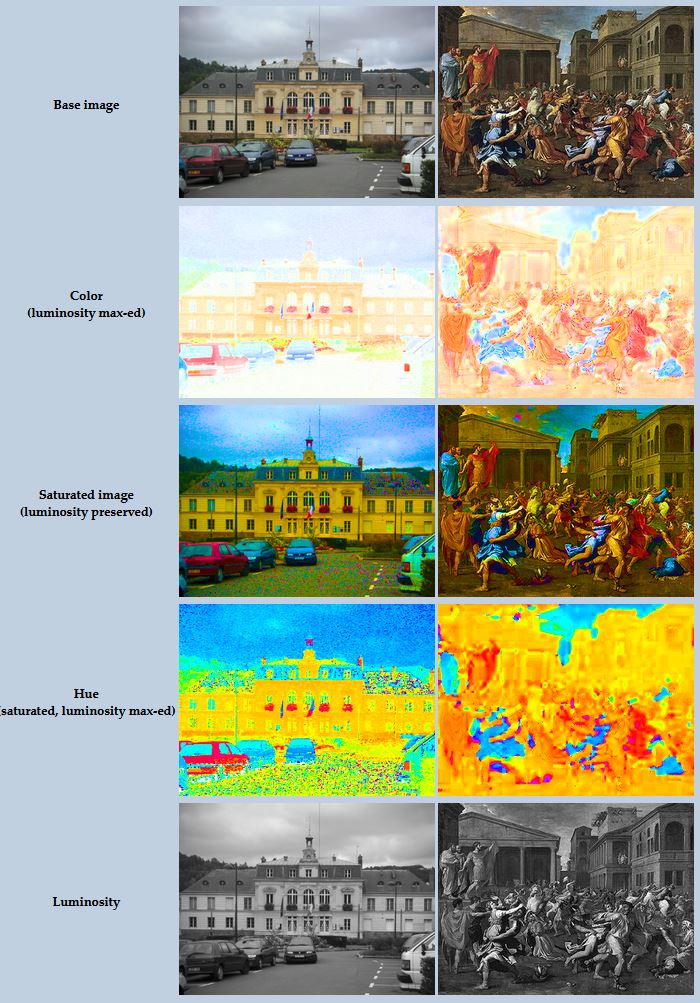

Tim Kang – calibrated white light values in sRGB color space

Read more: Tim Kang – calibrated white light values in sRGB color space8bit sRGB encoded

2000K 255 139 22

2700K 255 172 89

3000K 255 184 109

3200K 255 190 122

4000K 255 211 165

4300K 255 219 178

D50 255 235 205

D55 255 243 224

D5600 255 244 227

D6000 255 249 240

D65 255 255 255

D10000 202 221 255

D20000 166 196 2558bit Rec709 Gamma 2.4

2000K 255 145 34

2700K 255 177 97

3000K 255 187 117

3200K 255 193 129

4000K 255 214 170

4300K 255 221 182

D50 255 236 208

D55 255 243 226

D5600 255 245 229

D6000 255 250 241

D65 255 255 255

D10000 204 222 255

D20000 170 199 2558bit Display P3 encoded

2000K 255 154 63

2700K 255 185 109

3000K 255 195 127

3200K 255 201 138

4000K 255 219 176

4300K 255 225 187

D50 255 239 212

D55 255 245 228

D5600 255 246 231

D6000 255 251 242

D65 255 255 255

D10000 208 223 255

D20000 175 199 25510bit Rec2020 PQ (100 nits)

2000K 520 435 273

2700K 520 466 358

3000K 520 475 384

3200K 520 480 399

4000K 520 495 446

4300K 520 500 458

D50 520 510 482

D55 520 514 497

D5600 520 514 500

D6000 520 517 509

D65 520 520 520

D10000 479 489 520

D20000 448 464 520 -

PBR Color Reference List for Materials – by Grzegorz Baran

Read more: PBR Color Reference List for Materials – by Grzegorz Baran“The list should be helpful for every material artist who work on PBR materials as it contains over 200 color values measured with PCE-RGB2 1002 Color Spectrometer device and presented in linear and sRGB (2.2) gamma space.

All color values, HUE and Saturation in this list come from measurements taken with PCE-RGB2 1002 Color Spectrometer device and are presented in linear and sRGB (2.2) gamma space (more info at the end of this video) I calculated Relative Luminance and Luminance values based on captured color using my own equation which takes color based luminance perception into consideration. Bare in mind that there is no ‘one’ color per substance as nothing in nature is even 100% uniform and any value in +/-10% range from these should be considered as correct one. Therefore this list should be always considered as a color reference for material’s albedos, not ulitimate and absolute truth.“

-

Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacy

Read more: Types of Film Lights and their efficiency – CRI, Color Temperature and Luminous Efficacynofilmschool.com/types-of-film-lights

“Not every light performs the same way. Lights and lighting are tricky to handle. You have to plan for every circumstance. But the good news is, lighting can be adjusted. Let’s look at different factors that affect lighting in every scene you shoot. “

Use CRI, Luminous Efficacy and color temperature controls to match your needs.Color Temperature

Color temperature describes the “color” of white light by a light source radiated by a perfect black body at a given temperature measured in degrees Kelvinhttps://www.pixelsham.com/2019/10/18/color-temperature/

CRI

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. “https://www.studiobinder.com/blog/what-is-color-rendering-index

(more…)

LIGHTING

-

Photography basics: Solid Angle measures

Read more: Photography basics: Solid Angle measureshttp://www.calculator.org/property.aspx?name=solid+angle

A measure of how large the object appears to an observer looking from that point. Thus. A measure for objects in the sky. Useful to retuen the size of the sun and moon… and in perspective, how much of their contribution to lighting. Solid angle can be represented in ‘angular diameter’ as well.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). By default in terms of the total celestial sphere and before atmospheric’s scattering, the Sun and the Moon subtend fractional areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On earth the sun is likely closer to 0.00011 solid angle after athmospheric scattering. The sun as perceived from earth has a diameter of 0.53 degrees. This is about 0.000064 solid angle.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2q = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

Convert between light exposure and intensity

Read more: Convert between light exposure and intensityimport math,sys def Exposure2Intensity(exposure): exp = float(exposure) result = math.pow(2,exp) print(result) Exposure2Intensity(0) def Intensity2Exposure(intensity): inarg = float(intensity) if inarg == 0: print("Exposure of zero intensity is undefined.") return if inarg < 1e-323: inarg = max(inarg, 1e-323) print("Exposure of negative intensities is undefined. Clamping to a very small value instead (1e-323)") result = math.log(inarg, 2) print(result) Intensity2Exposure(0.1)Why Exposure?

Exposure is a stop value that multiplies the intensity by 2 to the power of the stop. Increasing exposure by 1 results in double the amount of light.

Artists think in “stops.” Doubling or halving brightness is easy math and common in grading and look-dev.

Exposure counts doublings in whole stops:- +1 stop = ×2 brightness

- −1 stop = ×0.5 brightness

This gives perceptually even controls across both bright and dark values.

Why Intensity?

Intensity is linear.

It’s what render engines and compositors expect when:- Summing values

- Averaging pixels

- Multiplying or filtering pixel data

Use intensity when you need the actual math on pixel/light data.

Formulas (from your Python)

- Intensity from exposure: intensity = 2**exposure

- Exposure from intensity: exposure = log₂(intensity)

Guardrails:

- Intensity must be > 0 to compute exposure.

- If intensity = 0 → exposure is undefined.

- Clamp tiny values (e.g.

1e−323) before using log₂.

Use Exposure (stops) when…

- You want artist-friendly sliders (−5…+5 stops)

- Adjusting look-dev or grading in even stops

- Matching plates with quick ±1 stop tweaks

- Tweening brightness changes smoothly across ranges

Use Intensity (linear) when…

- Storing raw pixel/light values

- Multiplying textures or lights by a gain

- Performing sums, averages, and filters

- Feeding values to render engines expecting linear data

Examples

- +2 stops → 2**2 = 4.0 (×4)

- +1 stop → 2**1 = 2.0 (×2)

- 0 stop → 2**0 = 1.0 (×1)

- −1 stop → 2**(−1) = 0.5 (×0.5)

- −2 stops → 2**(−2) = 0.25 (×0.25)

- Intensity 0.1 → exposure = log₂(0.1) ≈ −3.32

Rule of thumb

Think in stops (exposure) for controls and matching.

Compute in linear (intensity) for rendering and math. -

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

Read more: Composition – 5 tips for creating perfect cinematic lighting and making your work look stunninghttp://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

-

HDRI shooting and editing by Xuan Prada and Greg Zaal

Read more: HDRI shooting and editing by Xuan Prada and Greg Zaalwww.xuanprada.com/blog/2014/11/3/hdri-shooting

http://blog.gregzaal.com/2016/03/16/make-your-own-hdri/

http://blog.hdrihaven.com/how-to-create-high-quality-hdri/

Shooting checklist

- Full coverage of the scene (fish-eye shots)

- Backplates for look-development (including ground or floor)

- Macbeth chart for white balance

- Grey ball for lighting calibration

- Chrome ball for lighting orientation

- Basic scene measurements

- Material samples

- Individual HDR artificial lighting sources if required

Methodology

(more…) -

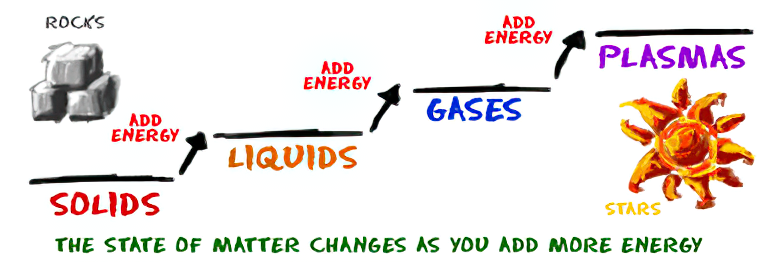

How are Energy and Matter the Same?

Read more: How are Energy and Matter the Same?www.turnerpublishing.com/blog/detail/everything-is-energy-everything-is-one-everything-is-possible/

www.universetoday.com/116615/how-are-energy-and-matter-the-same/

As Einstein showed us, light and matter and just aspects of the same thing. Matter is just frozen light. And light is matter on the move. Albert Einstein’s most famous equation says that energy and matter are two sides of the same coin. How does one become the other?

Relativity requires that the faster an object moves, the more mass it appears to have. This means that somehow part of the energy of the car’s motion appears to transform into mass. Hence the origin of Einstein’s equation. How does that happen? We don’t really know. We only know that it does.

Matter is 99.999999999999 percent empty space. Not only do the atom and solid matter consist mainly of empty space, it is the same in outer space

The quantum theory researchers discovered the answer: Not only do particles consist of energy, but so does the space between. This is the so-called zero-point energy. Therefore it is true: Everything consists of energy.

Energy is the basis of material reality. Every type of particle is conceived of as a quantum vibration in a field: Electrons are vibrations in electron fields, protons vibrate in a proton field, and so on. Everything is energy, and everything is connected to everything else through fields.

-

Cinematographers Blueprint 300dpi poster

Read more: Cinematographers Blueprint 300dpi posterThe 300dpi digital poster is now available to all PixelSham.com subscribers.

If you have already subscribed and wish a copy, please send me a note through the contact page.

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Blender VideoDepthAI – Turn any video into 3D Animated Scenes

-

Glossary of Lighting Terms – cheat sheet

-

Sensitivity of human eye

-

Photography basics: How Exposure Stops (Aperture, Shutter Speed, and ISO) Affect Your Photos – cheat sheet cards

-

The Public Domain Is Working Again — No Thanks To Disney

-

Methods for creating motion blur in Stop motion

-

Black Forest Labs released FLUX.1 Kontext

-

Ross Pettit on The Agile Manager – How tech firms went for prioritizing cash flow instead of talent (and artists)

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

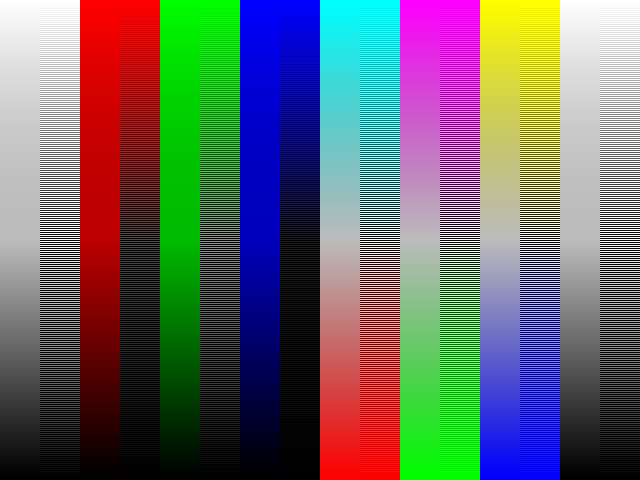

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)