COMPOSITION

-

Cinematographers Blueprint 300dpi poster

Read more: Cinematographers Blueprint 300dpi posterThe 300dpi digital poster is now available to all PixelSham.com subscribers.

If you have already subscribed and wish a copy, please send me a note through the contact page.

DESIGN

-

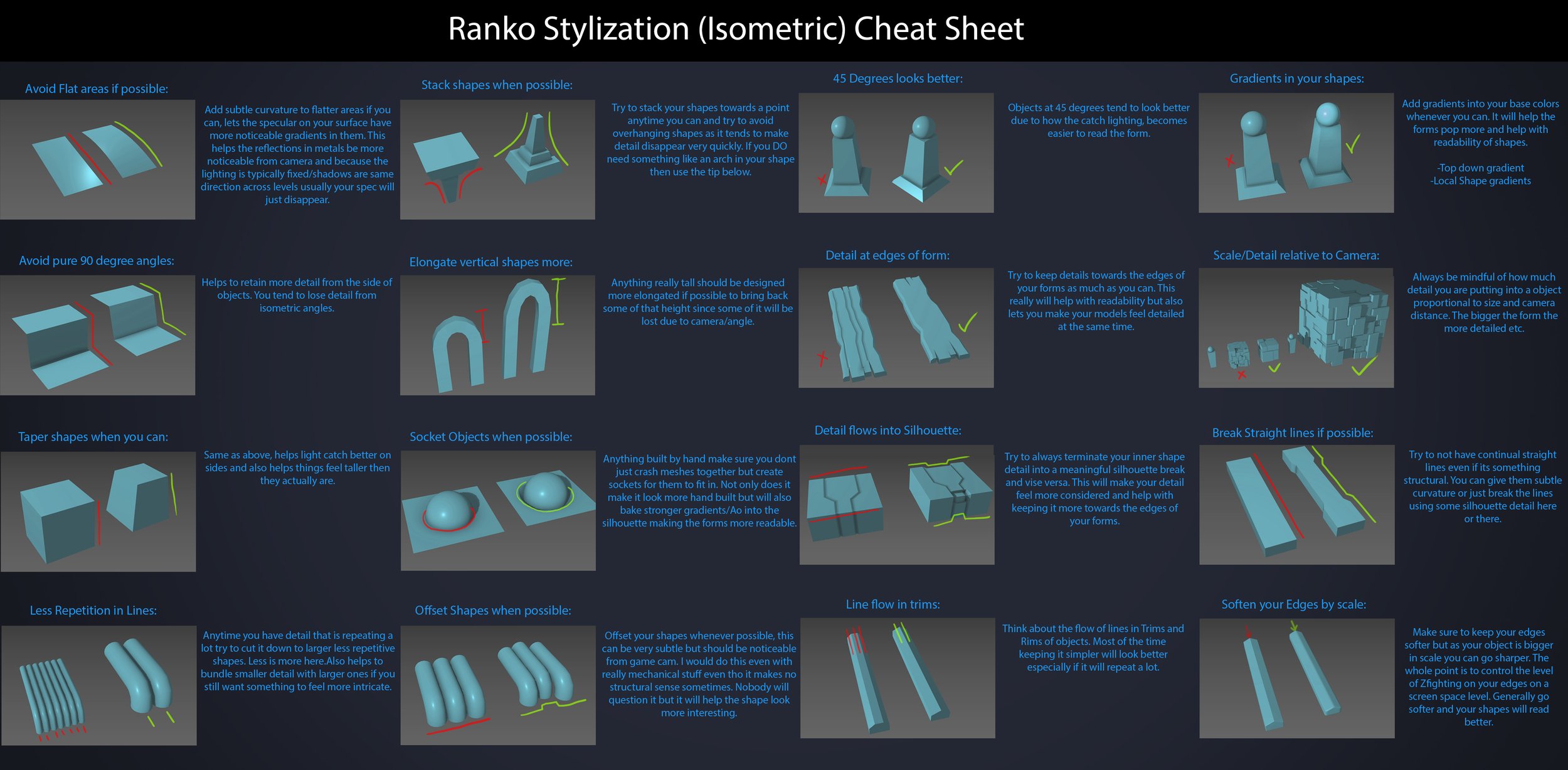

Ranko Prozo – Modelling design tips

Read more: Ranko Prozo – Modelling design tipsEvery Project I work on I always create a stylization Cheat sheet. Every project is unique but some principles carry over no matter what. This is a sheet I use a lot when I work on isometric stylized projects to help keep my assets consistent and interesting. None of these concepts are my own, just lots of tips I learned over the years. I have also added this to a page on my website, will continue to update with more tips and tricks, just need time to compile it all :)

COLOR

-

What Is The Resolution and view coverage Of The human Eye. And what distance is TV at best?

Read more: What Is The Resolution and view coverage Of The human Eye. And what distance is TV at best?https://www.discovery.com/science/mexapixels-in-human-eye

About 576 megapixels for the entire field of view.

Consider a view in front of you that is 90 degrees by 90 degrees, like looking through an open window at a scene. The number of pixels would be:

90 degrees * 60 arc-minutes/degree * 1/0.3 * 90 * 60 * 1/0.3 = 324,000,000 pixels (324 megapixels).At any one moment, you actually do not perceive that many pixels, but your eye moves around the scene to see all the detail you want. But the human eye really sees a larger field of view, close to 180 degrees. Let’s be conservative and use 120 degrees for the field of view. Then we would see:

120 * 120 * 60 * 60 / (0.3 * 0.3) = 576 megapixels.

Or.

7 megapixels for the 2 degree focus arc… + 1 megapixel for the rest.

https://clarkvision.com/articles/eye-resolution.html

Details in the post

-

Willem Zwarthoed – Aces gamut in VFX production pdf

Read more: Willem Zwarthoed – Aces gamut in VFX production pdfhttps://www.provideocoalition.com/color-management-part-12-introducing-aces/

Local copy:

https://www.slideshare.net/hpduiker/acescg-a-common-color-encoding-for-visual-effects-applications

-

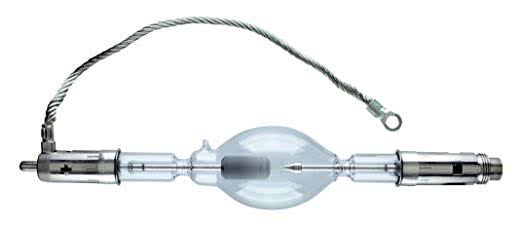

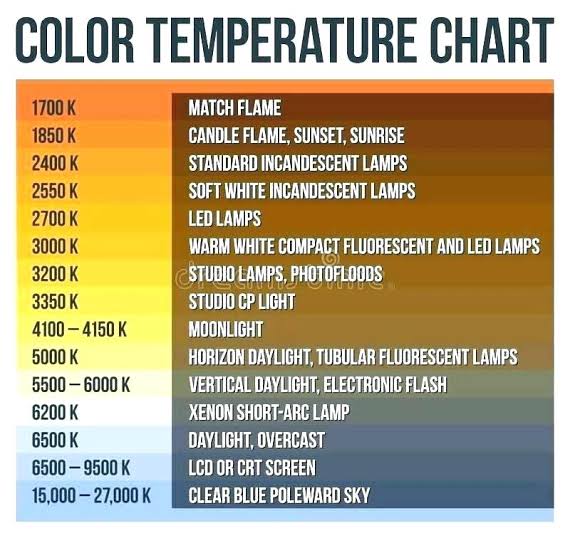

What light is best to illuminate gems for resale

Read more: What light is best to illuminate gems for resalewww.palagems.com/gem-lighting2

Artificial light sources, not unlike the diverse phases of natural light, vary considerably in their properties. As a result, some lamps render an object’s color better than others do.

The most important criterion for assessing the color-rendering ability of any lamp is its spectral power distribution curve.

Natural daylight varies too much in strength and spectral composition to be taken seriously as a lighting standard for grading and dealing colored stones. For anything to be a standard, it must be constant in its properties, which natural light is not.

For dealers in particular to make the transition from natural light to an artificial light source, that source must offer:

1- A degree of illuminance at least as strong as the common phases of natural daylight.

2- Spectral properties identical or comparable to a phase of natural daylight.A source combining these two things makes gems appear much the same as when viewed under a given phase of natural light. From the viewpoint of many dealers, this corresponds to a naturalappearance.

The 6000° Kelvin xenon short-arc lamp appears closest to meeting the criteria for a standard light source. Besides the strong illuminance this lamp affords, its spectrum is very similar to CIE standard illuminants of similar color temperature.

-

mmColorTarget – Nuke Gizmo for color matching a MacBeth chart

Read more: mmColorTarget – Nuke Gizmo for color matching a MacBeth charthttps://www.marcomeyer-vfx.de/posts/2014-04-11-mmcolortarget-nuke-gizmo/

https://www.marcomeyer-vfx.de/posts/mmcolortarget-nuke-gizmo/

https://vimeo.com/9.1652466e+07

https://www.nukepedia.com/gizmos/colour/mmcolortarget

-

Virtual Production volumes study

Read more: Virtual Production volumes studyColor Fidelity in LED Volumes

https://theasc.com/articles/color-fidelity-in-led-volumesVirtual Production Glossary

https://vpglossary.com/What is Virtual Production – In depth analysis

https://www.leadingledtech.com/what-is-a-led-virtual-production-studio-in-depth-technical-analysis/A comparison of LED panels for use in Virtual Production:

Findings and recommendations

https://eprints.bournemouth.ac.uk/36826/1/LED_Comparison_White_Paper%281%29.pdf -

FXGuide – ACES 2.0 with ILM’s Alex Fry

Read more: FXGuide – ACES 2.0 with ILM’s Alex Fryhttps://draftdocs.acescentral.com/background/whats-new/

ACES 2.0 is the second major release of the components that make up the ACES system. The most significant change is a new suite of rendering transforms whose design was informed by collected feedback and requests from users of ACES 1. The changes aim to improve the appearance of perceived artifacts and to complete previously unfinished components of the system, resulting in a more complete, robust, and consistent product.

Highlights of the key changes in ACES 2.0 are as follows:

- New output transforms, including:

- A less aggressive tone scale

- More intuitive controls to create custom outputs to non-standard displays

- Robust gamut mapping to improve perceptual uniformity

- Improved performance of the inverse transforms

- Enhanced AMF specification

- An updated specification for ACES Transform IDs

- OpenEXR compression recommendations

- Enhanced tools for generating Input Transforms and recommended procedures for characterizing prosumer cameras

- Look Transform Library

- Expanded documentation

Rendering Transform

The most substantial change in ACES 2.0 is a complete redesign of the rendering transform.

ACES 2.0 was built as a unified system, rather than through piecemeal additions. Different deliverable outputs “match” better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts “out of the box”, which means less time can be spent fixing problematic images and more time making pictures look the way you want.

Key design goals

- Improve consistency of tone scale and provide an easy to use parameter to allow for outputs between preset dynamic ranges

- Minimize hue skews across exposure range in a region of same hue

- Unify for structural consistency across transform type

- Easy to use parameters to create outputs other than the presets

- Robust gamut mapping to improve harsh clipping artifacts

- Fill extents of output code value cube (where appropriate and expected)

- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible

- Accomplish all of the above while maintaining an acceptable “out-of-the box” rendering

- New output transforms, including:

-

Light and Matter : The 2018 theory of Physically-Based Rendering and Shading by Allegorithmic

Read more: Light and Matter : The 2018 theory of Physically-Based Rendering and Shading by Allegorithmicacademy.substance3d.com/courses/the-pbr-guide-part-1

academy.substance3d.com/courses/the-pbr-guide-part-2

Local copy:

LIGHTING

-

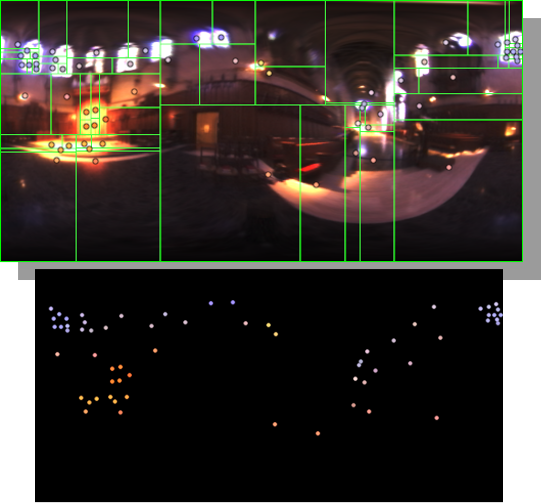

HDRI Median Cut plugin

Read more: HDRI Median Cut pluginwww.hdrlabs.com/picturenaut/plugins.html

Note. The Median Cut algorithm is typically used for color quantization, which involves reducing the number of colors in an image while preserving its visual quality. It doesn’t directly provide a way to identify the brightest areas in an image. However, if you’re interested in identifying the brightest areas, you might want to look into other methods like thresholding, histogram analysis, or edge detection, through openCV for example.

Here is an openCV example:

(more…) -

Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010

Read more: Terminators and Iron Men: HDRI, Image-based lighting and physical shading at ILM – Siggraph 2010 -

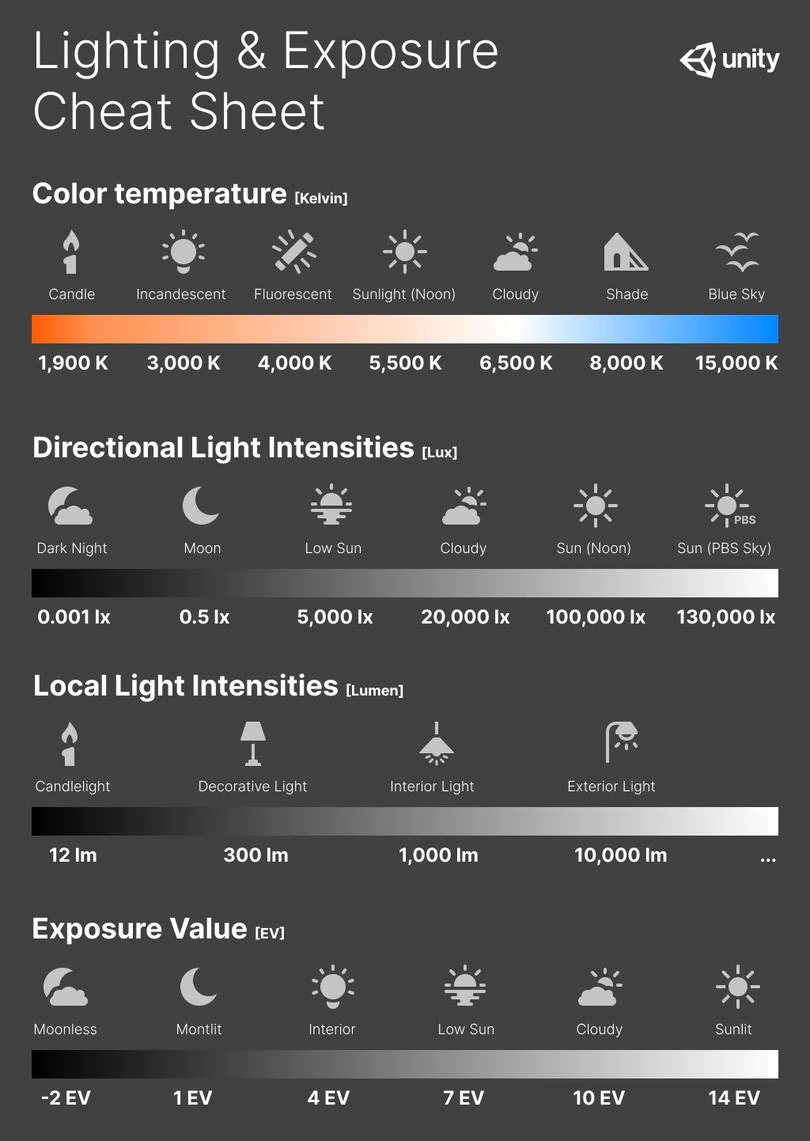

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Read more: Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurementsAlso see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale. 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.Note EV does not equal to photographic exposure. Photographic Exposure is defined as how much light hits the camera’s sensor. It depends on the camera settings mainly aperture and shutter speed. Exposure value (known as EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incidentl exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.

The exposure depends on two things: how much light gets through the lenses to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example a pair of aperture value and shutter speed represents the same exposure value both if the camera is used during a very bright day or during a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds the resulting photo can be very different (the aperture controls the depth of field while shutter speed controls how much motion is captured).

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that every single step of EV – plus or minus 1 – represents the exposure (actual light that hits the sensor) being halved or doubled.Formulas

(more…)

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Methods for creating motion blur in Stop motion

-

Photography basics: Lumens vs Candelas (candle) vs Lux vs FootCandle vs Watts vs Irradiance vs Illuminance

-

Eyeline Labs VChain – Chain-of-Visual-Thought for Reasoning in Video Generation for better AI physics

-

Most common ways to smooth 3D prints

-

SourceTree vs Github Desktop – Which one to use

-

Generative AI Glossary / AI Dictionary / AI Terminology

-

Ethan Roffler interviews CG Supervisor Daniele Tosti

-

AI Search – Find The Best AI Tools & Apps

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.