COMPOSITION

-

Composition – These are the basic lighting techniques you need to know for photography and film

Read more: http://www.diyphotography.net/basic-lighting-techniques-need-know-photography-film/

Amongst the basic techniques, there’s…

1- Side lighting – Exactly what it sounds like: lighting a subject from the side while they face you

2- Rembrandt lighting – Here the light is at around 45 degrees over from the front of the subject, raised and pointing down at 45 degrees

3- Back lighting – Again, what it sounds like: lighting a subject from behind. This can add drama with silhouettes

4- Rim lighting – This produces a light glowing outline around your subject

5- Key light – The main light source, and it’s not necessarily always the brightest light source

6- Fill light – This is used to fill in the shadows and provide detail that would otherwise be blackness

7- Cross lighting – Using two lights placed opposite from each other to light two subjects
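The angles quoted above for side, Rembrandt and back lighting can be turned into rough light positions. This is a minimal sketch under an assumed coordinate convention that is not from the article: the subject sits at the origin facing the camera along +z, +x is camera-right, +y is up, azimuth is measured from the camera axis and elevation from the horizontal. The function name `light_position` is hypothetical.

```python
import math

def light_position(azimuth_deg, elevation_deg, distance):
    """Return (x, y, z) of a light placed around a subject at the origin.

    Assumed convention (illustrative only): +z points from the subject
    toward the camera, +x is camera-right, +y is up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance * math.cos(el)  # distance projected onto the floor plane
    return (
        horizontal * math.sin(az),  # sideways offset
        distance * math.sin(el),    # height above the subject
        horizontal * math.cos(az),  # offset toward (+) or away from (-) the camera
    )

# Rembrandt: about 45 degrees around from the front, raised 45 degrees.
rembrandt = light_position(45, 45, 2.0)
# Side lighting: level with the subject, 90 degrees from the camera axis.
side = light_position(90, 0, 2.0)
# Back lighting: directly behind the subject.
back = light_position(180, 0, 2.0)
```

With a 2 m light distance, the Rembrandt position lands about 1 m to the side, 1 m toward the camera and roughly 1.4 m up.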

-

Composition – 5 tips for creating perfect cinematic lighting and making your work look stunning

Read more: http://www.diyphotography.net/5-tips-creating-perfect-cinematic-lighting-making-work-look-stunning/

1. Learn the rules of lighting

2. Learn when to break the rules

3. Make your key light larger

4. Reverse keying

5. Always be backlighting

DESIGN

COLOR

-

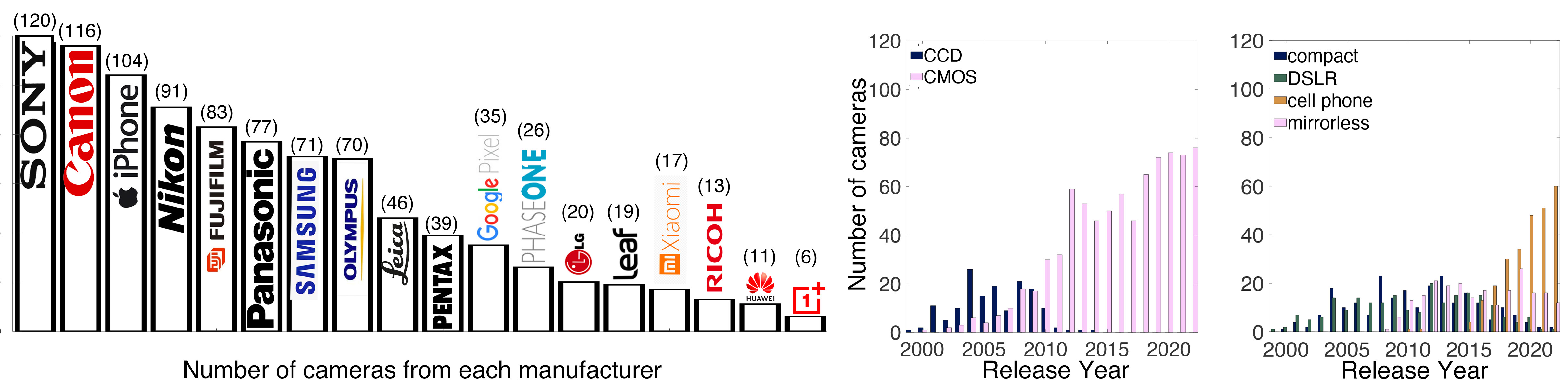

Photography Basics : Spectral Sensitivity Estimation Without a Camera

Read more: https://color-lab-eilat.github.io/Spectral-sensitivity-estimation-web/

A number of problems in computer vision and related fields would be mitigated if camera spectral sensitivities were known. As consumer cameras are not designed for high-precision visual tasks, manufacturers do not disclose spectral sensitivities. Their estimation requires a costly optical setup, which triggered researchers to come up with numerous indirect methods that aim to lower cost and complexity by using color targets. However, the use of color targets gives rise to new complications that make the estimation more difficult, and consequently, there currently exists no simple, low-cost, robust go-to method for spectral sensitivity estimation that non-specialized research labs can adopt. Furthermore, even if not limited by hardware or cost, researchers frequently work with imagery from multiple cameras that they do not have in their possession.

To provide a practical solution to this problem, we propose a framework for spectral sensitivity estimation that not only does not require any hardware (including a color target), but also does not require physical access to the camera itself. Similar to other work, we formulate an optimization problem that minimizes a two-term objective function: a camera-specific term from a system of equations, and a universal term that bounds the solution space.

Different than other work, we utilize publicly available high-quality calibration data to construct both terms. We use the colorimetric mapping matrices provided by the Adobe DNG Converter to formulate the camera-specific system of equations, and constrain the solutions using an autoencoder trained on a database of ground-truth curves. On average, we achieve reconstruction errors as low as those that can arise due to manufacturing imperfections between two copies of the same camera. We provide predicted sensitivities for more than 1,000 cameras that the Adobe DNG Converter currently supports, and discuss which tasks can become trivial when camera responses are available.
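One way to read the two-term objective described above is sketched below. The symbols are assumptions for illustration and are not taken from the paper: S is the matrix of unknown sensitivity curves, A and B stand for the linear system built from the Adobe DNG colorimetric mapping matrices, g is the trained autoencoder, and λ weights the two terms. The exact form of each term in the paper may differ.

```latex
\hat{S} \;=\; \arg\min_{S}\;
\underbrace{\lVert A S - B \rVert_2^2}_{\text{camera-specific term}}
\;+\;
\lambda\,\underbrace{\lVert S - g(S) \rVert_2^2}_{\text{universal term}}
```

Under this reading, the first term ties the solution to one specific camera, while the second keeps it close to curves the autoencoder can reproduce, which is what bounds the solution space.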

-

Yasuharu YOSHIZAWA – Comparison of sRGB vs ACEScg in Nuke

Answering the question that is often asked, “Do I need to use ACEScg to display on an sRGB monitor in the end?” (Demonstration shown at an in-house seminar)

Comparison of ScanlineRender output with extreme color lights on color charts, with sRGB and ACEScg as the OCIO working space in Nuke.

Download the Nuke script:

-

Thomas Mansencal – Colour Science for Python

Read more: https://thomasmansencal.substack.com/p/colour-science-for-python

https://www.colour-science.org/

Colour is an open-source Python package providing a comprehensive number of algorithms and datasets for colour science. It is freely available under the BSD-3-Clause terms.

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

Tim Kang – calibrated white light values in sRGB color space

8bit sRGB encoded

2000K 255 139 22

2700K 255 172 89

3000K 255 184 109

3200K 255 190 122

4000K 255 211 165

4300K 255 219 178

D50 255 235 205

D55 255 243 224

D5600 255 244 227

D6000 255 249 240

D65 255 255 255

D10000 202 221 255

D20000 166 196 255

8bit Rec709 Gamma 2.4

2000K 255 145 34

2700K 255 177 97

3000K 255 187 117

3200K 255 193 129

4000K 255 214 170

4300K 255 221 182

D50 255 236 208

D55 255 243 226

D5600 255 245 229

D6000 255 250 241

D65 255 255 255

D10000 204 222 255

D20000 170 199 255

8bit Display P3 encoded

2000K 255 154 63

2700K 255 185 109

3000K 255 195 127

3200K 255 201 138

4000K 255 219 176

4300K 255 225 187

D50 255 239 212

D55 255 245 228

D5600 255 246 231

D6000 255 251 242

D65 255 255 255

D10000 208 223 255

D20000 175 199 255

10bit Rec2020 PQ (100 nits)

2000K 520 435 273

2700K 520 466 358

3000K 520 475 384

3200K 520 480 399

4000K 520 495 446

4300K 520 500 458

D50 520 510 482

D55 520 514 497

D5600 520 514 500

D6000 520 517 509

D65 520 520 520

D10000 479 489 520

D20000 448 464 520

-
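As a cross-check on the 10bit Rec2020 PQ (100 nits) values in the Tim Kang list, the sketch below encodes a 100 cd/m² white with the SMPTE ST 2084 (PQ) inverse EOTF and quantizes it to 10 bits. It assumes full-range code values (0–1023), which is an inference from the D65 row reading 520 rather than something the post states. The helper names are hypothetical.

```python
def pq_encode(nits):
    """SMPTE ST 2084 (PQ) inverse EOTF: luminance in cd/m^2 -> signal in [0, 1]."""
    m1 = 2610 / 16384
    m2 = 2523 / 4096 * 128
    c1 = 3424 / 4096
    c2 = 2413 / 4096 * 32
    c3 = 2392 / 4096 * 32
    y = max(nits, 0.0) / 10000.0  # PQ is defined relative to a 10,000 nit peak
    y_m1 = y ** m1
    return ((c1 + c2 * y_m1) / (1 + c3 * y_m1)) ** m2

def to_10bit_full_range(signal):
    """Quantize a [0, 1] signal to a full-range 10-bit code (assumed range)."""
    return round(signal * 1023)

# A neutral white at 100 nits has equal R, G and B, so one channel is enough.
white_code = to_10bit_full_range(pq_encode(100.0))
print(white_code)  # 520, matching the D65 row (520 520 520)
```

The other rows would additionally need the chromaticity of each white point converted to Rec2020 primaries, which this sketch does not attempt.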

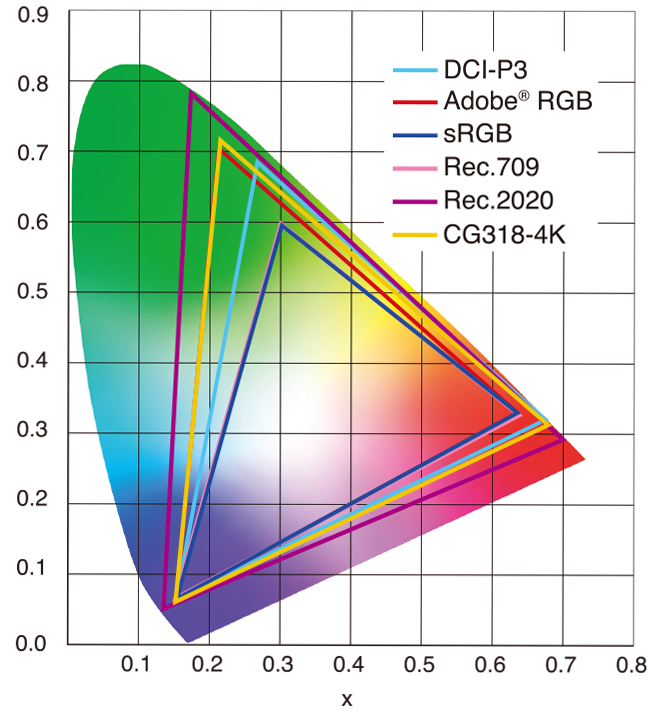

sRGB vs REC709 – An introduction and FFmpeg implementations


1. Basic Comparison

- What they are
  - sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.
  - Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist
  - sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).
  - Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see
  - On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.
  - On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K), same for both | D65 (6504 K) |
| Primaries (x,y) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) |
| Gamut size | Identical triangle on CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: approximate 2.2 with linear toe | Pure power-law γ≈2.4 (often approximated as 2.2 in practice) |
| Matrix coefficients | N/A (pure RGB usage) | Y = 0.2126 R + 0.7152 G + 0.0722 B (Rec. 709 matrix) |
| Typical bit-depth | 8-bit/channel (with 16-bit variants) | 8-bit/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ [16–235], Cb/Cr [16–240]) |
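The transfer, matrix and range rows of the comparison can be made concrete with a few lines of Python. This is a sketch, not the post's FFmpeg implementation: it follows the comparison's description of Rec. 709 as a pure 2.4 power law, uses the standard piecewise sRGB constants, and the function names are hypothetical.

```python
def srgb_encode(linear):
    """Piecewise sRGB encoding: linear toe near black, power segment above it."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055

def gamma24_encode(linear):
    """Pure power-law encoding, the inverse of a gamma 2.4 display response."""
    return linear ** (1 / 2.4)

def luma_709(r, g, b):
    """Rec. 709 luma from R, G, B using the matrix coefficients quoted above."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def full_to_studio_luma(code):
    """Map an 8-bit full-range value (0-255) onto studio-range luma (16-235)."""
    return round(16 + code * 219 / 255)

# The same 18% linear gray gets a different 8-bit code under each curve.
mid_gray = 0.18
srgb_code = round(srgb_encode(mid_gray) * 255)      # 118
gamma_code = round(gamma24_encode(mid_gray) * 255)  # 125

print(srgb_code, gamma_code)
print(full_to_studio_luma(0), full_to_studio_luma(255))  # 16 235
```

The gap of several code values at mid-gray is why an image tagged with the wrong transfer function looks slightly too dark or too light, even though the primaries and white point are identical.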

Why the Small Differences Matter


LIGHTING

-

About green screens

Read more: hackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens, is also known as Chroma Green and corresponds to approximately 354C in the Pantone color matching system (PMS).

Chroma Green can be broken down in many different ways. Here is green screen green as other values useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933

Chroma Key Green is reasonably close to an 18% gray reflectance.
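The hex and websafe values listed above follow directly from the RGB triplet. A small sketch, assuming the usual definition of the websafe palette (each channel restricted to multiples of 51, i.e. 00, 33, 66, 99, cc, ff); the function names are hypothetical:

```python
def rgb_to_hex(r, g, b):
    """Format an 8-bit RGB triplet as a lowercase hex color string."""
    return "#{:02x}{:02x}{:02x}".format(r, g, b)

def nearest_websafe(r, g, b):
    """Snap each channel to the nearest multiple of 51 (the websafe steps)."""
    return tuple(51 * round(c / 51) for c in (r, g, b))

chroma_green = (0, 177, 64)
hex_value = rgb_to_hex(*chroma_green)
websafe_value = rgb_to_hex(*nearest_websafe(*chroma_green))

print(hex_value)      # #00b140
print(websafe_value)  # #009933
```

The CMYK figure is not reproduced here because it depends on a print profile rather than a simple formula.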

Illuminate your green screen with a uniform source, keeping variation across the screen below 2/3 EV.
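Since one EV is a doubling of light, the 2/3 EV limit can be checked from a handful of spot-meter readings taken across the screen. A minimal sketch with made-up readings; the function names and sample values are hypothetical:

```python
import math

def ev_spread(readings):
    """Exposure spread in stops between the brightest and darkest reading."""
    return math.log2(max(readings) / min(readings))

def is_evenly_lit(readings, max_spread_ev=2 / 3):
    """True when the screen stays within the allowed EV variation."""
    return ev_spread(readings) <= max_spread_ev

# Hypothetical luminance readings (any linear unit) from corners and center.
even_screen = [400.0, 450.0, 520.0, 480.0]
uneven_screen = [400.0, 700.0]

print(round(ev_spread(even_screen), 2), is_evenly_lit(even_screen))
print(round(ev_spread(uneven_screen), 2), is_evenly_lit(uneven_screen))
```

A 2/3 EV spread corresponds to the brightest area being about 1.59 times the darkest.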

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting.

-

Neural Microfacet Fields for Inverse Rendering

Read more: https://half-potato.gitlab.io/posts/nmf/

-

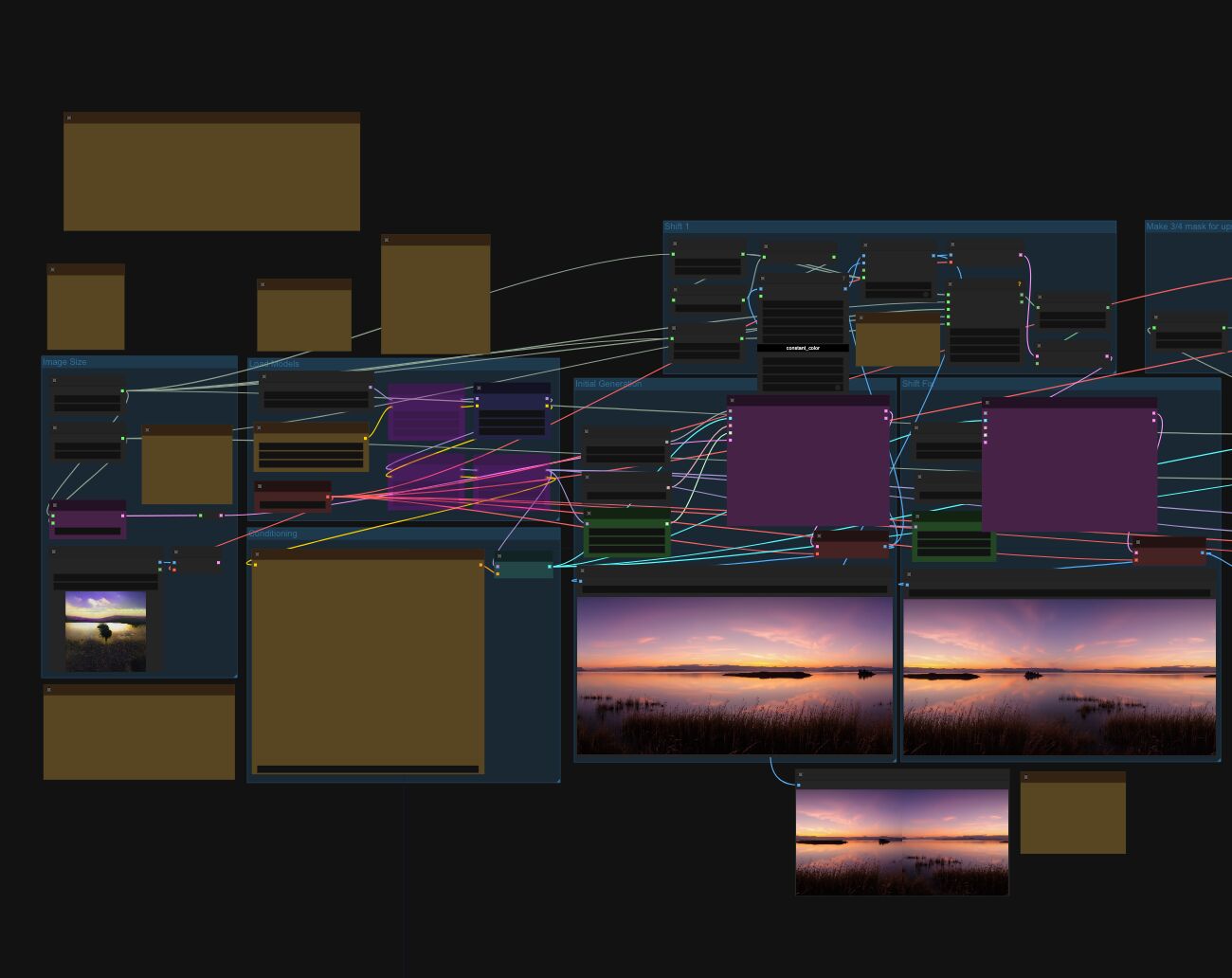

Arto T. – A workflow for creating photorealistic, equirectangular 360° panoramas in ComfyUI using Flux

Read more: https://civitai.com/models/735980/flux-equirectangular-360-panorama

https://civitai.com/models/745010?modelVersionId=833115

The trigger phrase is “equirectangular 360 degree panorama”. I would avoid saying “spherical projection” since that tends to result in non-equirectangular spherical images.

Image resolution should always be a 2:1 aspect ratio. 1024 x 512 or 1408 x 704 work quite well and were used in the training data. 2048 x 1024 also works.

I suggest using a weight of 0.5 – 1.5. If you are having issues with the image generating too flat instead of having the necessary spherical distortion, try increasing the weight above 1, though this could negatively impact small details of the image. For Flux guidance, I recommend a value of about 2.5 for realistic scenes.
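The resolution and weight recommendations above are easy to check before queueing a render. This sketch is not part of the workflow and does not call any ComfyUI API; both helper names are hypothetical and simply encode the 2:1 rule and the suggested 0.5 to 1.5 weight range:

```python
def is_equirectangular_size(width, height):
    """True when the image has the 2:1 aspect ratio of an equirectangular map."""
    return width > 0 and height > 0 and width == 2 * height

def clamp_weight(weight, low=0.5, high=1.5):
    """Keep the model weight inside the suggested 0.5 to 1.5 range."""
    return max(low, min(high, weight))

# Sizes mentioned in the post, plus one square size that should be rejected.
for size in [(1024, 512), (1408, 704), (2048, 1024), (1024, 1024)]:
    print(size, is_equirectangular_size(*size))

print(clamp_weight(2.0))  # 1.5
```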

8-bit output at the moment

