COMPOSITION

-

Photography basics: Depth of Field and composition

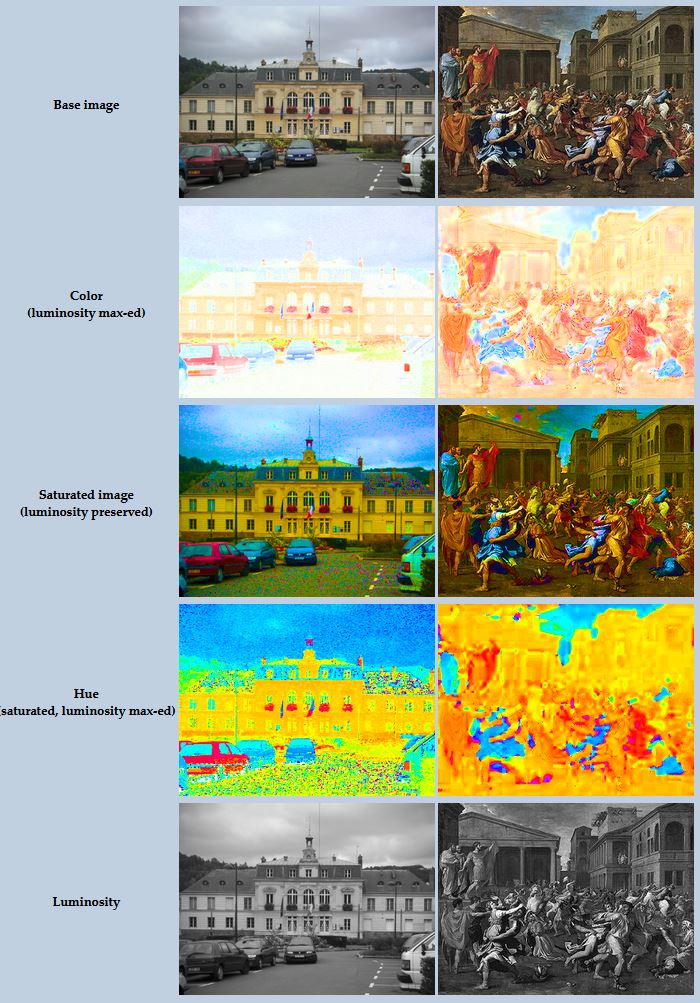

Read more: Photography basics: Depth of Field and compositionDepth of field is the range within which focusing is resolved in a photo.

Aperture has a huge affect on to the depth of field.Changing the f-stops (f/#) of a lens will change aperture and as such the DOF.

f-stops are a just certain number which is telling you the size of the aperture. That’s how f-stop is related to aperture (and DOF).

If you increase f-stops, it will increase DOF, the area in focus (and decrease the aperture). On the other hand, decreasing the f-stop it will decrease DOF (and increase the aperture).

The red cone in the figure is an angular representation of the resolution of the system. Versus the dotted lines, which indicate the aperture coverage. Where the lines of the two cones intersect defines the total range of the depth of field.

This image explains why the longer the depth of field, the greater the range of clarity.

DESIGN

-

James Gerde – The way the leaves dance in the rain

Read more: James Gerde – The way the leaves dance in the rainhttps://www.instagram.com/gerdegotit/reel/C6s-2r2RgSu/

Since spending a lot of time recently with SDXL I’ve since made my way back to SD 1.5

While the models overall have less fidelity. There is just no comparing to the current motion models we have available for animatediff with 1.5 models.

To date this is one of my favorite pieces. Not because I think it’s even the best it can be. But because the workflow adjustments unlocked some very important ideas I can’t wait to try out.

Performance by @silkenkelly and @itxtheballerina on IG

COLOR

-

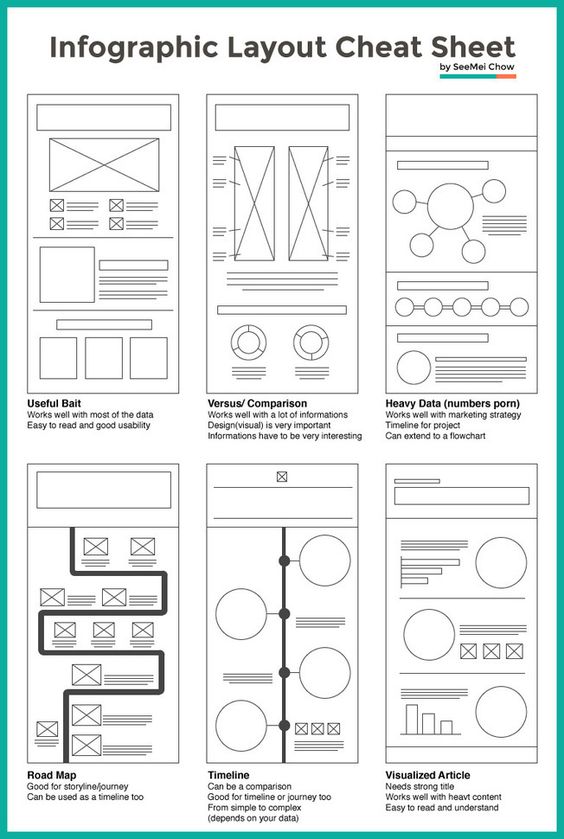

GretagMacbeth Color Checker Numeric Values and Middle Gray

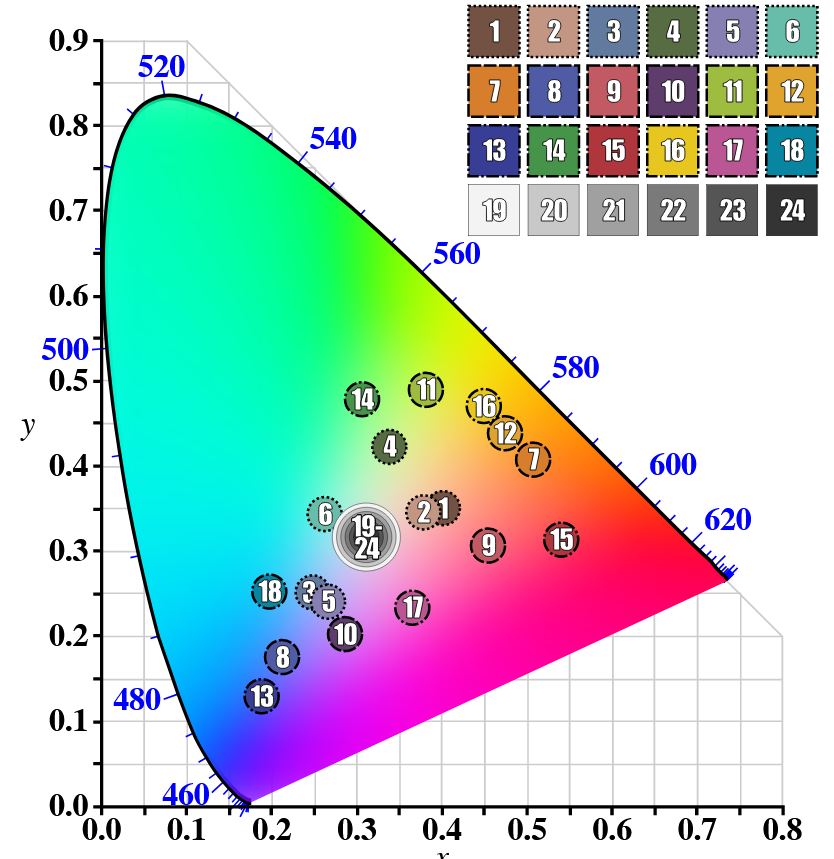

Read more: GretagMacbeth Color Checker Numeric Values and Middle GrayThe human eye perceives half scene brightness not as the linear 50% of the present energy (linear nature values) but as 18% of the overall brightness. We are biased to perceive more information in the dark and contrast areas. A Macbeth chart helps with calibrating back into a photographic capture into this “human perspective” of the world.

https://en.wikipedia.org/wiki/Middle_gray

In photography, painting, and other visual arts, middle gray or middle grey is a tone that is perceptually about halfway between black and white on a lightness scale in photography and printing, it is typically defined as 18% reflectance in visible light

Light meters, cameras, and pictures are often calibrated using an 18% gray card[4][5][6] or a color reference card such as a ColorChecker. On the assumption that 18% is similar to the average reflectance of a scene, a grey card can be used to estimate the required exposure of the film.

https://en.wikipedia.org/wiki/ColorChecker

(more…) -

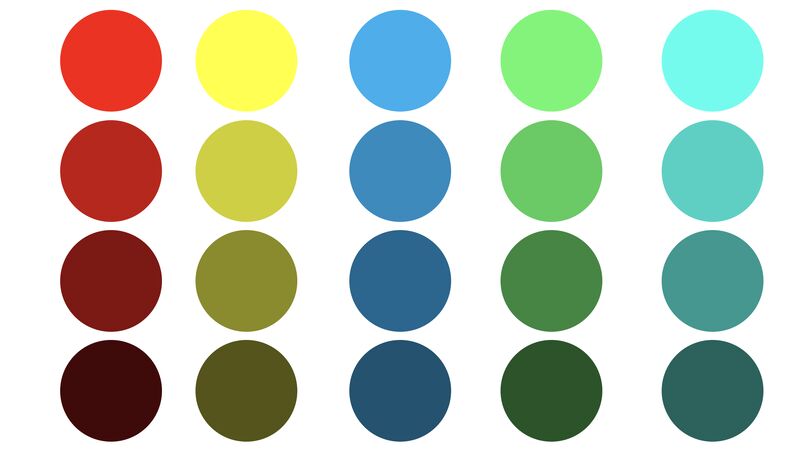

Is it possible to get a dark yellow

Read more: Is it possible to get a dark yellowhttps://www.patreon.com/posts/102660674

https://www.linkedin.com/posts/stephenwestland_here-is-a-post-about-the-dark-yellow-problem-activity-7187131643764092929-7uCL

-

About green screens

Read more: About green screenshackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens is also known as Chroma Green and is valued at approximately 354C in the Pantone color matching system (PMS).

Chroma Green can be broken down in many different ways. Here is green screen green as other values useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933Chroma Key Green is reasonably close to an 18% gray reflectance.

Illuminate your green screen with an uniform source with less than 2/3 EV variation.

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting. -

“Reality” is constructed by your brain. Here’s what that means, and why it matters.

Read more: “Reality” is constructed by your brain. Here’s what that means, and why it matters.“Fix your gaze on the black dot on the left side of this image. But wait! Finish reading this paragraph first. As you gaze at the left dot, try to answer this question: In what direction is the object on the right moving? Is it drifting diagonally, or is it moving up and down?”

What color are these strawberries?

Are A and B the same gray?

-

StudioBinder.com – CRI color rendering index

Read more: StudioBinder.com – CRI color rendering indexwww.studiobinder.com/blog/what-is-color-rendering-index

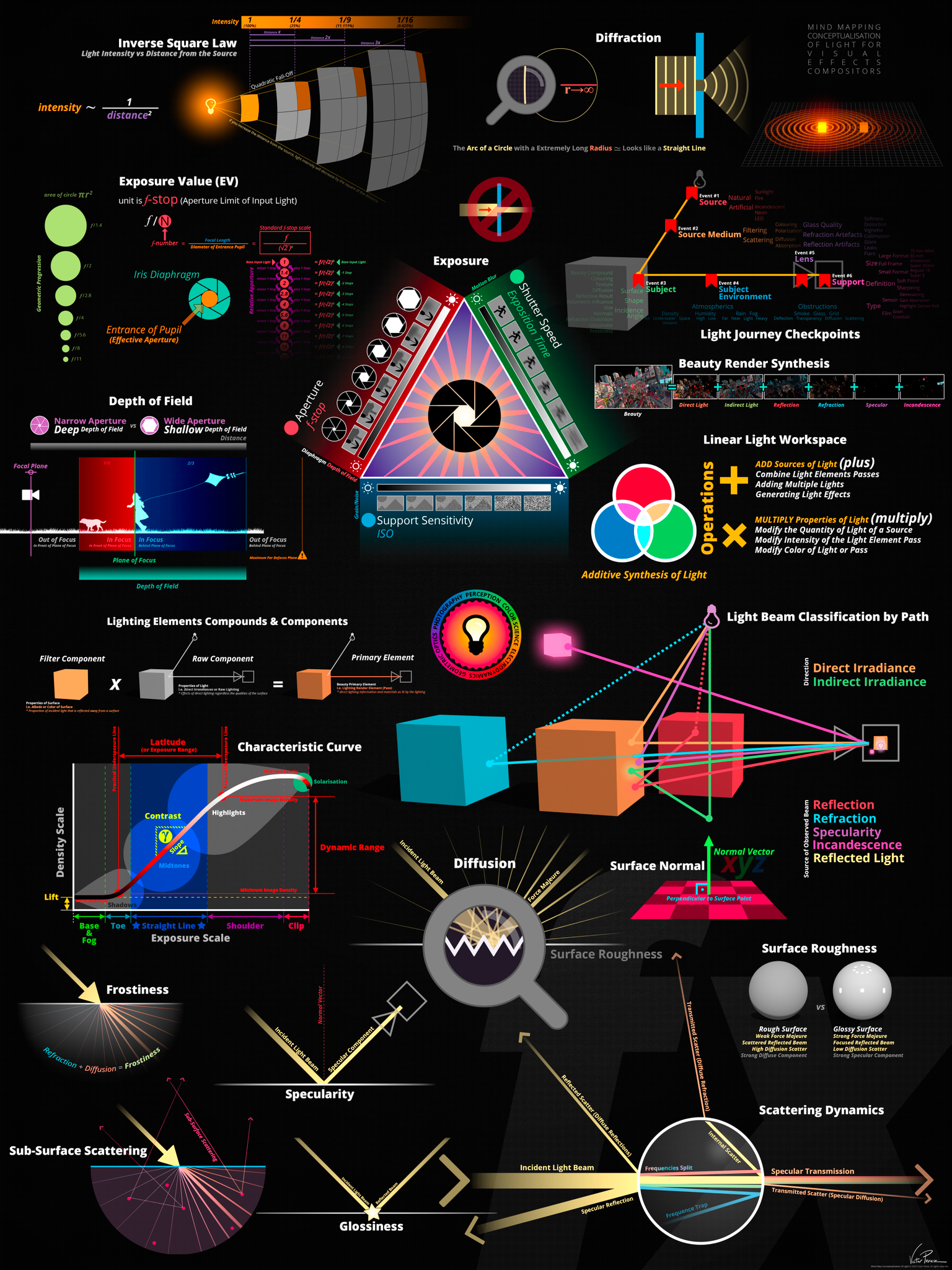

“The Color Rendering Index is a measurement of how faithfully a light source reveals the colors of whatever it illuminates, it describes the ability of a light source to reveal the color of an object, as compared to the color a natural light source would provide. The highest possible CRI is 100. A CRI of 100 generally refers to a perfect black body, like a tungsten light source or the sun. ”

www.pixelsham.com/2021/04/28/types-of-film-lights-and-their-efficiency

-

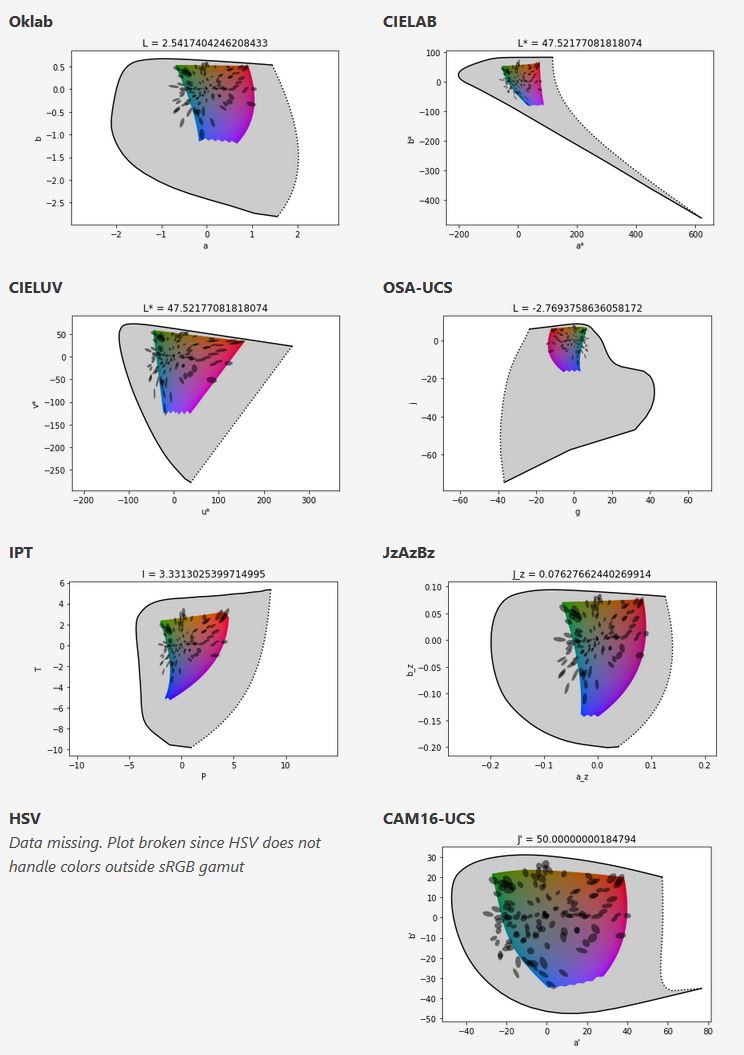

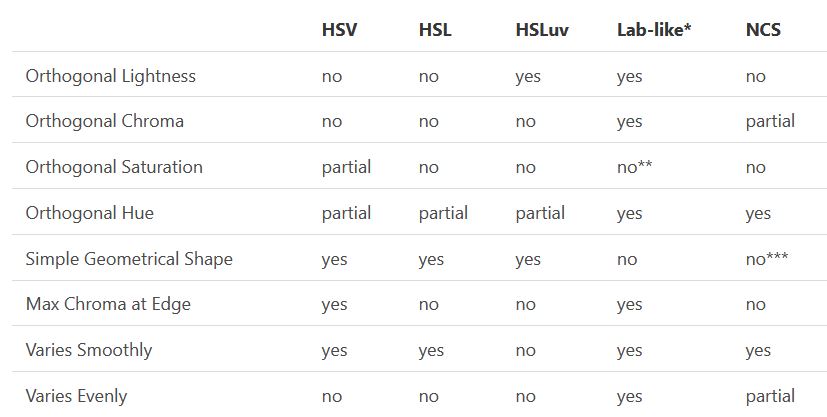

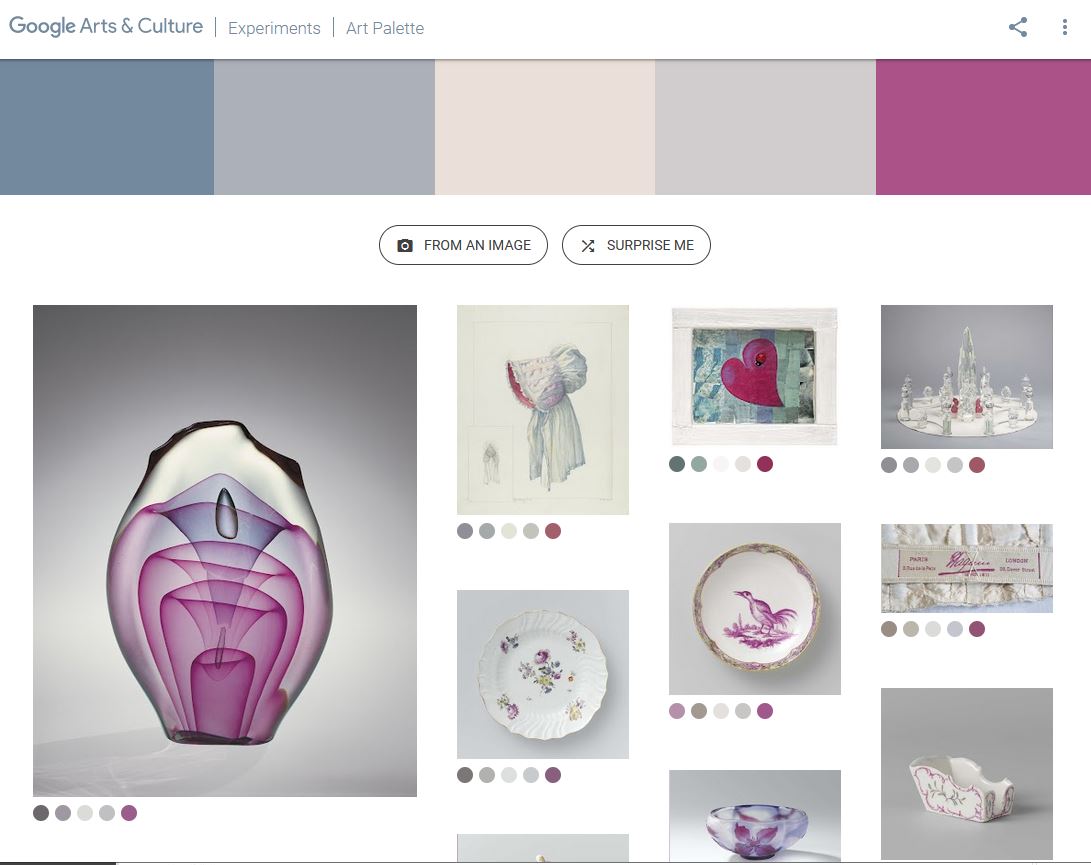

Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color picking

Read more: Björn Ottosson – OKHSV and OKHSL – Two new color spaces for color pickinghttps://bottosson.github.io/misc/colorpicker

https://bottosson.github.io/posts/colorpicker/

https://www.smashingmagazine.com/2024/10/interview-bjorn-ottosson-creator-oklab-color-space/

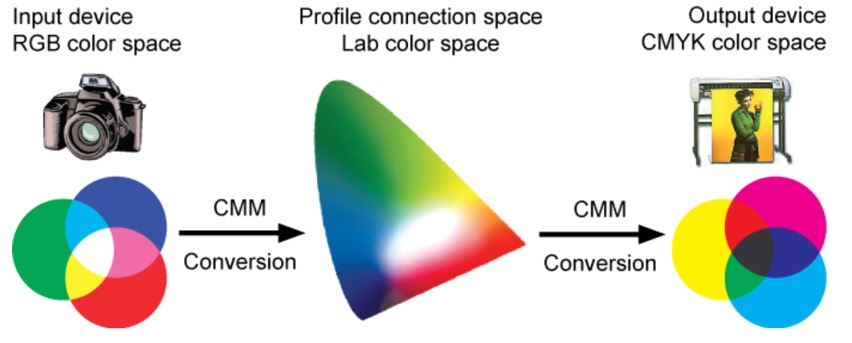

One problem with sRGB is that in a gradient between blue and white, it becomes a bit purple in the middle of the transition. That’s because sRGB really isn’t created to mimic how the eye sees colors; rather, it is based on how CRT monitors work. That means it works with certain frequencies of red, green, and blue, and also the non-linear coding called gamma. It’s a miracle it works as well as it does, but it’s not connected to color perception. When using those tools, you sometimes get surprising results, like purple in the gradient.

There were also attempts to create simple models matching human perception based on XYZ, but as it turned out, it’s not possible to model all color vision that way. Perception of color is incredibly complex and depends, among other things, on whether it is dark or light in the room and the background color it is against. When you look at a photograph, it also depends on what you think the color of the light source is. The dress is a typical example of color vision being very context-dependent. It is almost impossible to model this perfectly.

I based Oklab on two other color spaces, CIECAM16 and IPT. I used the lightness and saturation prediction from CIECAM16, which is a color appearance model, as a target. I actually wanted to use the datasets used to create CIECAM16, but I couldn’t find them.

IPT was designed to have better hue uniformity. In experiments, they asked people to match light and dark colors, saturated and unsaturated colors, which resulted in a dataset for which colors, subjectively, have the same hue. IPT has a few other issues but is the basis for hue in Oklab.

In the Munsell color system, colors are described with three parameters, designed to match the perceived appearance of colors: Hue, Chroma and Value. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. The parameters are designed to be independent and each have a uniform scale. This results in a color solid with an irregular shape. Modern color spaces and models, such as CIELAB, Cam16 and Björn Ottosson own Oklab, are very similar in their construction.

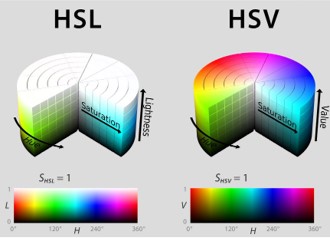

By far the most used color spaces today for color picking are HSL and HSV, two representations introduced in the classic 1978 paper “Color Spaces for Computer Graphics”. HSL and HSV designed to roughly correlate with perceptual color properties while being very simple and cheap to compute.

Today HSL and HSV are most commonly used together with the sRGB color space.

One of the main advantages of HSL and HSV over the different Lab color spaces is that they map the sRGB gamut to a cylinder. This makes them easy to use since all parameters can be changed independently, without the risk of creating colors outside of the target gamut.

The main drawback on the other hand is that their properties don’t match human perception particularly well.

Reconciling these conflicting goals perfectly isn’t possible, but given that HSV and HSL don’t use anything derived from experiments relating to human perception, creating something that makes a better tradeoff does not seem unreasonable.

With this new lightness estimate, we are ready to look into the construction of Okhsv and Okhsl.

LIGHTING

-

Photography basics: Solid Angle measures

Read more: Photography basics: Solid Angle measureshttp://www.calculator.org/property.aspx?name=solid+angle

A measure of how large the object appears to an observer looking from that point. Thus. A measure for objects in the sky. Useful to retuen the size of the sun and moon… and in perspective, how much of their contribution to lighting. Solid angle can be represented in ‘angular diameter’ as well.

http://en.wikipedia.org/wiki/Solid_angle

http://www.mathsisfun.com/geometry/steradian.html

A solid angle is expressed in a dimensionless unit called a steradian (symbol: sr). By default in terms of the total celestial sphere and before atmospheric’s scattering, the Sun and the Moon subtend fractional areas of 0.000546% (Sun) and 0.000531% (Moon).

http://en.wikipedia.org/wiki/Solid_angle#Sun_and_Moon

On earth the sun is likely closer to 0.00011 solid angle after athmospheric scattering. The sun as perceived from earth has a diameter of 0.53 degrees. This is about 0.000064 solid angle.

http://www.numericana.com/answer/angles.htm

The mean angular diameter of the full moon is 2q = 0.52° (it varies with time around that average, by about 0.009°). This translates into a solid angle of 0.0000647 sr, which means that the whole night sky covers a solid angle roughly one hundred thousand times greater than the full moon.

More info

http://lcogt.net/spacebook/using-angles-describe-positions-and-apparent-sizes-objects

http://amazing-space.stsci.edu/glossary/def.php.s=topic_astronomy

Angular Size

The apparent size of an object as seen by an observer; expressed in units of degrees (of arc), arc minutes, or arc seconds. The moon, as viewed from the Earth, has an angular diameter of one-half a degree.

The angle covered by the diameter of the full moon is about 31 arcmin or 1/2°, so astronomers would say the Moon’s angular diameter is 31 arcmin, or the Moon subtends an angle of 31 arcmin.

-

Photography basics: Why Use a (MacBeth) Color Chart?

Read more: Photography basics: Why Use a (MacBeth) Color Chart?Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down will give you the respective next value on the aperture scale (f8, f11, f16 etc.).One stop doubles or halves the amount or light that hits the filmback/ccd, so everything works in powers of 2.

So starting with 0.18 in your constant, you will see that raising it by a stop will give you .36 as a floating point number (in linear space), while your f/stop will be f/11 and so on.If you set your center stop to 0 (see below) you will get a relative readout in EVs, where EV 0 again equals 18% constant gray.

In other words. Setting the center f-stop to 0 means that in a neutral plate, the middle gray in the macbeth chart will equal to exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This will set the sun usually around EV12-17 and the sky EV1-4 , depending on cloud coverage.

To switch Foundry’s Nuke’s SpotMeter to return the EV of an image, click on the main viewport, and then press s, this opens the viewer’s properties. Now set the center f-stop to 0 in there. And the SpotMeter in the viewport will change from aperture and fstops to EV.

-

Composition – These are the basic lighting techniques you need to know for photography and film

Read more: Composition – These are the basic lighting techniques you need to know for photography and filmhttp://www.diyphotography.net/basic-lighting-techniques-need-know-photography-film/

Amongst the basic techniques, there’s…

1- Side lighting – Literally how it sounds, lighting a subject from the side when they’re faced toward you

2- Rembrandt lighting – Here the light is at around 45 degrees over from the front of the subject, raised and pointing down at 45 degrees

3- Back lighting – Again, how it sounds, lighting a subject from behind. This can help to add drama with silouettes

4- Rim lighting – This produces a light glowing outline around your subject

5- Key light – The main light source, and it’s not necessarily always the brightest light source

6- Fill light – This is used to fill in the shadows and provide detail that would otherwise be blackness

7- Cross lighting – Using two lights placed opposite from each other to light two subjects

-

Sun cone angle (angular diameter) as perceived by earth viewers

Read more: Sun cone angle (angular diameter) as perceived by earth viewersAlso see:

https://www.pixelsham.com/2020/08/01/solid-angle-measures/

The cone angle of the sun refers to the angular diameter of the sun as observed from Earth, which is related to the apparent size of the sun in the sky.

The angular diameter of the sun, or the cone angle of the sunlight as perceived from Earth, is approximately 0.53 degrees on average. This value can vary slightly due to the elliptical nature of Earth’s orbit around the sun, but it generally stays within a narrow range.

Here’s a more precise breakdown:

-

- Average Angular Diameter: About 0.53 degrees (31 arcminutes)

- Minimum Angular Diameter: Approximately 0.52 degrees (when Earth is at aphelion, the farthest point from the sun)

- Maximum Angular Diameter: Approximately 0.54 degrees (when Earth is at perihelion, the closest point to the sun)

This angular diameter remains relatively constant throughout the day because the sun’s distance from Earth does not change significantly over a single day.

To summarize, the cone angle of the sun’s light, or its angular diameter, is typically around 0.53 degrees, regardless of the time of day.

https://en.wikipedia.org/wiki/Angular_diameter

-

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

Convert 2D Images or Text to 3D Models

-

What’s the Difference Between Ray Casting, Ray Tracing, Path Tracing and Rasterization? Physical light tracing…

-

Want to build a start up company that lasts? Think three-layer cake

-

Google – Artificial Intelligence free courses

-

WhatDreamsCost Spline-Path-Control – Create motion controls for ComfyUI

-

AI and the Law – Netflix : Using Generative AI in Content Production

-

Canva bought Affinity – Now Affinity Photo and Affinity Designer are… GONE?!

-

RawTherapee – a free, open source, cross-platform raw image and HDRi processing program

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.

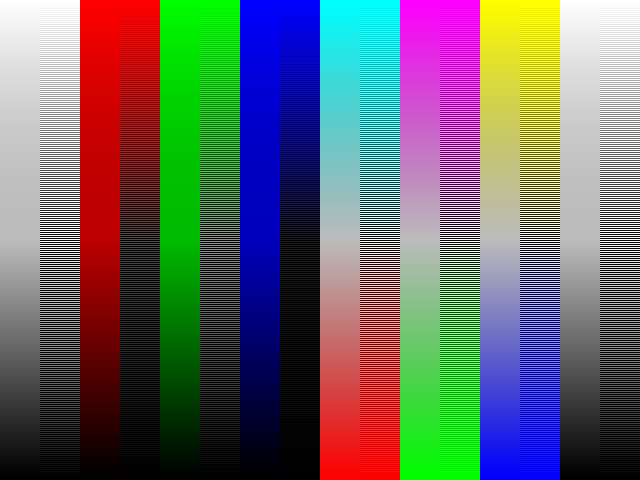

![sRGB gamma correction test [gamma correction test]](http://www.madore.org/~david/misc/color/gammatest.png)