RANDOM POSTs

-

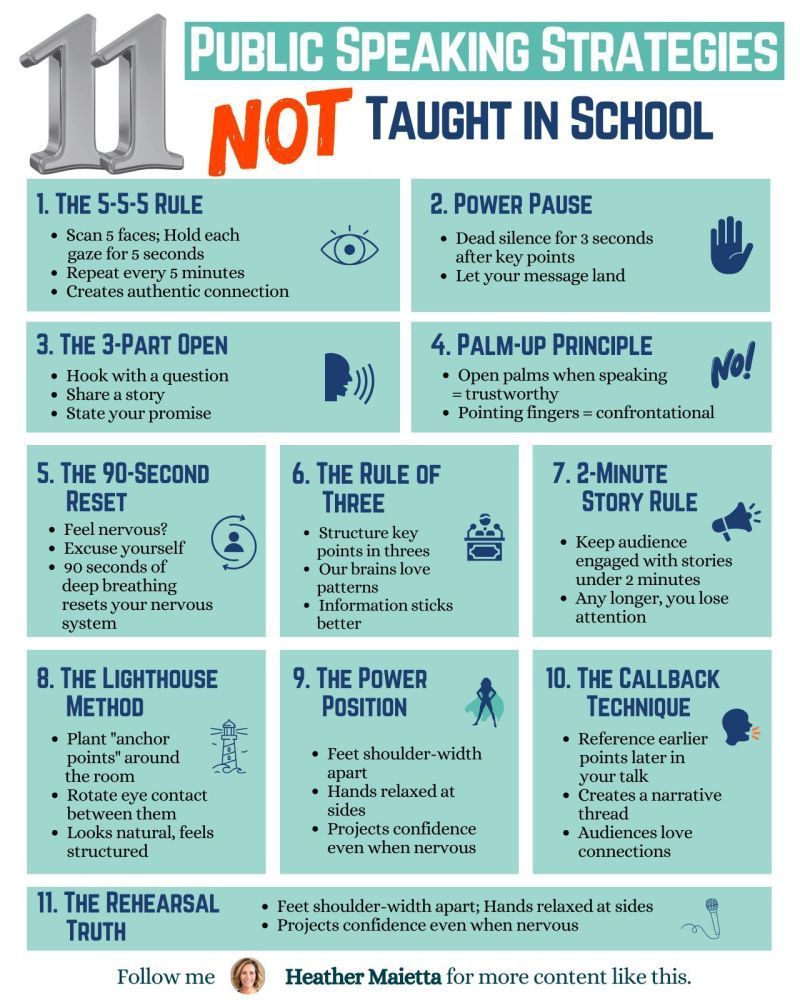

11 Public Speaking Strategies


What do people report as their #1 greatest fear?

It’s not death…

It’s public speaking.

Glossophobia, the fear of public speaking, has been a daunting obstacle for me for years.

11 confidence-boosting tips

1/ The 5-5-5 Rule

→ Scan 5 faces; hold each gaze for 5 seconds.

→ Repeat every 5 minutes.

→ Creates an authentic connection.

2/ Power Pause

→ Dead silence for 3 seconds after key points.

→ Let your message land.

3/ The 3-Part Open

→ Hook with a question.

→ Share a story.

→ State your promise.

4/ Palm-Up Principle

→ Open palms when speaking = trustworthy.

→ Pointing fingers = confrontational.

5/ The 90-Second Reset

→ Feel nervous? Excuse yourself.

→ 90 seconds of deep breathing resets your nervous system.

6/ Rule of Three

→ Structure key points in threes.

→ Our brains love patterns.

7/ 2-Minute Story Rule

→ Keep stories under 2 minutes.

→ Any longer, you lose them.

8/ The Lighthouse Method

→ Plant “anchor points” around the room.

→ Rotate eye contact between them.

→ Looks natural, feels structured.

9/ The Power Position

→ Feet shoulder-width apart.

→ Hands relaxed at sides.

→ Projects confidence even when nervous.

10/ The Callback Technique

→ Reference earlier points later in your talk.

→ Creates a narrative thread.

→ Audiences love connections.

11/ The Rehearsal Truth

→ Practice the opening 3x more than the rest.

→ Nail the first 30 seconds; you’ll nail the talk.

-

AI Models – A walkthrough by Andreas Horn

The 8 most important model types and what they’re actually built to do: ⬇️

1. LLM – Large Language Model

→ Your ChatGPT-style model.

Handles text, predicts the next token, and powers 90% of GenAI hype.

🛠 Use case: content, code, convos.

2. LCM – Latent Consistency Model

→ Lightweight, diffusion-style models.

Fast, quantized, and efficient — perfect for real-time or edge deployment.

🛠 Use case: image generation, optimized inference.

3. LAM – Language Action Model

→ Where LLM meets planning.

Adds memory, task breakdown, and intent recognition.

🛠 Use case: AI agents, tool use, step-by-step execution.

4. MoE – Mixture of Experts

→ One model, many minds.

Routes input to the right “expert” model slice — dynamic, scalable, efficient.

🛠 Use case: high-performance model serving at low compute cost.

5. VLM – Vision Language Model

→ Multimodal beast.

Combines image + text understanding via shared embeddings.

🛠 Use case: search, robotics, assistive tech (examples: Gemini, GPT-4o).

6. SLM – Small Language Model

→ Tiny but mighty.

Designed for edge use, fast inference, low latency, efficient memory.

🛠 Use case: on-device AI, chatbots, privacy-first GenAI.

7. MLM – Masked Language Model

→ The OG foundation model.

Predicts masked tokens using bidirectional context.

🛠 Use case: search, classification, embeddings, pretraining.

8. SAM – Segment Anything Model

→ Vision model for pixel-level understanding.

Highlights, segments, and understands *everything* in an image.

🛠 Use case: medical imaging, AR, robotics, visual agents.
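To make the "routes input to the right expert" idea from item 4 a bit more concrete, here is a minimal sketch of top-k routing in plain Python. It is a toy illustration, not how any particular model does it: the experts are hypothetical one-line functions, the gate scores are hard-coded (in a real MoE layer they would come from a learned gating network applied to the input), and the names `route_top_k` and `moe_forward` are made up for this example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts: each one is just a function on a number.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]

# Assumed gate scores; a real gating network would compute these from x.
gate_scores = [0.1, 2.0, 2.0, -1.0]

routes = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
```

Only two of the four experts run for this input, which is where the "low compute cost" claim comes from: total parameter count grows with the number of experts, but the work per input stays roughly proportional to k.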

-

PBR Color Reference List for Materials – by Grzegorz Baran

“The list should be helpful for every material artist who works on PBR materials, as it contains over 200 color values measured with a PCE-RGB2 1002 Color Spectrometer device and presented in linear and sRGB (2.2) gamma space.

All color values, hue and saturation in this list come from measurements taken with a PCE-RGB2 1002 Color Spectrometer device and are presented in linear and sRGB (2.2) gamma space (more info at the end of this video). I calculated Relative Luminance and Luminance values based on captured color using my own equation, which takes color-based luminance perception into consideration. Bear in mind that there is no ‘one’ color per substance, as nothing in nature is ever 100% uniform, and any value within a +/-10% range of these should be considered correct. Therefore this list should always be considered a color reference for materials’ albedos, not the ultimate and absolute truth.”
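For anyone who wants to move values between the two spaces mentioned in the quote, here is a rough Python sketch. It assumes "sRGB (2.2)" means a plain 2.2 power curve rather than the piecewise sRGB transfer function, uses the standard Rec. 709 luminance weights as a stand-in (the author's own luminance equation is not given in the post, so this is not it), and reads the +/-10% remark as a per-channel relative tolerance, which is only one possible interpretation. All function names are made up for this example.

```python
GAMMA = 2.2  # assumption: simple power-law curve, not the piecewise sRGB function

def srgb_to_linear(c):
    """Convert one gamma-encoded channel (0..1) to linear."""
    return c ** GAMMA

def linear_to_srgb(c):
    """Convert one linear channel (0..1) back to gamma 2.2 space."""
    return c ** (1.0 / GAMMA)

# Rec. 709 weights: an assumption for illustration, NOT the author's equation.
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)

def relative_luminance(linear_rgb):
    """Weighted sum of linear R, G, B channels."""
    return sum(w * c for w, c in zip(REC709_WEIGHTS, linear_rgb))

def within_tolerance(measured, reference, tol=0.10):
    """True if every channel is within +/- tol (relative) of the reference."""
    return all(abs(m - r) <= tol * r for m, r in zip(measured, reference))

# Hypothetical albedo value, gamma-encoded 8-bit mid grey.
srgb_8bit = (128, 128, 128)
linear = tuple(srgb_to_linear(v / 255.0) for v in srgb_8bit)
luminance = relative_luminance(linear)
```

The luminance is computed on linear values on purpose: weighting gamma-encoded channels directly would give a different, perceptually skewed number.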

-

Looking Glass Factory – 4K Hololuminescent™ Displays (HLD)

Hololuminescent™ Display (HLD for short): razor thin (as thin as 17 mm), full 4K resolution, and capable of generating holographic presence at a magical price.

A breakthrough display that creates a holographic stage for people, products, and characters, delivering the magic of spatial presence in a razor-thin form factor.

In our patented hybrid technology, an embedded holographic layer creates the 3D volume, transforming standard video into dimensional, lifelike experiences.

Create impossible spatial experiences from standard video

- Characters, people, and products appear physically present in the room

- The embedded holographic layer creates depth and dimension that makes subjects appear to float in space

- First scalable holographic illusion that doesn’t require a room-sized installation

- The dimensional depth and presence of pepper’s ghost illusions, without the bulk

Built for the real world

- FHD clarity (HLD 16″) and 4K clarity (HLD 27″ and HLD 86″), high brightness for any lighting environment

- Thin profile fits anywhere traditional displays go

- Wall-mounted, it creates the illusion of punching a hole through the wall into another dimension

- Films beautifully for social sharing – the magic translates on camera

Works with what you have

- Runs on your existing digital signage solution, CMS, and 4K video distribution infrastructure

- Standard HDMI input or USB loading

- 2D video workflow with straightforward, specific requirements: full-size subjects on green/white backgrounds or created with our templates (Cinema4D, Unity, Adobe Premiere Pro)

16″: $2,000 USD

27″: $4,000 USD

86″: $20,000 USD
