LATEST POSTS

-

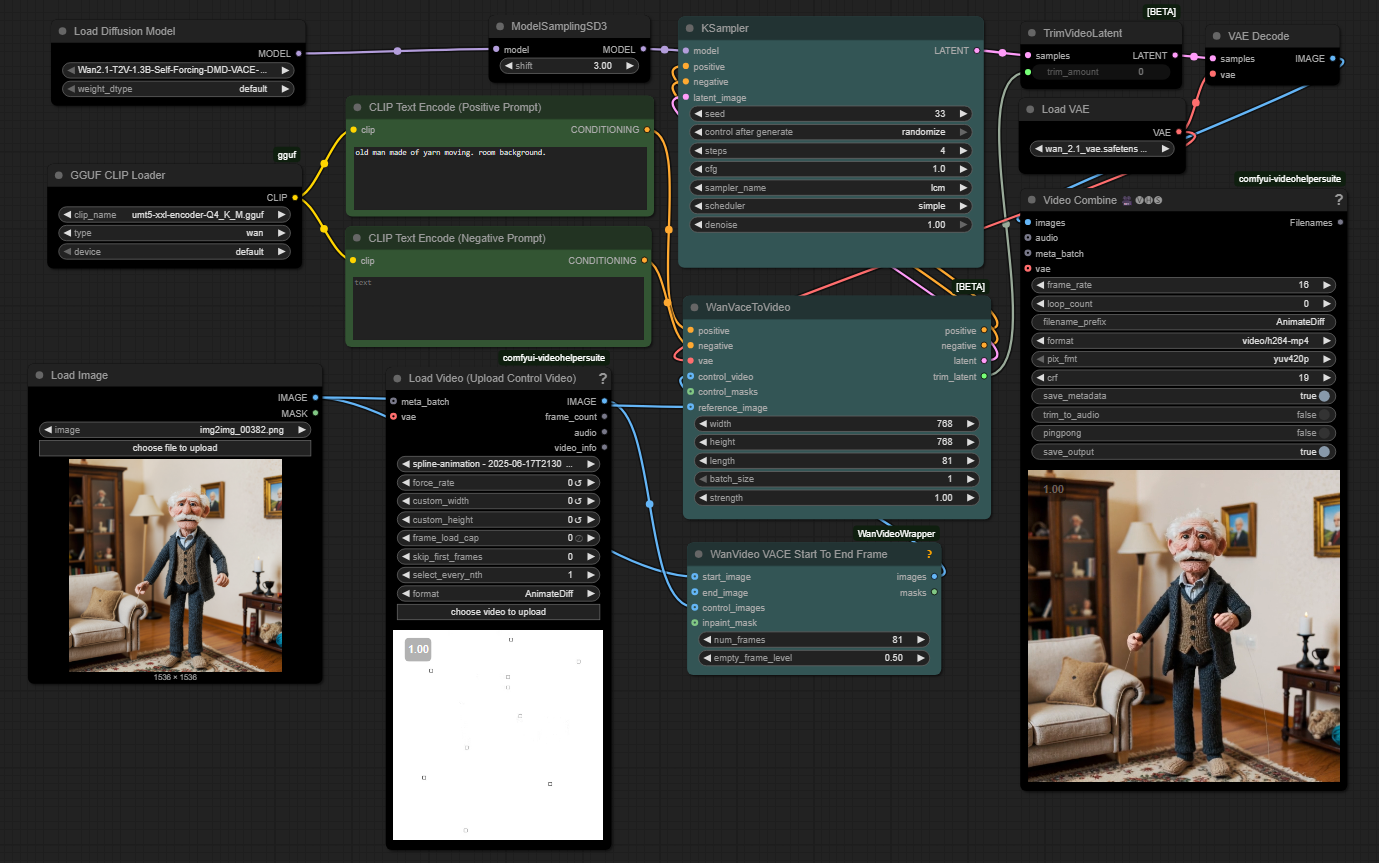

WhatDreamsCost Spline-Path-Control – Create motion controls for ComfyUI

https://github.com/WhatDreamsCost/Spline-Path-Control

https://whatdreamscost.github.io/Spline-Path-Control/

https://github.com/WhatDreamsCost/Spline-Path-Control/tree/main/example_workflows

Spline Path Control is a simple tool designed to make it easy to create motion controls. It allows you to create and animate shapes that follow splines, and then export the result as a .webm video file.

This project was created to simplify the process of generating control videos for tools like VACE. Use it to control the motion of anything (camera movement, objects, humans, etc.), all without extra prompting.

- Multi-Spline Editing: Create multiple, independent spline paths

- Easy To Use Controls: Quickly edit splines and points

- Full Control of Splines and Shapes:

- Start Frame: Set a delay before a spline’s animation begins.

- Duration: Control the speed of the shape along its path.

- Easing: Apply Linear, Ease-in, Ease-out, and Ease-in-out functions for smooth acceleration and deceleration.

- Tension: Adjust the “curviness” of the spline path.

- Shape Customization: Change the shape (circle, square, triangle), size, fill color, and border.

- Reference Images: Drag and drop or upload a background image to trace paths over an existing image.

- WebM Export: Export your animation with a white background, perfect for use as a control video in VACE.
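The Start Frame, Duration, and Easing settings listed above can be read as a mapping from a frame number to how far a shape has travelled along its spline. The following is a minimal sketch of that idea, not the tool's actual code: the function names `ease` and `progress_at_frame` are hypothetical, and the quadratic easing curves are an assumption (the project may use different curves).

```python
def ease(t: float, mode: str = "linear") -> float:
    """Map normalized time t to eased progress, both in [0, 1].

    The quadratic curves below are an assumed, commonly used choice.
    """
    t = min(max(t, 0.0), 1.0)  # clamp so frames outside the animation are safe
    if mode == "linear":
        return t
    if mode == "ease-in":
        return t * t  # slow start, fast finish
    if mode == "ease-out":
        return 1.0 - (1.0 - t) ** 2  # fast start, slow finish
    if mode == "ease-in-out":
        # accelerate through the first half, decelerate through the second
        return 2.0 * t * t if t < 0.5 else 1.0 - 2.0 * (1.0 - t) ** 2
    raise ValueError(f"unknown easing mode: {mode}")


def progress_at_frame(frame: int, start_frame: int, duration: int,
                      mode: str = "linear") -> float:
    """Fraction of the path covered at `frame` (hypothetical helper).

    Before `start_frame` the shape waits at the start of the path;
    after `start_frame + duration` it rests at the end.
    """
    if duration <= 0:
        raise ValueError("duration must be a positive number of frames")
    t = (frame - start_frame) / duration
    return ease(t, mode)
```

For example, with a start frame of 10 and a duration of 20 frames, `progress_at_frame(20, 10, 20, "ease-in")` gives 0.25: halfway through the animation in time, the shape has covered only a quarter of the path because it is still accelerating.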

-

MiniMax-Remover – Taming Bad Noise Helps Video Object Removal Rotoscoping

https://github.com/zibojia/MiniMax-Remover

MiniMax-Remover is a fast and effective video object remover based on minimax optimization. It operates in two stages: the first stage trains a remover using a simplified DiT architecture, while the second stage distills a robust remover with CFG removal and fewer inference steps.

FEATURED POSTS

-

What is an AI Agent + OpenAI Practical Guide to Building AI Agents

If you’re serious about AI Agents, this is the guide you’ve been waiting for. It’s packed with everything you need to build powerful AI agents. It follows a very hands-on approach that cuts down your time and avoids the common mistakes most developers make.

Andreas Horn on AI Agents vs Agentic AI

1. AI Agents: Tools with Autonomy, Within Limits

➜ AI agents are modular, goal-directed systems that operate within clearly defined boundaries. They’re built to:

* Use tools (APIs, browsers, databases)

* Execute specific, task-oriented workflows

* React to prompts or real-time inputs

* Plan short sequences and return actionable outputs

They’re excellent for targeted automation, like: Customer support bots, Internal knowledge search, Email triage, Meeting scheduling, Code suggestions

But even the most advanced are limited by scope. They don’t initiate. They don’t collaborate. They execute what we ask!

2. Agentic AI: A System of Systems

➜ Agentic AI is an architectural leap. It’s not just one smarter agent — it’s multiple specialized agents working together toward shared goals. These systems exhibit:

* Multi-agent collaboration

* Goal decomposition and role assignment

* Inter-agent communication via memory or messaging

* Persistent context across time and tasks

* Recursive planning and error recovery

* Distributed orchestration and adaptive feedback

Agentic AI systems don’t just follow instructions. They coordinate. They adapt. They manage complexity.

Examples include: research teams powered by agents, smart home ecosystems optimizing energy/security, swarms of robots in logistics or agriculture managing real-time uncertainty

The Core Difference?

AI Agents = autonomous tools for single-task execution

Agentic AI = orchestrated ecosystems for workflow-level intelligence
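The contrast above can be illustrated with a toy sketch. Everything here is hypothetical and stands in for real components: `Agent`, `Orchestrator`, `search_tool`, and `summarize_tool` are made-up names, the tools are plain string functions rather than model or API calls, and the plan is written by hand instead of being produced by goal decomposition.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def search_tool(query: str) -> str:
    """Stand-in for a real search API (hypothetical)."""
    return f"results({query})"


def summarize_tool(text: str) -> str:
    """Stand-in for a real summarization call (hypothetical)."""
    return f"summary({text})"


class Agent:
    """A single agent: executes one task with one of its tools."""

    def __init__(self, name: str, tools: Dict[str, Tool]) -> None:
        self.name = name
        self.tools = tools

    def run(self, tool_name: str, task: str) -> str:
        if tool_name not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool_name!r}")
        return self.tools[tool_name](task)


class Orchestrator:
    """A toy agentic system: assigns steps to roles and shares memory."""

    def __init__(self, agents: Dict[str, Agent]) -> None:
        self.agents = agents
        self.memory: List[str] = []  # context that persists across steps

    def run(self, plan: List[Tuple[str, str, str]]) -> List[str]:
        # Each step is (role, tool name, task). Later steps see the
        # previous result, a crude form of inter-agent communication.
        for role, tool_name, task in plan:
            prompt = task if not self.memory else f"{task}: {self.memory[-1]}"
            self.memory.append(self.agents[role].run(tool_name, prompt))
        return self.memory
```

A single `Agent` only answers the call it is given, while the `Orchestrator` routes a two-step plan such as `[("researcher", "search", "volumetrics"), ("writer", "summarize", "brief")]` through two agents and passes the first result to the second.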

(more…)

Next, here are the top 10 key takeaways from OpenAI’s guide:

-

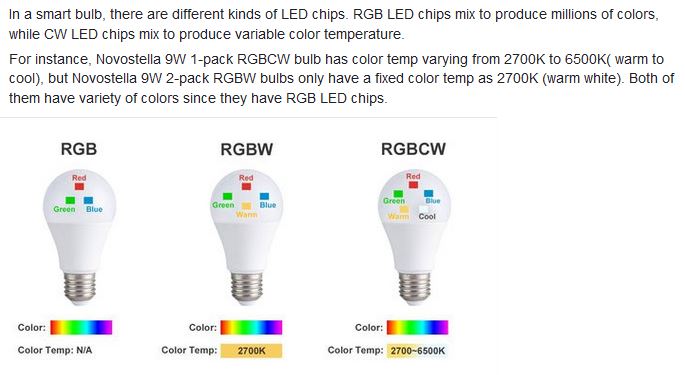

Practical Aspects of Spectral Data and LEDs in Digital Content Production and Virtual Production – SIGGRAPH 2022

Comparison to the commercial side

https://www.ecolorled.com/blog/detail/what-is-rgb-rgbw-rgbic-strip-lights

RGBW (RGB + White) LED strip uses a 4-in-1 LED chip made up of red, green, blue, and white.

RGBWW (RGB + White + Warm White) LED strip uses a 5-in-1 LED chip with red, green, blue, white, and warm white for color mixing. The only difference between RGBW and RGBWW is the intensity of the white color. The term RGBCCT combines RGB and CCT. CCT (Correlated Color Temperature) means that the color temperature of the LED strip light can be adjusted between warm white and white. Thus, RGBWW strip light is another name for RGBCCT strip.

RGBCW is the acronym for Red, Green, Blue, Cold, and Warm. These 5-in-1 chips are used in super-bright smart LED lighting products.
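To make the CCT adjustment concrete, here is a rough sketch of how a controller might split power between a warm white and a cold white channel to hit a target color temperature. It is an illustration under stated assumptions, not a description of any specific product: the function name `cct_to_channel_mix` is hypothetical, the 2700 K and 6500 K endpoints are assumed values, and interpolating linearly in mired (1,000,000 divided by kelvin) is a common approximation rather than an exact colorimetric result.

```python
from typing import Tuple


def cct_to_channel_mix(target_k: float, warm_k: float = 2700.0,
                       cool_k: float = 6500.0) -> Tuple[float, float]:
    """Return (warm, cool) channel weights in [0, 1] that sum to 1.

    Assumes the two white emitters sit at warm_k and cool_k kelvin
    (assumed defaults) and blends them linearly in mired space.
    """
    if not 0.0 < warm_k < cool_k:
        raise ValueError("expected 0 < warm_k < cool_k")
    # A two-channel strip cannot reach temperatures outside its endpoints.
    target_k = min(max(target_k, warm_k), cool_k)

    def mired(kelvin: float) -> float:
        return 1_000_000.0 / kelvin

    cool = (mired(warm_k) - mired(target_k)) / (mired(warm_k) - mired(cool_k))
    return 1.0 - cool, cool
```

With the assumed endpoints, a 4000 K target lands at roughly 44% warm and 56% cold, and requests outside the 2700 K to 6500 K range are clamped to a single channel.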

-

Zibra.AI – Real-Time Volumetric Effects in Virtual Production. Now free for Indies!

A New Era for Volumetrics

For a long time, volumetric visual effects were viable only in high-end offline VFX workflows. Large data footprints and poor real-time rendering performance limited their use: most teams simply avoided volumetrics altogether. It’s similar to the early days of online video: limited computational power and low network bandwidth made video content hard to share or stream. Today, of course, we can’t imagine the internet without it, and we believe volumetrics are on a similar path.

With advanced data compression and real-time, GPU-driven decompression, anyone can now bring CGI-class visual effects into Unreal Engine.

From now on, it’s completely free for individual creators!

What does it mean for you?

(more…)

-

Methods for creating motion blur in Stop motion

en.wikipedia.org/wiki/Go_motion

Petroleum jelly

This crude but reasonably effective technique involves smearing petroleum jelly (“Vaseline”) on a plate of glass in front of the camera lens, also known as vaselensing, then cleaning and reapplying it after each shot. The process is time-consuming, but it creates a blur around the model. This technique was used for the endoskeleton in The Terminator. It was also employed by Jim Danforth to blur the pterodactyl’s wings in Hammer Films’ When Dinosaurs Ruled the Earth, and by Randal William Cook on the terror dogs sequence in Ghostbusters.

Bumping the puppet

Gently bumping or flicking the puppet before taking the frame will produce a slight blur; however, care must be taken that the puppet does not move too much and that props or set pieces are not bumped or moved.

Moving the table

Moving the table on which the model is standing while the film is being exposed creates a slight, realistic blur. This technique was developed by Ladislas Starevich: when the characters ran, he moved the set in the opposite direction. This is seen in The Little Parade when the ballerina is chased by the devil. Starevich also used this technique on his films The Eyes of the Dragon, The Magical Clock and The Mascot. Aardman Animations used this for the train chase in The Wrong Trousers and again during the lorry chase in A Close Shave. In both cases the cameras were moved physically during a 1-2 second exposure. The technique was revived for the full-length Wallace & Gromit: The Curse of the Were-Rabbit.

Go motion

The most sophisticated technique, go motion, was originally developed for the film The Empire Strikes Back, where it was used for some shots of the tauntauns, and was later used on films like Dragonslayer. It is quite different from traditional stop motion. The model is essentially a rod puppet. The rods are attached to motors which are linked to a computer that can record the movements as the model is traditionally animated. When enough movements have been made, the model is reset to its original position, the camera rolls and the model is moved across the table. Because the model is moving during shots, motion blur is created.

A variation of go motion was used in E.T. the Extra-Terrestrial to partially animate the children on their bicycles.
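The moving-table and go-motion techniques share one principle: the subject moves while the shutter is open, so the frame records an average of many positions. The sketch below imitates this digitally on a single row of pixels. It is only an illustration of the principle, the function name `expose` is hypothetical, and it does not model any of the productions mentioned above.

```python
from typing import List


def expose(width: int, start: float, end: float, samples: int) -> List[float]:
    """Simulate one exposure of a one-pixel object moving from start to end.

    The exposure is approximated by averaging `samples` instantaneous
    snapshots taken at evenly spaced moments while the shutter is open.
    """
    if samples < 1:
        raise ValueError("samples must be at least 1")
    frame = [0.0] * width
    for i in range(samples):
        # Normalized time within the exposure, from 0 (open) to 1 (close).
        t = i / (samples - 1) if samples > 1 else 0.0
        x = round(start + (end - start) * t)  # nearest pixel at this instant
        if 0 <= x < width:
            frame[x] += 1.0 / samples  # each snapshot contributes equally
    return frame
```

A stationary puppet, as in traditional stop motion, puts all of its brightness into one pixel (`expose(5, 2, 2, 4)` gives `[0.0, 0.0, 1.0, 0.0, 0.0]`), while a puppet that travels during the exposure is smeared along its path (`expose(5, 0, 3, 4)` gives `[0.25, 0.25, 0.25, 0.25, 0.0]`).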