LATEST POSTS

-

Google Stitch – Transform ideas into UI designs for mobile and web applications

https://stitch.withgoogle.com/

Stitch is available free of charge, with usage limits. Each user receives a monthly allowance of 350 generations in Flash mode and 50 generations in Experimental mode. These limits are subject to change.

-

Runway Partners with AMC Networks Across Marketing and TV Development

https://runwayml.com/news/runway-amc-partnership

Runway and AMC Networks, the international entertainment company known for popular and award-winning titles including MAD MEN, BREAKING BAD, BETTER CALL SAUL, THE WALKING DEAD and ANNE RICE’S INTERVIEW WITH THE VAMPIRE, are partnering to incorporate Runway’s AI models and tools in AMC Networks’ marketing and TV development processes.

-

LumaLabs.ai – Introducing Modify Video

https://lumalabs.ai/blog/news/introducing-modify-video

Reimagine any video. Shoot it in post with director-grade control over style, character, and setting. Restyle expressive actions and performances, swap entire worlds, or redesign the frame to your vision.

Shoot once. Shape infinitely.

-

Transformer Explainer – Interactive Learning of Text-Generative Models

https://github.com/poloclub/transformer-explainer

Transformer Explainer is an interactive visualization tool designed to help anyone learn how Transformer-based models like GPT work. It runs a live GPT-2 model right in your browser, allowing you to experiment with your own text and observe in real time how internal components and operations of the Transformer work together to predict the next tokens. Try Transformer Explainer at http://poloclub.github.io/transformer-explainer

-

Henry Daubrez – How to generate VR/360 videos directly with Google VEO

https://www.linkedin.com/posts/upskydown_vr-googleveo-veo3-activity-7334269406396461059-d8Da

If you prompt for a 360° video in VEO (literally write “360°” in the prompt), it can generate a monoscopic 360 video. The next step is to inject the right metadata into the file so that players treat it as an actual 360 video.

Once the file is saved with the right metadata, it is recognized as a 360/VR video, meaning you can play it in VLC and drag your mouse to look around.
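The post does not say which metadata is meant. A common choice for MP4 files is the XML block defined by Google's Spherical Video V1 convention, so the sketch below assumes that format. It only builds the XML payload for a monoscopic, equirectangular clip; writing it into the video container is left to a dedicated injector tool. The function name and the `stitching_software` value are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespaces used by the Spherical Video V1 convention (assumed format).
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
GSPHERICAL_NS = "http://ns.google.com/videos/1.0/spherical/"


def build_spherical_xml(stitching_software="hypothetical-injector"):
    """Return the XML payload describing a monoscopic 360 video.

    No StereoMode element is written, which leaves the clip monoscopic.
    """
    ET.register_namespace("rdf", RDF_NS)
    ET.register_namespace("GSpherical", GSPHERICAL_NS)

    root = ET.Element(f"{{{RDF_NS}}}SphericalVideo")
    fields = {
        "Spherical": "true",
        "Stitched": "true",
        "StitchingSoftware": stitching_software,  # free-form label
        "ProjectionType": "equirectangular",
    }
    for name, value in fields.items():
        child = ET.SubElement(root, f"{{{GSPHERICAL_NS}}}{name}")
        child.text = value
    return ET.tostring(root, encoding="unicode")


spherical_xml = build_spherical_xml()
print(spherical_xml)
```

A player that understands this convention reads the projection type from the block and maps the flat frame onto a sphere, which is what makes mouse-drag navigation possible.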

FEATURED POSTS

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

https://www.chrbutler.com/understanding-the-eye-mind-connection

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

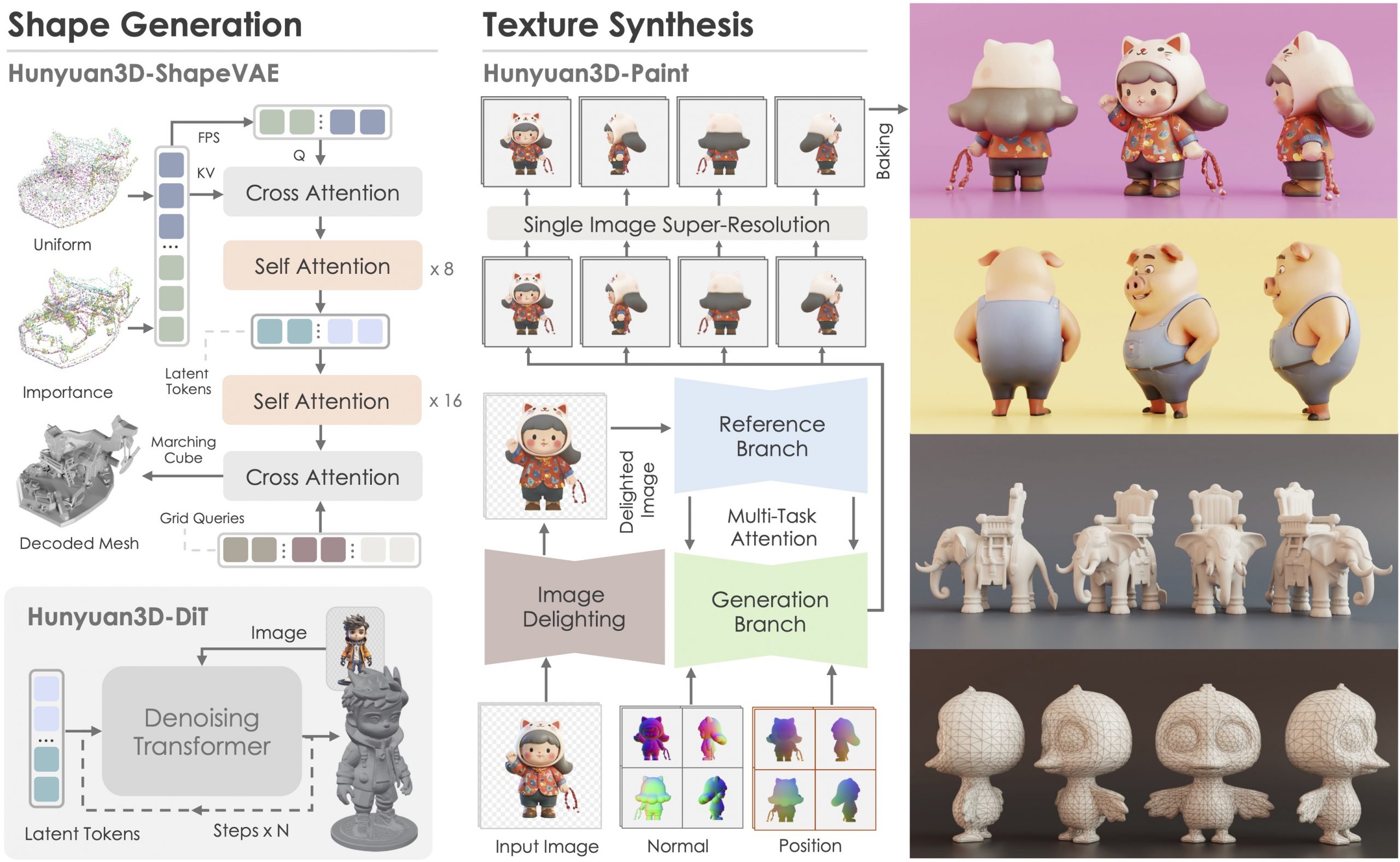

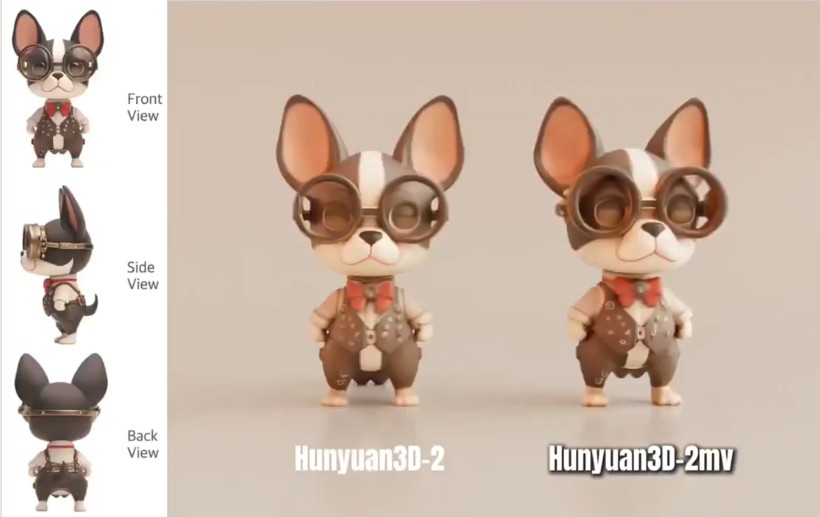

Tencent Hunyuan3D 2.1 goes Open Source and adds MV (Multi-view) and MV Mini

https://huggingface.co/tencent/Hunyuan3D-2mv

https://huggingface.co/tencent/Hunyuan3D-2mini

https://github.com/Tencent/Hunyuan3D-2

Tencent just made Hunyuan3D 2.1 open-source.

Tencent describes it as the first fully open-source, production-ready PBR 3D generative model with cinema-grade quality.

https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1

What makes it special?

• Advanced PBR material synthesis brings realistic materials like leather, bronze, and more to life with stunning light interactions.

• Complete access to model weights, training/inference code, and data pipelines.

• Optimized to run on accessible hardware.

• Built for real-world applications with professional-grade output quality.

They’re making it accessible to everyone:

• Complete open-source ecosystem with full documentation.

• Ready-to-use model weights and training infrastructure.

• Live demo available for instant testing.

• Comprehensive GitHub repository with implementation details.

-

Survivorship Bias: The error resulting from systematically focusing on successes and ignoring failures. How a young statistician saved planes during WW2.

A young statistician's analysis saved aircrews' lives.

His insight (and how it can change yours):

During World War II, the U.S. wanted to add reinforcement armor to specific areas of its planes.

Analysts examined returning bombers, plotted the bullet holes and damage on them (as in the image below), and came to the conclusion that adding armor to the tail, body, and wings would improve their odds of survival.

But a young statistician named Abraham Wald noted that this would be a tragic mistake. By plotting data only from the planes that returned, the analysts were systematically omitting a critical, informative subset: the planes that were damaged and unable to return.
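The effect of that omission can be shown with a small expected-value calculation. This is a minimal sketch with made-up numbers, not historical data: it assumes each plane takes one hit that is equally likely to land in any of four sections, and that hits to one section (labeled `engines` here, a name chosen purely for illustration) bring the plane down far more often than hits elsewhere.

```python
# Hypothetical numbers for illustration only; not historical data.
SECTIONS = ["tail", "body", "wings", "engines"]

# Assumption: each plane takes one hit, equally likely in any section.
HIT_PROBABILITY = {section: 0.25 for section in SECTIONS}

# Assumption: chance that a plane still returns after a hit in that section.
RETURN_PROBABILITY = {"tail": 0.9, "body": 0.9, "wings": 0.9, "engines": 0.4}


def observed_hit_share(hit_probability, return_probability):
    """Share of hits per section as seen on returning planes only."""
    returned = {
        section: hit_probability[section] * return_probability[section]
        for section in hit_probability
    }
    total = sum(returned.values())
    return {section: value / total for section, value in returned.items()}


observed = observed_hit_share(HIT_PROBABILITY, RETURN_PROBABILITY)
for section in SECTIONS:
    print(
        f"{section:8s} true share: {HIT_PROBABILITY[section]:.2f}  "
        f"seen on returning planes: {observed[section]:.2f}"
    )
```

Under these assumptions the most dangerous section shows the fewest holes on the planes that come back, because planes hit there rarely return to be counted. The areas with the most visible damage are the ones a plane can take hits in and still fly home.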