LATEST POSTS

-

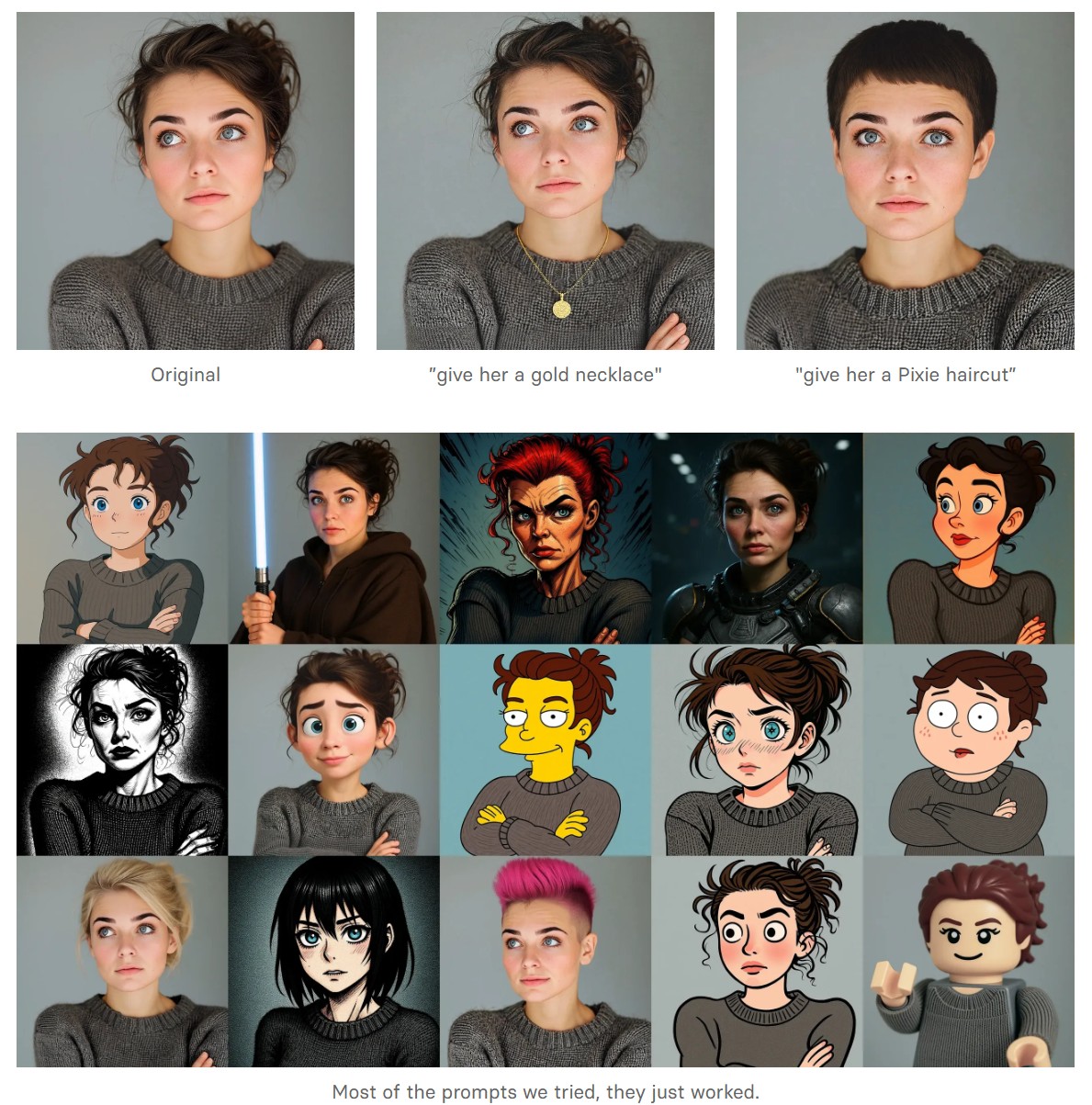

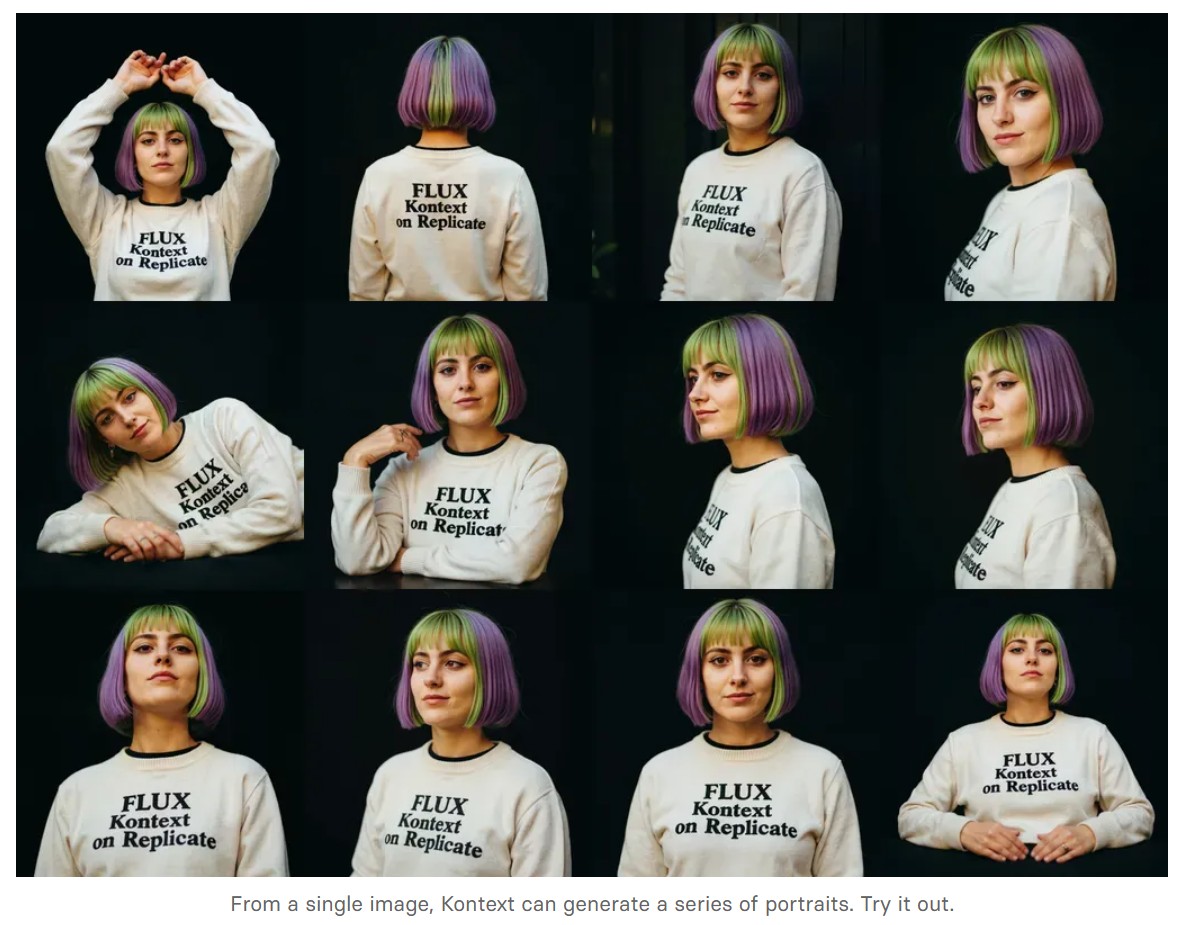

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

Kontext comes in three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- FLUX.1 Kontext [dev] (coming soon): An open-weight, guidance-distilled version of Kontext.

We’re so excited about what Kontext can do that we’ve created a collection of models on Replicate to give you ideas:

- Multi-image Kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image.

- Change haircut: Change a person’s hairstyle and hair color.

- Iconic locations: Put yourself in front of famous landmarks.

- Professional headshot: Generate a professional headshot from any image.

-

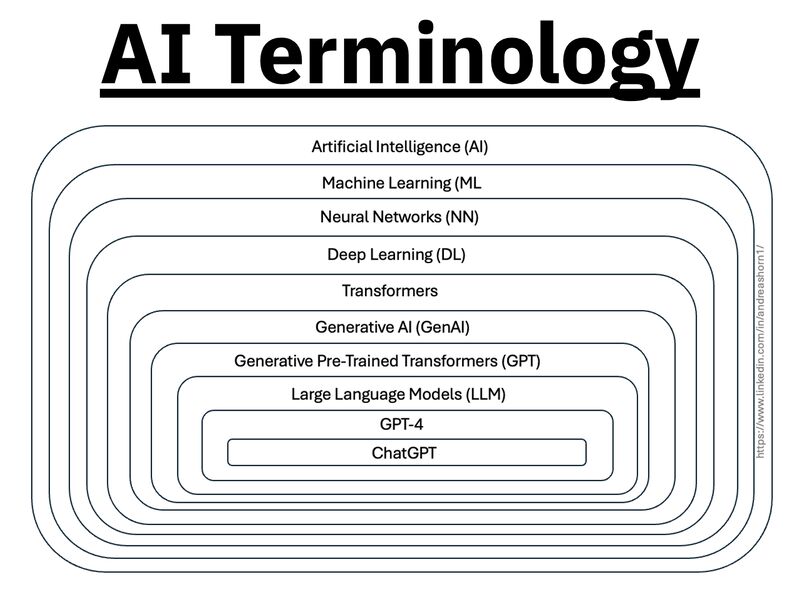

AI Models – A walkthrough by Andreas Horn

The 8 most important model types and what they’re actually built to do: ⬇️

1. 𝗟𝗟𝗠 – 𝗟𝗮𝗿𝗴𝗲 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹

→ Your ChatGPT-style model.

Handles text, predicts the next token, and powers 90% of GenAI hype.

🛠 Use case: content, code, convos.

2. 𝗟𝗖𝗠 – 𝗟𝗮𝘁𝗲𝗻𝘁 𝗖𝗼𝗻𝘀𝗶𝘀𝘁𝗲𝗻𝗰𝘆 𝗠𝗼𝗱𝗲𝗹

→ Distilled, few-step latent diffusion models.

Generate images in as few as 1–4 sampling steps instead of dozens, which makes them fast enough for real-time or edge deployment.

🛠 Use case: image generation, optimized inference.

3. 𝗟𝗔𝗠 – 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗔𝗰𝘁𝗶𝗼𝗻 𝗠𝗼𝗱𝗲𝗹

→ Where LLM meets planning.

Adds memory, task breakdown, and intent recognition.

🛠 Use case: AI agents, tool use, step-by-step execution.

4. 𝗠𝗼𝗘 – 𝗠𝗶𝘅𝘁𝘂𝗿𝗲 𝗼𝗳 𝗘𝘅𝗽𝗲𝗿𝘁𝘀

→ One model, many minds.

A learned router sends each token to a small subset of “expert” sub-networks, so only part of the model runs for any given input: dynamic, scalable, efficient.

🛠 Use case: high-performance model serving at low compute cost.

5. 𝗩𝗟𝗠 – 𝗩𝗶𝘀𝗶𝗼𝗻 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹

→ Multimodal beast.

Combines image + text understanding via shared embeddings.

🛠 Use case: search, robotics, assistive tech (e.g. Gemini, GPT-4o).

6. 𝗦𝗟𝗠 – 𝗦𝗺𝗮𝗹𝗹 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹

→ Tiny but mighty.

Designed for edge use, fast inference, low latency, efficient memory.

🛠 Use case: on-device AI, chatbots, privacy-first GenAI.

7. 𝗠𝗟𝗠 – 𝗠𝗮𝘀𝗸𝗲𝗱 𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗠𝗼𝗱𝗲𝗹

→ The OG foundation model.

Predicts masked tokens using bidirectional context.

🛠 Use case: search, classification, embeddings, pretraining.

8. 𝗦𝗔𝗠 – 𝗦𝗲𝗴𝗺𝗲𝗻𝘁 𝗔𝗻𝘆𝘁𝗵𝗶𝗻𝗴 𝗠𝗼𝗱𝗲𝗹

→ Vision model for pixel-level understanding.

Produces pixel-level masks for any object in an image, prompted by points, boxes, or rough masks.

🛠 Use case: medical imaging, AR, robotics, visual agents.
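The routing idea behind MoE (item 4) can be sketched in plain Python. This is a hypothetical toy, not any production implementation: it assumes top-k gating over a linear router, and each "expert" is a per-expert scaling vector followed by `tanh`, standing in for the feed-forward network a real MoE layer would use. The names `ToyMoE`, `route`, and `scores` are illustrative only.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoE:
    """Hypothetical toy Mixture-of-Experts layer with top-k gating."""

    def __init__(self, dim, num_experts, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one weight vector per expert; the expert's score is a dot product with the token.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Stand-in experts: a per-expert scaling vector followed by tanh
        # (real experts are full feed-forward networks).
        self.experts = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]

    def scores(self, token):
        """Router logits: one score per expert."""
        return [sum(t * w for t, w in zip(token, ws)) for ws in self.router]

    def route(self, token):
        """Pick the top_k experts and renormalize their scores into gate weights."""
        s = self.scores(token)
        top = sorted(range(len(s)), key=lambda i: s[i], reverse=True)[: self.top_k]
        gates = softmax([s[i] for i in top])
        return list(zip(top, gates))

    def forward(self, token):
        """Gate-weighted sum of the selected experts' outputs; unselected experts never run."""
        out = [0.0] * len(token)
        for idx, gate in self.route(token):
            expert_out = [math.tanh(t * a) for t, a in zip(token, self.experts[idx])]
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out


moe = ToyMoE(dim=4, num_experts=8, top_k=2)
token = [0.5, -1.0, 0.25, 2.0]
print(moe.route(token))  # two (expert_index, gate_weight) pairs
print(moe.forward(token))
```

Only 2 of the 8 experts do any work per token, which is where the "low compute cost" comes from. Real MoE layers (Mixtral, for example, routes each token to 2 of 8 experts) are trained end to end and typically add an auxiliary load-balancing loss so that tokens don't all pile onto the same experts.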

-

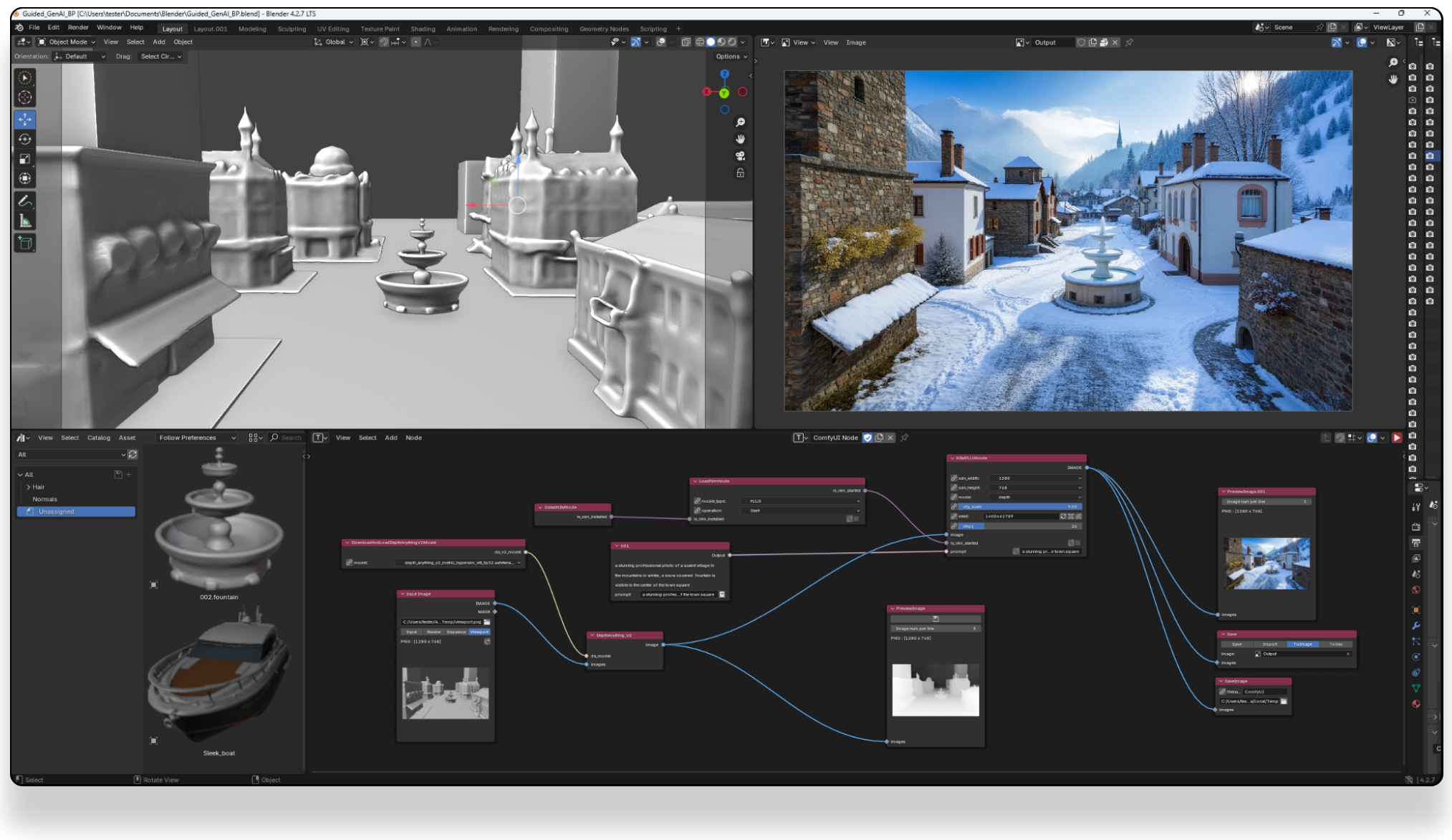

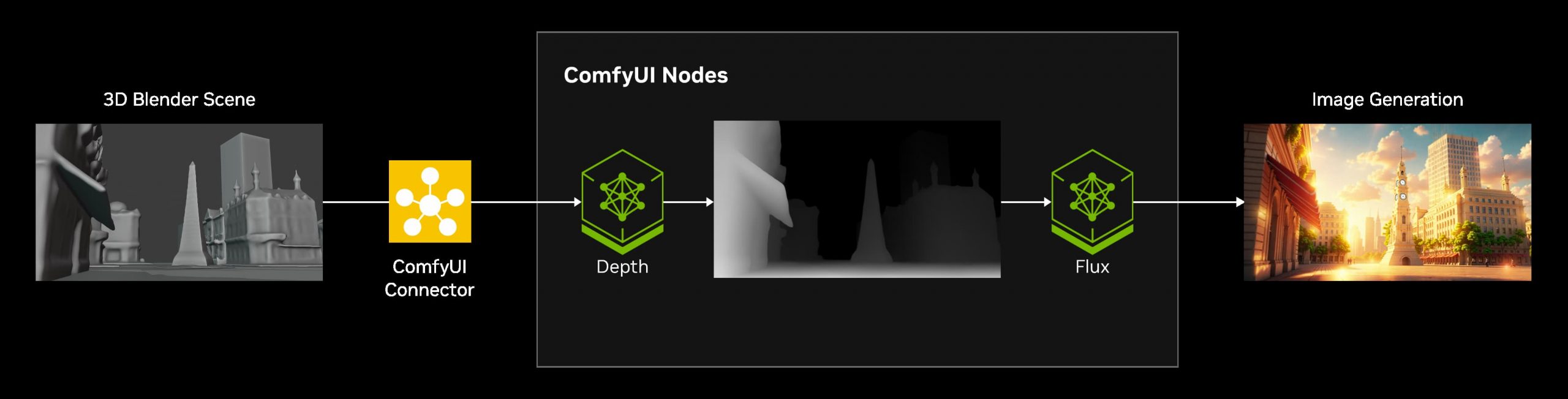

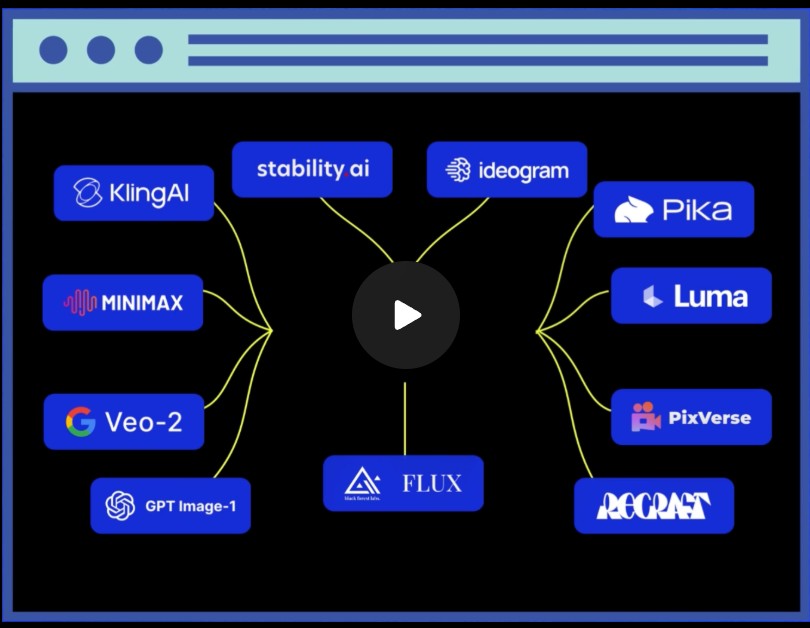

Introducing ComfyUI Native API Nodes

https://blog.comfy.org/p/comfyui-native-api-nodes

Models Supported

- Black Forest Labs Flux 1.1 [pro] Ultra, Flux.1 [pro]

- Kling 2.0, 1.6, 1.5 & Various Effects

- Luma Photon, Ray2, Ray1.6

- MiniMax Text-to-Video, Image-to-Video

- PixVerse V4 & Effects

- Recraft V3, V2 & Various Tools

- Stability AI Stable Image Ultra, Stable Diffusion 3.5 Large

- Google Veo2

- Ideogram V3, V2, V1

- OpenAI GPT-4o image

- Pika 2.2

-

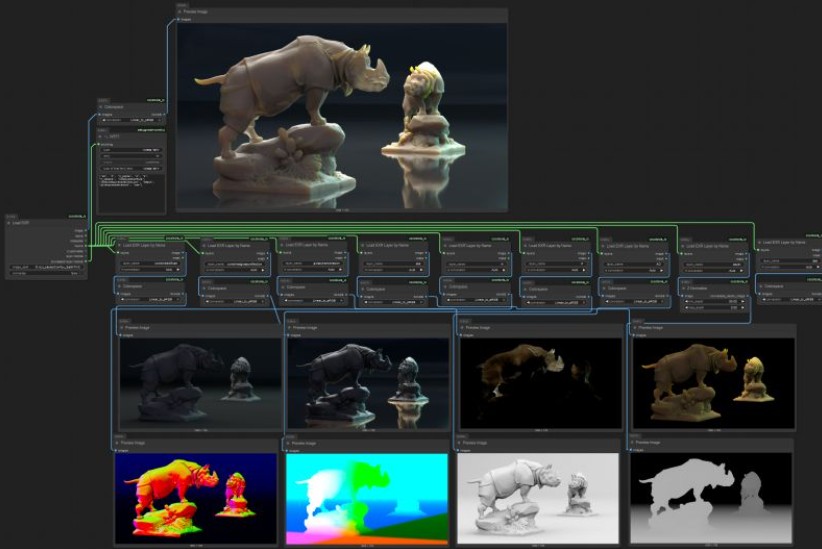

ComfyUI-CoCoTools_IO – A set of nodes focused on advanced image I/O operations, particularly for EXR file handling

https://github.com/Conor-Collins/ComfyUI-CoCoTools_IO

Features

- Advanced EXR image input with multilayer support

- EXR layer extraction and manipulation

- High-quality image saving with format-specific options

- Standard image format loading with bit depth awareness

Current Nodes

Image I/O

- Image Loader: Load standard image formats (PNG, JPG, WebP, etc.) with proper bit depth handling

- Load EXR: Comprehensive EXR file loading with support for multiple layers, channels, and cryptomatte data

- Load EXR Layer by Name: Extract specific layers from EXR files (similar to Nuke’s Shuffle node)

- Cryptomatte Layer: Specialized handling for cryptomatte layers in EXR files

- Image Saver: Save images in various formats with format-specific options (bit depth, compression, etc.)

Image Processing

- Colorspace: Convert between sRGB and linear colorspaces

- Z Normalize: Normalize depth maps and other single-channel data
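The source doesn't say exactly how the Colorspace node converts values. As a hedged sketch, assuming it follows the standard sRGB transfer curve (IEC 61966-2-1), the per-channel conversion looks like this in Python. It matters for EXR work because EXR files typically store linear-light values, while PNG/JPG images are usually sRGB-encoded. The function names here are hypothetical and are not the node pack's API.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel value to linear light (IEC 61966-2-1 curve)."""
    if c <= 0.04045:
        return c / 12.92  # linear toe near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode one linear-light channel value with the sRGB transfer curve."""
    if c <= 0.0031308:
        return c * 12.92  # linear toe near black
    return 1.055 * c ** (1 / 2.4) - 0.055


def convert_pixel(rgb, to_linear=True):
    """Apply the conversion to each color channel (pass RGB only, not alpha)."""
    fn = srgb_to_linear if to_linear else linear_to_srgb
    return tuple(fn(c) for c in rgb)


print(convert_pixel((0.0, 0.5, 1.0)))  # mid-gray 0.5 decodes to roughly 0.214
```

In this sketch, HDR values above 1.0 simply follow the same power curve; how the actual node handles them is not stated in the source.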

-

Claudio Tosti – La vita pittoresca dell’abate Uggeri

https://vivariumnovum.it/saggistica/varia/la-vita-pittoresca-dellabate-uggeri

Book author: Claudio Tosti

Title: La vita pittoresca dell’abate Uggeri – Vol. I – La Giornata Tuscolana

ISBN: 978-8895611990

Video made with Pixverse.ai and DaVinci Resolve

FEATURED POSTS

-

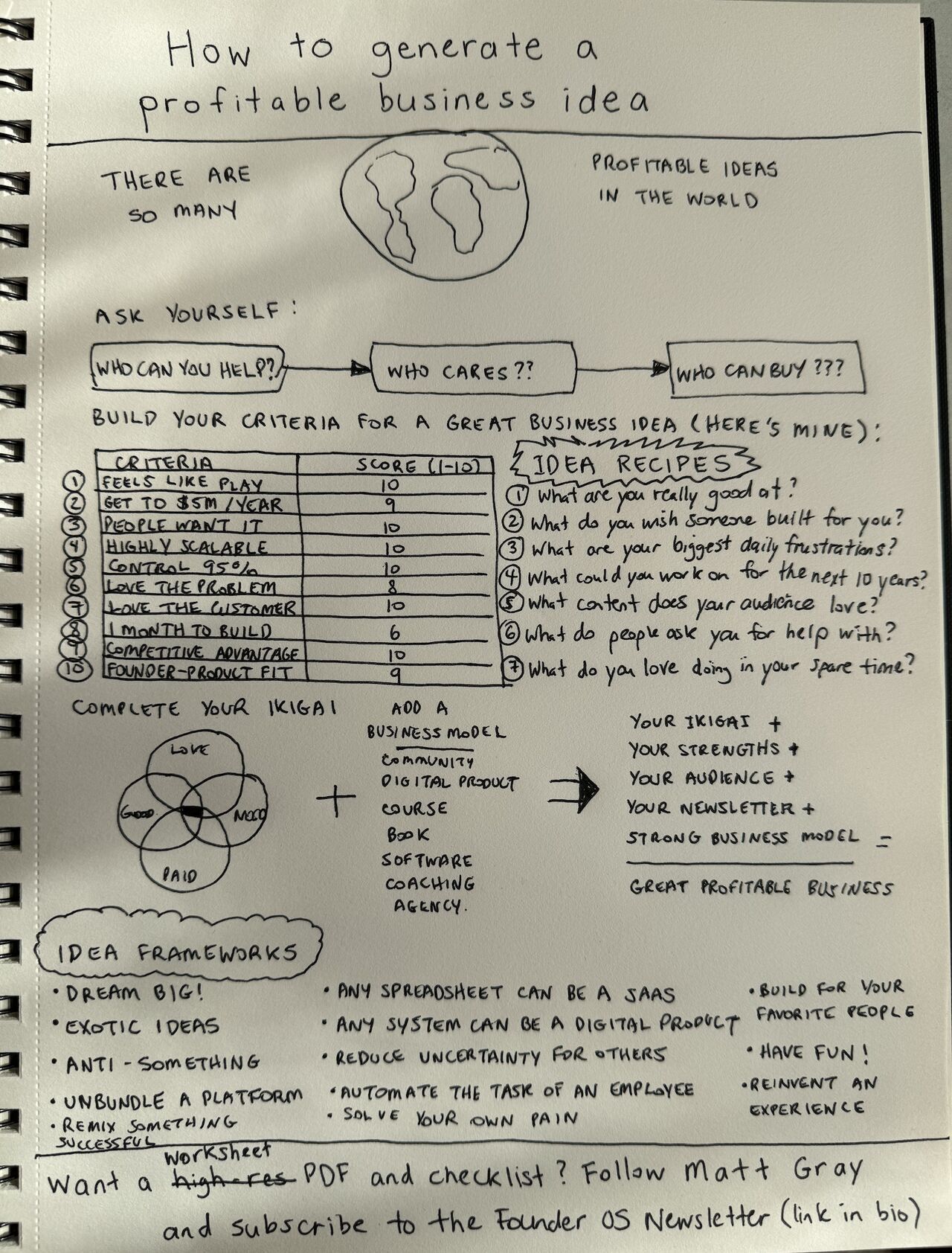

Matt Gray – How to generate a profitable business

In the last 10 years, over 1,000 people have asked me how to start a business. The truth? They’re all paralyzed by limiting beliefs. What they are and how to break them today:


Before we get into the how, let’s first unpack why people think they can’t start a business.

Here are the biggest reasons I’ve found:

-

Methods for creating motion blur in stop motion

en.wikipedia.org/wiki/Go_motion

Petroleum jelly

This crude but reasonably effective technique, also known as vaselensing, involves smearing petroleum jelly (“Vaseline”) on a plate of glass in front of the camera lens, then cleaning and reapplying it after each shot. It is time-consuming, but it creates a blur around the model. This technique was used for the endoskeleton in The Terminator. It was also employed by Jim Danforth to blur the pterodactyl’s wings in Hammer Films’ When Dinosaurs Ruled the Earth, and by Randall William Cook on the terror dogs sequence in Ghostbusters.

Bumping the puppet

Gently bumping or flicking the puppet before taking the frame will produce a slight blur. However, care must be taken not to move the puppet too far, and not to bump or shift props or set pieces.

Moving the table

Moving the table on which the model is standing while the film is being exposed creates a slight, realistic blur. This technique was developed by Ladislas Starevich: when the characters ran, he moved the set in the opposite direction. This is seen in The Little Parade when the ballerina is chased by the devil. Starevich also used this technique in his films The Eyes of the Dragon, The Magical Clock and The Mascot. Aardman Animations used it for the train chase in The Wrong Trousers and again during the lorry chase in A Close Shave. In both cases the cameras were moved physically during a 1–2 second exposure. The technique was revived for the full-length Wallace & Gromit: The Curse of the Were-Rabbit.

Go motion

The most sophisticated technique, and one quite different from traditional stop motion, was originally developed for The Empire Strikes Back, where it was used for some shots of the tauntauns, and was later used on films such as Dragonslayer. The model is essentially a rod puppet. The rods are attached to motors linked to a computer that records the movements as the model is animated in the traditional way. When enough movements have been recorded, the model is reset to its original position, the camera rolls, and the motors move the model across the table. Because the model is moving during each exposure, motion blur is created.

A variation of go motion was used in E.T. the Extra-Terrestrial to partially animate the children on their bicycles.