
LATEST POSTS

-

Kelly Boesch – Static and Toward The Light

https://www.kellyboeschdesign.com

I was working on an album cover last night and got these really cool images in Midjourney, so I made a video out of them. Animated using Pika. Song made using Suno. Full version on my Bandcamp. It’s called Static.

https://www.linkedin.com/posts/kellyboesch_midjourney-keyframes-ai-activity-7359244714853736450-Wvcr

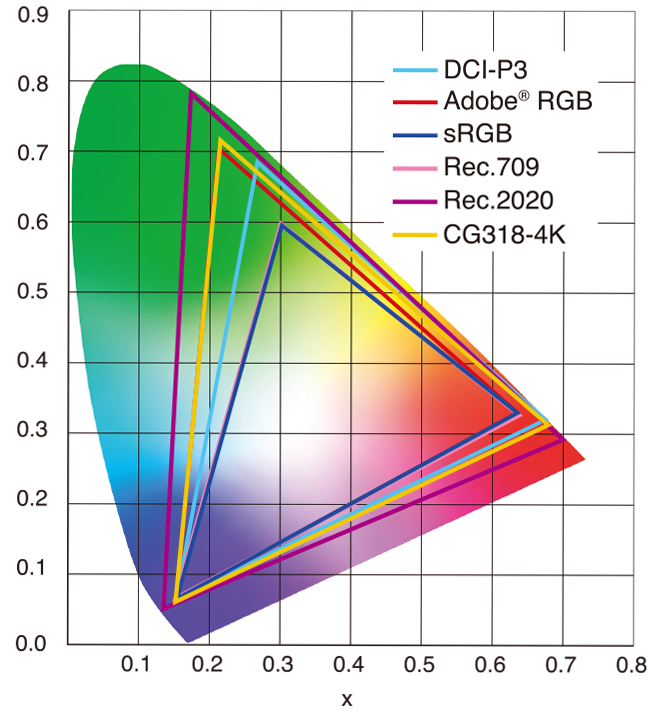

sRGB vs REC709 – An introduction and FFmpeg implementations

1. Basic Comparison

- What they are
  - sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.
  - Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.
- Why they exist
  - sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).
  - Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).
- What you’ll see
  - On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.
  - On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
| --- | --- | --- |
| White point | D65 (6504 K), same for both | D65 (6504 K) |
| Primaries (x,y) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) | R: (0.640, 0.330) G: (0.300, 0.600) B: (0.150, 0.060) |
| Gamut size | Identical triangle on CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: approximate 2.2 with linear toe | Display decoding is a pure power law, γ≈2.4 per BT.1886 (often approximated as 2.2 in practice); the BT.709 camera encoding curve is itself piecewise with a linear toe |
| Matrix coefficients | N/A (pure RGB usage) | Y = 0.2126 R + 0.7152 G + 0.0722 B (Rec. 709 matrix) |
| Typical bit-depth | 8-bit/channel (with 16-bit variants) | 8-bit/channel (10-bit for professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ [16–235], Cb/Cr [16–240]) |
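The sRGB transfer curve, the Rec. 709 luma weights, and the full-range to studio-range mapping from the comparison above can be sketched in a few lines of Python. This is only an illustration, not the FFmpeg implementation the post title refers to. The function names are hypothetical, and the sRGB constants (0.0031308, 0.04045, 12.92, 1.055, 2.4) are the standard IEC 61966-2-1 values rather than numbers taken from this post.

```python
def srgb_encode(linear: float) -> float:
    """Encode a linear-light value in [0, 1] with the sRGB piecewise curve."""
    if linear <= 0.0031308:
        return 12.92 * linear                      # linear toe near black
    return 1.055 * linear ** (1 / 2.4) - 0.055     # power segment


def srgb_decode(encoded: float) -> float:
    """Invert srgb_encode: encoded value in [0, 1] back to linear light."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def rec709_luma(r: float, g: float, b: float) -> float:
    """Weighted sum of R, G, B using the Rec. 709 matrix coefficients."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def full_to_studio_luma(y: float) -> int:
    """Map a full-range luma value in [0, 1] to the 8-bit studio range 16-235."""
    return round(16 + 219 * y)


mid_grey = srgb_encode(0.18)            # 18% linear grey, encoded for display
white_luma = rec709_luma(1.0, 1.0, 1.0)
studio_black = full_to_studio_luma(0.0)
studio_white = full_to_studio_luma(1.0)
```

Because the two spaces share primaries and white point, only the transfer curve and the range mapping change when converting between them.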

Why the Small Differences Matter


-

Narcis Calin’s Galaxy Engine – A free, open source simulation software

In 2025 I decided to start learning how to code, so I installed Visual Studio and I started looking into C++. After days of watching tutorials and guides about the basics of C++ and programming, I decided to make something physics-related. I started with a dot that fell to the ground and then I wanted to simulate gravitational attraction, so I made 2 circles attracting each other. I thought it was really cool to see something I made with code actually work, so I kept building on top of that small, basic program. And here we are after roughly 8 months of learning programming.

This is Galaxy Engine, and it is a simulation software I have been making ever since I started my learning journey. It currently can simulate gravity, dark matter, galaxies, the Big Bang, temperature, fluid dynamics, breakable solids, planetary interactions, etc. The program can run many tens of thousands of particles in real time on the CPU thanks to the Barnes-Hut algorithm, mixed with Morton curves. It also includes its own PBR 2D path tracer with BVH optimizations. The path tracer can simulate a bunch of stuff like diffuse lighting, specular reflections, refraction, internal reflection, fresnel, emission, dispersion, roughness, IOR, nested IOR and more! I tried to make the path tracer closer to traditional 3D render engines like V-Ray.

I honestly never imagined I would go this far with programming, and it has been an amazing learning experience so far. I think that mixing this knowledge with my 3D knowledge can unlock countless new possibilities. In case you are curious about Galaxy Engine, I made it completely free and open source so that anyone can build and compile it locally! You can find the source code on GitHub:

https://github.com/NarcisCalin/Galaxy-Engine
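The post mentions Morton curves alongside Barnes-Hut. As a rough illustration of the idea only (this is not Galaxy Engine's code, which is written in C++; the function name and the 16-bit grid are assumptions), a 2D Morton code interleaves the bits of integer cell coordinates, so sorting by the code tends to keep spatially nearby cells close together in memory:

```python
def morton2d(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low `bits` bits of x and y into a single Z-order key."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (2 * i)       # x bits go to even positions
        code |= ((y >> i) & 1) << (2 * i + 1)   # y bits go to odd positions
    return code


# Sorting grid cells by their Morton key visits them in a Z-shaped pattern.
cells = [(1, 1), (0, 1), (1, 0), (0, 0)]
ordered = sorted(cells, key=lambda cell: morton2d(cell[0], cell[1]))
```

A tree builder can then group consecutive keys that share a common prefix, which is one common way such curves are paired with hierarchical structures.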

-

Introduction to BytesIO

When you’re working with binary data in Python, whether that’s image bytes, network payloads, or any in-memory binary stream, you often need a file-like interface without touching the disk. That’s where `BytesIO` from the built-in `io` module comes in handy. It lets you treat a bytes buffer as if it were a file.

What Is BytesIO?

- Module: `io`
- Class: `BytesIO`
- Purpose:
  - Provides an in-memory binary stream.
  - Acts like a file opened in binary mode (`'rb'`/`'wb'`), but data lives in RAM rather than on disk.

from io import BytesIO

Why Use BytesIO?

- Speed
  - No disk I/O: reads and writes happen in memory.
- Convenience
  - Emulates file methods (`read()`, `write()`, `seek()`, etc.).
  - Ideal for testing code that expects a file-like object.
- Safety
  - No temporary files cluttering up your filesystem.
- Integration
  - Libraries that accept file-like objects (e.g., PIL, `requests`) will work with `BytesIO`.

Basic Examples

1. Writing Bytes to a Buffer

```python
from io import BytesIO

# Create a BytesIO buffer
buffer = BytesIO()

# Write some binary data
buffer.write(b'Hello, \xF0\x9F\x98\x8A')  # includes a smiley emoji in UTF-8

# Retrieve the entire contents
data = buffer.getvalue()
print(data)                  # b'Hello, \xf0\x9f\x98\x8a'
print(data.decode('utf-8'))  # Hello, 😊

# Always close when done
buffer.close()
```
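A buffer can also be read back like a file, which is what makes it useful for testing code that expects a file-like object. The sketch below assumes a hypothetical helper, `count_lines`, standing in for any function that takes a binary stream. The `with` block closes the buffer automatically.

```python
from io import BytesIO


def count_lines(stream) -> int:
    """Count lines in any binary file-like object (hypothetical helper)."""
    return sum(1 for _ in stream)


# Start the buffer with initial contents instead of writing to it
with BytesIO(b"first\nsecond\nthird\n") as buffer:
    header = buffer.read(5)      # read the first five bytes
    buffer.seek(0)               # rewind to the start before reusing the buffer
    total = count_lines(buffer)  # iterate over it exactly like an open file

print(header)  # b'first'
print(total)   # 3
```

Forgetting the `seek(0)` is a common source of confusion: after a read or write, the position sits wherever the last operation left it, so a subsequent read starts from there.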

FEATURED POSTS

-

Free fonts

https://fontlibrary.org

https://fontsource.org

Open-source fonts packaged into individual NPM packages for self-hosting in web applications. Self-hosting fonts can significantly improve website performance, remain version-locked, work offline, and offer more privacy.

https://www.awwwards.com/awwwards/collections/free-fonts

http://www.fontspace.com/popular/fonts

https://www.urbanfonts.com/free-fonts.htm

http://www.1001fonts.com/poster-fonts.html

How to use @font-face in CSS

The `@font-face` rule allows custom fonts to be loaded on a webpage: https://css-tricks.com/snippets/css/using-font-face-in-css/

-

Photography basics: Production Rendering Resolution Charts

https://www.urtech.ca/2019/04/solved-complete-list-of-screen-resolution-names-sizes-and-aspect-ratios/

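| Name | Resolution | Aspect ratio |
| --- | --- | --- |
| CGA | 320 x 200 | 16:10 |
| QVGA | 320 x 240 | 4:3 |
| VGA (SD, Standard Definition) | 640 x 480 | 4:3 |
| NTSC | 720 x 480 | 3:2 |
| WVGA | 854 x 450 | 16:9 (approx.) |
| WVGA | 800 x 480 | 5:3 |
| PAL | 768 x 576 | 4:3 |
| SVGA | 800 x 600 | 4:3 |
| XGA | 1024 x 768 | 4:3 |
| not named | 1152 x 768 | 3:2 |
| HD 720 (720P, High Definition) | 1280 x 720 | 16:9 |
| WXGA | 1280 x 800 | 16:10 |
| WXGA | 1280 x 768 | 5:3 |
| SXGA | 1280 x 1024 | 5:4 |
| not named (768P, HD, High Definition) | 1366 x 768 | 16:9 (approx.) |
| not named | 1440 x 960 | 3:2 |
| SXGA+ | 1400 x 1050 | 4:3 |
| WSXGA | 1680 x 1050 | 16:10 |
| UXGA (2MP) | 1600 x 1200 | 4:3 |
| HD1080 (1080P, Full HD) | 1920 x 1080 | 16:9 |
| WUXGA | 1920 x 1200 | 16:10 |
| 2K | 2048 x (any) | varies |
| QWXGA | 2048 x 1152 | 16:9 |
| QXGA (3MP) | 2048 x 1536 | 4:3 |
| WQXGA | 2560 x 1600 | 16:10 |
| QHD (Quad HD) | 2560 x 1440 | 16:9 |
| QSXGA (5MP) | 2560 x 2048 | 5:4 |
| 4K UHD (4K, Ultra HD, Ultra-High Definition) | 3840 x 2160 | 16:9 |
| QUXGA+ | 3840 x 2400 | 16:10 |
| IMAX 3D | 4096 x 3072 | 4:3 |
| 8K UHD (8K, 8K Ultra HD, UHDTV) | 7680 x 4320 | 16:9 |
| 10K (10240×4320, 10K HD) | 10240 x (any) | varies |
| 16K (Quad UHD, 16K UHD, 8640P) | 15360 x 8640 | 16:9 |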
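The aspect ratio of any resolution in the chart can be checked by dividing width and height by their greatest common divisor. A minimal sketch follows (the function name is hypothetical). Note that the fully reduced form of 16:10 is 8:5, and that sizes such as 1366 x 768 are only approximately 16:9.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a pixel resolution to its smallest whole-number ratio."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


full_hd = aspect_ratio(1920, 1080)   # (16, 9)
wxga = aspect_ratio(1280, 800)       # (8, 5), marketed as 16:10
laptop = aspect_ratio(1366, 768)     # (683, 384), close to but not exactly 16:9
```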

-

What is OLED and what can it do for your TV

https://www.cnet.com/news/what-is-oled-and-what-can-it-do-for-your-tv/

OLED stands for Organic Light Emitting Diode. Each pixel in an OLED display is made of a material that glows when you jab it with electricity. Kind of like the heating elements in a toaster, but with less heat and better resolution. This effect is called electroluminescence, which is one of those delightful words that is big, but actually makes sense: “electro” for electricity, “lumin” for light and “escence” for, well, basically “essence.”

OLED TV marketing often claims “infinite” contrast ratios, and while that might sound like typical hyperbole, it’s one of the extremely rare instances where such claims are actually true. Since OLED can produce a perfect black, emitting no light whatsoever, its contrast ratio (expressed as the brightest white divided by the darkest black) is technically infinite.

OLED is the only technology capable of absolute blacks and extremely bright whites on a per-pixel basis. LCD definitely can’t do that, and even the vaunted, beloved, dearly departed plasma couldn’t do absolute blacks.

-

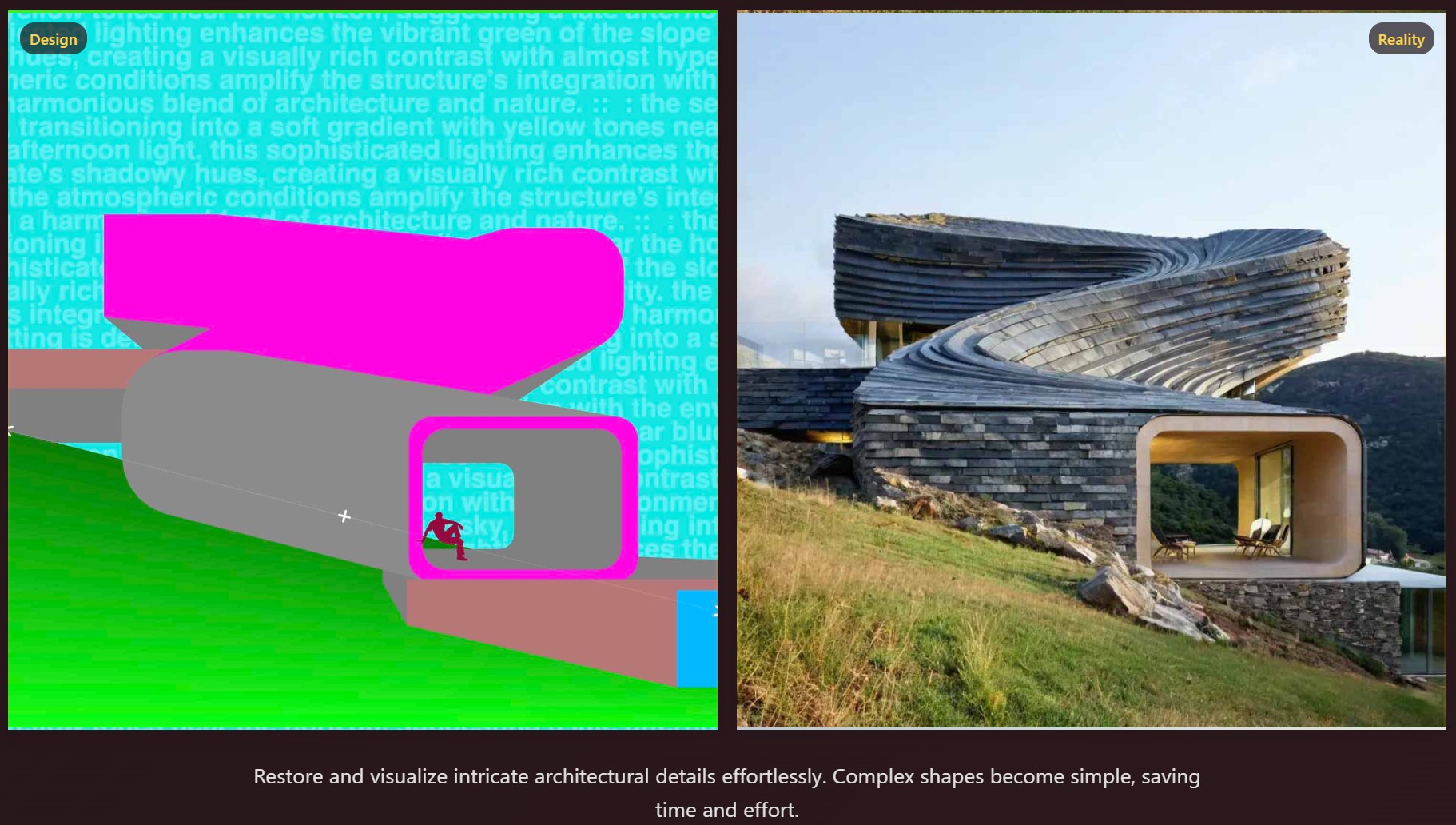

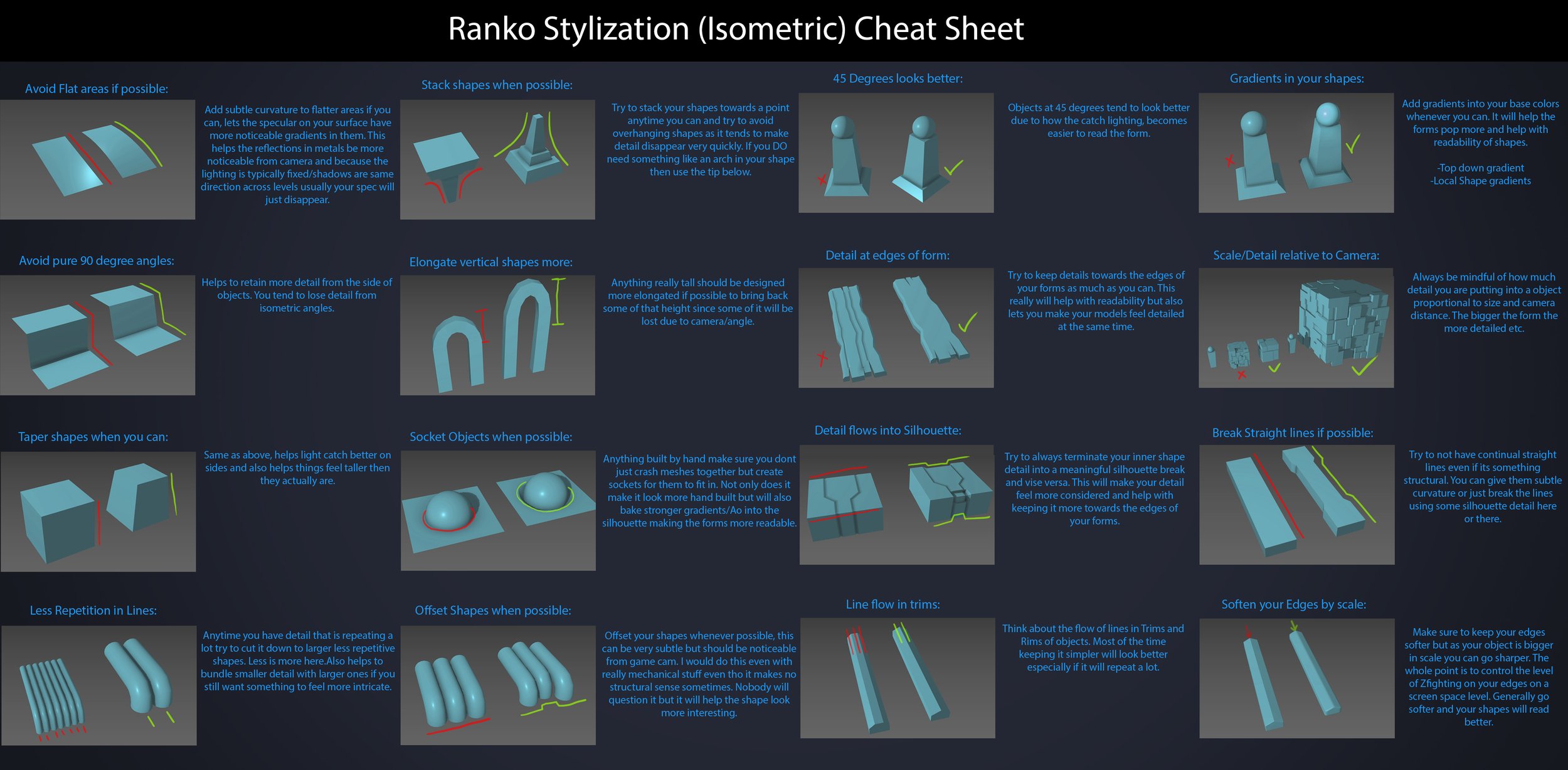

Ranko Prozo – Modelling design tips

For every project I work on, I create a stylization cheat sheet. Every project is unique, but some principles carry over no matter what. This is a sheet I use a lot when I work on isometric stylized projects to help keep my assets consistent and interesting. None of these concepts are my own, just lots of tips I learned over the years. I have also added this to a page on my website and will continue to update it with more tips and tricks; I just need time to compile it all :)

-

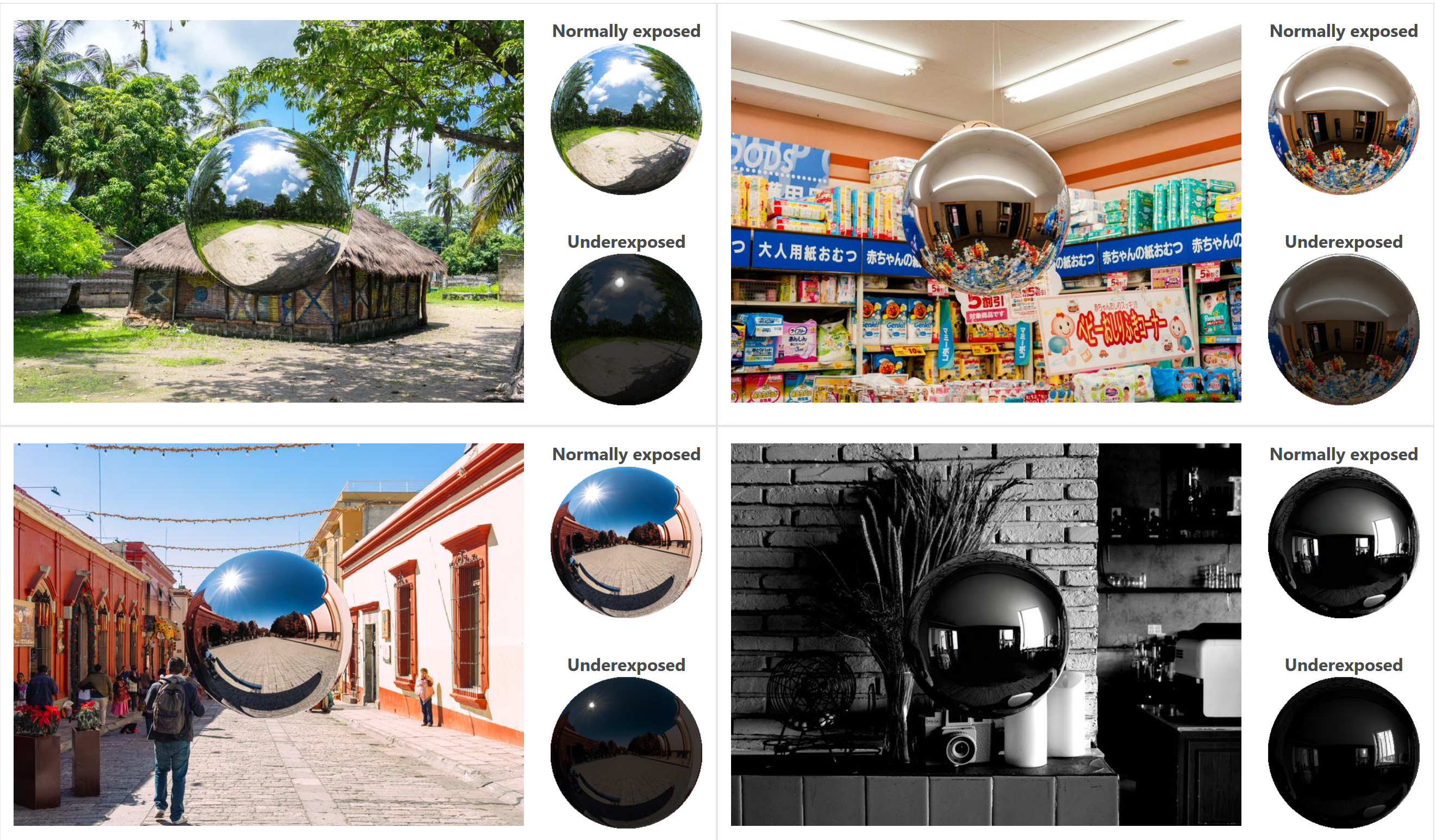

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

https://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR diffusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”
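The abstract refers to exposure bracketing for HDR estimation. As a generic illustration of what merging brackets means (this is not DiffusionLight's pipeline; the function names, the hat-shaped weighting, and the sample values are all assumptions), several low-dynamic-range readings of the same pixel taken at different exposures can be combined into one radiance estimate by trusting mid-tones more than clipped values:

```python
def hat_weight(value: float) -> float:
    """Weight mid-tones most; pure black (0.0) and pure white (1.0) get zero."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_brackets(samples: list[tuple[float, float]]) -> float:
    """Merge (linear_value, exposure_scale) readings of one pixel into a radiance."""
    weighted_sum = 0.0
    weight_total = 0.0
    for value, exposure in samples:
        weight = hat_weight(value)
        weighted_sum += weight * (value / exposure)  # undo the exposure scaling
        weight_total += weight
    if weight_total == 0.0:
        # Every reading is clipped: fall back to the shortest exposure.
        value, exposure = min(samples, key=lambda sample: sample[1])
        return value / exposure
    return weighted_sum / weight_total


# A pixel whose true radiance is 2.0, seen at three exposures.
# The longest exposure clips to 1.0 and is ignored by the weighting.
readings = [(1.0, 1.0), (0.5, 0.25), (0.125, 0.0625)]
radiance = merge_brackets(readings)
```

The result exceeds 1.0, which is exactly the information a single LDR image cannot carry.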