https://www.sdiolatz.info/publications/00ImageGS.html


POPULAR SEARCHES unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

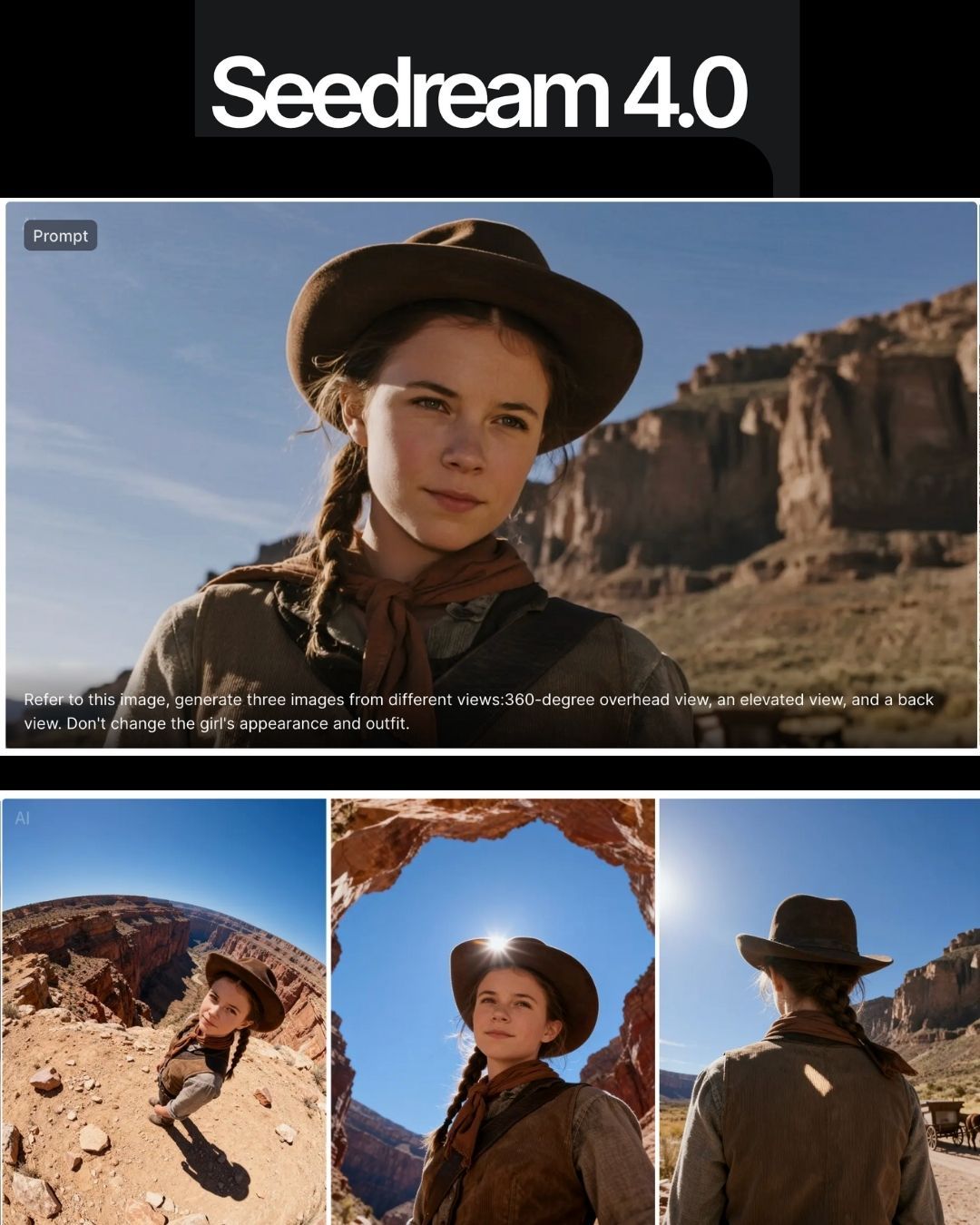

https://seed.bytedance.com/en/seedream4_0

➤ Super‑fast, high‑resolution results: resolutions up to 4K, with a 2K image produced in less than 1.8 seconds, all while maintaining sharpness and realism.

➤ At 4K, costs as low as $0.03 per generation.

➤ Natural‑language editing – You can instruct the model to “remove the people in the background,” “add a helmet” or “replace this with that,” and it executes without needing complicated prompts.

➤ Multi‑image input and output – It can combine multiple images, transfer styles and produce storyboards or series with consistent characters and themes.

https://www.wsj.com/tech/ai/openai-backs-ai-made-animated-feature-film-389f70b0

Film, called ‘Critterz,’ aims to debut at Cannes Film Festival and will leverage startup’s AI tools and resources.

“Critterz,” about forest creatures who go on an adventure after their village is disrupted by a stranger, is the brainchild of Chad Nelson, a creative specialist at OpenAI. Nelson started sketching out the characters three years ago while trying to make a short film with what was then OpenAI’s new DALL-E image-generation tool.

https://variety.com/2025/digital/news/anthropic-class-action-settlement-billion-1236509571

The settlement amounts to about $3,000 per book and is believed to be the largest ever recovery in a U.S. copyright case, according to the plaintiffs’ attorneys.

https://www.thepost.co.nz/business/360813799/weta-fx-posts-59m-loss-amid-industry-headwinds

Wētā FX, Sir Peter Jackson’s largest business, has posted a $59.3 million loss for the year to March 31, an improvement on an $83m loss last year.

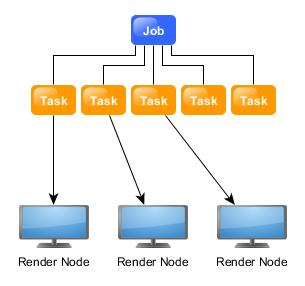

Submit ComfyUI workflows to Thinkbox Deadline render farm.

https://github.com/doubletwisted/ComfyUI-Deadline-Plugin

https://docs.thinkboxsoftware.com/products/deadline/latest/1_User%20Manual/manual/overview.html

Deadline 10 is a cross-platform render farm management tool for Windows, Linux, and macOS. It gives users control of their rendering resources and can be used on-premises, in the cloud, or both. It handles asset syncing to the cloud, manages data transfers, and supports tagging for cost tracking purposes.

Deadline 10’s Remote Connection Server allows for communication over HTTPS, improving performance and scalability. Where supported, users can use usage-based licensing to supplement their existing fixed pool of software licenses when rendering through Deadline 10.

https://landscapearchitecture.store/blogs/news/nano-banana-ai-free-tool-for-3d-architecture-models

Pro tip: If you want more accuracy, upload two images — a street photo for the facade and an aerial view for the roof/top.

Blender is switching from OpenGL to Vulkan as its default graphics backend, with the transition starting in earnest in Blender 4.5, to achieve better performance and prepare for future features like real-time ray tracing and global illumination. To enable the Vulkan backend, go to Edit > Preferences > System, set the “Backend” option to “Vulkan,” and restart Blender. The change promises faster startup times, improved viewport responsiveness, and more efficient handling of complex scenes through better use of CPU and GPU resources.
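For a one-off test without touching Preferences, the backend can likely also be requested at launch. The sketch below assumes a Blender build that accepts the `--gpu-backend` command-line argument; the function name, the `BLENDER_BIN` override and the `DRY_RUN` switch are hypothetical helpers for illustration.

```shell
# Launch Blender asking for the Vulkan backend for this session only.
# Assumption: the installed Blender supports the --gpu-backend argument.
# BLENDER_BIN (hypothetical) overrides the executable path; DRY_RUN=1 prints
# the command instead of running it.
launch_blender_vulkan() {
    local bin=${BLENDER_BIN:-blender}
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s --gpu-backend vulkan\n' "$bin"
    else
        "$bin" --gpu-backend vulkan "$@"
    fi
}
```

Running `DRY_RUN=1 launch_blender_vulkan` shows the command first; if the viewport misbehaves, starting Blender normally falls back to whatever backend is saved in Preferences.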

Why the Switch to Vulkan?

Given sparse-view videos, Diffuman4D (1) generates 4D-consistent multi-view videos conditioned on these inputs, and (2) reconstructs a high-fidelity 4DGS model of the human performance using both the input and the generated videos.

Official Website: https://meigen-ai.github.io/InfiniteTalk/

GitHub Repo (Code & Instructions): https://github.com/MeiGen-AI/InfiniteTalk

Hugging Face (Models): https://huggingface.co/MeiGen-AI/InfiniteTalk

Paper: https://arxiv.org/abs/2408.09625

https://www.instagram.com/aifilms.ai/reel/DOEJ7LJEZxL

Truly Infinite Videos

This isn’t a gimmick. You can generate incredibly long videos without frying your VRAM. Perfect for podcasts, presentations, or full-on virtual influencers.

More Than Just Lips

This is the best part. It doesn’t just sync the mouth; it generates realistic head movements, body posture, and facial expressions that match the audio’s emotion. It makes characters feel alive.

Keeps Everything Consistent

It preserves the character’s identity, the background, and even camera movements from your original video, so everything looks seamless.

Completely Open Source & Ready for Business

The code, the weights, and the paper are all out there for you to use. Best of all, it’s released under an Apache 2.0 license, which means you are free to use what you create for commercial projects!

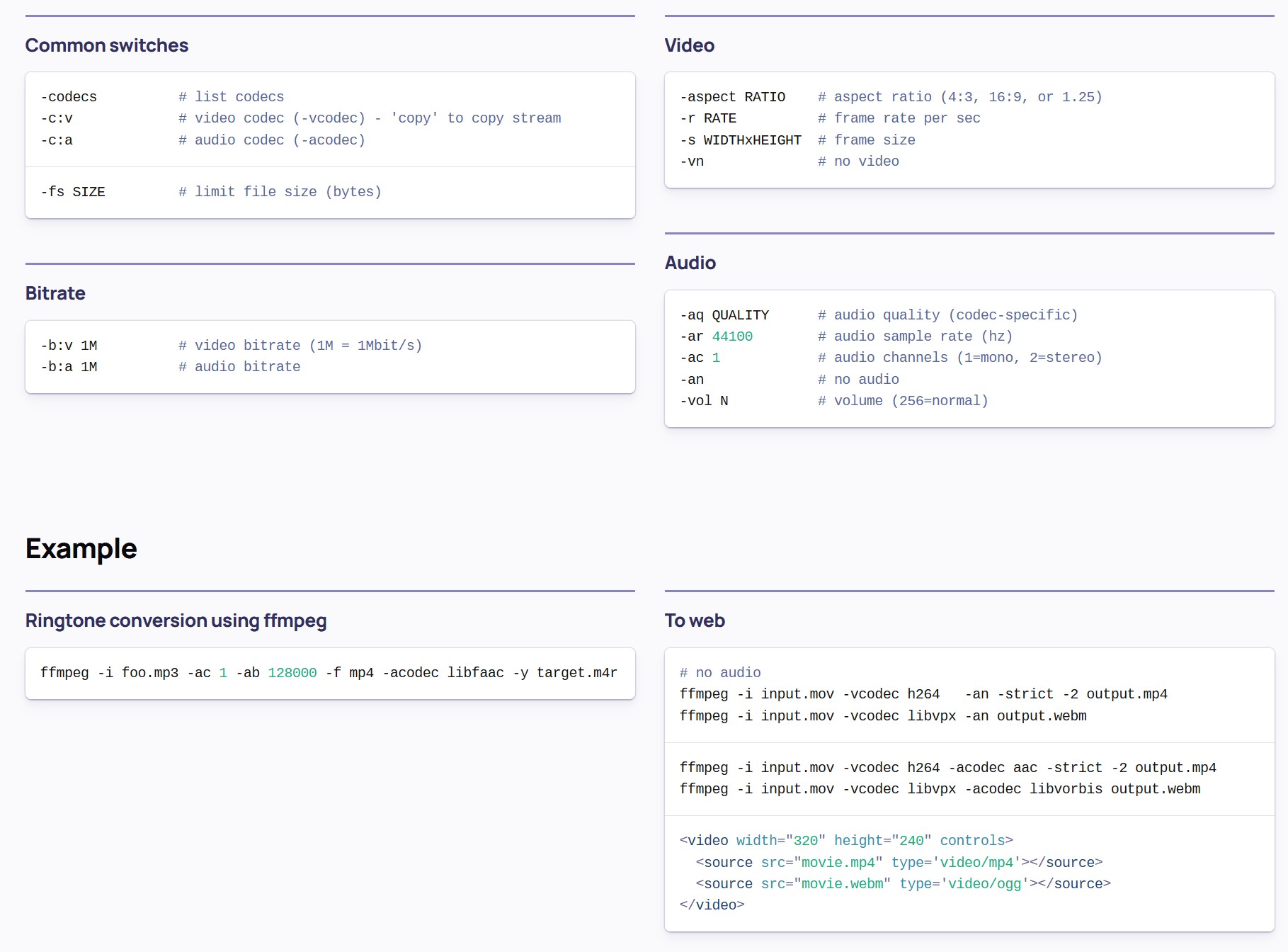

# extract one frame at the end of a video

ffmpeg -sseof -0.1 -i intro_1.mp4 -frames:v 1 -q:v 1 intro_end.jpg

-sseof -0.1: This option tells FFmpeg to seek to 0.1 seconds before the end of the file. This approach is often more reliable for extracting the last frame, especially if the video’s duration isn’t an exact multiple of the frame interval.


-frames:v 1: Extracts a single frame.

-q:v 1: Sets the quality of the output image; 1 is the highest quality.
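When several clips need their closing frame, the same flags can be wrapped in a small helper. This is a minimal sketch: the function names, the `_end.jpg` suffix and the `DRY_RUN` switch are illustrative choices, not part of the original command.

```shell
# Derive an output name from a clip path: clips/intro_1.mp4 -> intro_1_end.jpg
# (the image is written to the current directory; the suffix is an assumption).
end_frame_name() {
    local base
    base=$(basename "$1")
    printf '%s_end.jpg\n' "${base%.*}"
}

# Extract the last frame of one video with the flags explained above.
# Set DRY_RUN=1 to print the ffmpeg command instead of running it.
extract_last_frame() {
    local src=$1
    local out
    out=$(end_frame_name "$src")
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf 'ffmpeg -sseof -0.1 -i %s -frames:v 1 -q:v 1 %s\n' "$src" "$out"
    else
        ffmpeg -sseof -0.1 -i "$src" -frames:v 1 -q:v 1 "$out"
    fi
}
```

A loop such as `for f in *.mp4; do extract_last_frame "$f"; done` then processes a whole folder; running it once with `DRY_RUN=1` is a cheap way to check the generated file names.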

# extract one frame at the beginning of a video

ffmpeg -i speaking_4.mp4 -frames:v 1 speaking_beginning.jpg

# check video length

ffmpeg -i C:\myvideo.mp4 -f null -
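The command above decodes the entire file and reports the final timestamp, which can be slow on long clips. As an alternative sketch, ffprobe (shipped with FFmpeg) can read the duration from the container metadata. The helper names `video_seconds` and `to_hms` are hypothetical, and the reported value depends on what the container stores.

```shell
# Print a clip's duration in seconds (e.g. a decimal number) using ffprobe.
video_seconds() {
    ffprobe -v error -show_entries format=duration \
        -of default=noprint_wrappers=1:nokey=1 "$1"
}

# Convert a (possibly fractional) number of seconds to HH:MM:SS,
# dropping the fractional part.
to_hms() {
    awk -v s="$1" 'BEGIN {
        s = int(s)
        printf "%02d:%02d:%02d\n", int(s / 3600), int((s % 3600) / 60), s % 60
    }'
}
```

The two can be combined as `to_hms "$(video_seconds myvideo.mp4)"` when a human-readable length is preferred over raw seconds.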

# Convert mov/mp4 to animated gif

ffmpeg -i input.mp4 -pix_fmt rgb24 output.gif
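GIFs are limited to 256 colours per frame, so a direct conversion may show banding or dithering noise. A commonly used refinement is to let FFmpeg build a palette from the clip itself with the `palettegen` and `paletteuse` filters. The sketch below assumes illustrative defaults of 12 fps and 480 px width; the function names are hypothetical.

```shell
# Build a filter graph that resamples the clip, generates a palette from it,
# and applies that palette in a single ffmpeg run.
# Arguments: fps (default 12) and width in pixels (default 480);
# a height of -1 keeps the aspect ratio.
gif_filter() {
    local fps=${1:-12} width=${2:-480}
    printf 'fps=%s,scale=%s:-1:flags=lanczos,split[a][b];[a]palettegen[p];[b][p]paletteuse\n' "$fps" "$width"
}

# Convert a video to GIF: to_gif INPUT OUTPUT [FPS] [WIDTH]
to_gif() {
    ffmpeg -i "$1" -vf "$(gif_filter "$3" "$4")" "$2"
}
```

For example, `to_gif input.mp4 output.gif 10 320` trades smoothness and size for a smaller file; lowering the frame rate and width usually has the largest effect on GIF size.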

Other useful ffmpeg commands


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.