COMPOSITION

-

Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental process

Read more: Christopher Butler – Understanding the Eye-Mind Connection – Vision is a mental processhttps://www.chrbutler.com/understanding-the-eye-mind-connection

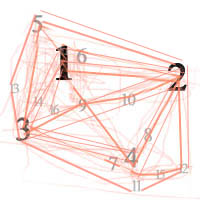

The intricate relationship between the eyes and the brain, often termed the eye-mind connection, reveals that vision is predominantly a cognitive process. This understanding has profound implications for fields such as design, where capturing and maintaining attention is paramount. This essay delves into the nuances of visual perception, the brain’s role in interpreting visual data, and how this knowledge can be applied to effective design strategies.

This cognitive aspect of vision is evident in phenomena such as optical illusions, where the brain interprets visual information in a way that contradicts physical reality. These illusions underscore that what we “see” is not merely a direct recording of the external world but a constructed experience shaped by cognitive processes.

Understanding the cognitive nature of vision is crucial for effective design. Designers must consider how the brain processes visual information to create compelling and engaging visuals. This involves several key principles:

- Attention and Engagement

- Visual Hierarchy

- Cognitive Load Management

- Context and Meaning

-

HuggingFace ai-comic-factory – a FREE AI Comic Book Creator

Read more: HuggingFace ai-comic-factory – a FREE AI Comic Book Creatorhttps://huggingface.co/spaces/jbilcke-hf/ai-comic-factory

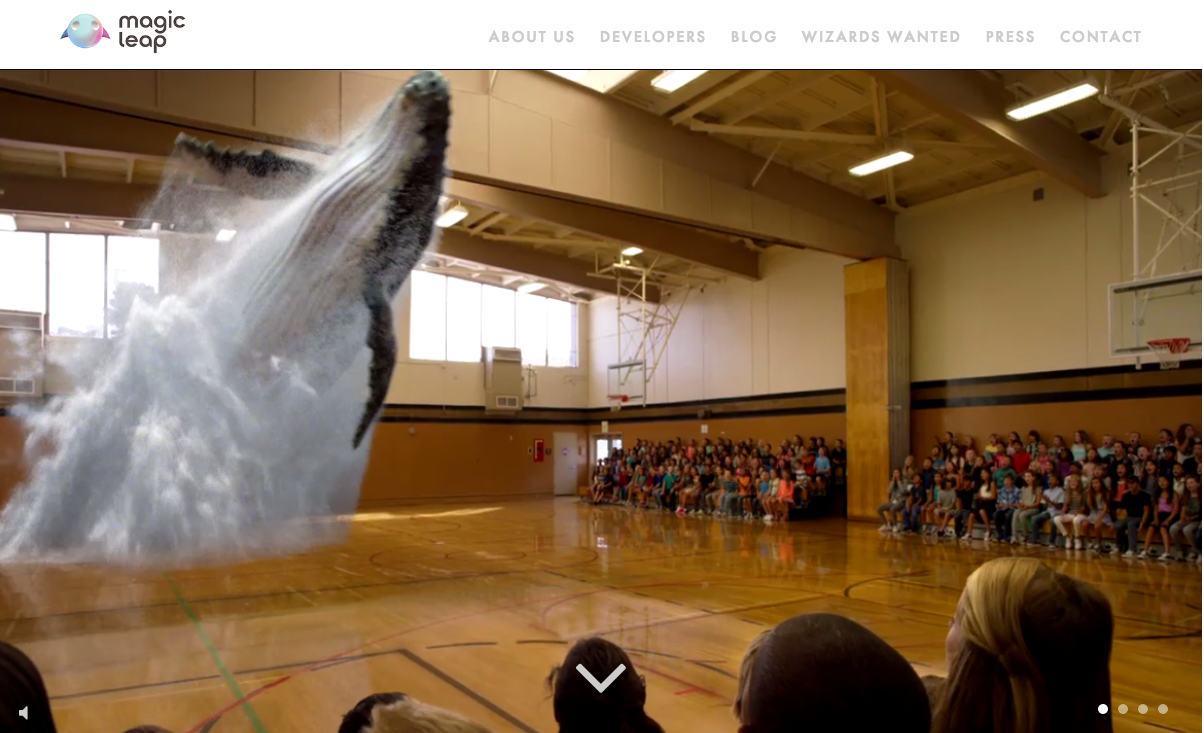

this is the epic story of a group of talented digital artists trying to overcame daily technical challenges to achieve incredibly photorealistic projects of monsters and aliens

DESIGN

-

Kristina Kashtanova – “This is how GPT-4 sees and hears itself”

Read more: Kristina Kashtanova – “This is how GPT-4 sees and hears itself”“I used GPT-4 to describe itself. Then I used its description to generate an image, a video based on this image and a soundtrack.

Tools I used: GPT-4, Midjourney, Kaiber AI, Mubert, RunwayML

This is the description I used that GPT-4 had of itself as a prompt to text-to-image, image-to-video, and text-to-music. I put the video and sound together in RunwayML.

GPT-4 described itself as: “Imagine a sleek, metallic sphere with a smooth surface, representing the vast knowledge contained within the model. The sphere emits a soft, pulsating glow that shifts between various colors, symbolizing the dynamic nature of the AI as it processes information and generates responses. The sphere appears to float in a digital environment, surrounded by streams of data and code, reflecting the complex algorithms and computing power behind the AI”

COLOR

-

OLED vs QLED – What TV is better?

Read more: OLED vs QLED – What TV is better?Supported by LG, Philips, Panasonic and Sony sell the OLED system TVs.

OLED stands for “organic light emitting diode.”

It is a fundamentally different technology from LCD, the major type of TV today.

OLED is “emissive,” meaning the pixels emit their own light.Samsung is branding its best TVs with a new acronym: “QLED”

QLED (according to Samsung) stands for “quantum dot LED TV.”

It is a variation of the common LED LCD, adding a quantum dot film to the LCD “sandwich.”

QLED, like LCD, is, in its current form, “transmissive” and relies on an LED backlight.OLED is the only technology capable of absolute blacks and extremely bright whites on a per-pixel basis. LCD definitely can’t do that, and even the vaunted, beloved, dearly departed plasma couldn’t do absolute blacks.

QLED, as an improvement over OLED, significantly improves the picture quality. QLED can produce an even wider range of colors than OLED, which says something about this new tech. QLED is also known to produce up to 40% higher luminance efficiency than OLED technology. Further, many tests conclude that QLED is far more efficient in terms of power consumption than its predecessor, OLED.

(more…) -

Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through Projectors

Read more: Brett Jones / Phil Reyneri (Lightform) / Philipp7pc: The study of Projection Mapping through ProjectorsVideo Projection Tool Software

https://hcgilje.wordpress.com/vpt/https://www.projectorpoint.co.uk/news/how-bright-should-my-projector-be/

http://www.adwindowscreens.com/the_calculator/

heavym

https://heavym.net/en/MadMapper

https://madmapper.com/ -

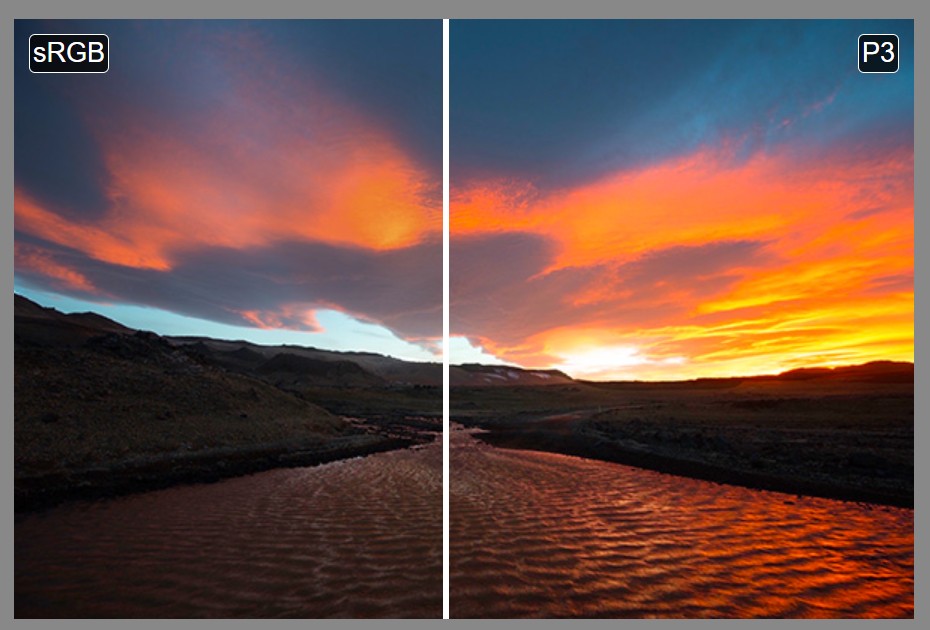

Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?

Read more: Colormaxxing – What if I told you that rgb(255, 0, 0) is not actually the reddest red you can have in your browser?https://karuna.dev/colormaxxing

https://webkit.org/blog-files/color-gamut/comparison.html

https://oklch.com/#70,0.1,197,100

-

Yasuharu YOSHIZAWA – Comparison of sRGB vs ACREScg in Nuke

Read more: Yasuharu YOSHIZAWA – Comparison of sRGB vs ACREScg in NukeAnswering the question that is often asked, “Do I need to use ACEScg to display an sRGB monitor in the end?” (Demonstration shown at an in-house seminar)

Comparison of scanlineRender output with extreme color lights on color charts with sRGB/ACREScg in color – OCIO -working space in NukeDownload the Nuke script:

-

Composition – cinematography Cheat Sheet

Read more: Composition – cinematography Cheat Sheet

Where is our eye attracted first? Why?

Size. Focus. Lighting. Color.

Size. Mr. White (Harvey Keitel) on the right.

Focus. He’s one of the two objects in focus.

Lighting. Mr. White is large and in focus and Mr. Pink (Steve Buscemi) is highlighted by

a shaft of light.

Color. Both are black and white but the read on Mr. White’s shirt now really stands out.

(more…)

What type of lighting? -

The Maya civilization and the color blue

Read more: The Maya civilization and the color blueMaya blue is a highly unusual pigment because it is a mix of organic indigo and an inorganic clay mineral called palygorskite.

Echoing the color of an azure sky, the indelible pigment was used to accentuate everything from ceramics to human sacrifices in the Late Preclassic period (300 B.C. to A.D. 300).

A team of researchers led by Dean Arnold, an adjunct curator of anthropology at the Field Museum in Chicago, determined that the key to Maya blue was actually a sacred incense called copal.

By heating the mixture of indigo, copal and palygorskite over a fire, the Maya produced the unique pigment, he reported at the time.

LIGHTING

-

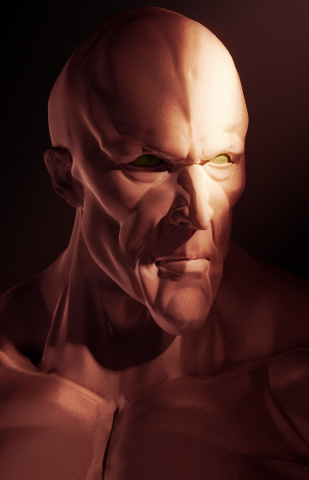

3D Lighting Tutorial by Amaan Kram

Read more: 3D Lighting Tutorial by Amaan Kramhttp://www.amaanakram.com/lightingT/part1.htm

The goals of lighting in 3D computer graphics are more or less the same as those of real world lighting.

Lighting serves a basic function of bringing out, or pushing back the shapes of objects visible from the camera’s view.

It gives a two-dimensional image on the monitor an illusion of the third dimension-depth.But it does not just stop there. It gives an image its personality, its character. A scene lit in different ways can give a feeling of happiness, of sorrow, of fear etc., and it can do so in dramatic or subtle ways. Along with personality and character, lighting fills a scene with emotion that is directly transmitted to the viewer.

Trying to simulate a real environment in an artificial one can be a daunting task. But even if you make your 3D rendering look absolutely photo-realistic, it doesn’t guarantee that the image carries enough emotion to elicit a “wow” from the people viewing it.

Making 3D renderings photo-realistic can be hard. Putting deep emotions in them can be even harder. However, if you plan out your lighting strategy for the mood and emotion that you want your rendering to express, you make the process easier for yourself.

Each light source can be broken down in to 4 distinct components and analyzed accordingly.

· Intensity

· Direction

· Color

· SizeThe overall thrust of this writing is to produce photo-realistic images by applying good lighting techniques.

-

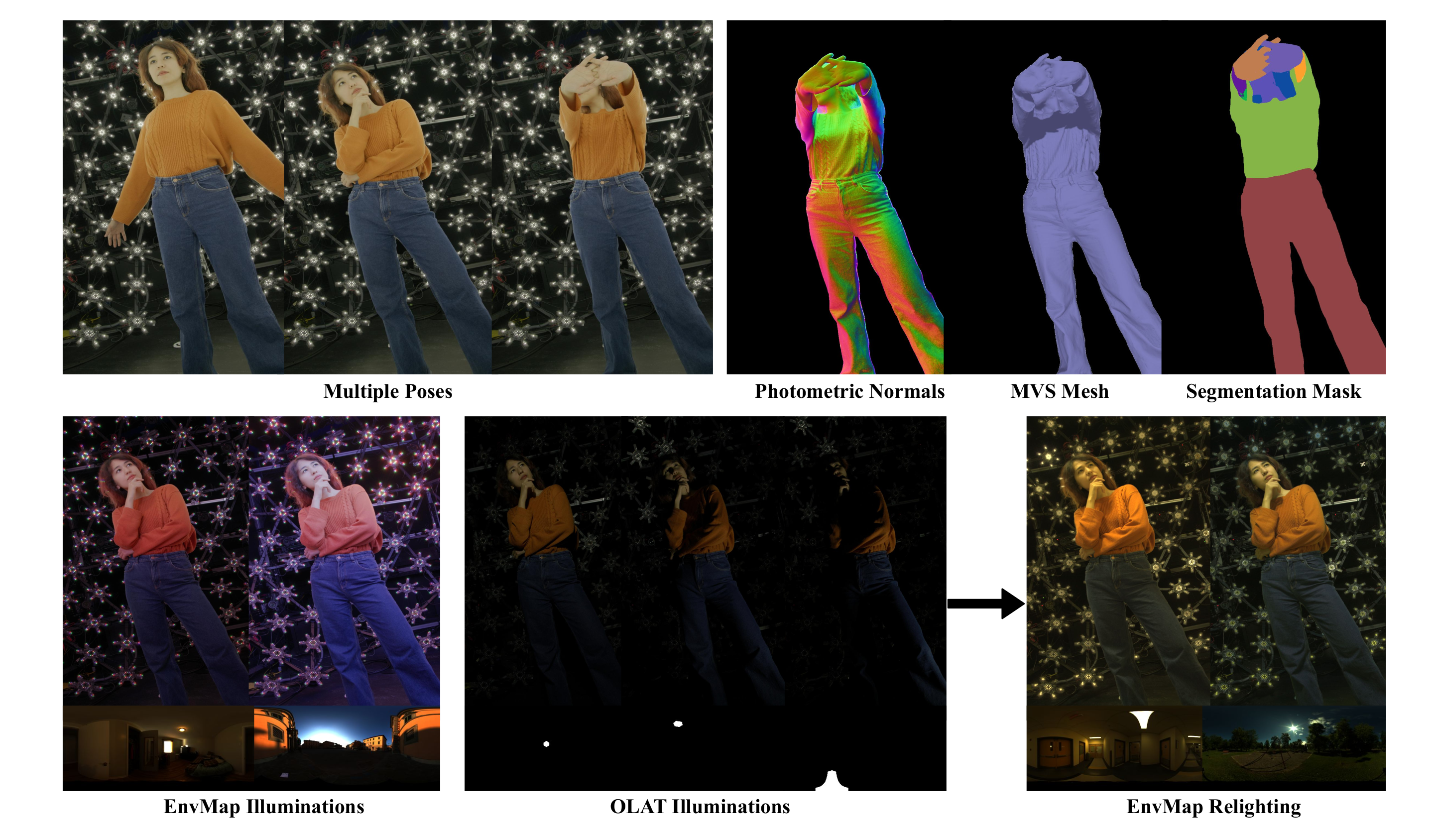

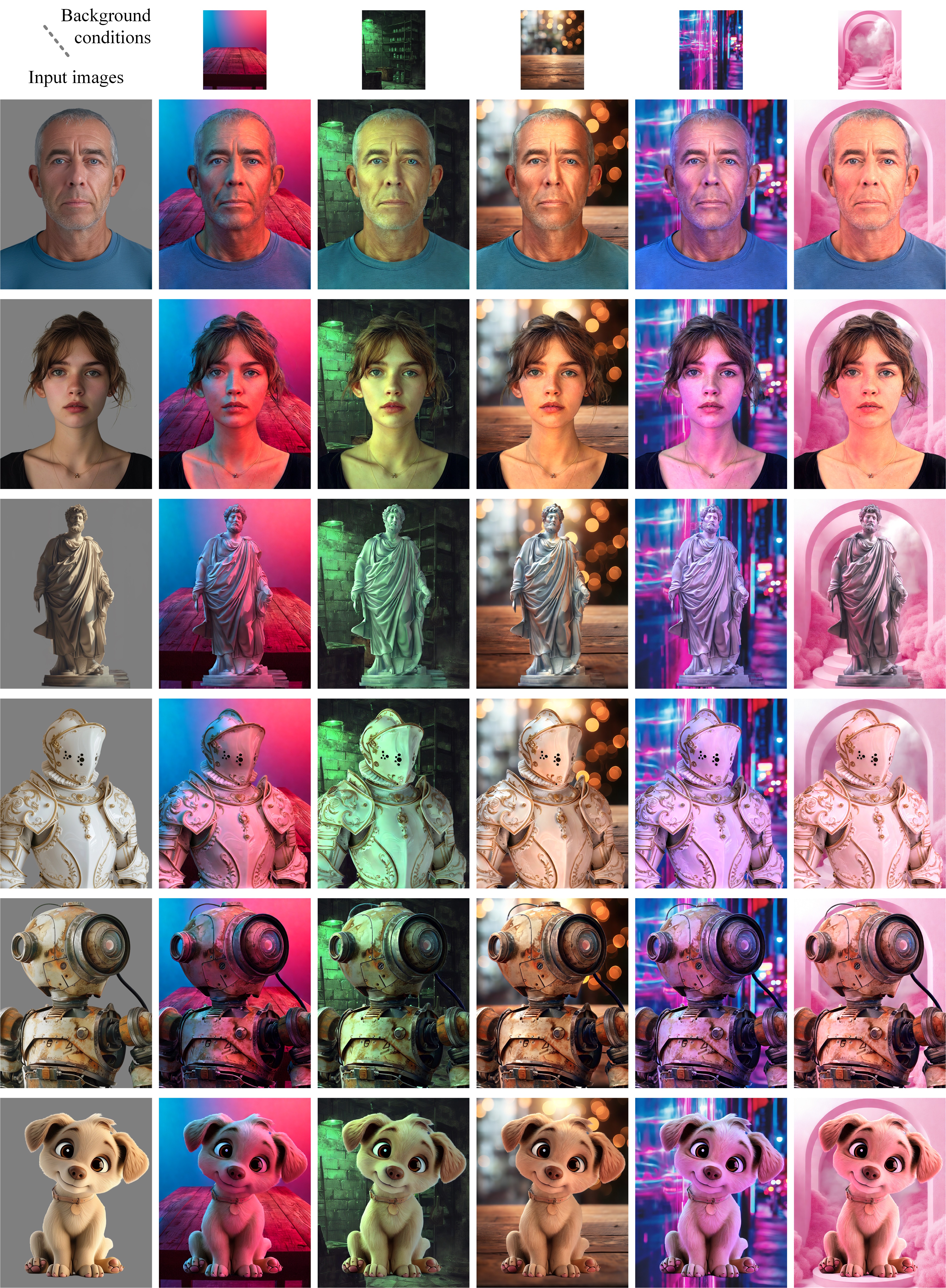

ICLight – Krea and ComfyUI light editing

Read more: ICLight – Krea and ComfyUI light editinghttps://drive.google.com/drive/folders/16Aq1mqZKP-h8vApaN4FX5at3acidqPUv

https://github.com/lllyasviel/IC-Light

https://generativematte.blogspot.com/2025/03/comfyui-ic-light-relighting-exploration.html

Workflow Local copy

-

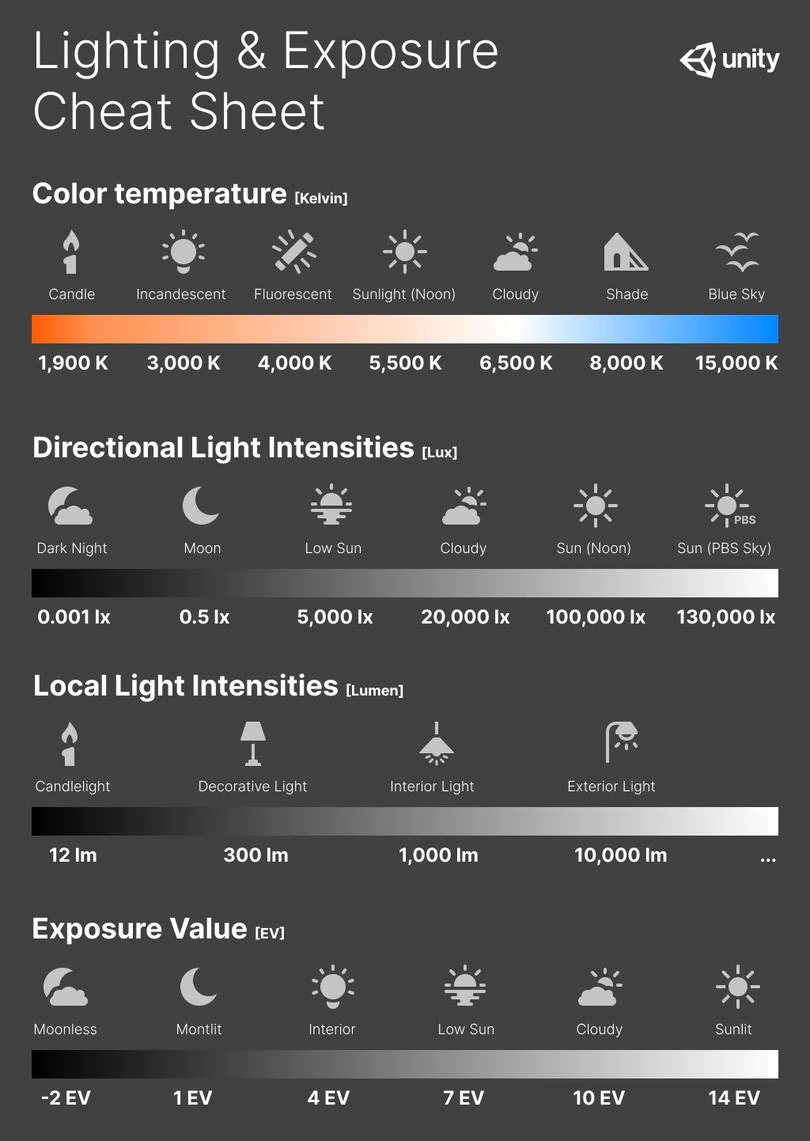

Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurements

Read more: Photography basics: Exposure Value vs Photographic Exposure vs Il/Luminance vs Pixel luminance measurementsAlso see: https://www.pixelsham.com/2015/05/16/how-aperture-shutter-speed-and-iso-affect-your-photos/

In photography, exposure value (EV) is a number that represents a combination of a camera’s shutter speed and f-number, such that all combinations that yield the same exposure have the same EV (for any fixed scene luminance).

The EV concept was developed in an attempt to simplify choosing among combinations of equivalent camera settings. Although all camera settings with the same EV nominally give the same exposure, they do not necessarily give the same picture. EV is also used to indicate an interval on the photographic exposure scale. 1 EV corresponding to a standard power-of-2 exposure step, commonly referred to as a stop

EV 0 corresponds to an exposure time of 1 sec and a relative aperture of f/1.0. If the EV is known, it can be used to select combinations of exposure time and f-number.Note EV does not equal to photographic exposure. Photographic Exposure is defined as how much light hits the camera’s sensor. It depends on the camera settings mainly aperture and shutter speed. Exposure value (known as EV) is a number that represents the exposure setting of the camera.

Thus, strictly, EV is not a measure of luminance (indirect or reflected exposure) or illuminance (incidentl exposure); rather, an EV corresponds to a luminance (or illuminance) for which a camera with a given ISO speed would use the indicated EV to obtain the nominally correct exposure. Nonetheless, it is common practice among photographic equipment manufacturers to express luminance in EV for ISO 100 speed, as when specifying metering range or autofocus sensitivity.

The exposure depends on two things: how much light gets through the lenses to the camera’s sensor and for how long the sensor is exposed. The former is a function of the aperture value while the latter is a function of the shutter speed. Exposure value is a number that represents this potential amount of light that could hit the sensor. It is important to understand that exposure value is a measure of how exposed the sensor is to light and not a measure of how much light actually hits the sensor. The exposure value is independent of how lit the scene is. For example a pair of aperture value and shutter speed represents the same exposure value both if the camera is used during a very bright day or during a dark night.

Each exposure value number represents all the possible shutter and aperture settings that result in the same exposure. Although the exposure value is the same for different combinations of aperture values and shutter speeds the resulting photo can be very different (the aperture controls the depth of field while shutter speed controls how much motion is captured).

EV 0.0 is defined as the exposure when setting the aperture to f-number 1.0 and the shutter speed to 1 second. All other exposure values are relative to that number. Exposure values are on a base two logarithmic scale. This means that every single step of EV – plus or minus 1 – represents the exposure (actual light that hits the sensor) being halved or doubled.Formulas

(more…)

COLLECTIONS

| Featured AI

| Design And Composition

| Explore posts

POPULAR SEARCHES

unreal | pipeline | virtual production | free | learn | photoshop | 360 | macro | google | nvidia | resolution | open source | hdri | real-time | photography basics | nuke

FEATURED POSTS

-

59 AI Filmmaking Tools For Your Workflow

-

Eddie Yoon – There’s a big misconception about AI creative

-

Glossary of Lighting Terms – cheat sheet

-

Top 3D Printing Website Resources

-

Blender VideoDepthAI – Turn any video into 3D Animated Scenes

-

GretagMacbeth Color Checker Numeric Values and Middle Gray

-

QR code logos

-

Generative AI Glossary / AI Dictionary / AI Terminology

Social Links

DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.