COMPOSITION

DESIGN

-

Mike Wong – AtoMeow – A Blue noise image stippling in Processing


https://github.com/mwkm/atoMeow

https://www.shadertoy.com/view/7s3XzX

This demo is created for coders who are familiar with this awesome creative coding platform. You may quickly modify the code to work for video, or to stipple your own Processing drawings by turning them into a PImage and running the simulation. This demo code also serves as a reference implementation of my article "Blue noise sampling using an N-body simulation-based method". If you are interested in 2.5D, you may mod the code to achieve what I discussed in this artist-friendly article.

Convert your video to a dotted noise.

-

How to paint a boardgame miniatures

Steps:

- soap wash cleaning

- primer

- base-coat layer (black/white)

- detailing

- washing aka shade (could be done after highlighting)

- highlights aka dry brushing (could be done after washing)

- varnish (gloss/satin/matte)

COLOR

-

Victor Perez – The Color Management Handbook for Visual Effects Artists

Digital Color Principles, Color Management Fundamentals & ACES Workflows

-

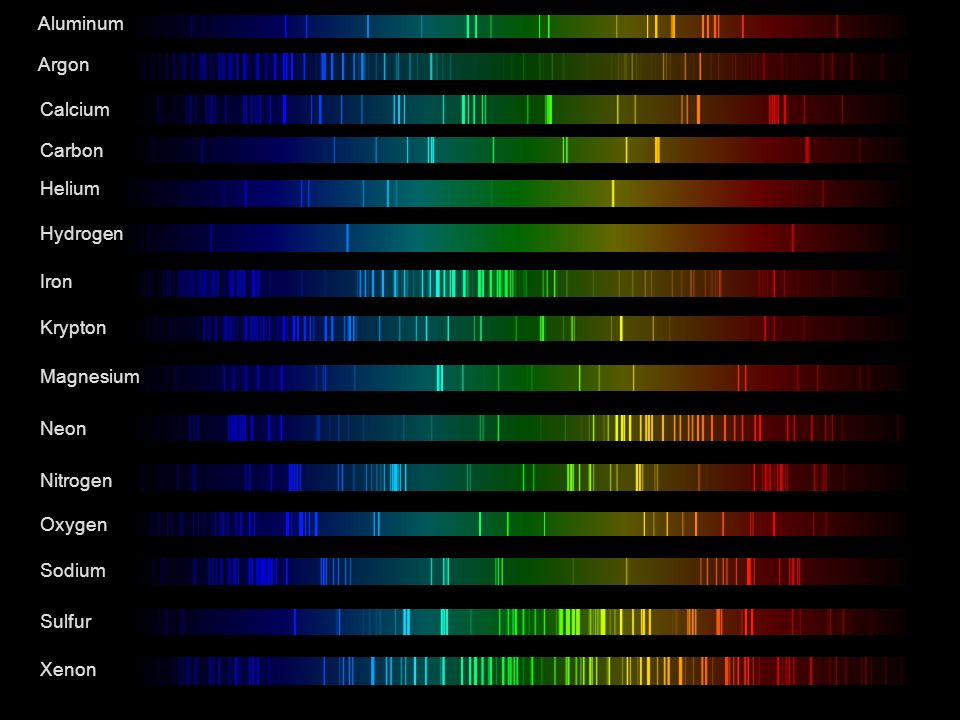

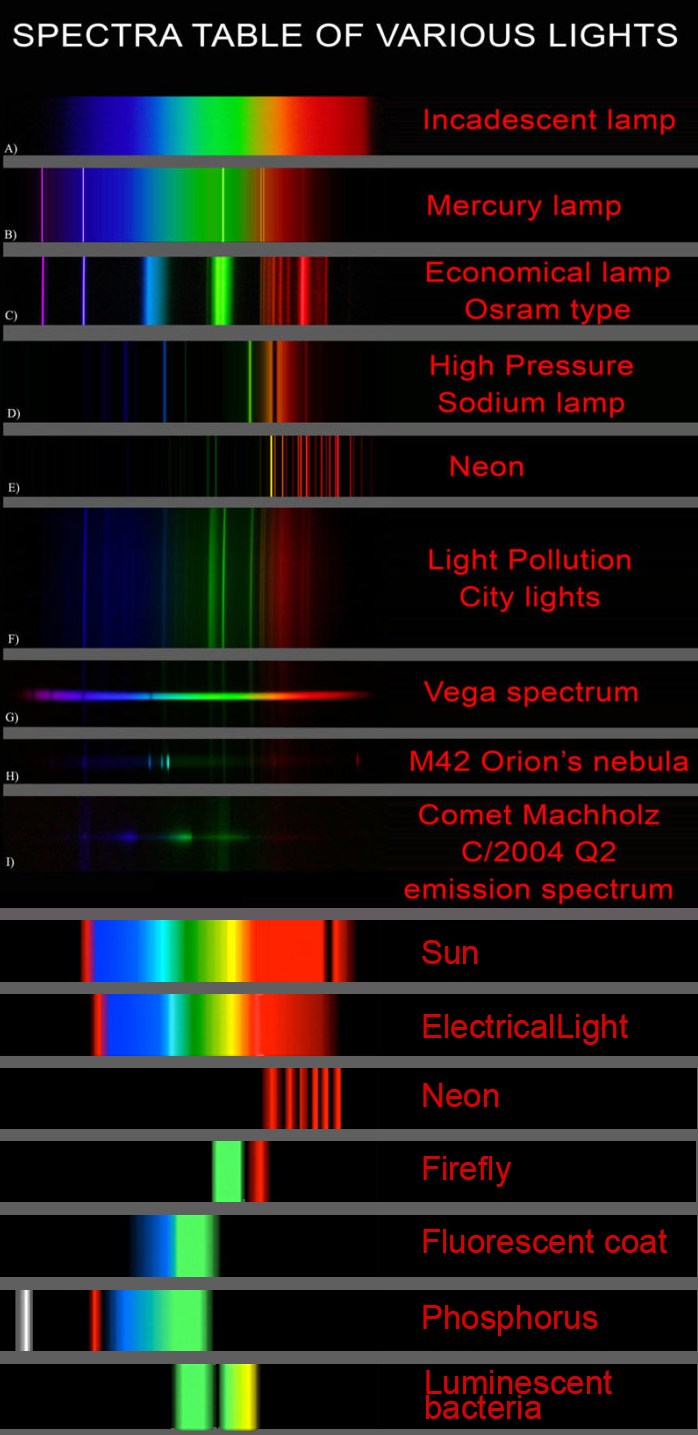

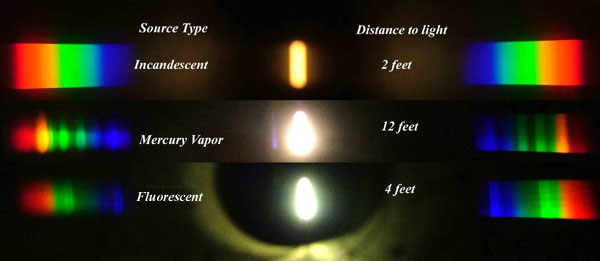

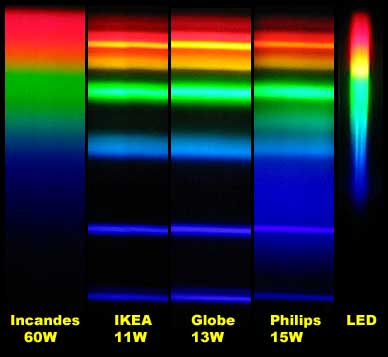

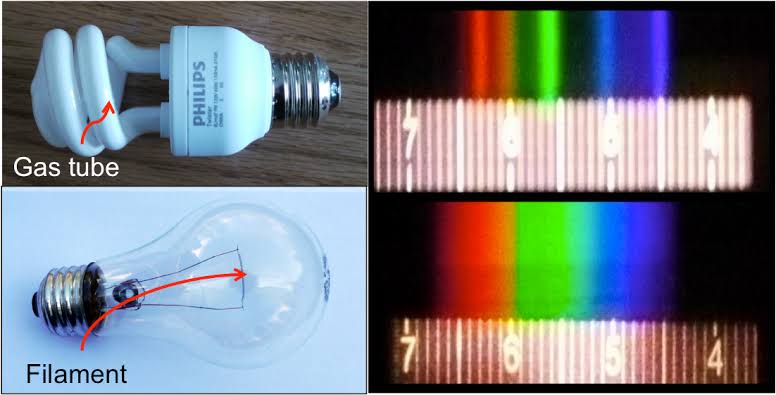

Space bodies’ components and light spectroscopy

www.plutorules.com/page-111-space-rocks.html

This helps us understand the composition of components in and on solar system bodies.

Dips in the observed light spectrum, also known as lines of absorption, occur as gases absorb energy from light at specific points along the spectrum.

These dips or darkened zones (lines of absorption) leave a fingerprint that identifies elements and compounds.

In this image, the bands that were dark in absorption appear instead as bright lines of emission, which occur when the light is emitted by the material rather than reflected (and partly absorbed).

Lines of absorption

Lines of emission

Lines of emission
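The fingerprint idea above can be sketched as a simple lookup: each observed dip is matched to the nearest known line within a tolerance. This is only an illustration. The reference wavelengths are rounded values for a few well-known lines, and the observed dips, the tolerance, and the function name `identify_dips` are assumptions made for the example, not something taken from the linked page.

```python
# Rounded rest wavelengths (in nanometres) of a few well-known spectral lines.
KNOWN_LINES_NM = {
    "H-alpha (hydrogen)": 656.28,
    "H-beta (hydrogen)": 486.13,
    "Na D2 (sodium)": 589.00,
    "Na D1 (sodium)": 589.59,
    "Ca II K (calcium)": 393.37,
    "Ca II H (calcium)": 396.85,
}


def identify_dips(dips_nm, tolerance_nm=0.2):
    """Map each observed dip to the nearest known line, or None if too far."""
    matches = {}
    for dip in dips_nm:
        # Pick the reference line whose wavelength is closest to this dip.
        name, ref = min(KNOWN_LINES_NM.items(), key=lambda kv: abs(kv[1] - dip))
        matches[dip] = name if abs(ref - dip) <= tolerance_nm else None
    return matches


if __name__ == "__main__":
    # Hypothetical dip positions, for illustration only.
    for dip, name in identify_dips([656.3, 589.0, 500.0]).items():
        print(dip, "->", name or "no match")
```

A real pipeline would also have to correct for Doppler shift and instrument resolution before matching, which this sketch ignores.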

-

Photography basics: Why Use a (MacBeth) Color Chart?

Start here: https://www.pixelsham.com/2013/05/09/gretagmacbeth-color-checker-numeric-values/

https://www.studiobinder.com/blog/what-is-a-color-checker-tool/

In LightRoom

in Final Cut

in Nuke

Note: In Foundry’s Nuke, the software will map 18% gray to whatever your center f/stop is set to in the viewer settings (f/8 by default… change that to EV by following the instructions below).

You can experiment with this by attaching an Exposure node to a Constant set to 0.18, setting your viewer read-out to Spotmeter, and adjusting the stops in the node up and down. You will see that a full stop up or down gives you the next value on the aperture scale (f/8, f/11, f/16, etc.). One stop doubles or halves the amount of light that hits the filmback/CCD, so everything works in powers of 2.

So starting with 0.18 in your Constant, raising it by a stop gives 0.36 as a floating-point number (in linear space), while the f-stop readout becomes f/11, and so on. If you set your center stop to 0 (see below), you get a relative readout in EVs, where EV 0 again equals the 18% gray Constant.

In other words, setting the center f-stop to 0 means that in a neutral plate, the middle gray of the Macbeth chart equals exposure value 0. EV 0 corresponds to an exposure time of 1 sec and an aperture of f/1.0.

This usually puts the sun around EV 12-17 and the sky around EV 1-4, depending on cloud coverage.

To switch Nuke’s SpotMeter to return the EV of an image, click on the main viewport and press s to open the viewer’s properties. Set the center f-stop to 0 there, and the SpotMeter in the viewport will change from aperture f-stops to EV.
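The arithmetic behind these readouts can be sketched in a few lines of Python. This is not Nuke's implementation, only a minimal model of the behavior described above: it assumes 18% gray sits at the center f-stop, that each stop doubles the linear value, and that each stop multiplies the f-number by the square root of 2. The function names are made up for the example.

```python
import math

MID_GRAY = 0.18  # linear value of 18% gray


def apply_stops(linear_value, stops):
    """Raise or lower a linear value by a number of stops (powers of 2)."""
    return linear_value * 2.0 ** stops


def ev_readout(linear_value):
    """Relative EV when the center f-stop is 0: stops away from 18% gray."""
    return math.log2(linear_value / MID_GRAY)


def fstop_readout(linear_value, center_fstop=8.0):
    """Aperture-style readout: one stop scales the f-number by sqrt(2)."""
    return center_fstop * math.sqrt(2.0) ** ev_readout(linear_value)


if __name__ == "__main__":
    for stops in (-1, 0, 1, 2):
        value = apply_stops(MID_GRAY, stops)
        print(f"{stops:+d} stop: linear {value:.3f}, "
              f"f/{fstop_readout(value):.1f}, EV {ev_readout(value):+.0f}")
```

The computed f-numbers are the exact geometric values (for example about 11.3 one stop above f/8), which the aperture scale rounds to the nominal f/11.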

-

Pattern generators

http://qrohlf.com/trianglify-generator/

https://halftonepro.com/app/polygons#

https://mattdesl.svbtle.com/generative-art-with-nodejs-and-canvas

https://www.patterncooler.com/

http://permadi.com/java/spaint/spaint.html

https://dribbble.com/shots/1847313-Kaleidoscope-Generator-PSD

http://eskimoblood.github.io/gerstnerizer/

http://www.stripegenerator.com/

http://btmills.github.io/geopattern/geopattern.html

http://fractalarchitect.net/FA4-Random-Generator.html

https://sciencevsmagic.net/fractal/#0605,0000,3,2,0,1,2

https://sites.google.com/site/mandelbulber/home

LIGHTING

-

7 Easy Portrait Lighting Setups

Butterfly

Loop

Rembrandt

Split

Rim

Broad

Short

-

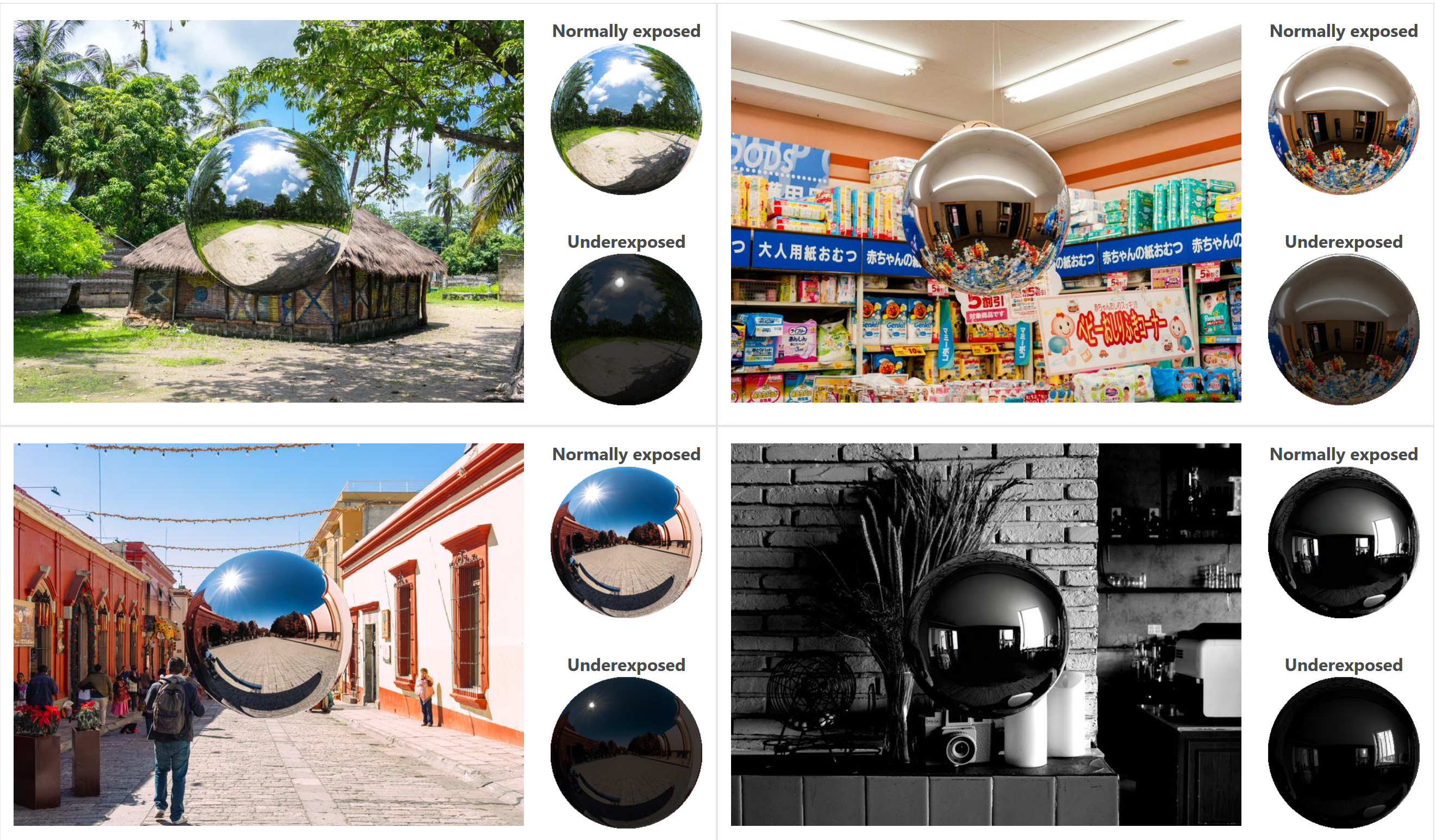

DiffusionLight: HDRI Light Probes for Free by Painting a Chrome Ball

https://diffusionlight.github.io/

https://github.com/DiffusionLight/DiffusionLight

https://github.com/DiffusionLight/DiffusionLight?tab=MIT-1-ov-file#readme

https://colab.research.google.com/drive/15pC4qb9mEtRYsW3utXkk-jnaeVxUy-0S

“a simple yet effective technique to estimate lighting in a single input image. Current techniques rely heavily on HDR panorama datasets to train neural networks to regress an input with limited field-of-view to a full environment map. However, these approaches often struggle with real-world, uncontrolled settings due to the limited diversity and size of their datasets. To address this problem, we leverage diffusion models trained on billions of standard images to render a chrome ball into the input image. Despite its simplicity, this task remains challenging: the diffusion models often insert incorrect or inconsistent objects and cannot readily generate images in HDR format. Our research uncovers a surprising relationship between the appearance of chrome balls and the initial diffusion noise map, which we utilize to consistently generate high-quality chrome balls. We further fine-tune an LDR diffusion model (Stable Diffusion XL) with LoRA, enabling it to perform exposure bracketing for HDR light estimation. Our method produces convincing light estimates across diverse settings and demonstrates superior generalization to in-the-wild scenarios.”

-

Romain Chauliac – LightIt a lighting script for Maya and Arnold

LightIt is a script for Maya and Arnold that helps improve your lighting workflow with preset studio lighting components (lights, backdrop…), high-quality studio scenes and an HDRI library manager.

https://www.artstation.com/artwork/393emJ

-

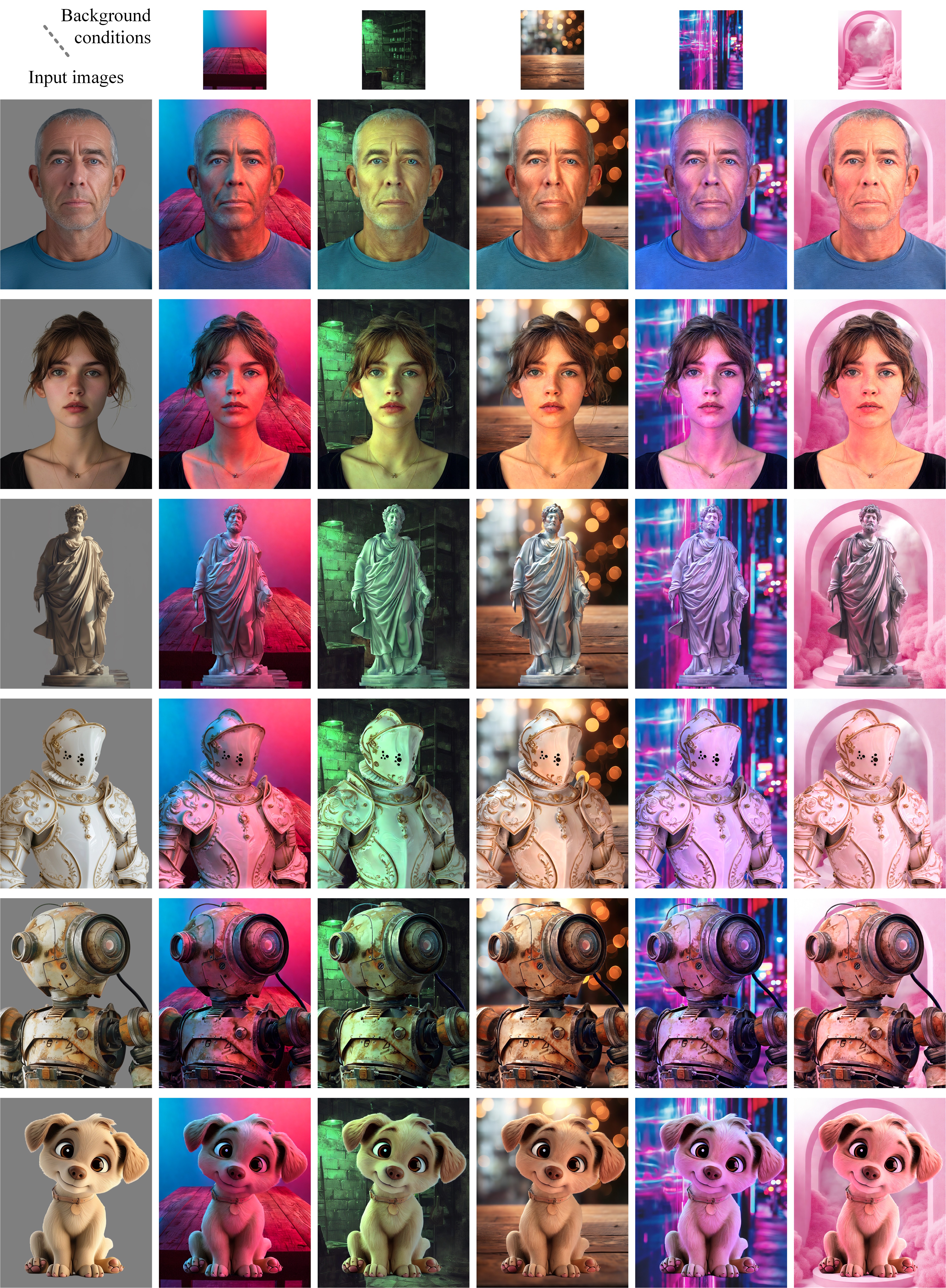

ICLight – Krea and ComfyUI light editing

https://drive.google.com/drive/folders/16Aq1mqZKP-h8vApaN4FX5at3acidqPUv

https://github.com/lllyasviel/IC-Light

https://generativematte.blogspot.com/2025/03/comfyui-ic-light-relighting-exploration.html

Workflow Local copy

-

Sun cone angle (angular diameter) as perceived by earth viewers

Also see:

https://www.pixelsham.com/2020/08/01/solid-angle-measures/

The cone angle of the sun refers to the angular diameter of the sun as observed from Earth, which is related to the apparent size of the sun in the sky.

The angular diameter of the sun, or the cone angle of the sunlight as perceived from Earth, is approximately 0.53 degrees on average. This value can vary slightly due to the elliptical nature of Earth’s orbit around the sun, but it generally stays within a narrow range.

Here’s a more precise breakdown:


- Average Angular Diameter: About 0.53 degrees (roughly 32 arcminutes)

- Minimum Angular Diameter: Approximately 0.52 degrees (when Earth is at aphelion, the farthest point from the sun)

- Maximum Angular Diameter: Approximately 0.54 degrees (when Earth is at perihelion, the closest point to the sun)

This angular diameter remains relatively constant throughout the day because the sun’s distance from Earth does not change significantly over a single day.

To summarize, the cone angle of the sun’s light, or its angular diameter, is typically around 0.53 degrees, regardless of the time of day.

https://en.wikipedia.org/wiki/Angular_diameter
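The figures above can be reproduced from basic geometry: a sphere of radius R seen from distance d subtends an angle of 2·asin(R/d). The short sketch below assumes commonly quoted rounded values for the solar radius and for Earth's perihelion, mean and aphelion distances. These constants are not taken from the post.

```python
import math

# Rounded reference values (assumptions for this sketch).
SUN_RADIUS_KM = 695_700.0
DISTANCES_KM = {
    "perihelion": 147.1e6,  # closest point to the sun
    "mean": 149.6e6,        # about 1 astronomical unit
    "aphelion": 152.1e6,    # farthest point from the sun
}


def angular_diameter_deg(radius_km, distance_km):
    """Angular diameter of a sphere seen from a given distance, in degrees."""
    return math.degrees(2.0 * math.asin(radius_km / distance_km))


if __name__ == "__main__":
    for label, distance in DISTANCES_KM.items():
        angle = angular_diameter_deg(SUN_RADIUS_KM, distance)
        print(f"{label}: {angle:.3f} degrees ({angle * 60.0:.1f} arcminutes)")
```

In a renderer, this angle is typically what you would enter as the cone or spread angle of a distant light standing in for the sun, although the exact parameter name and convention vary between packages.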

-


Disney’s Moana Island Scene – Free data set

https://www.disneyanimation.com/resources/moana-island-scene/

This data set contains everything necessary to render a version of the Motunui island featured in the 2016 film Moana.
