RANDOM POSTS

-

Acting Upward

A growing community of collaborators dedicated to helping actors, artists & filmmakers gain experience & improve their craft.

-

Crypto Mining Attack via ComfyUI/Ultralytics in 2024

https://github.com/ultralytics/ultralytics/issues/18037

zopieux on Dec 5, 2024: Ultralytics was attacked (or did it on purpose; waiting for a post-mortem there). Version 8.3.41 contains nefarious code that downloads and runs a crypto miner hosted as a GitHub blob.
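
As a rough illustration of acting on that report, the sketch below checks whether the `ultralytics` package installed in the current Python environment matches the release named in the comment. The flagged list contains only 8.3.41 because that is the only version mentioned here; whether other releases were affected is not covered, so treat the list as an assumption to be updated from the linked issue. The helper names `is_flagged` and `installed_version` are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Assumption: only the release named in the quoted comment is listed.
# Extend this set after reading the linked issue thread.
FLAGGED_VERSIONS = {"8.3.41"}


def is_flagged(installed, flagged=FLAGGED_VERSIONS):
    """Return True if the given version string is in the flagged set."""
    return installed is not None and installed.strip() in flagged


def installed_version(package="ultralytics"):
    """Return the installed version of a package, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    if found is None:
        print("ultralytics is not installed in this environment")
    elif is_flagged(found):
        print(f"ultralytics {found} matches a flagged release; uninstall it and review the issue thread")
    else:
        print(f"ultralytics {found} is not in the flagged list")
```

A version match only says which release is installed; it does not show whether the miner actually ran on the machine.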

-

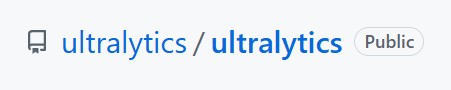

Luca Rossi – How to Help Underperformers

https://hybridhacker.email/p/how-to-help-underperformers

- Understand Performance is Systemic: Recognize that culture, systems, management, and individual traits impact performance.

- Address Underperformance Early: Use consistent feedback and the accountability dial (mention, invitation, conversation, boundary, limit).

- Provide Balanced Feedback: Reinforce, acknowledge, and correct behaviors.

- Use an Underperformance Checklist: Evaluate issues related to culture, systems, management, and individual traits.

-

CodeFormer Colab and Hugging Face: open-source, ground-breaking face restoration technique

https://huggingface.co/spaces/sczhou/CodeFormer

https://github.com/sczhou/CodeFormer

https://colab.research.google.com/drive/1m52PNveE4PBhYrecj34cnpEeiHcC5LTb

-

Lenticular kits

http://www.3dphotopro.com/lenticular_tool_kit.html#LTKS

http://www.microlens.com/pages/choosing_right_lens.htm

http://www.lpc-world.com/index.html

Lenticular lens sheets are designed to enhance certain image characteristics. Standard lenticular lens sheet designs fall into two primary categories, Flip and 3D, and the difference between them is the viewing angle. A lens sheet designed for 3D images has a narrow viewing angle (typically less than 30°). Conversely, a lens sheet designed for flip images has a wider viewing angle (typically greater than 40°).
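
The rule of thumb above can be written as a small lookup. This is only a sketch of the two "typical" thresholds quoted in the text, not a manufacturer specification; the function name `classify_lens_sheet` is hypothetical, and angles between 30° and 40° are reported as unclassified because the text does not assign them to either category.

```python
def classify_lens_sheet(viewing_angle_deg: float) -> str:
    """Map a lens sheet's viewing angle to the design category it typically suits."""
    if viewing_angle_deg <= 0:
        raise ValueError("viewing angle must be a positive number of degrees")
    if viewing_angle_deg < 30:
        return "3D"            # narrow viewing angle
    if viewing_angle_deg > 40:
        return "flip"          # wide viewing angle
    return "unclassified"      # 30° to 40°: not covered by the rule of thumb


if __name__ == "__main__":
    for angle in (25, 35, 49):
        print(angle, classify_lens_sheet(angle))
```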


DISCLAIMER – Links and images on this website may be protected by the respective owners’ copyright. All data submitted by users through this site shall be treated as freely available to share.