BREAKING NEWS

LATEST POSTS

-

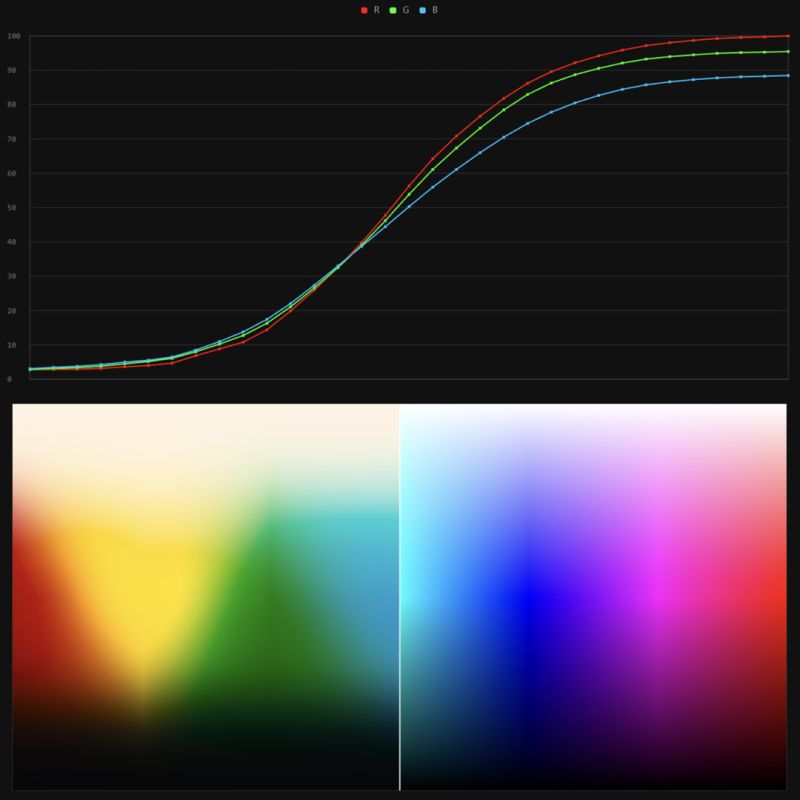

Stefan Ringelschwandtner – LUT Inspector tool

It lets you load any .cube LUT right in your browser, see the RGB curves, and use a split view on the Granger Test Image to compare the original vs. LUT-applied version in real time — perfect for spotting hue shifts, saturation changes, and contrast tweaks.

https://mononodes.com/lut-inspector/
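The tool's source isn't shown here, so this is not how LUT Inspector works internally. It is a minimal, generic sketch of what "applying a .cube LUT" means. The parser reads the `LUT_3D_SIZE` lattice, where the red index varies fastest in .cube data. The lookup then trilinearly interpolates between the eight lattice points surrounding each input color. The sketch assumes the default 0..1 input domain and ignores `DOMAIN_MIN`/`DOMAIN_MAX`, `TITLE`, and 1D LUTs. `make_cube` is a hypothetical helper for building test LUTs.

```python
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]


def parse_cube(text: str) -> Tuple[int, List[RGB]]:
    """Parse a 3D .cube LUT into (size, table); table order has red varying fastest."""
    size = 0
    table: List[RGB] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        if line.startswith("LUT_3D_SIZE"):
            size = int(line.split()[1])
        elif line[0].isalpha():
            continue  # TITLE, DOMAIN_MIN/MAX, etc.: ignored in this sketch
        else:
            r, g, b = (float(v) for v in line.split()[:3])
            table.append((r, g, b))
    if size < 2 or len(table) != size ** 3:
        raise ValueError("not a valid 3D .cube LUT")
    return size, table


def apply_cube(size: int, table: List[RGB], rgb: RGB) -> RGB:
    """Map one RGB triple (assumed in 0..1) through the LUT with trilinear interpolation."""
    n = size - 1

    def locate(v: float) -> Tuple[int, float]:
        x = min(max(v, 0.0), 1.0) * n  # clamp, then scale to lattice units
        i = min(int(x), n - 1)          # lower lattice index
        return i, x - i                 # index and fractional offset

    (r0, fr), (g0, fg), (b0, fb) = (locate(c) for c in rgb)
    out = [0.0, 0.0, 0.0]
    # Blend the 8 surrounding lattice points, each weighted by its proximity.
    for dr, wr in ((0, 1 - fr), (1, fr)):
        for dg, wg in ((0, 1 - fg), (1, fg)):
            for db, wb in ((0, 1 - fb), (1, fb)):
                w = wr * wg * wb
                corner = table[(r0 + dr) + (g0 + dg) * size + (b0 + db) * size * size]
                for k in range(3):
                    out[k] += w * corner[k]
    return out[0], out[1], out[2]


def make_cube(size: int, fn: Callable[[float, float, float], RGB]) -> str:
    """Hypothetical helper: write a .cube file sampling fn on a size^3 lattice."""
    lines = [f"LUT_3D_SIZE {size}"]
    for b in range(size):
        for g in range(size):
            for r in range(size):  # red loops innermost, as in .cube files
                lines.append("%.6f %.6f %.6f" % fn(r / (size - 1), g / (size - 1), b / (size - 1)))
    return "\n".join(lines)


if __name__ == "__main__":
    # A 17-point "invert" LUT, then one lookup through it.
    size, table = parse_cube(make_cube(17, lambda r, g, b: (1 - r, 1 - g, 1 - b)))
    print(apply_cube(size, table, (0.25, 0.5, 0.75)))
```

Trilinear interpolation is a common default for 3D LUTs. Some applications use tetrahedral interpolation instead, which can give slightly different results on the same file.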

-

Python Automation – Beginner to Advanced Guide

WhatsApp Message Automation

Automating Instagram

Telegram Bot Creation

Email Automation with Python

PDF and Document Automation

-

Kelly Boesch – Static and Toward The Light

https://www.kellyboeschdesign.com

I was working on an album cover last night and got these really cool images in Midjourney, so I made a video out of it. Animated using Pika. Song made using Suno. Full version on my Bandcamp. It’s called Static.

https://www.linkedin.com/posts/kellyboesch_midjourney-keyframes-ai-activity-7359244714853736450-Wvcr

-

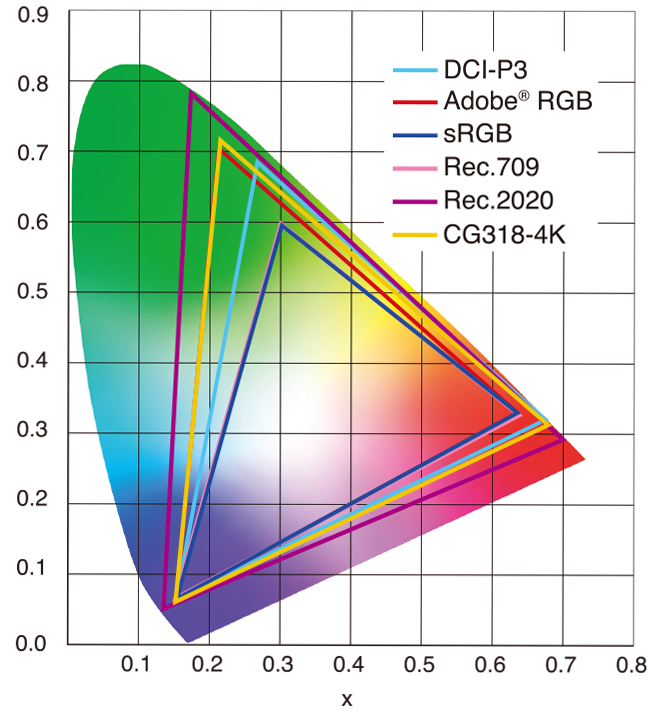

sRGB vs Rec. 709 – An Introduction and FFmpeg Implementations

1. Basic Comparison

- What they are

- sRGB: A standard “web”/computer-display RGB color space defined by IEC 61966-2-1. It’s used for most monitors, cameras, printers, and the vast majority of images on the Internet.

- Rec. 709: An HD-video color space defined by ITU-R BT.709. It’s the go-to standard for HDTV broadcasts, Blu-ray discs, and professional video pipelines.

- Why they exist

- sRGB: Ensures consistent colors across different consumer devices (PCs, phones, webcams).

- Rec. 709: Ensures consistent colors across video production and playback chains (cameras → editing → broadcast → TV).

- What you’ll see

- On your desktop or phone, images tagged sRGB will look “right” without extra tweaking.

- On an HDTV or video-editing timeline, footage tagged Rec. 709 will display accurate contrast and hue on broadcast-grade monitors.
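In FFmpeg, footage is "tagged Rec. 709" through color metadata in the output stream, which players read. The following is an illustrative sketch, not the FFmpeg implementation the post's title refers to. It builds an argument list that converts to 8-bit, studio-range BT.709 Y′CbCr with the `scale` filter's `out_color_matrix` and `out_range` options. It then writes the `bt709` tags with `-color_primaries`, `-color_trc`, `-colorspace`, and `-color_range`. The file names, the `libx264` encoder, and `yuv420p` are assumptions, and `rec709_cmd` is a hypothetical helper name.

```python
import shlex
from typing import List


def rec709_cmd(src: str, dst: str) -> List[str]:
    """Hypothetical helper: ffmpeg args that convert to BT.709 Y'CbCr and tag the stream."""
    return [
        "ffmpeg", "-i", src,
        # Pixel conversion: BT.709 matrix, limited (studio) range.
        "-vf", "scale=out_color_matrix=bt709:out_range=tv",
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        # Metadata only: tells players which primaries, transfer, matrix, and range to assume.
        "-color_primaries", "bt709",
        "-color_trc", "bt709",
        "-colorspace", "bt709",
        "-color_range", "tv",
        dst,
    ]


if __name__ == "__main__":
    cmd = rec709_cmd("input.mov", "output.mp4")
    print(shlex.join(cmd))
    # To actually run it (requires ffmpeg on PATH): subprocess.run(cmd, check=True)
```

The tag flags only write metadata. The pixels are converted by the `-vf` stage, so tags that don't match the actual pixel encoding make players misinterpret contrast and hue.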

2. Digging Deeper

| Feature | sRGB | Rec. 709 |
|---|---|---|
| White point | D65 (6504 K) | D65 (6504 K), same as sRGB |
| Primaries (x, y) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) | R: (0.640, 0.330), G: (0.300, 0.600), B: (0.150, 0.060) |
| Gamut size | Triangle on the CIE 1931 chart | Identical to sRGB |
| Gamma / transfer | Piecewise curve: linear toe near black, then a 2.4-exponent power segment; overall close to gamma 2.2 | Piecewise camera curve (OETF): linear toe (4.5·L below L = 0.018), then 1.099·L^0.45 - 0.099; the reference display (BT.1886) applies a 2.4 power |
| Matrix coefficients | N/A (used as pure RGB) | Y′ = 0.2126 R′ + 0.7152 G′ + 0.0722 B′ (Rec. 709 matrix) |
| Typical bit depth | 8-bit/channel (16-bit variants exist) | 8-bit/channel (10-bit in professional video) |
| Usage metadata | Tagged as “sRGB” in image files (PNG, JPEG, etc.) | Tagged as “bt709” in video containers (MP4, MOV) |
| Color range | Full-range RGB (0–255) | Studio-range Y′CbCr (Y′ 16–235, Cb/Cr 16–240) |
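The two transfer curves in the table can be written out directly. This sketch uses the published constants: the sRGB curve from IEC 61966-2-1 and the BT.709 camera OETF with its rounded 1.099 / 0.018 values. The display side is simplified to BT.1886 with a zero black level, which reduces to a pure 2.4 power. The function names are illustrative.

```python
def srgb_encode(linear: float) -> float:
    """sRGB encoding: linear light 0..1 -> encoded value 0..1."""
    if linear <= 0.0031308:
        return 12.92 * linear  # linear toe near black
    return 1.055 * linear ** (1 / 2.4) - 0.055


def srgb_decode(encoded: float) -> float:
    """Inverse of srgb_encode: encoded value 0..1 -> linear light 0..1."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def rec709_oetf(linear: float) -> float:
    """BT.709 camera OETF: linear scene light 0..1 -> encoded value 0..1."""
    if linear < 0.018:
        return 4.5 * linear  # linear toe near black
    return 1.099 * linear ** 0.45 - 0.099


def bt1886_eotf(encoded: float) -> float:
    """Simplified BT.1886 display EOTF (zero black level): a pure 2.4 power."""
    return encoded ** 2.4


if __name__ == "__main__":
    for light in (0.01, 0.18, 0.5, 1.0):
        print(f"linear {light:.2f}: sRGB {srgb_encode(light):.4f}  Rec.709 {rec709_oetf(light):.4f}")
```

With identical primaries and white point, the curves are where the two spaces actually diverge. For example, 18% linear gray encodes to about 0.46 in sRGB but about 0.41 with the Rec. 709 OETF.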

Why the Small Differences Matter
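The color-range row in the comparison table is one such small difference. Rec. 709 video stores Y′CbCr in studio range, so black is code 16 and white is code 235, rather than 0 and 255 as in full-range sRGB. The following is a minimal sketch of that quantization for 8-bit values. It assumes gamma-encoded R′G′B′ input in 0..1 and uses the Rec. 709 luma coefficients. The function name is illustrative.

```python
from typing import Tuple

# Rec. 709 luma coefficients; KG follows from KR + KG + KB = 1.
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB  # 0.7152


def rgb_to_ycbcr709_8bit(r: float, g: float, b: float) -> Tuple[int, int, int]:
    """Gamma-encoded R'G'B' in 0..1 -> 8-bit studio-range Y'CbCr (Y' 16-235, Cb/Cr 16-240)."""
    y = KR * r + KG * g + KB * b      # Y' in 0..1
    cb = (b - y) / (2 * (1 - KB))     # Cb in -0.5..0.5
    cr = (r - y) / (2 * (1 - KR))     # Cr in -0.5..0.5
    return (
        round(16 + 219 * y),          # 219 code values between black (16) and white (235)
        round(128 + 224 * cb),        # chroma centered on 128, spanning 16..240
        round(128 + 224 * cr),
    )


if __name__ == "__main__":
    for name, rgb in {"black": (0, 0, 0), "white": (1, 1, 1), "red": (1, 0, 0)}.items():
        print(name, rgb_to_ycbcr709_8bit(*rgb))
```

If a player treats these studio-range values as full range, black sits at 16 instead of 0 and white at 235 instead of 255. The picture then looks washed out. The reverse mismatch crushes shadows and clips highlights.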


FEATURED POSTS

-

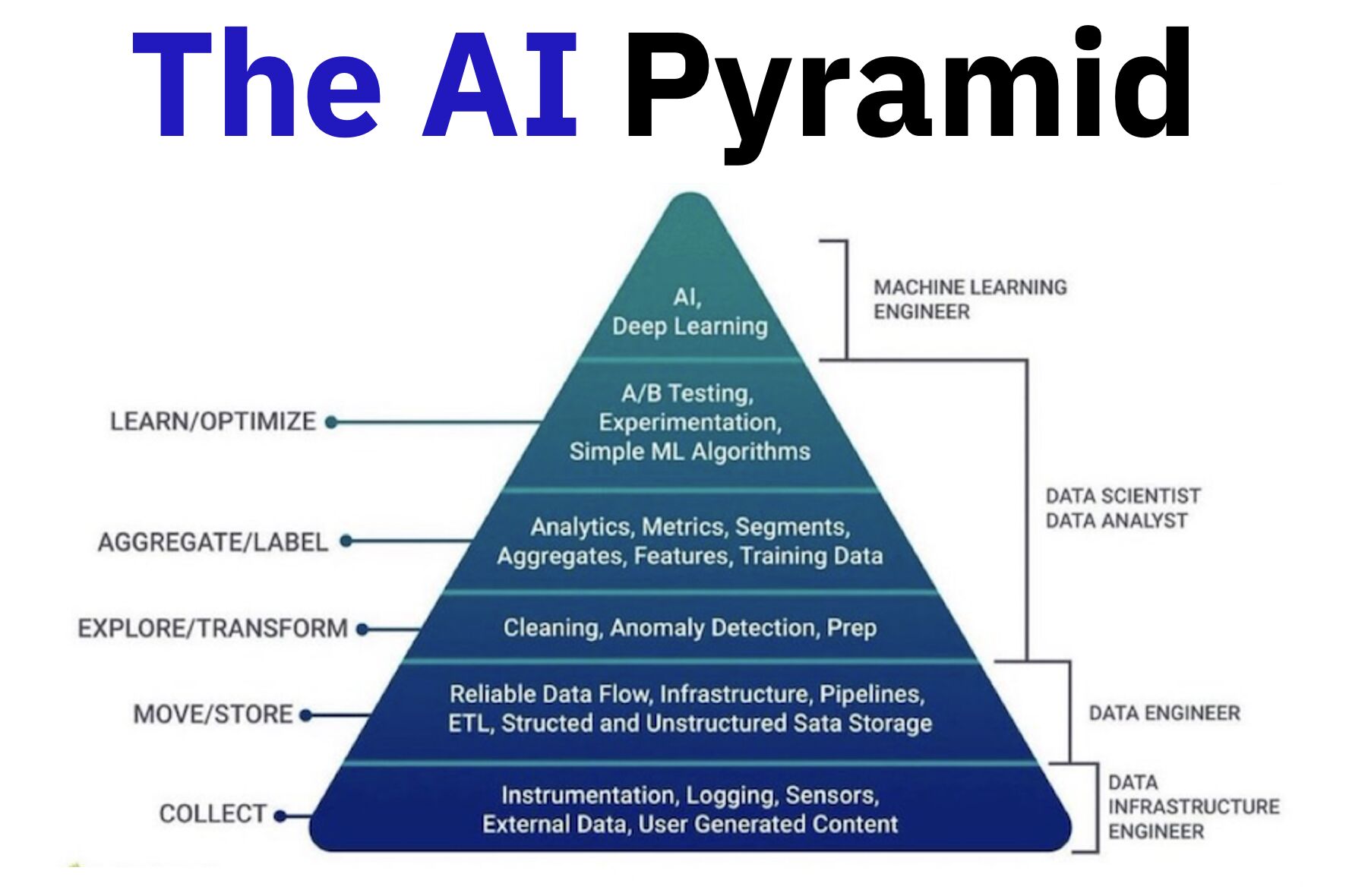

Andreas Horn – Want cutting edge AI?

**The building blocks of AI and essential processes:**

– Collect: Data from sensors, logs, and user input.

– Move/Store: Build infrastructure, pipelines, and reliable data flow.

– Explore/Transform: Clean, prep, and detect anomalies to make the data usable.

– Aggregate/Label: Add analytics, metrics, and labels to create training data.

– Learn/Optimize: Experiment, test, and train AI models.

**The layers of data and how they become intelligent:**

– Instrumentation and logging: Sensors, logs, and external data capture the raw inputs.

– Data flow and storage: Pipelines and infrastructure ensure smooth movement and reliable storage.

– Exploration and transformation: Data is cleaned, prepped, and anomalies are detected.

– Aggregation and labeling: Analytics, metrics, and labels create structured, usable datasets.

– Experimenting/AI/ML: Models are trained and optimized using the prepared data.

– AI insights and actions: Advanced AI generates predictions, insights, and decisions at the top.

**Who makes it happen and key roles:**

– Data Infrastructure Engineers: Build the foundation — collect, move, and store data.

– Data Engineers: Prep and transform the data into usable formats.

– Data Analysts & Scientists: Aggregate, label, and generate insights.

– Machine Learning Engineers: Optimize and deploy AI models.

**The magic of AI is in how these layers and roles work together. The stronger your foundation, the smarter your AI.**
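To make the layered flow concrete, here is a toy, single-file sketch that maps each stage of the post's list onto one function. Collect, Move/Store, Explore/Transform, Aggregate/Label, Learn/Optimize, and act on the result. Everything specific is hypothetical and not from the post: the sensor readings, the operator alarm log used as labels, the z-score outlier rule, and the "model" (a threshold halfway between the two class means).

```python
from statistics import mean, pstdev
from typing import Dict, List, Optional, Tuple

Row = Tuple[Optional[float], int]


def collect() -> Tuple[List[Optional[float]], List[int]]:
    """Collect: hypothetical sensor readings plus an operator alarm log (1 = alarm)."""
    readings = [20.1, 20.4, None, 19.8, 35.0, 20.0, 34.2, 20.3, 99.0, 34.8]
    alarms = [0, 0, 0, 0, 1, 0, 1, 0, 0, 1]
    return readings, alarms


def store(readings: List[Optional[float]], alarms: List[int]) -> List[Row]:
    """Move/Store: keep each reading next to its log entry (a list stands in for a database)."""
    return list(zip(readings, alarms))


def transform(rows: List[Row], z_max: float = 2.5) -> List[Tuple[float, int]]:
    """Explore/Transform: drop missing readings, then drop z-score outliers."""
    present = [(x, y) for x, y in rows if x is not None]
    xs = [x for x, _ in present]
    mu, sigma = mean(xs), pstdev(xs)
    return [(x, y) for x, y in present if abs(x - mu) <= z_max * sigma]


def aggregate(rows: List[Tuple[float, int]]) -> Dict[int, float]:
    """Aggregate/Label: per-label mean reading, the summary the model trains on."""
    return {label: mean(x for x, y in rows if y == label) for label in (0, 1)}


def learn(class_means: Dict[int, float]) -> float:
    """Learn/Optimize: a one-parameter model, the midpoint between class means."""
    return (class_means[0] + class_means[1]) / 2


def act(threshold: float, reading: float) -> str:
    """Insights/actions: apply the learned threshold to a new reading."""
    return "raise alarm" if reading > threshold else "ok"


if __name__ == "__main__":
    threshold = learn(aggregate(transform(store(*collect()))))
    print(f"learned threshold: {threshold:.2f}")
    print(act(threshold, 30.0), act(threshold, 22.0))
```

Each stage only consumes the previous stage's output. This mirrors the post's point that a weak early layer, such as unfiltered outliers, propagates into the learned model.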

-

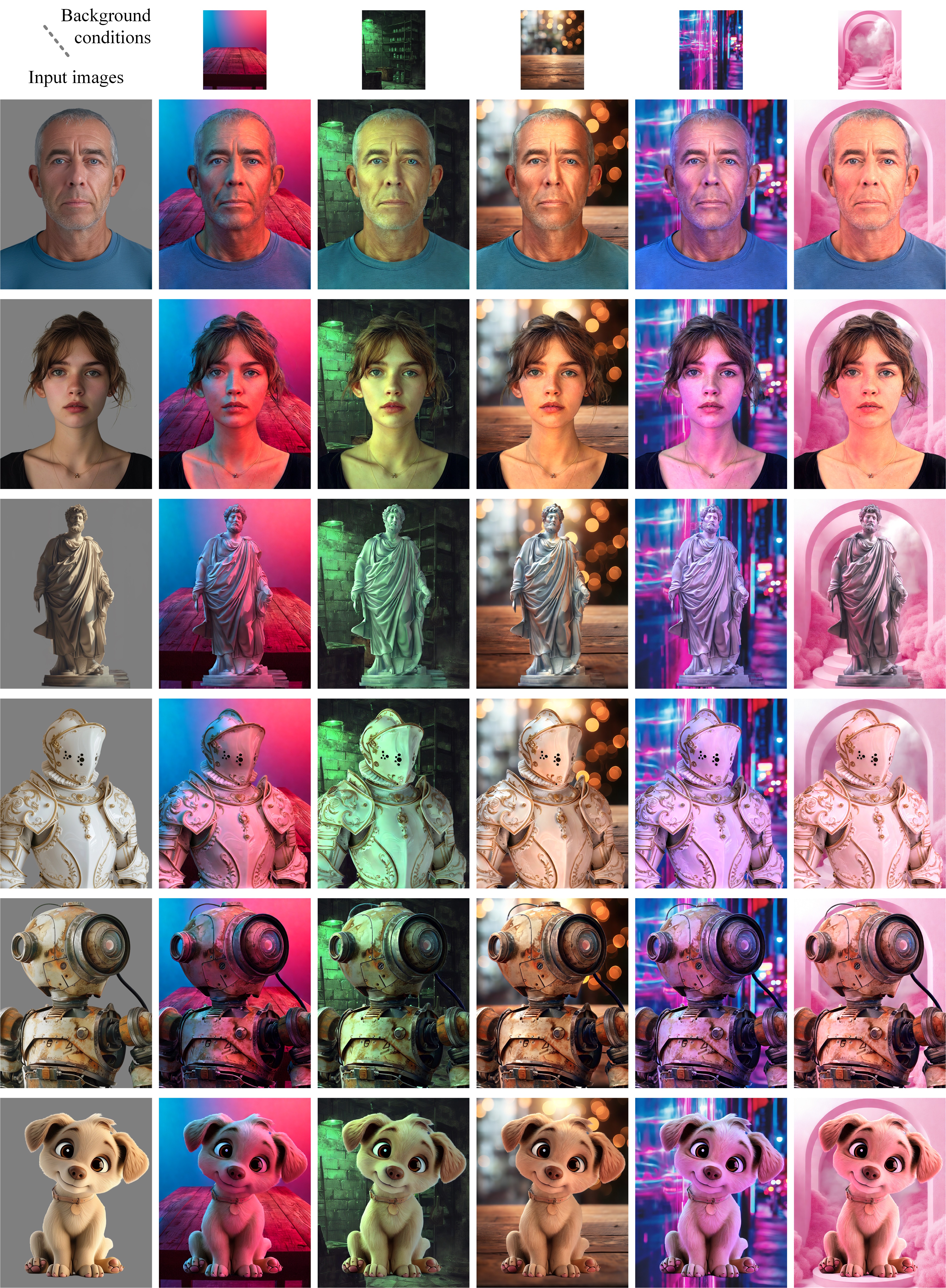

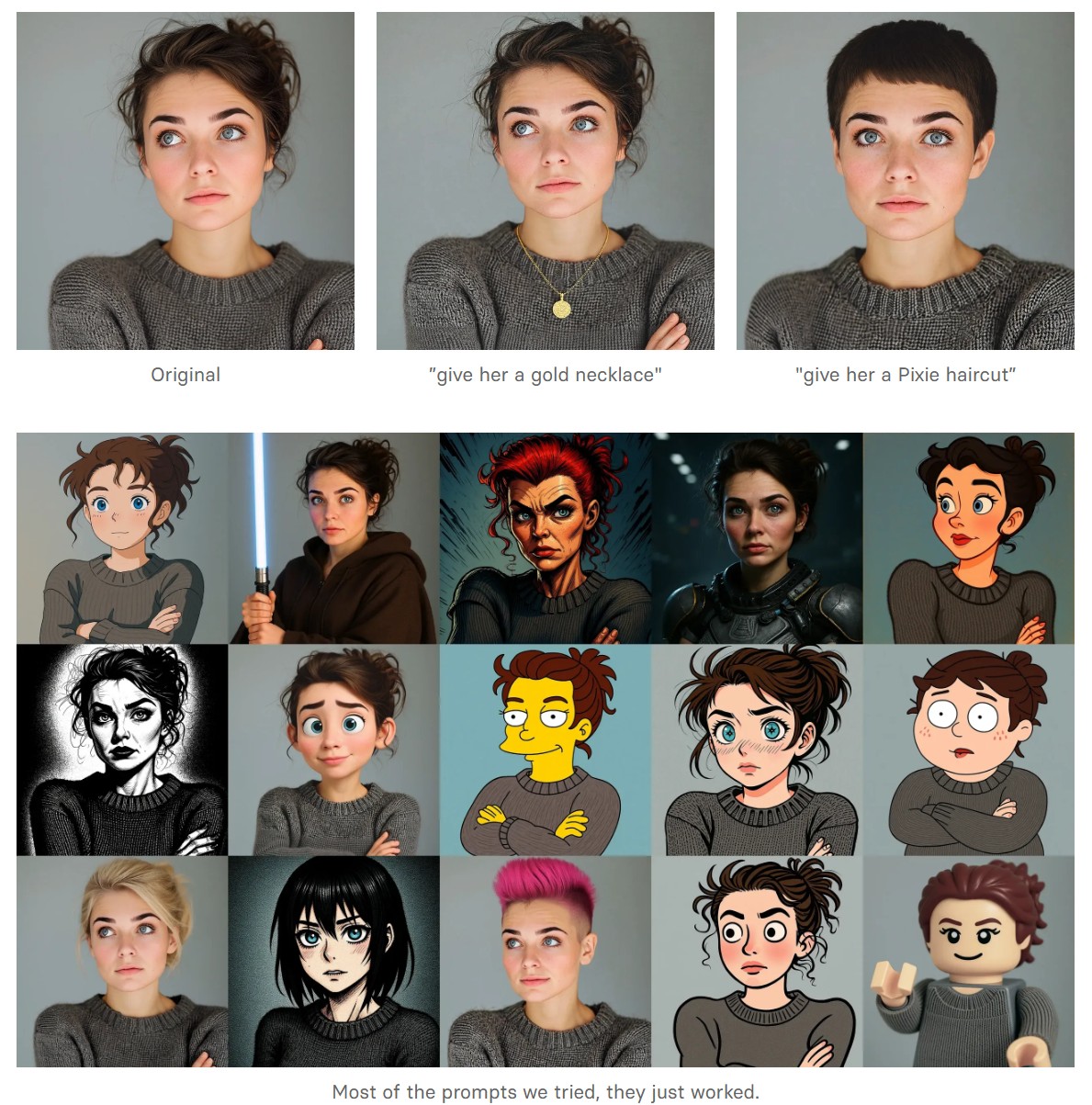

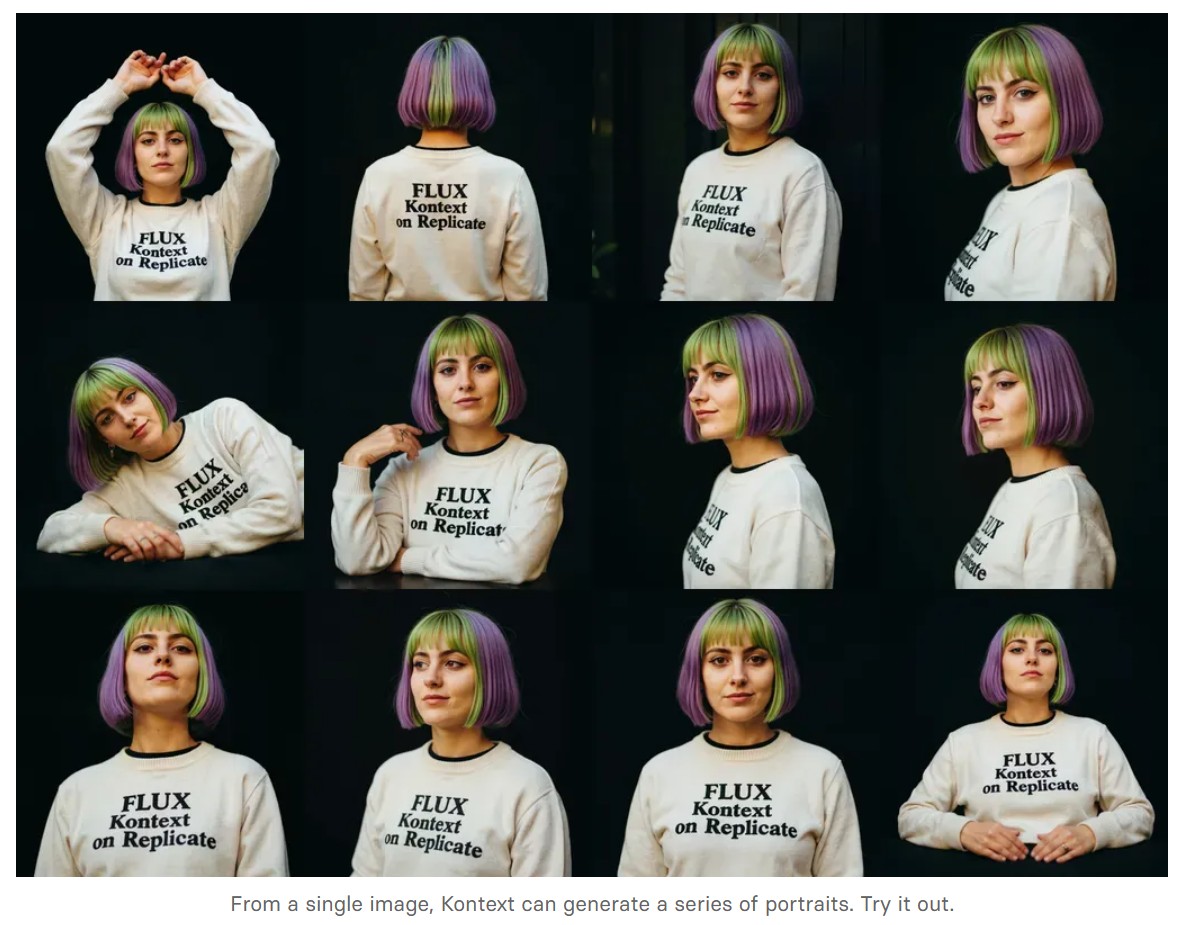

Black Forest Labs released FLUX.1 Kontext

https://replicate.com/blog/flux-kontext

https://replicate.com/black-forest-labs/flux-kontext-pro

There are three models. Two are available now, and a third, open-weight version is coming soon:

- FLUX.1 Kontext [pro]: State-of-the-art performance for image editing. High-quality outputs, great prompt following, and consistent results.

- FLUX.1 Kontext [max]: A premium model that brings maximum performance, improved prompt adherence, and high-quality typography generation without compromise on speed.

- Coming soon: FLUX.1 Kontext [dev]: An open-weight, guidance-distilled version of Kontext.

We’re so excited about what Kontext can do that we’ve created a collection of models on Replicate to give you ideas:

- Multi-image Kontext: Combine two images into one.

- Portrait series: Generate a series of portraits from a single image.

- Change haircut: Change a person’s hairstyle and hair color.

- Iconic locations: Put yourself in front of famous landmarks.

- Professional headshot: Generate a professional headshot from any image.

-

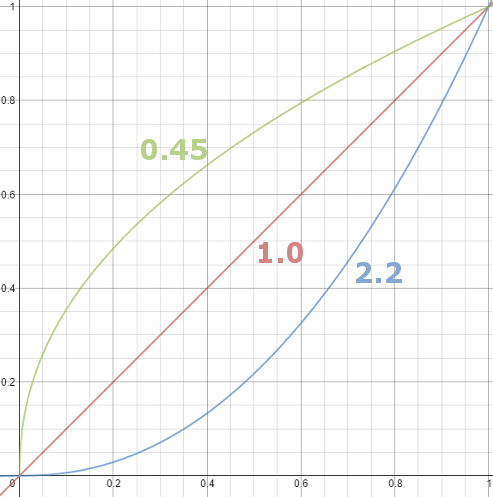

Gamma correction

http://www.normankoren.com/makingfineprints1A.html#Gammabox

https://en.wikipedia.org/wiki/Gamma_correction

http://www.photoscientia.co.uk/Gamma.htm

https://www.w3.org/Graphics/Color/sRGB.html

http://www.eizoglobal.com/library/basics/lcd_display_gamma/index.html

https://forum.reallusion.com/PrintTopic308094.aspx

Basically, gamma is the relationship between the brightness of a pixel as it appears on the screen and the numerical value of that pixel. More generally, gamma is just a way of defining such relationships.

Three main types:

– Image gamma: encoded in the image itself.

– Display gamma: built into the display hardware and/or applied at viewing time.

– System (or viewing) gamma: the net effect of all the gammas in the chain when you look at the final image. In theory, this should flatten back to a gamma of 1.0.
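The three gammas above compose by multiplying their exponents, since (L^a)^b = L^(a·b). This is a minimal sketch under a simplifying assumption. It uses pure power laws with a 1/2.2 image gamma and a 2.2 display gamma, ignoring the linear toe that real sRGB adds near black. The function names are illustrative.

```python
def encode(linear: float, image_gamma: float = 1 / 2.2) -> float:
    """Image gamma: linear scene light 0..1 -> stored pixel value 0..1."""
    return linear ** image_gamma


def display(value: float, display_gamma: float = 2.2) -> float:
    """Display gamma: stored pixel value 0..1 -> emitted screen brightness 0..1."""
    return value ** display_gamma


def system_gamma(image_gamma: float, display_gamma: float) -> float:
    """System gamma: the net end-to-end exponent of encode followed by display."""
    return image_gamma * display_gamma


if __name__ == "__main__":
    # An 8-bit pixel value of 128 on a 2.2 display emits only about 22% of peak brightness.
    print(f"{display(128 / 255):.3f}")
    # Matched encode and display: system gamma 1.0, so linear light round-trips.
    print(f"{system_gamma(1 / 2.2, 2.2):.3f}", f"{display(encode(0.18)):.3f}")
    # Mismatch: encoded for a 2.2 display, viewed on a 2.4 display.
    print(f"{system_gamma(1 / 2.2, 2.4):.3f}")
```

The mismatched case gives a system gamma above 1.0, which shows up as slightly darker, more contrasty midtones. This is the practical reason for checking which display gamma an image was prepared for.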
