LATEST POSTS

-

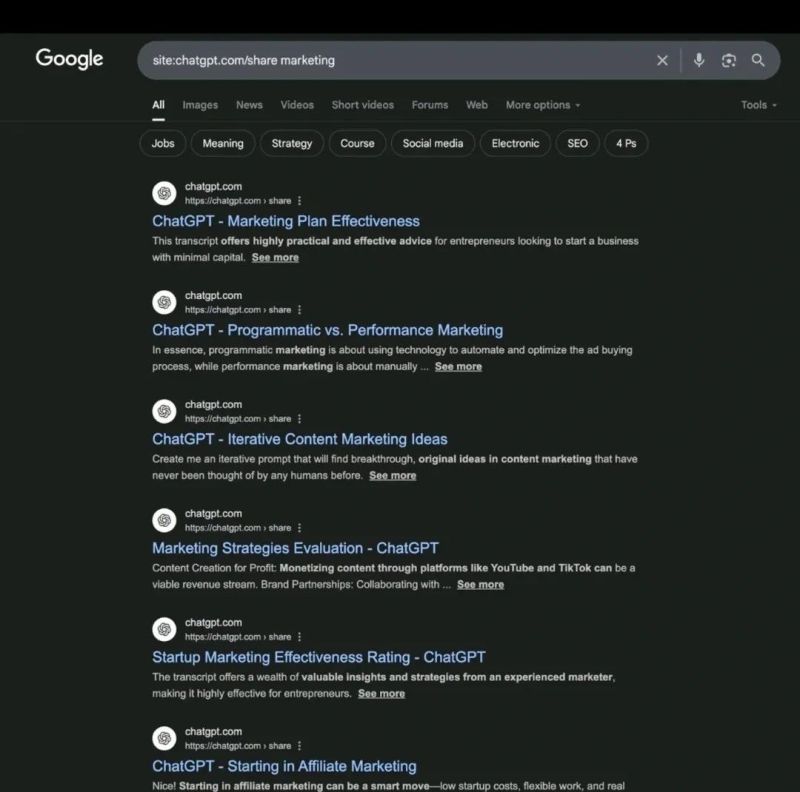

AI and the Law – Your (privately) shared ChatGPT chats might be showing up on Google

Many users assume shared conversations are only seen by friends or colleagues, but when you use OpenAI’s share feature, those chats are now being indexed by search engines like Google.

That means your “private” AI prompts could end up very public. Finding them with advanced search operators is known as Google dorking, and it’s shockingly effective.

Over 70,000 chats are now publicly viewable. Some are harmless.

Others? They might expose sensitive strategies, internal docs, product plans, even company secrets.

OpenAI currently does not block indexing. So if you’ve ever shared something thinking it’s “just a link”, it might now be searchable by anyone. You could even build a bot to crawl and analyze these.

Welcome to the new visibility layer of AI. I can’t say I am surprised…

-

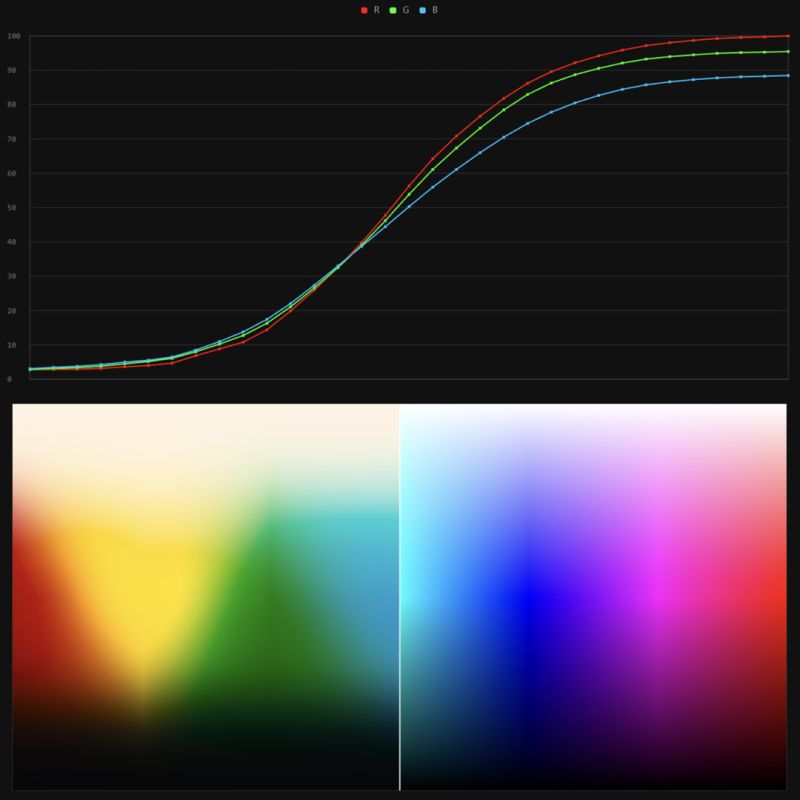

Stefan Ringelschwandtner – LUT Inspector tool

It lets you load any .cube LUT right in your browser, see the RGB curves, and use a split view on the Granger Test Image to compare the original vs. LUT-applied version in real time — perfect for spotting hue shifts, saturation changes, and contrast tweaks.

https://mononodes.com/lut-inspector/
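For readers curious what a tool like this does under the hood, here is a minimal Python sketch of reading a 3D `.cube` LUT and applying it to a single RGB value with trilinear interpolation. It assumes the common layout (a `LUT_3D_SIZE N` line followed by N³ rows of three floats, with the red index changing fastest) and the default 0 to 1 input domain; it ignores `DOMAIN_MIN`/`DOMAIN_MAX` values and 1D LUTs. `parse_cube` and `apply_lut` are hypothetical helper names, not part of the LUT Inspector.

```python
def parse_cube(text):
    """Parse a 3D .cube LUT into (size, rows).

    rows is a flat list of (R, G, B) output triples ordered with the red
    index changing fastest, then green, then blue.
    """
    size = None
    rows = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        if parts[0] == "LUT_3D_SIZE":
            size = int(parts[1])
            continue
        if len(parts) != 3:
            continue  # skip keyword lines such as DOMAIN_MIN r g b
        try:
            rows.append(tuple(float(p) for p in parts))
        except ValueError:
            continue  # e.g. a TITLE line whose quoted name splits into 3 tokens
    if size is None or size < 2 or len(rows) != size ** 3:
        raise ValueError("expected LUT_3D_SIZE N (N >= 2) and N^3 data rows")
    return size, rows


def apply_lut(size, rows, rgb):
    """Map an input RGB triple (0..1) through the LUT with trilinear interpolation."""
    n = size - 1

    def entry(ri, gi, bi):
        return rows[ri + gi * size + bi * size * size]  # red changes fastest

    # Scale each channel to lattice coordinates: integer cell index + fraction.
    coords = []
    for c in rgb:
        x = min(max(c, 0.0), 1.0) * n
        i = min(int(x), n - 1)
        coords.append((i, x - i))
    (ri, fr), (gi, fg), (bi, fb) = coords

    # Blend the 8 surrounding lattice points, weighted by distance.
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                w = ((fr if dr else 1 - fr)
                     * (fg if dg else 1 - fg)
                     * (fb if db else 1 - fb))
                corner = entry(ri + dr, gi + dg, bi + db)
                for k in range(3):
                    out[k] += w * corner[k]
    return tuple(out)


# A 2x2x2 identity LUT: every input should come back unchanged.
identity = """TITLE "identity"
LUT_3D_SIZE 2
0 0 0
1 0 0
0 1 0
1 1 0
0 0 1
1 0 1
0 1 1
1 1 1
"""
size, rows = parse_cube(identity)
print(apply_lut(size, rows, (0.25, 0.5, 0.75)))  # approximately (0.25, 0.5, 0.75)
```

An identity LUT is a handy sanity check before loading a real grading LUT: any deviation from the input points to a parsing or ordering mistake.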

-

Python Automation – Beginner to Advanced Guide

WhatsApp Message Automation

Automating Instagram

Telegram Bot Creation

Email Automation with Python

PDF and Document Automation

FEATURED POSTS

-

FramePack – Packing Input Frame Context in Next-Frame Prediction Models for Offline Video Generation With Low Resource Requirements

https://lllyasviel.github.io/frame_pack_gitpage/

- Diffuse thousands of frames at full 30 fps with 13B models using 6GB of laptop GPU memory.

- Fine-tune a 13B video model at batch size 64 on a single 8xA100/H100 node for personal/lab experiments.

- A personal RTX 4090 generates at 2.5 seconds/frame (unoptimized) or 1.5 seconds/frame (with TeaCache).

- No timestep distillation.

- Video diffusion, but feels like image diffusion.

Image-to-5-Seconds (30fps, 150 frames)
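Using the figures above, here is a back-of-the-envelope estimate of how long a full 5-second clip takes on the RTX 4090. It is a sketch that assumes the per-frame speed stays constant across the clip and ignores model loading and other overhead; `generation_time` is a hypothetical helper.

```python
def generation_time(clip_seconds, fps, seconds_per_frame):
    """Estimated wall-clock seconds to generate a clip at a fixed per-frame speed."""
    frames = clip_seconds * fps  # 5 s at 30 fps -> 150 frames
    return frames * seconds_per_frame


for label, spf in [("unoptimized", 2.5), ("TeaCache", 1.5)]:
    total = generation_time(5, 30, spf)
    print(f"{label}: {total:.0f} s (about {total / 60:.2f} min)")
# unoptimized: 375 s (about 6.25 min)
# TeaCache: 225 s (about 3.75 min)
```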

-

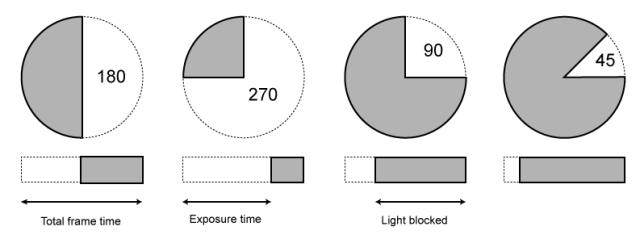

Photography basics: Shutter angle, shutter speed and motion blur

http://www.shutterangle.com/2012/cinematic-look-frame-rate-shutter-speed/

https://www.cinema5d.com/global-vs-rolling-shutter/

https://www.wikihow.com/Choose-a-Camera-Shutter-Speed

https://www.provideocoalition.com/shutter-speed-vs-shutter-angle/

The shutter is the device that controls how long light is allowed through the lens; in other words, it sets how long the film (or sensor) is exposed for each frame.

Shutter speed is how long the shutter stays open, which also determines motion blur: the longer it stays open, the blurrier moving subjects appear in the captured image.

The shutter speed value (for example 1/48) is that exposure time in seconds, which in turn determines how much light actually reaches the film or sensor.

As a reference, shooting at 24 fps with a 180° shutter angle, i.e. a shutter speed of 1/48 s (about 0.0208 s of exposure), produces motion blur similar to what we perceive with the naked eye.

Shutter is described in angles for historical reasons: in film cameras, exposure was controlled by a rotating, pie-shaped mirror shutter between the lens and the film.

A 180° shutter is open for half of each rotation and closed for the other half. A 270° shutter has a one-quarter blade, so it is open for three quarters of the rotation, giving a longer exposure. A 90° shutter has a three-quarter blade, so it is open for only one quarter of the rotation, giving a shorter exposure.

The shutter angle can be converted to and from shutter speed with the following formulas (see https://www.provideocoalition.com/shutter-speed-vs-shutter-angle/), where "shutter speed" is the denominator N of an exposure of 1/N s:

$$\text{shutter angle} = \frac{360 \cdot \text{fps}}{\text{shutter speed}}$$

$$\text{shutter speed} = \frac{360 \cdot \text{fps}}{\text{shutter angle}}$$

For example, here is a chart converting shutter angle to shutter speed at 24 fps:

| Shutter angle | Shutter speed at 24 fps |
|---|---|
| 270° | 1/32 s |
| 180° | 1/48 s |
| 172.8° | 1/50 s |
| 144° | 1/60 s |
| 90° | 1/96 s |
| 72° | 1/120 s |
| 45° | 1/192 s |
| 22.5° | 1/384 s |
| 11.25° | 1/768 s |
| 8.64° | 1/1000 s |

This is the relation between the way a video camera expresses shutter (fractions of a second) and the way a film camera expresses it (in degrees).
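The two conversion formulas translate directly into code. This sketch (function names are hypothetical) treats shutter speed as the denominator N of a 1/N s exposure, as in the chart, and can be used to check chart rows at any frame rate.

```python
def angle_to_speed(angle_deg, fps):
    """Shutter-speed denominator N (exposure of 1/N s) for a given shutter angle."""
    return 360.0 * fps / angle_deg


def speed_to_angle(denominator, fps):
    """Shutter angle in degrees for an exposure of 1/denominator s."""
    return 360.0 * fps / denominator


def exposure_seconds(angle_deg, fps):
    """Exposure time per frame: the open fraction of one frame interval."""
    return (angle_deg / 360.0) / fps


for angle in (270, 180, 144, 90, 45):
    n = angle_to_speed(angle, 24)
    print(f"{angle:>3} deg -> 1/{n:.0f} s, exposure {exposure_seconds(angle, 24):.4f} s")

print(speed_to_angle(50, 24))  # 172.8
```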

Smaller shutter angles show strobing artifacts. With a typical 180° shutter, the camera only sees the scene for half of each frame interval; for the other half the film is obscured by the shutter, so the scene is not captured continuously.

This means that fast-moving objects, and especially objects moving across the frame, will exhibit jerky movement. This is called strobing. The defect is also very noticeable during pans. Smaller shutter angles (shorter exposures) exhibit more pronounced strobing.

Larger shutter angles show more motion blur, because the longer exposure records more of the movement within each frame.
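To make the trade-off concrete, here is a small sketch that computes, for an object moving at constant speed across the frame, how far it travels while the shutter is open (the blur smear within a frame) and while it is closed (the unrecorded jump between frames that reads as strobing). The speed of 960 px/s is an arbitrary example value and `blur_and_gap` is a hypothetical helper.

```python
def blur_and_gap(speed_px_per_s, fps, angle_deg):
    """Return (blur_px, gap_px) for an object moving at constant speed.

    blur_px: distance travelled while the shutter is open (motion blur).
    gap_px:  distance travelled while the shutter is closed (strobing jump).
    """
    frame_time = 1.0 / fps
    exposure = frame_time * angle_deg / 360.0
    blur = speed_px_per_s * exposure
    gap = speed_px_per_s * (frame_time - exposure)
    return blur, gap


# Hypothetical object crossing the frame at 960 px/s, shot at 24 fps.
for angle in (45, 180, 270):
    blur, gap = blur_and_gap(960, 24, angle)
    print(f"{angle:>3} deg: blur {blur:.1f} px, gap {gap:.1f} px")
```

Small angles give a short smear but a large gap (strobing); large angles close the gap at the cost of a longer smear. At the same 180°, doubling the frame rate to 48 fps halves both numbers, since each frame interval is half as long.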

Note that in 3D, where the shutter is set by shutter open and shutter close values (offsets in frames relative to the current frame), you first compute the total shutter interval (shutter close minus shutter open) and then multiply it by 360 to get the equivalent shutter angle, for example:

| Shutter open | Shutter close | Total shutter | Shutter angle |
|---|---|---|---|
| -0.0625 | 0.0625 | 0.0625 + 0.0625 = 0.125 | 360 × 0.125 = 45° |
| -0.125 | 0.125 | 0.125 + 0.125 = 0.25 | 360 × 0.25 = 90° |
| -0.25 | 0.25 | 0.25 + 0.25 = 0.5 | 360 × 0.5 = 180° |
| -0.375 | 0.375 | 0.375 + 0.375 = 0.75 | 360 × 0.75 = 270° |

Higher frame rates can reduce both of these issues, strobing and motion blur.
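The same rule as a sketch, assuming the shutter open and close values are offsets in frames from the current frame, as in the table. Using close minus open, rather than adding the two magnitudes, also covers non-centred intervals such as 0 to 0.5; the function names are hypothetical and not any renderer's API.

```python
def shutter_angle(shutter_open, shutter_close):
    """Equivalent shutter angle (degrees) for an open interval given in frames."""
    total = shutter_close - shutter_open  # length of the open interval, in frames
    return 360.0 * total


def centered_interval(angle_deg):
    """Shutter open/close offsets (frames) centred on the current frame."""
    half = angle_deg / 720.0  # half of (angle / 360)
    return -half, half


print(shutter_angle(-0.0625, 0.0625))  # 45.0
print(shutter_angle(0.0, 0.5))         # 180.0
print(centered_interval(270))          # (-0.375, 0.375)
```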

-

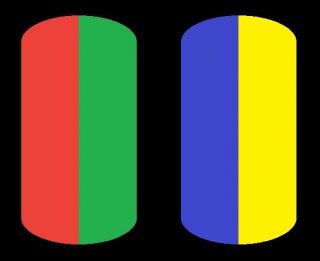

The Forbidden Colors – Red-Green & Blue-Yellow: The Stunning Colors You Can’t See

www.livescience.com/17948-red-green-blue-yellow-stunning-colors.html

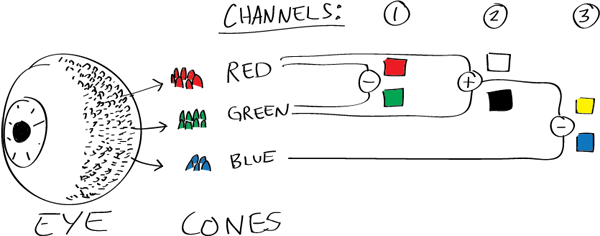

While the human eye has red, green, and blue-sensing cones, those cones are cross-wired in the retina to produce a luminance channel plus a red-green and a blue-yellow channel, and it’s data in that color space (known technically as “LAB”) that goes to the brain. That’s why we can’t perceive a reddish-green or a yellowish-blue, whereas such colors can be represented in the RGB color space used by digital cameras.

https://en.rockcontent.com/blog/the-use-of-yellow-in-data-design

The back of the retina is covered in light-sensitive neurons known as cone cells and rod cells. There are three types of cone cells, each sensitive to different ranges of light. These ranges overlap, but for convenience the cones are referred to as blue (short-wavelength), green (medium-wavelength), and red (long-wavelength). The rod cells are primarily used in low-light situations, so we’ll ignore those for now.

When light enters the eye and hits the cone cells, the cones get excited and send signals through the optic nerve to the brain, where they are processed in the visual cortex. Different wavelengths of light excite different combinations of cones to varying levels, which generates our perception of color. You can see that the red cones are most sensitive to light, and the blue cones are least sensitive. The sensitivity of green and red cones overlaps for most of the visible spectrum.

Here’s how your brain takes the signals of light intensity from the cones and turns them into color information. To see red or green, your brain finds the difference between the levels of excitement in your red and green cones. This is the red-green channel.

To get “brightness,” your brain combines the excitement of your red and green cones. This creates the luminance, or black-white, channel. To see yellow or blue, your brain then finds the difference between this luminance signal and the excitement of your blue cones. This is the yellow-blue channel.
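As a toy illustration of the three channels described above, here is a sketch that turns cone excitations into luminance, red-green, and yellow-blue signals. It is not a physiological model (real opponent pathways use unequal, nonlinear weights); averaging the red and green cones for luminance is an assumption made so that the yellow-blue difference stays on the same 0 to 1 scale.

```python
def opponent_channels(l, m, s):
    """Toy opponent-process model.

    l, m, s: excitation (0..1) of the long ("red"), medium ("green")
    and short ("blue") wavelength cones.
    """
    luminance = (l + m) / 2        # red and green cones combined -> brightness
    red_green = l - m              # > 0 reddish, < 0 greenish
    yellow_blue = luminance - s    # > 0 yellowish, < 0 bluish
    return luminance, red_green, yellow_blue


print(opponent_channels(0.9, 0.9, 0.05))  # strong red + green cones: yellowish
print(opponent_channels(0.1, 0.1, 0.9))   # strong blue cones: bluish
```

Because red-green is a single signed number, it can signal "reddish" or "greenish" but never both at once, which is the opponent-process explanation for why a reddish green is not perceived.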

From the calculations made in the brain along those three channels, we get four basic colors: blue, green, yellow, and red. Seeing blue is what you experience when short-wavelength light excites the blue cones more than the green and red ones.

Seeing green happens when light excites the green cones more than the red cones. Seeing red happens when only the red cones are excited, by long-wavelength light.

Here’s where it gets interesting. Seeing yellow is what happens when BOTH the green AND red cones are highly excited near their peak sensitivity. This is the biggest collective excitement that your cones ever have, aside from seeing pure white.

Notice that yellow occurs at peak intensity in the graph to the right. Further, the lens and cornea of the eye happen to block shorter wavelengths, reducing sensitivity to blue and violet light.