About green screens

hackaday.com/2015/02/07/how-green-screen-worked-before-computers/

www.newtek.com/blog/tips/best-green-screen-materials/

www.chromawall.com/blog//chroma-key-green

Chroma Key Green, the color of green screens, is also known as Chroma Green and corresponds approximately to 354 C in the Pantone Matching System (PMS).

Chroma Green can be expressed in several color systems. Here are the equivalent values, useful for both physical and digital production:

Green Screen as RGB Color Value: 0, 177, 64

Green Screen as CMYK Color Value: 81, 0, 92, 0

Green Screen as Hex Color Value: #00b140

Green Screen as Websafe Color Value: #009933
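As a quick sanity check, the hex and websafe values above can be derived from the RGB triple. The sketch below is illustrative only: the function names are made up for this note, and websafe is assumed to mean snapping each channel to the nearest multiple of 51 (hex 33). The CMYK figures depend on a print profile and are not reproduced by this simple arithmetic.

```python
def rgb_to_hex(r, g, b):
    """Format an 8-bit RGB triple as a lowercase hex color string."""
    return "#{:02x}{:02x}{:02x}".format(r, g, b)


def nearest_websafe(r, g, b):
    """Snap each channel to the nearest websafe level (0, 51, 102, ..., 255)."""
    return tuple(round(v / 51) * 51 for v in (r, g, b))


chroma_green = (0, 177, 64)
print(rgb_to_hex(*chroma_green))                    # hex value of Chroma Green
print(rgb_to_hex(*nearest_websafe(*chroma_green)))  # closest websafe color
```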

Chroma Key Green is reasonably close to an 18% gray reflectance.

Illuminate your green screen with a uniform source, keeping the variation across the screen below 2/3 EV.
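One way to check the 2/3 EV rule is to take several luminance readings across the screen and compare the brightest with the darkest, since one EV is a factor of two in light. This is a minimal sketch; the function names and the sample readings are hypothetical, and any consistent linear unit (for example cd/m²) works for the readings.

```python
import math


def ev_spread(luminances):
    """Return the difference in EV (stops) between the brightest and darkest reading."""
    lo, hi = min(luminances), max(luminances)
    if lo <= 0:
        raise ValueError("luminance readings must be positive")
    return math.log2(hi / lo)


def is_evenly_lit(luminances, max_spread_ev=2 / 3):
    """True if the readings stay within the allowed EV variation."""
    return ev_spread(luminances) <= max_spread_ev


readings = [100.0, 120.0, 150.0]  # hypothetical spot-meter readings
print(ev_spread(readings), is_evenly_lit(readings))
```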

The level of brightness at any given f-stop should be equivalent to a 90% white card under the same lighting.

Human World Population Through Time

200,000 years to reach 1 billion.

200 years to reach 7 billion.

The global population has nearly tripled since 1950, from 2.6 billion people to 7.6 billion.

https://edition.cnn.com/2018/11/08/health/global-burden-disease-fertility-study/index.html?no-st=1542440193

Equirectangular 360 videos/photos to Unity3D to VR

SUMMARY

- A lot of 360 technology is natively supported in Unity3D. Examples here: https://assetstore.unity.com/packages/essentials/tutorial-projects/vr-samples-51519

- Use the Google Cardboard VR API to export for Android or iOS. https://developers.google.com/vr/?hl=en https://developers.google.com/vr/develop/unity/get-started-ios

- Images and videos are for the most part equirectangular 2:1 360 captures, mapped onto a skybox (stills) or an inverted sphere (videos). Panoramas are also supported.

- Stereo is achieved in different formats, but mostly with a 2:1 over-under layout.

- Videos can be streamed from a server.

- You can export 360 mono/stereo stills/videos from Unity3D with VR Panorama.

- 4K is probably the best all-round resolution for mobile devices.

- Interaction can be driven through the Google API gaze scripts/plugins or through Google Cloud Speech Recognition (paid service, https://assetstore.unity.com/packages/add-ons/machinelearning/google-cloud-speech-recognition-vr-ar-desktop-desktop-72625 )

DETAILS

- Google VR game to iOS in 15 minutes

- Step by Step Google VR and responding to events with Unity3D 2017.x

https://boostlog.io/@mohammedalsayedomar/create-cardboard-apps-in-unity-5ac8f81e47018500491f38c8

https://www.sitepoint.com/building-a-google-cardboard-vr-app-in-unity/

- Gaze interaction examples

https://assetstore.unity.com/packages/tools/gui/gaze-ui-for-canvas-70881

https://s3.amazonaws.com/xrcommunity/tutorials/vrgazecontrol/VRGazeControl.unitypackage

https://assetstore.unity.com/packages/tools/gui/cardboard-vr-touchless-menu-trigger-58897

- Basic details about equirectangular 2:1 360 images and videos.

- Skybox cubemap texturing, shading and camera component for stills.

- Video player component on a sphere with a flipped-normals shader.

- Note that you can also use a pre-modeled sphere with inverted normals.

- Note that for audio you will need an audio component on the sphere model.

- Set up full 360 stereoscopic video playback using an over-under layout split onto two cameras.

- Note that you cannot generate a stereoscopic image from two 360 captures; it has to be done through a dedicated consumer rig.

http://bernieroehl.com/360stereoinunity/
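The equirectangular mapping behind the notes above can be sketched in a few lines: longitude covers 360 degrees and latitude 180 degrees, which is where the 2:1 aspect ratio comes from. This is an illustration in Python rather than Unity shader code, and the conventions are assumptions: y is up, +z is straight ahead, v = 0 is the bottom of the frame, and the left eye is taken to be the top half of an over-under frame (check your footage, as layouts vary).

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a view direction (y up, +z forward) to equirectangular UV in [0, 1]."""
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    longitude = math.atan2(x, z)                          # -pi..pi, 0 straight ahead
    latitude = math.asin(max(-1.0, min(1.0, y / length)))  # -pi/2..pi/2, 0 at horizon
    u = longitude / (2 * math.pi) + 0.5
    v = latitude / math.pi + 0.5
    return u, v


def over_under_uv(u, v, eye):
    """Remap mono UV into one half of an over-under stereo frame.

    Assumption: the left eye is stored in the top half, the right eye in the bottom.
    """
    if eye == "left":
        return u, 0.5 + 0.5 * v
    if eye == "right":
        return u, 0.5 * v
    raise ValueError("eye must be 'left' or 'right'")


u, v = direction_to_equirect_uv(0.0, 0.0, 1.0)  # looking straight ahead
print(over_under_uv(u, v, "left"), over_under_uv(u, v, "right"))
```

In a two-camera setup, each eye's camera would sample only its own half of the video texture, which is what the remapping above expresses.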

VR Actions for Playmaker

https://assetstore.unity.com/packages/tools/vr-actions-for-playmaker-52109

100 Best Unity3d VR Assets

http://meta-guide.com/embodiment/100-best-unity3d-vr-assets

…find more tutorials/reference under this blog page


Guy Ritchie – You Must Be The Master of Your Own Suit and Your Own Kingdom

The world is trying to tell you who you are.

You yourself are trying to tell you who you are.

At some point there has to be some reconciliation.

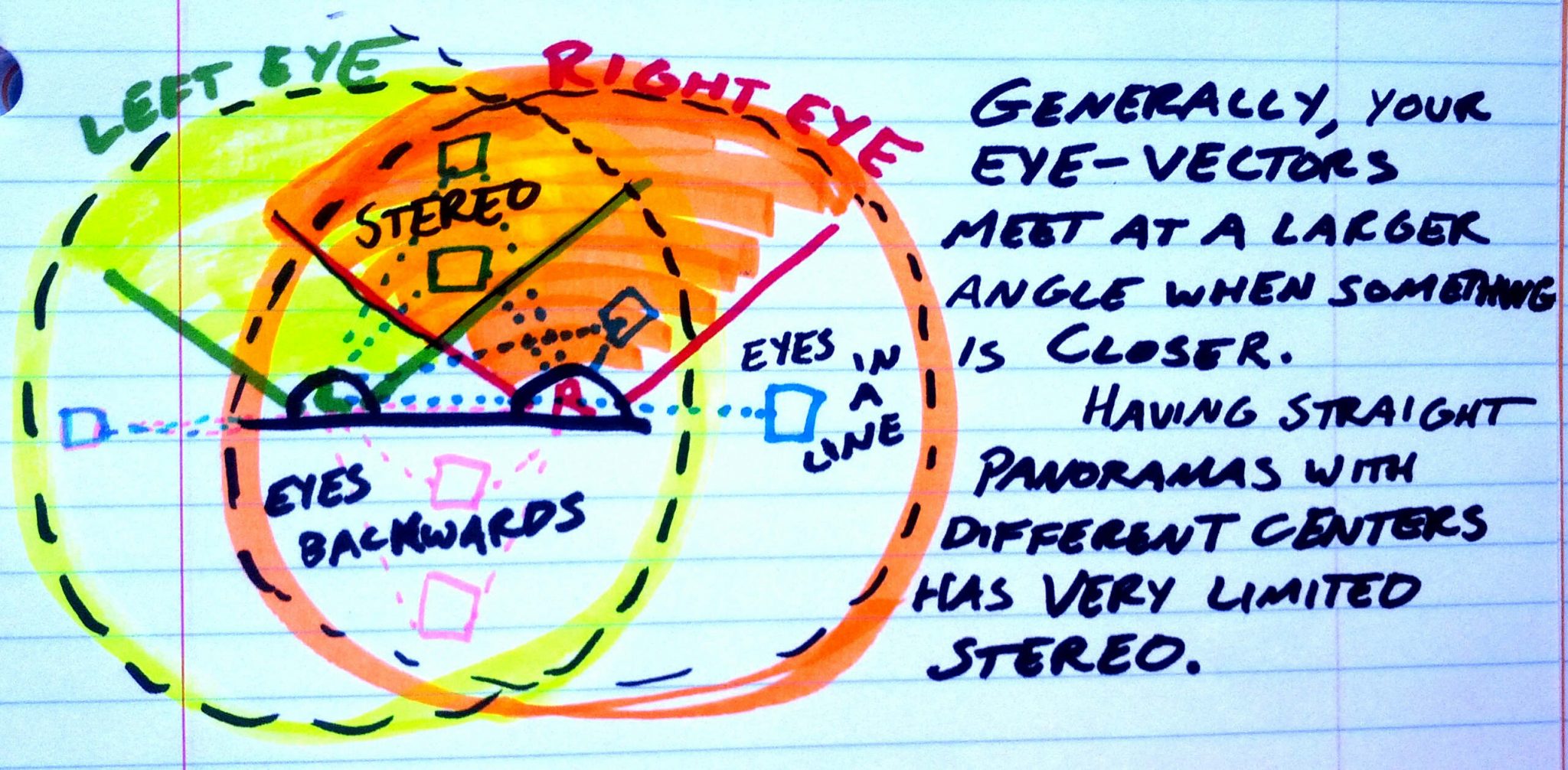

Why you cannot generate a stereoscopic image from just two 360 captures

elevr.com/elevrant-panoramic-twist/

Today we discuss panoramic 3d video capture and how understanding its geometry leads to some new potential focus techniques.

With ordinary 2-camera stereoscopy, like you see at a 3d movie, each camera captures its own partial panorama of video, so the two partial circles of video are part of two side-by-side panoramas, each centering on a different point (where the cameras are).

This is great if you want to stare straight ahead from a fixed position. The eyes can measure the depth of any object in the middle of this Venn diagram of overlap. I think of the line of sight as being vectors shooting out of your eyeballs, and when those vectors hit an object from different angles, you get 3d information. When something’s closer, the vectors hit at a wider angle, and when an object is really far away, the vectors approach being parallel.

But even if both these cameras captured spherically, you’d have problems once you turn your head. Your ability to measure depth lessens and lessens, with generally smaller vector angles, until when you’re staring directly to the right they overlap entirely, zero angle no matter how close or far something is. And when you turn to face behind you, the panoramas are backwards, in a way that makes it impossible to focus your eyes on anything.

So a setup with two separate 360 panoramas captured an eye-width apart is no good for actual panoramas.
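The geometry described above can be checked numerically. The sketch below places two fixed cameras an eye-width apart and computes the signed angle between their lines of sight to a point at a given distance and viewing direction. The function name, the 64 mm separation and the sign convention (positive for normal stereo, negative for swapped eyes) are assumptions made for illustration.

```python
import math


def parallax_deg(eye_separation, distance, yaw_deg):
    """Signed angle (degrees) between the two cameras' rays to a target point.

    Cameras sit at (-sep/2, 0) and (+sep/2, 0); yaw 0 looks straight ahead (+z),
    yaw 90 looks along the line joining the cameras, yaw 180 looks behind.
    """
    yaw = math.radians(yaw_deg)
    px, pz = distance * math.sin(yaw), distance * math.cos(yaw)
    half = eye_separation / 2
    lx, lz = px + half, pz  # ray from the left camera to the point
    rx, rz = px - half, pz  # ray from the right camera to the point
    cross = lx * rz - lz * rx
    dot = lx * rx + lz * rz
    return math.degrees(math.atan2(cross, dot))


for yaw in (0, 45, 90, 135, 180):
    print(yaw, parallax_deg(0.064, 2.0, yaw))
```

The angle shrinks as the view turns toward 90 degrees, vanishes there regardless of distance, and changes sign behind the viewer, matching the description of depth cues fading and then reversing.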

But you can stitch together a panorama using pairs of cameras an eye-width apart, where the center of the panorama is not on any one camera but at the center of a ball of cameras. Depending on the field of view that gets captured and how it’s stitched together, a four-cameras-per-eye setup might produce something with more or less twist, and more or less twist-reduction between cameras. Ideally, you’d have a many camera setup that lets you get a fully symmetric twist around each panorama. Or, for a circle of lots of cameras facing directly outward, you could crop the footage for each camera: stitch together the right parts of each camera’s capture for the left eye, and the left parts of each camera’s capture for the right eye.

Google Cardboard camera apps to capture 360 images

Cardboard Camera

itunes.apple.com/nz/app/cardboard-camera/id1095487294?mt=8

360 Panorama

itunes.apple.com/nz/app/360-panorama/id377342622?mt=8

Panorama 360 Camera

itunes.apple.com/nz/app/panorama-360-camera/id399394507?mt=8

Acting Upward

A growing community of collaborators dedicated to helping actors, artists & filmmakers gain experience & improve their craft.

Photogrammetry from 360 cameras

Agisoft PhotoScan is one of the most common tools used, but you will need the professional version to work with panos.

These do not support 360 cameras:

– Autodesk Recap

– Reality Capture

– MeshLab

medium.com/@smitty/spherical-and-panoramic-photogrammetry-resources-2edbaeac13ac

www.nctechimaging.com//downloads-files/PhotoScan_Application_Note_v1.1.pdf

360rumors.com/2017/11/software-institut-pascal-converts-360-video-3d-model-vr.html

WalkAboutWorlds

https://sketchfab.com/models/9bc44ba457104b57943c29a79e4103bd

walkaboutworlds.com/Walkabout/beta.html

CloudCompare – point cloud editor for photogrammetry

CloudCompare is a 3D point cloud (and triangular mesh) processing software. It was originally designed to compare two dense 3D point clouds (such as those acquired with a laser scanner) or a point cloud and a triangular mesh. It relies on a specific octree structure dedicated to this task.

It was later extended into a more generic point cloud processing tool, including many advanced algorithms (registration, resampling, color/normal/scalar fields handling, statistics computation, sensor management, interactive or automatic segmentation, display enhancement, etc.).
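To give a feel for what comparing two point clouds means, here is a deliberately naive sketch: for every point in one cloud, find the distance to the nearest point in the other. This is not CloudCompare's implementation; it is a brute-force loop with a made-up function name, whereas CloudCompare uses its octree structure to make the same kind of query fast on dense clouds. It requires Python 3.8 or later for math.dist.

```python
import math


def cloud_to_cloud_distances(cloud_a, cloud_b):
    """For each point in cloud_a, return the distance to its nearest point in cloud_b."""
    if not cloud_b:
        raise ValueError("reference cloud must not be empty")
    return [min(math.dist(p, q) for q in cloud_b) for p in cloud_a]


scan = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]       # hypothetical compared cloud
reference = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]  # hypothetical reference cloud
print(cloud_to_cloud_distances(scan, reference))
```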

Photogrammetry using Autodesk ReCap Pro

https://www.autodesk.com/products/recap/overview?plc=RECAP

see more tutorials under the page

www.autodesk.com/products/recap/overview

Spatial Media Metadata Injector – for 360 videos

The Spatial Media Metadata Injector adds metadata to a video file indicating that the file contains 360 video. Use the metadata injector to prepare 360 videos for upload to YouTube.

github.com/google/spatial-media/releases/tag/v2.1

The Windows release requires a 64-bit version of Windows. If you’re using a 32-bit version of Windows, you can still run the metadata injector from the Python source code as follows:

- Install Python.

- Download and extract the metadata injector source code.

- From the “spatialmedia” directory in Windows Explorer, double-click “gui”. Alternatively, from the command prompt, change to the “spatialmedia” directory and run “python gui.py”.

360.Video.Metadata.Tool.mac.zip

360.Video.Metadata.Tool.win.zip